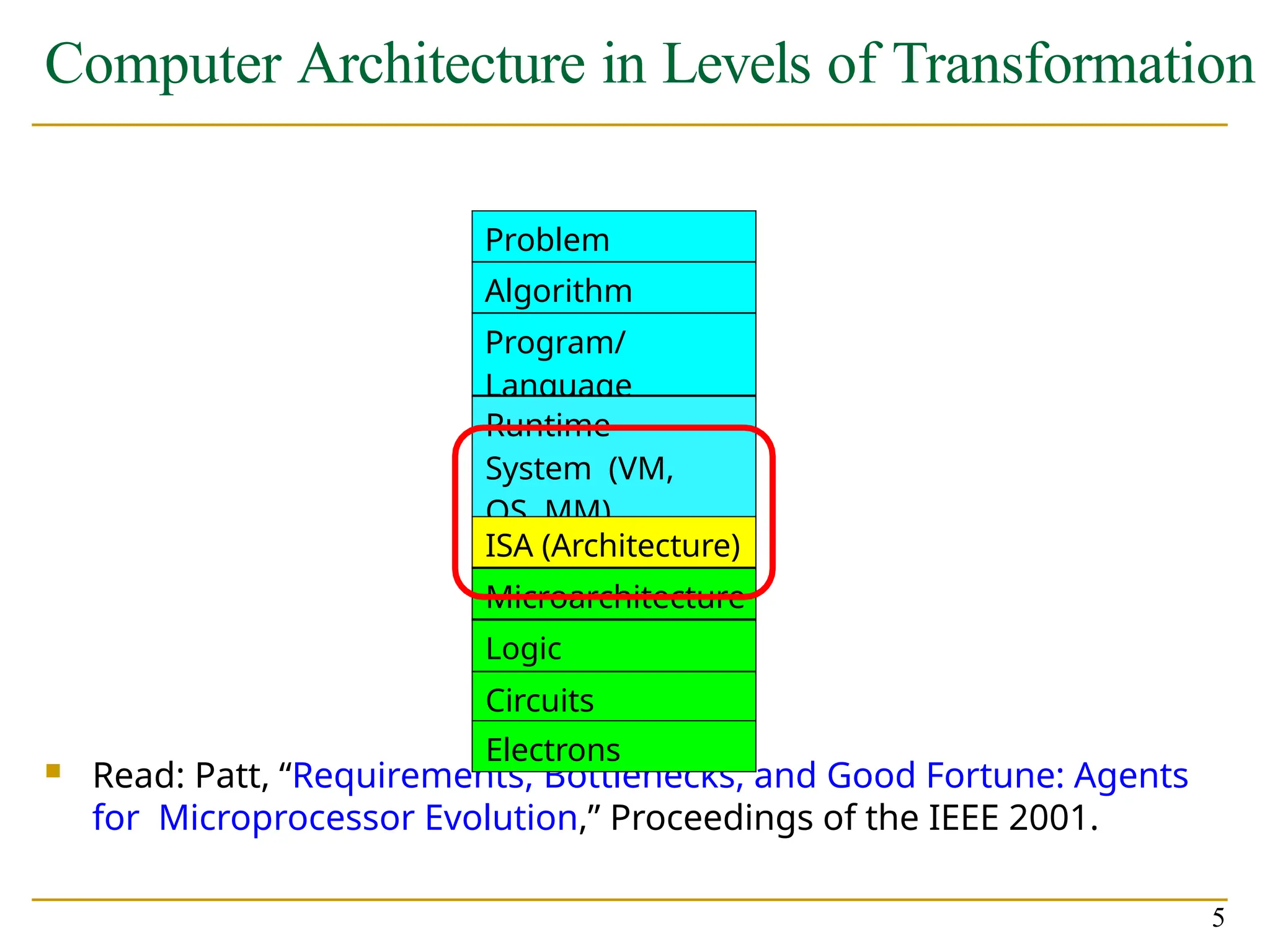

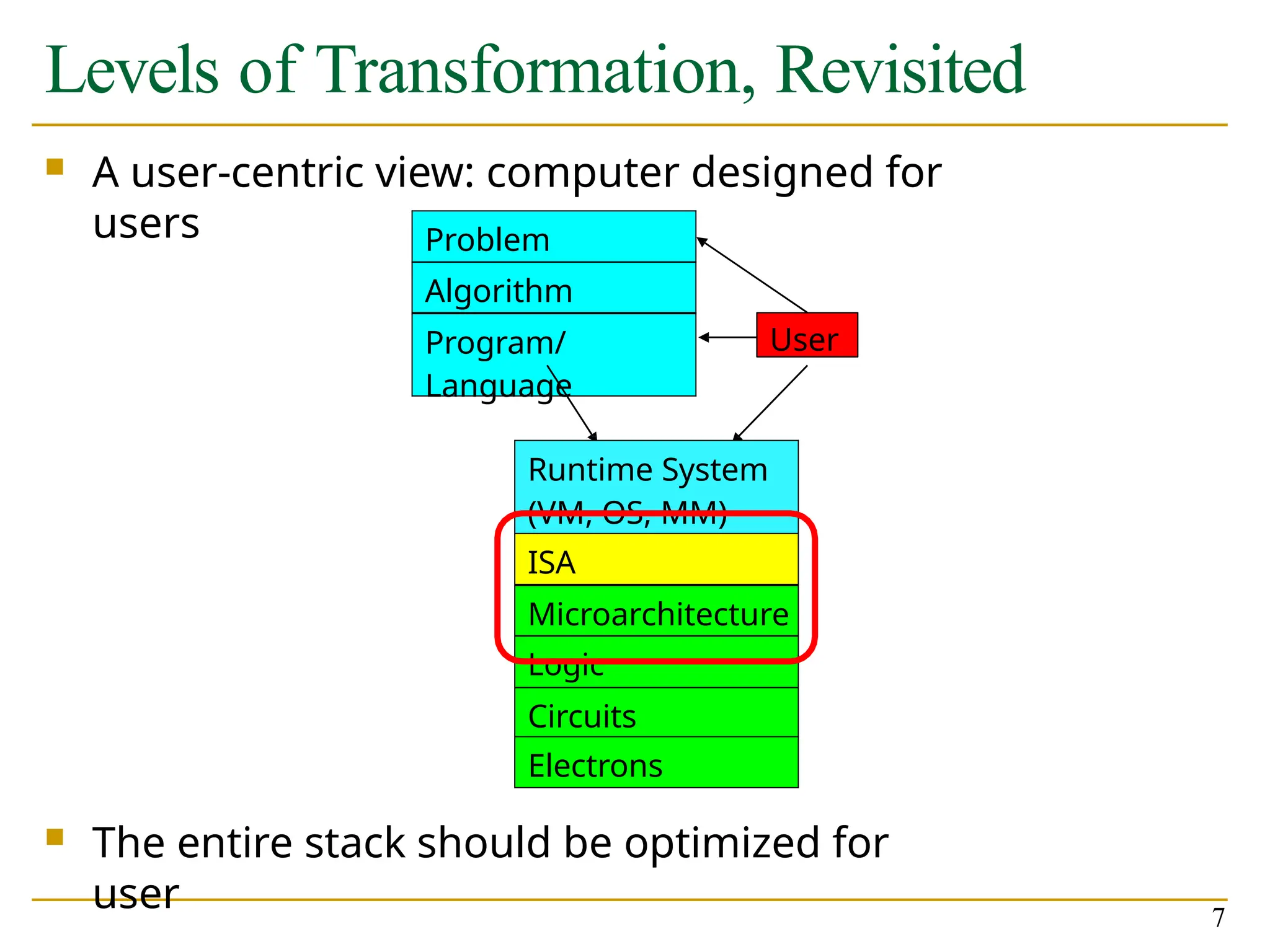

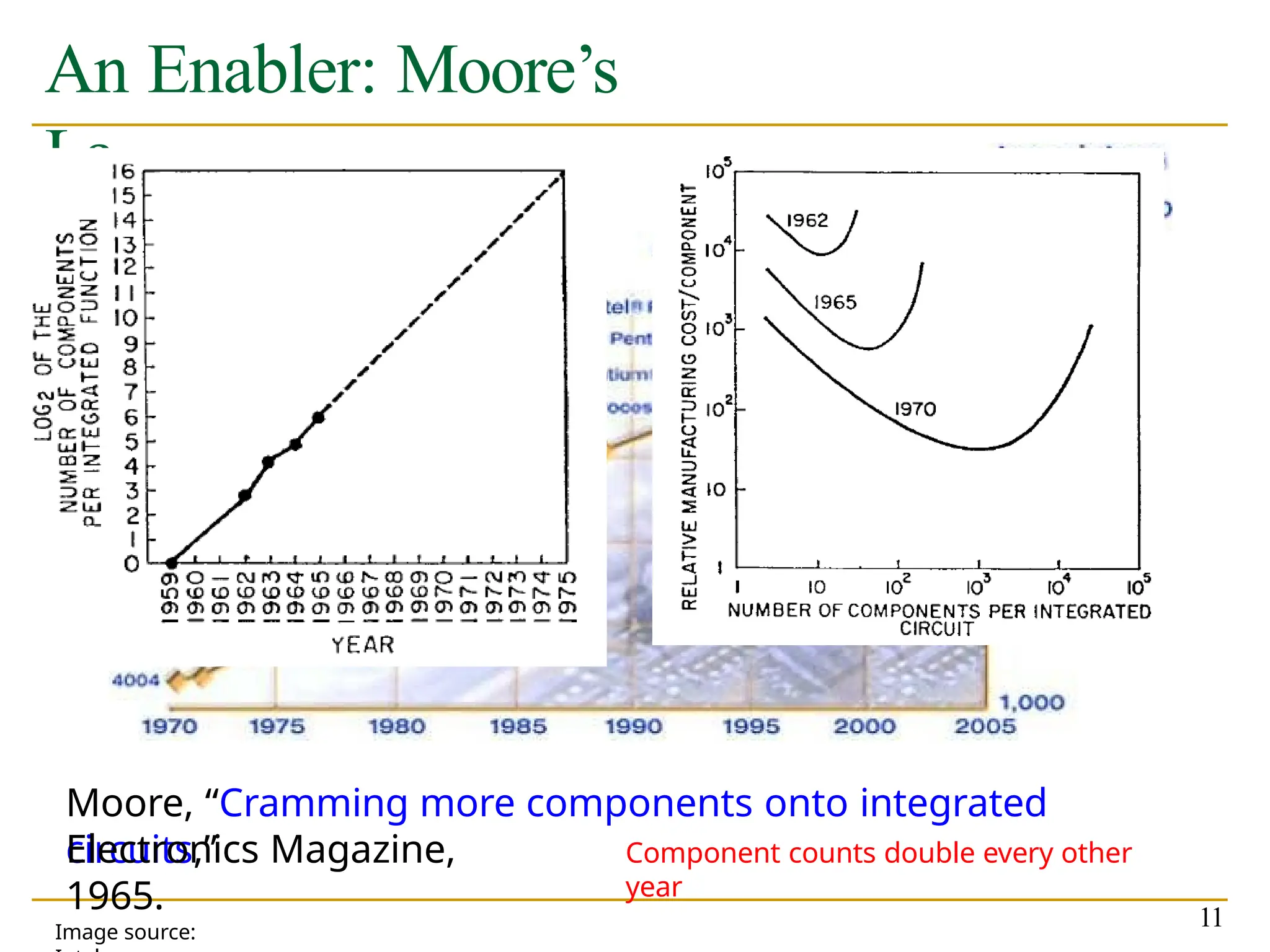

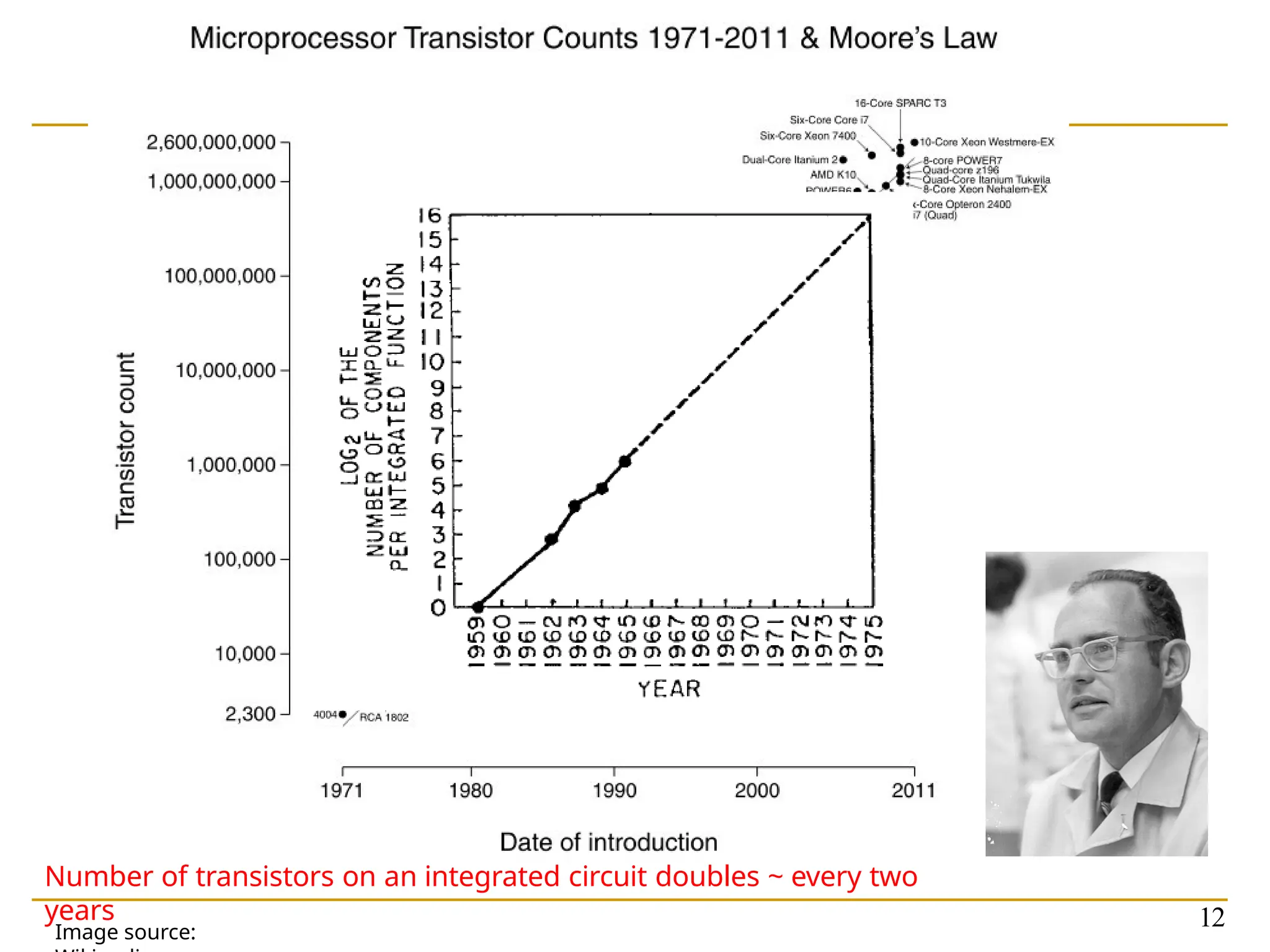

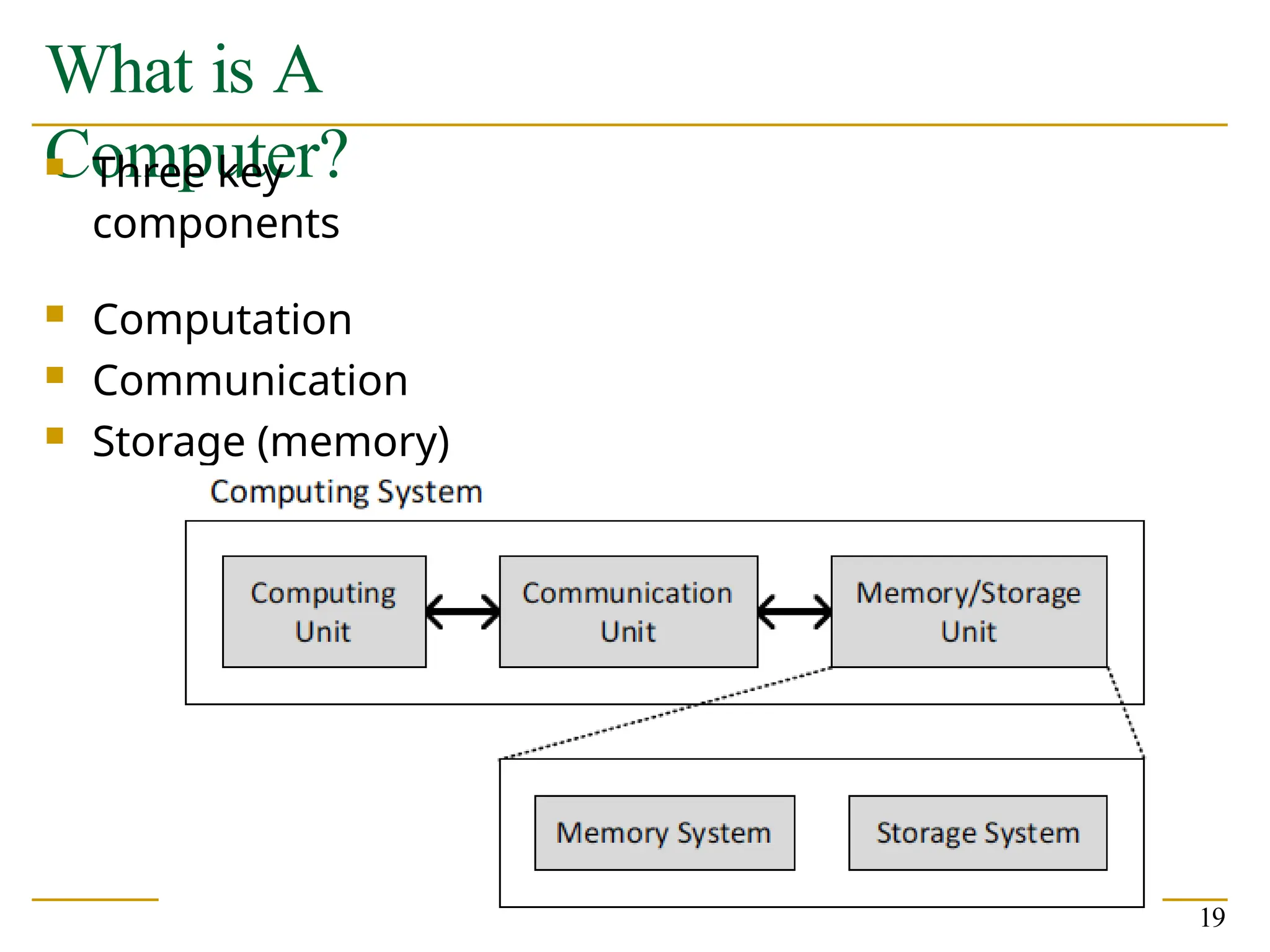

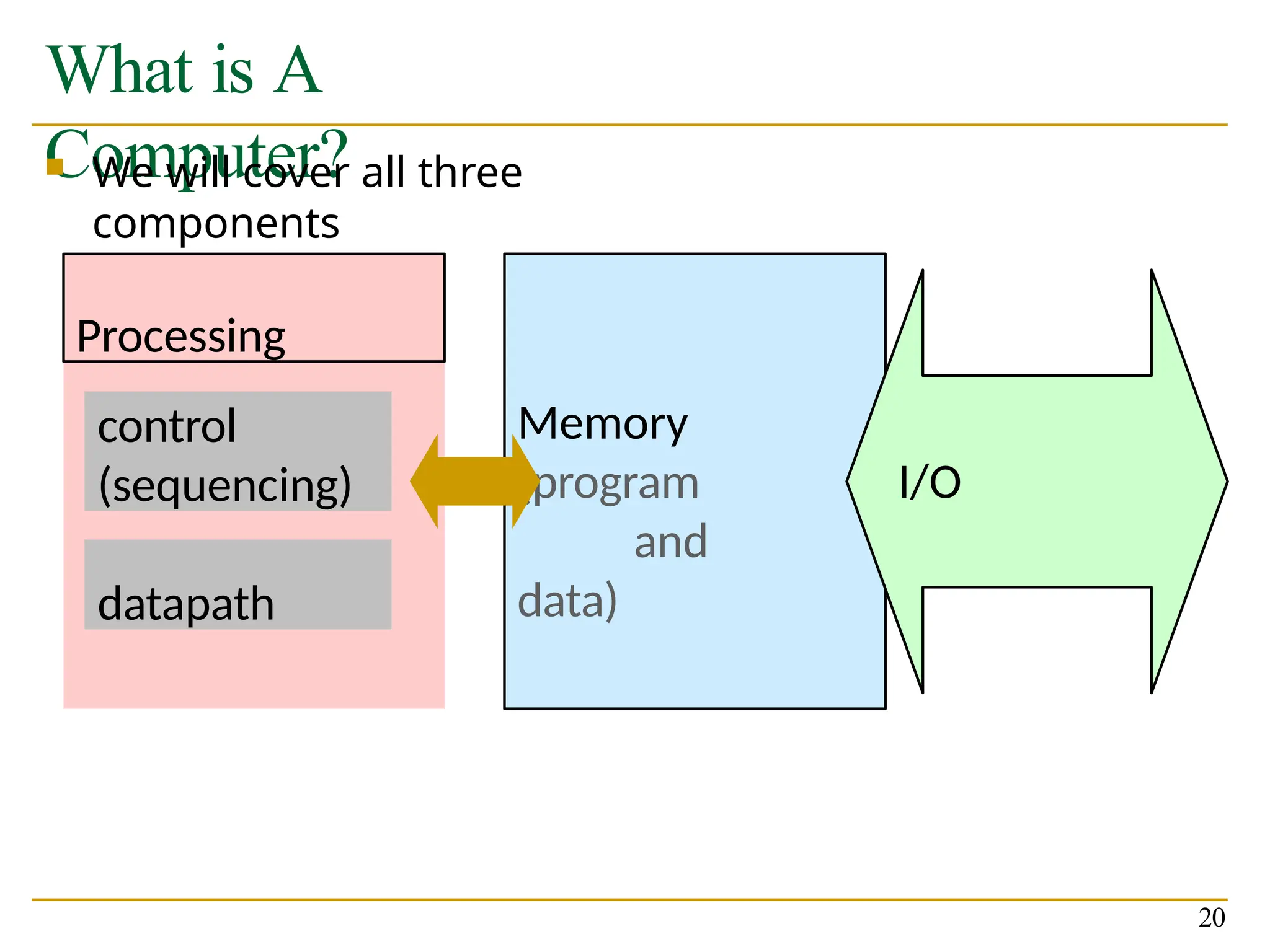

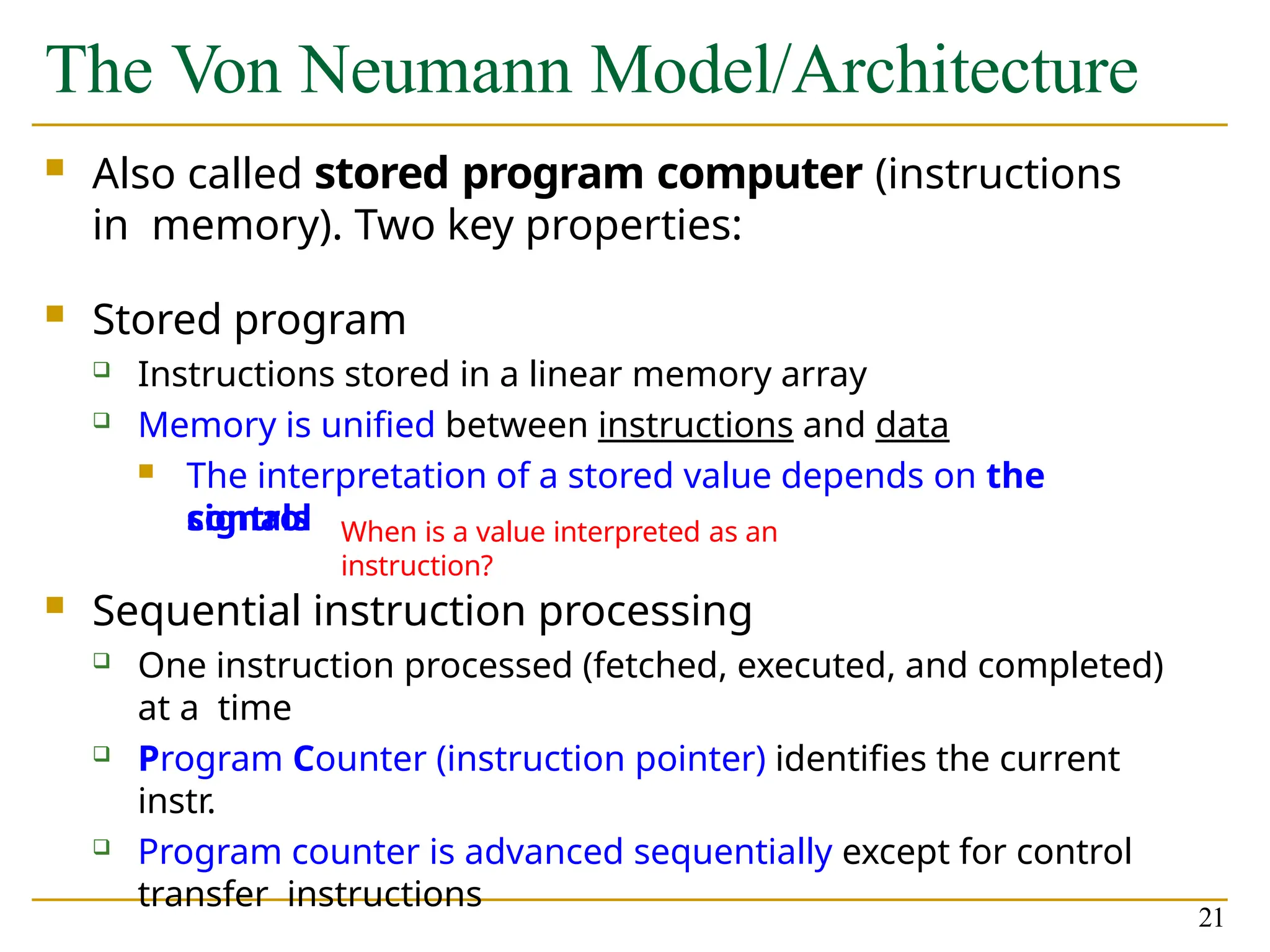

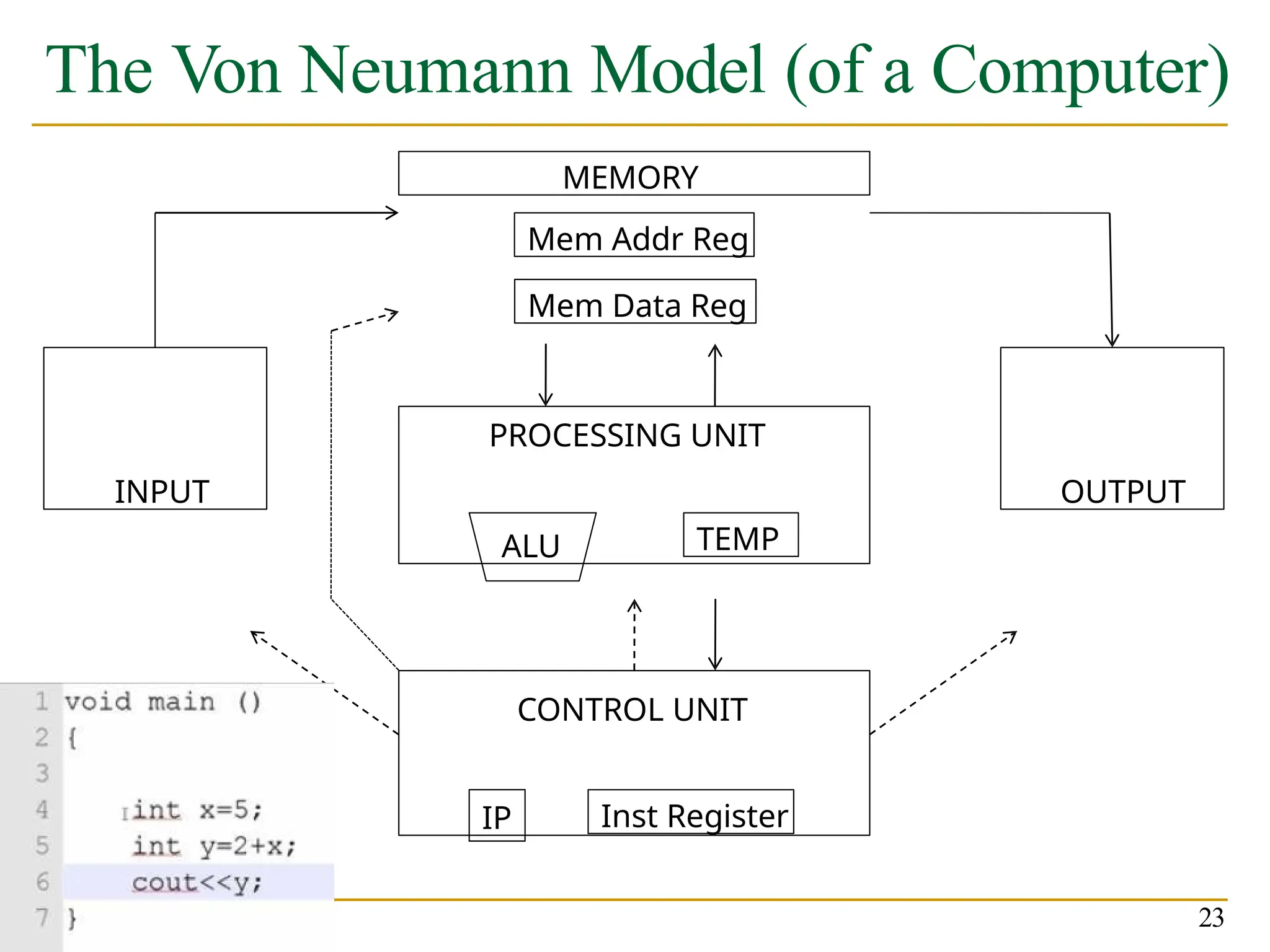

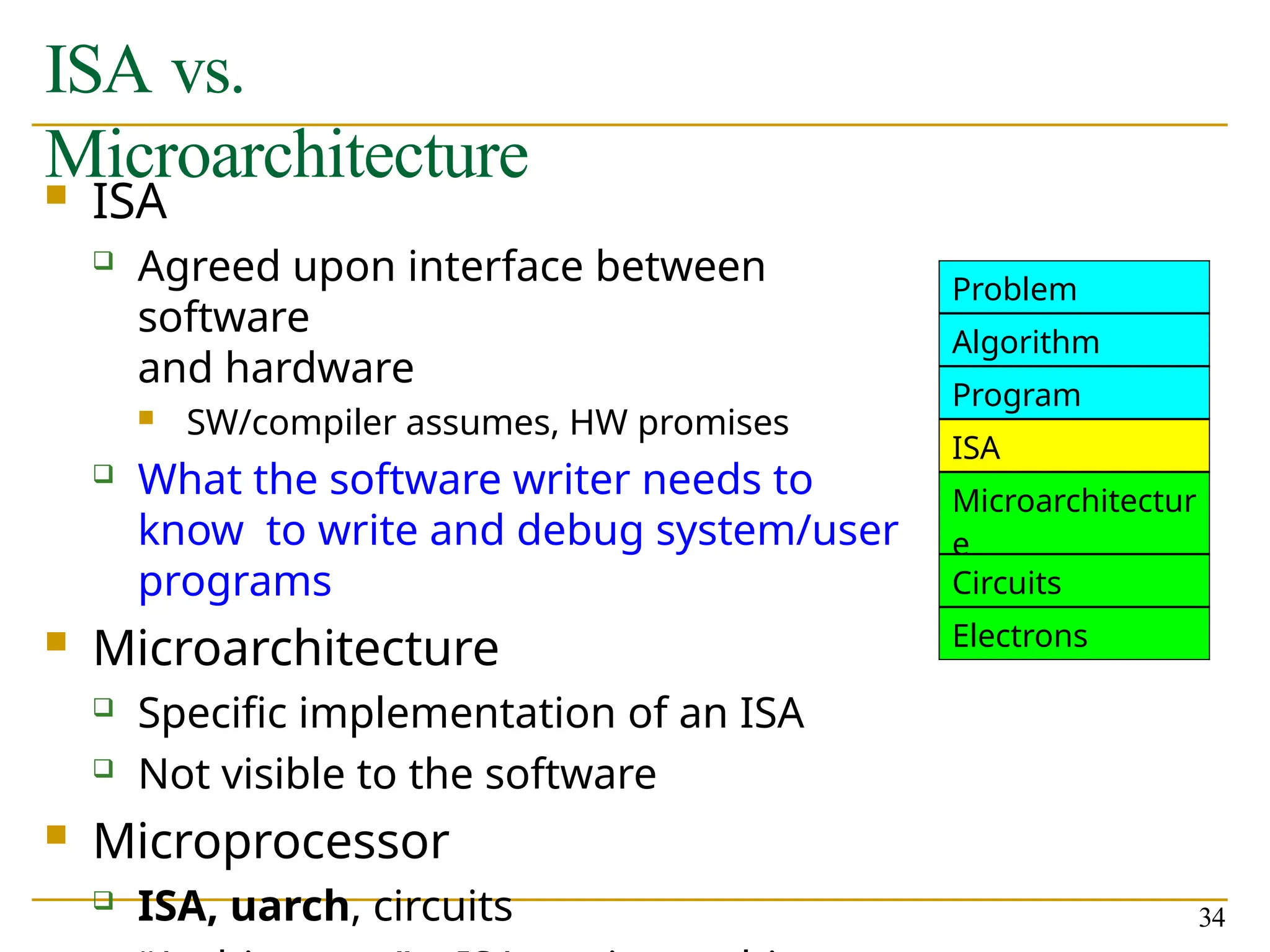

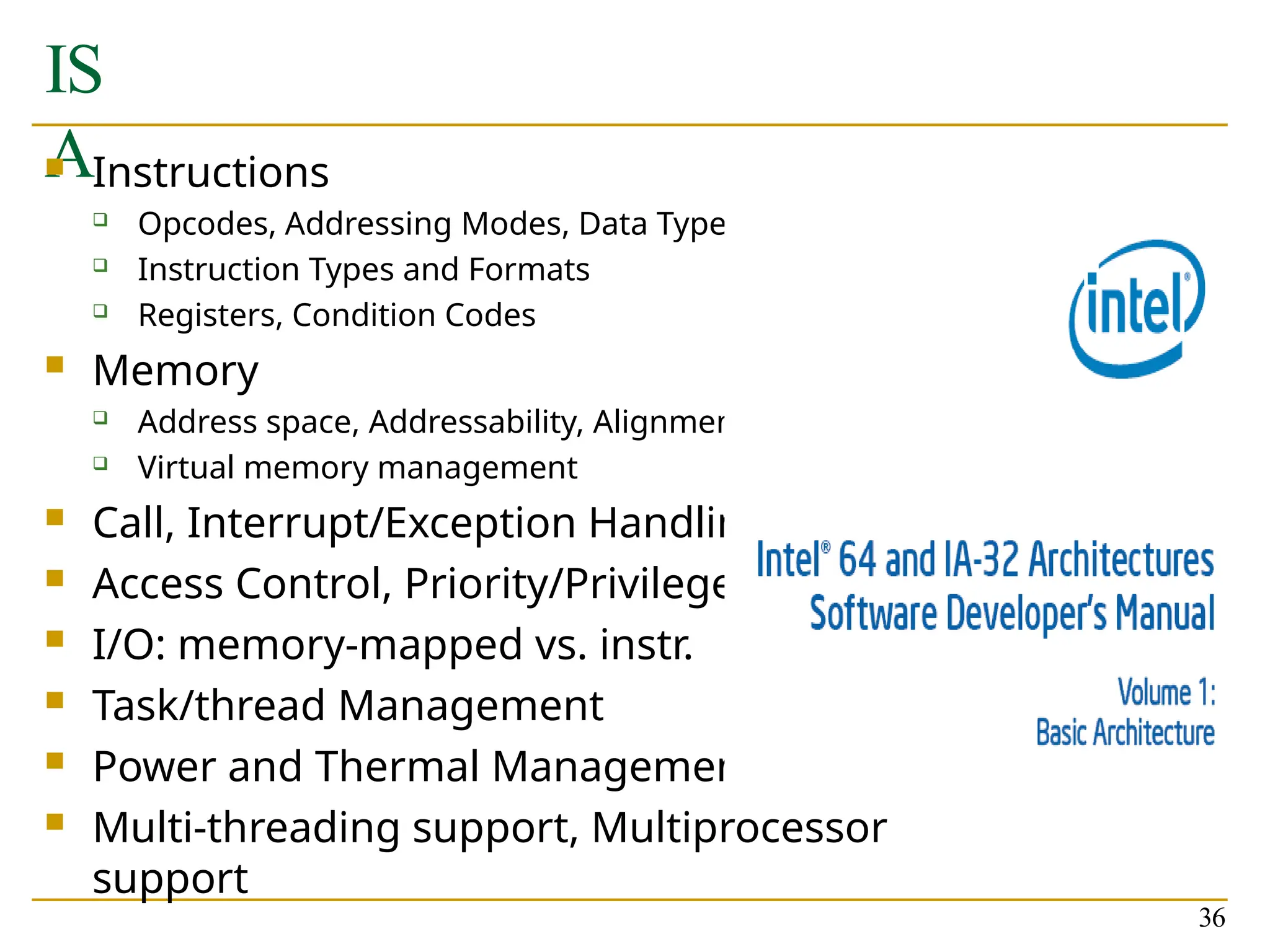

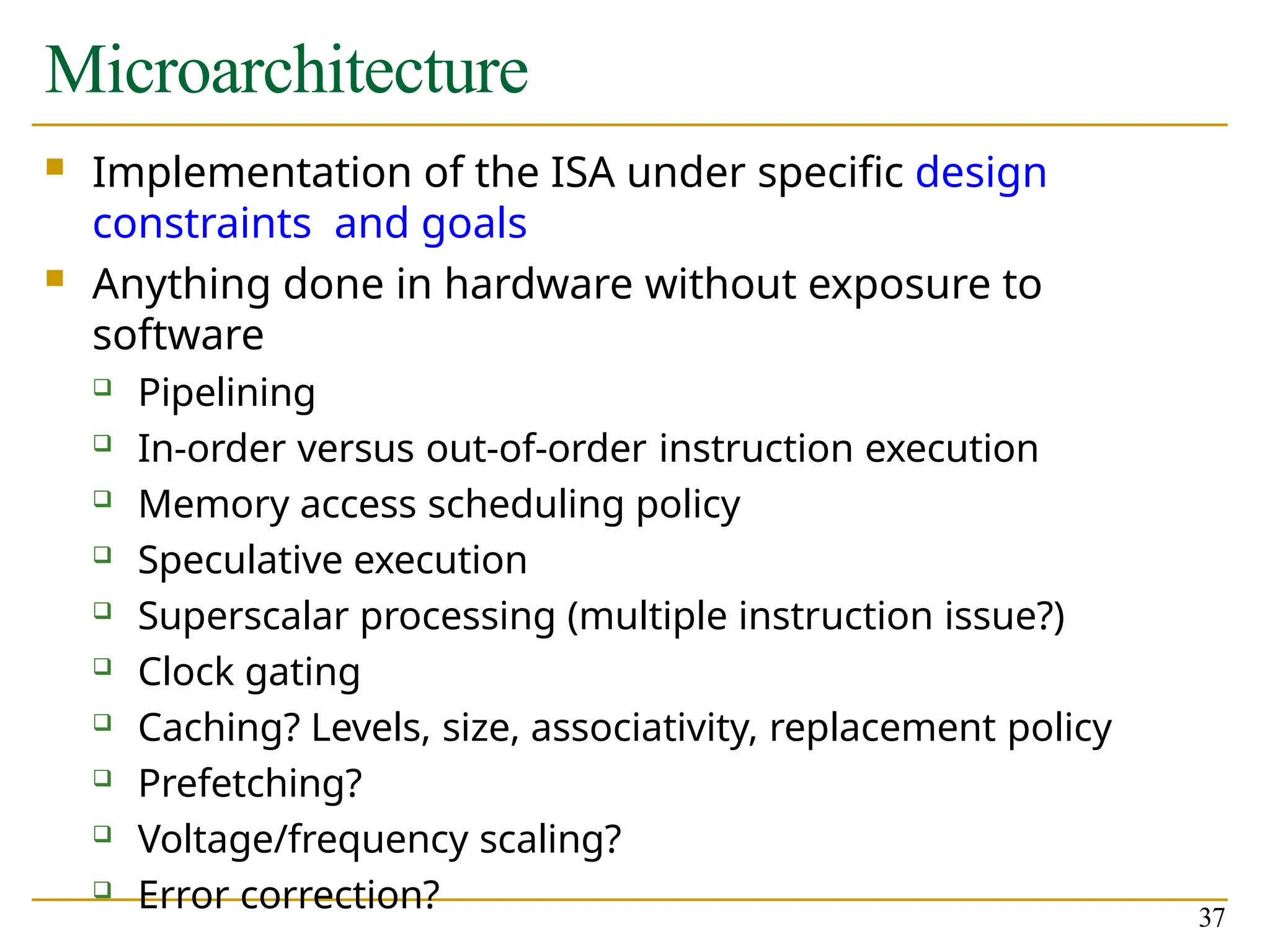

The document provides an overview of computer architecture, emphasizing its role in designing hardware and the hardware/software interface to meet goals such as performance and energy efficiency. It covers the levels of transformation from algorithm down to microarchitecture, the implications of Moore's Law, and the distinction between the instruction set architecture (ISA), which is the interface visible to software, and the microarchitecture, which is one particular implementation of that interface. The course aims to give students foundational knowledge and hands-on experience in designing and evaluating modern processors.
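
To make the ISA versus microarchitecture distinction concrete, here is a minimal sketch that is not taken from the slides. It assumes a hypothetical three-instruction toy ISA (`li`, `add`, `mul`) and two made-up latency tables standing in for two microarchitectures. The names `execute`, `SIMPLE_CORE`, and `FAST_CORE` are illustrative only. Both "implementations" produce the same register values, which is what the ISA guarantees, but they take a different number of cycles, which is a microarchitectural property.

```python
# Toy model: the ISA defines WHAT each instruction does to the registers;
# the microarchitecture (here reduced to a latency table) decides HOW LONG it takes.
# The instruction set and the cycle counts below are assumptions for illustration.

def execute(program, latency):
    """Run a program on the toy ISA and return (registers, total cycles)."""
    regs = [0] * 4  # architectural state: four general-purpose registers
    cycles = 0
    for op, rd, a, b in program:
        if op == "li":       # load immediate: rd <- a
            regs[rd] = a
        elif op == "add":    # rd <- regs[a] + regs[b]
            regs[rd] = regs[a] + regs[b]
        elif op == "mul":    # rd <- regs[a] * regs[b]
            regs[rd] = regs[a] * regs[b]
        else:
            raise ValueError(f"unknown opcode: {op}")
        cycles += latency[op]  # timing depends on the implementation only
    return regs, cycles

PROGRAM = [
    ("li", 0, 6, None),
    ("li", 1, 7, None),
    ("mul", 2, 0, 1),
    ("add", 3, 2, 0),
]

# Two hypothetical implementations of the same ISA.
SIMPLE_CORE = {"li": 1, "add": 1, "mul": 4}  # slow iterative multiplier
FAST_CORE = {"li": 1, "add": 1, "mul": 1}    # single-cycle multiplier

regs_a, cycles_a = execute(PROGRAM, SIMPLE_CORE)
regs_b, cycles_b = execute(PROGRAM, FAST_CORE)
print(regs_a == regs_b, cycles_a, cycles_b)
```

Software written against the ISA sees identical results on both cores. Only performance, and in a real design also energy and cost, changes with the implementation.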