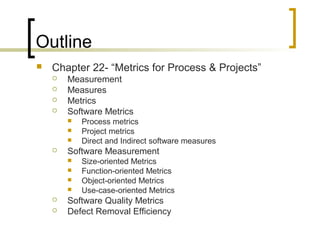

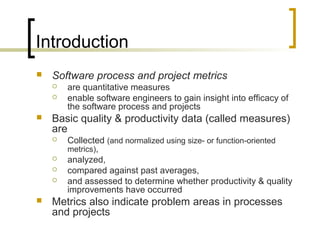

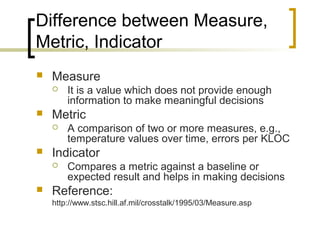

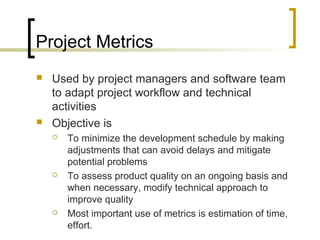

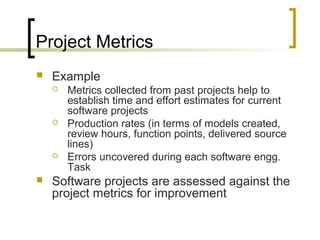

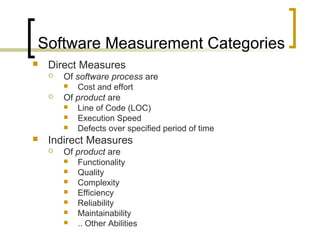

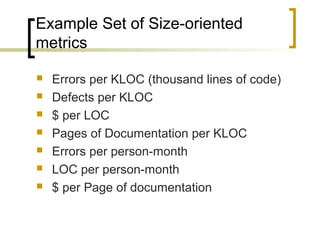

This document discusses software metrics for measuring process and project attributes. It defines the key terms measurement, measure, metric, and indicator, and describes process metrics, project metrics, size-oriented metrics, function-oriented metrics, and quality metrics. It also covers defect removal efficiency (DRE) and a redefined form of DRE for measuring the effectiveness of quality assurance activities.

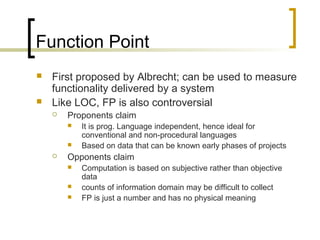

![Computing function points (cont.)](https://image.slidesharecdn.com/lecture3-160729071759/85/Lecture3-18-320.jpg)

The following empirical relationship is used to compute function points (FP):

FP = total count × [0.65 + 0.01 × Σ(Fi)]

The Fi (i = 1 to 14) are value adjustment factors (VAF), or simply "adjustment values", based on answers to a set of questions (see the list of questions on page 473 of the book, 6th edition).
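The relationship above can be sketched in Python as a quick check of the arithmetic. This is a minimal illustration, not the method from the book: the function name `function_points`, its parameter names, and the sample inputs (a total count of 50 and all fourteen adjustment values set to 3) are assumptions made up for the example.

```python
from typing import Sequence


def function_points(total_count: float, adjustment_values: Sequence[float]) -> float:
    """Compute FP = total count x [0.65 + 0.01 x sum(Fi)].

    `adjustment_values` holds the fourteen Fi values. The permitted range
    of each Fi is not stated in the text above, so it is not validated here.
    """
    if len(adjustment_values) != 14:
        raise ValueError("expected exactly 14 value adjustment factors (Fi)")
    return total_count * (0.65 + 0.01 * sum(adjustment_values))


# Hypothetical inputs: total count of 50, every Fi rated 3.
# Sum of Fi is 42, so the multiplier is 0.65 + 0.42 = 1.07.
example_fp = function_points(50, [3] * 14)
print(f"FP is approximately {example_fp:.2f}")
```

With these made-up inputs the multiplier is 1.07, so the adjusted value comes out slightly above the unadjusted total count.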

![Software Quality Metrics](https://image.slidesharecdn.com/lecture3-160729071759/85/Lecture3-23-320.jpg)

Integrity is the system's ability to withstand attacks, both accidental and intentional. Two more attributes help determine integrity:

- threat = probability that an attack (one that causes failure) occurs within a given time
- security = probability that an attack will be repelled

Integrity = Σ [1 - (threat × (1 - security))]

Usability quantifies ease of use and learning (time).
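The integrity formula above can be sketched as a short Python function. This is a hedged illustration only: the name `integrity`, the representation of each term as a `(threat, security)` pair, and the sample probabilities are assumptions for the example. The slide does not say what the sum ranges over; the sketch assumes one term per kind of attack.

```python
from typing import Iterable, Tuple


def integrity(attacks: Iterable[Tuple[float, float]]) -> float:
    """Sum 1 - threat * (1 - security) over (threat, security) pairs.

    Assumption: each pair describes one kind of attack. Both values are
    probabilities, so they must lie between 0 and 1.
    """
    total = 0.0
    for threat, security in attacks:
        if not (0.0 <= threat <= 1.0 and 0.0 <= security <= 1.0):
            raise ValueError("threat and security must be probabilities in [0, 1]")
        total += 1.0 - threat * (1.0 - security)
    return total


# Hypothetical values: threat = 0.25, security = 0.95.
# The term is 1 - 0.25 * 0.05 = 1 - 0.0125 = 0.9875.
example_integrity = integrity([(0.25, 0.95)])
print(f"integrity is approximately {example_integrity:.4f}")
```

For a single pair, the result approaches 1 as security rises or threat falls, which matches the reading of integrity as the ability to withstand attacks.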