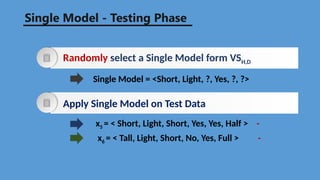

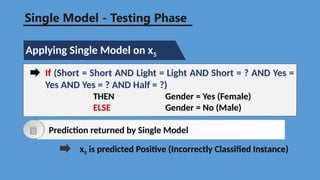

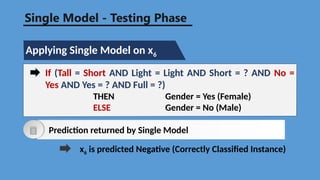

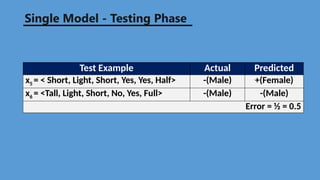

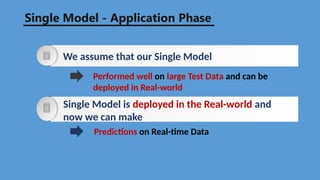

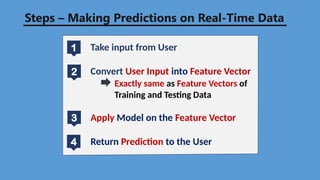

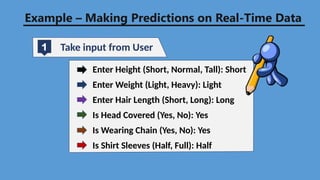

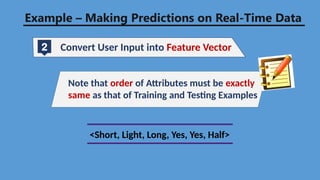

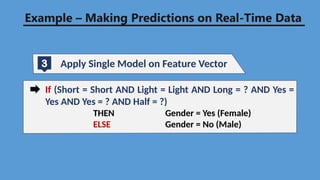

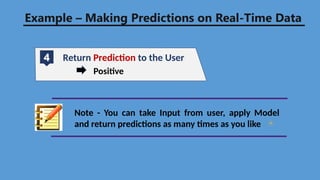

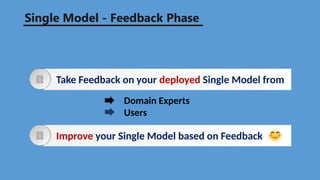

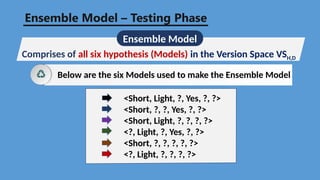

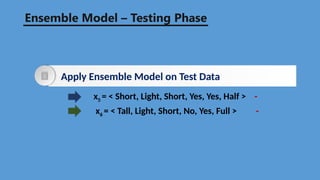

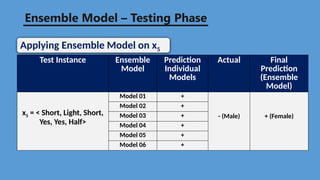

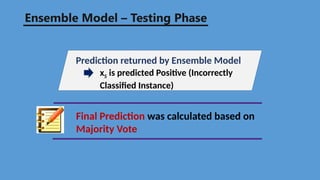

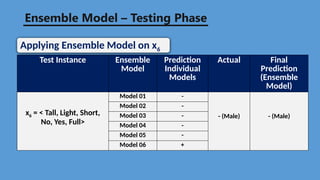

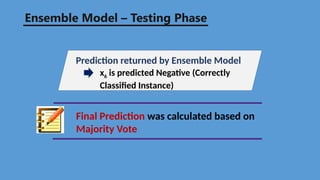

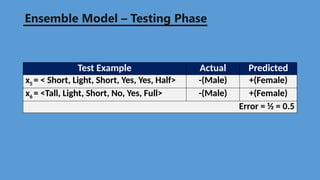

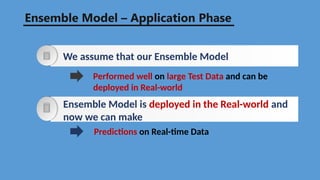

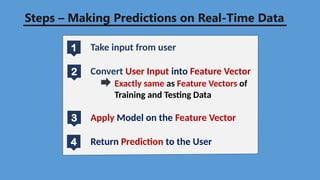

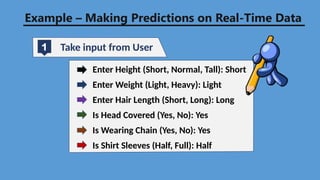

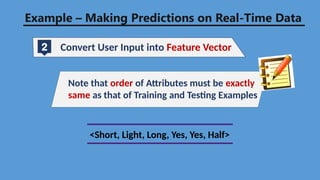

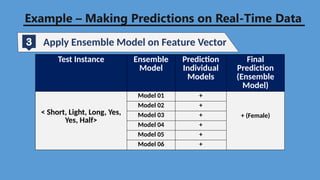

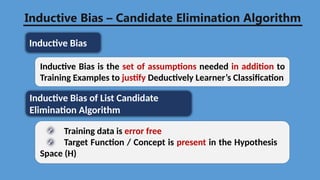

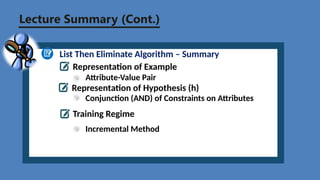

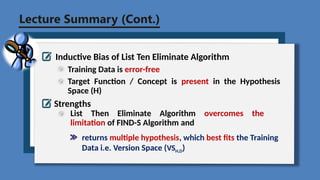

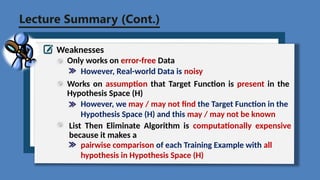

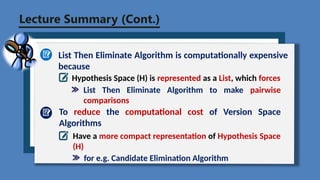

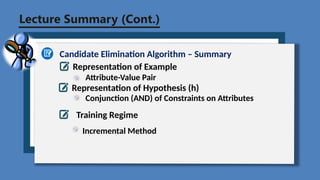

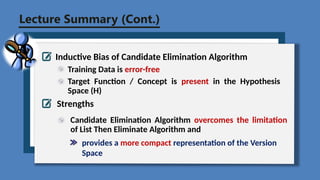

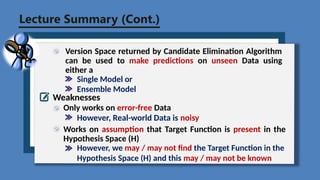

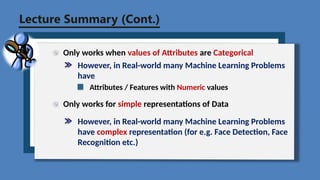

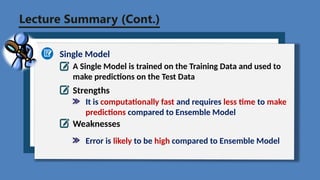

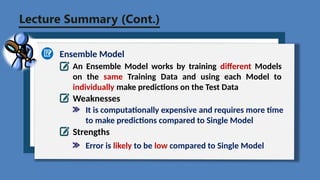

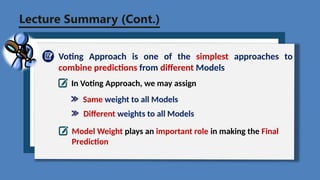

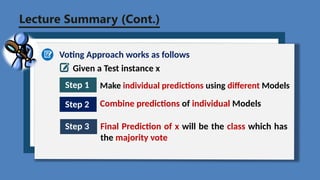

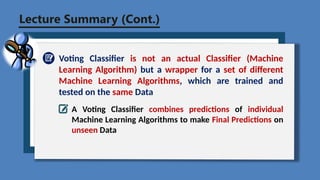

The document covers machine learning models, focusing on the version space algorithm and its application to predicting gender from a set of features. It describes how both single and ensemble models classify data, including the testing phase and the prediction accuracy of each model. It also weighs the strengths and weaknesses of these models, emphasizing that the training data must be error-free and that the approaches differ in their computational demands.
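
To make the version space idea concrete, here is a minimal sketch of the list-then-eliminate form of the algorithm. It is not the document's own implementation. The attribute names (`hair`, `height`), their values, the training examples, and all function names are hypothetical, chosen only for illustration, since the actual features are not listed in the text. A hypothesis is a conjunction of attribute constraints, where `?` accepts any value.

```python
from itertools import product

# Hypothetical attributes and values; the source does not list the real features.
DOMAINS = {
    "hair": ["long", "short"],
    "height": ["tall", "short"],
}
WILDCARD = "?"


def all_hypotheses(domains):
    """Enumerate every conjunctive hypothesis: each attribute is a value or a wildcard."""
    choices = [values + [WILDCARD] for values in domains.values()]
    return [dict(zip(domains, combo)) for combo in product(*choices)]


def matches(hypothesis, example):
    """A hypothesis covers an example if every non-wildcard constraint agrees with it."""
    return all(v == WILDCARD or example[k] == v for k, v in hypothesis.items())


def version_space(domains, training, positive_label):
    """Keep only the hypotheses that are consistent with every training example."""
    return [
        h for h in all_hypotheses(domains)
        if all(matches(h, x) == (y == positive_label) for x, y in training)
    ]


def predict(space, example, positive_label, negative_label):
    """Return a label only when all surviving hypotheses agree, otherwise None."""
    votes = {matches(h, example) for h in space}
    if votes == {True}:
        return positive_label
    if votes == {False}:
        return negative_label
    return None  # empty version space or disagreement among hypotheses


# Hypothetical, error-free training data.
TRAINING = [
    ({"hair": "long", "height": "short"}, "female"),
    ({"hair": "short", "height": "tall"}, "male"),
]
space = version_space(DOMAINS, TRAINING, positive_label="female")

# A single contradictory (noisy) example eliminates every hypothesis.
NOISY = TRAINING + [({"hair": "long", "height": "short"}, "male")]
noisy_space = version_space(DOMAINS, NOISY, positive_label="female")
```

With the clean data, three hypotheses survive, and `predict` answers only for examples on which all three agree. With the contradictory example added, `noisy_space` is empty and no prediction is possible, which illustrates why the approach depends on error-free training data. Enumerating every hypothesis also grows exponentially with the number of attributes, one source of the computational demands noted above.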