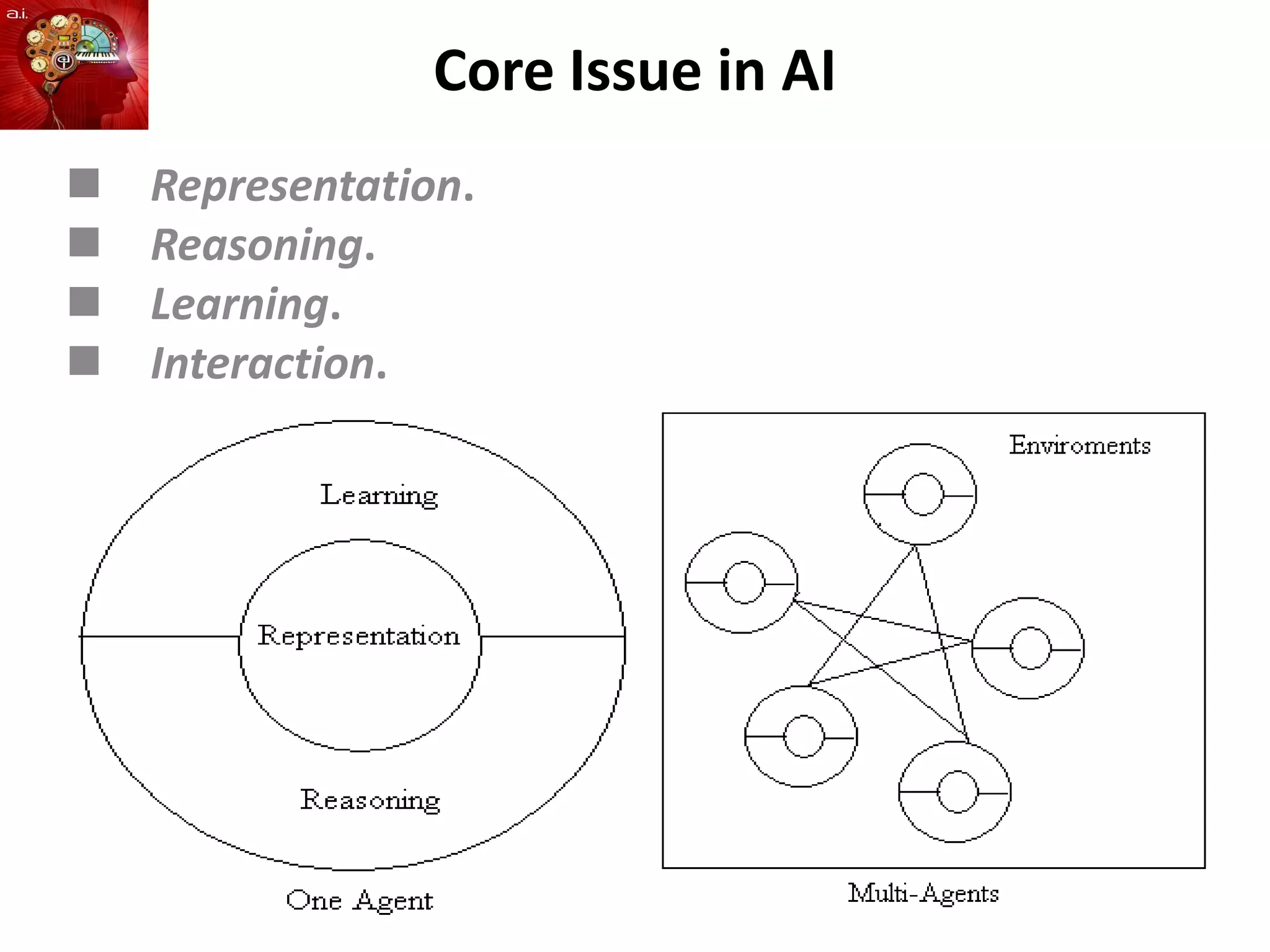

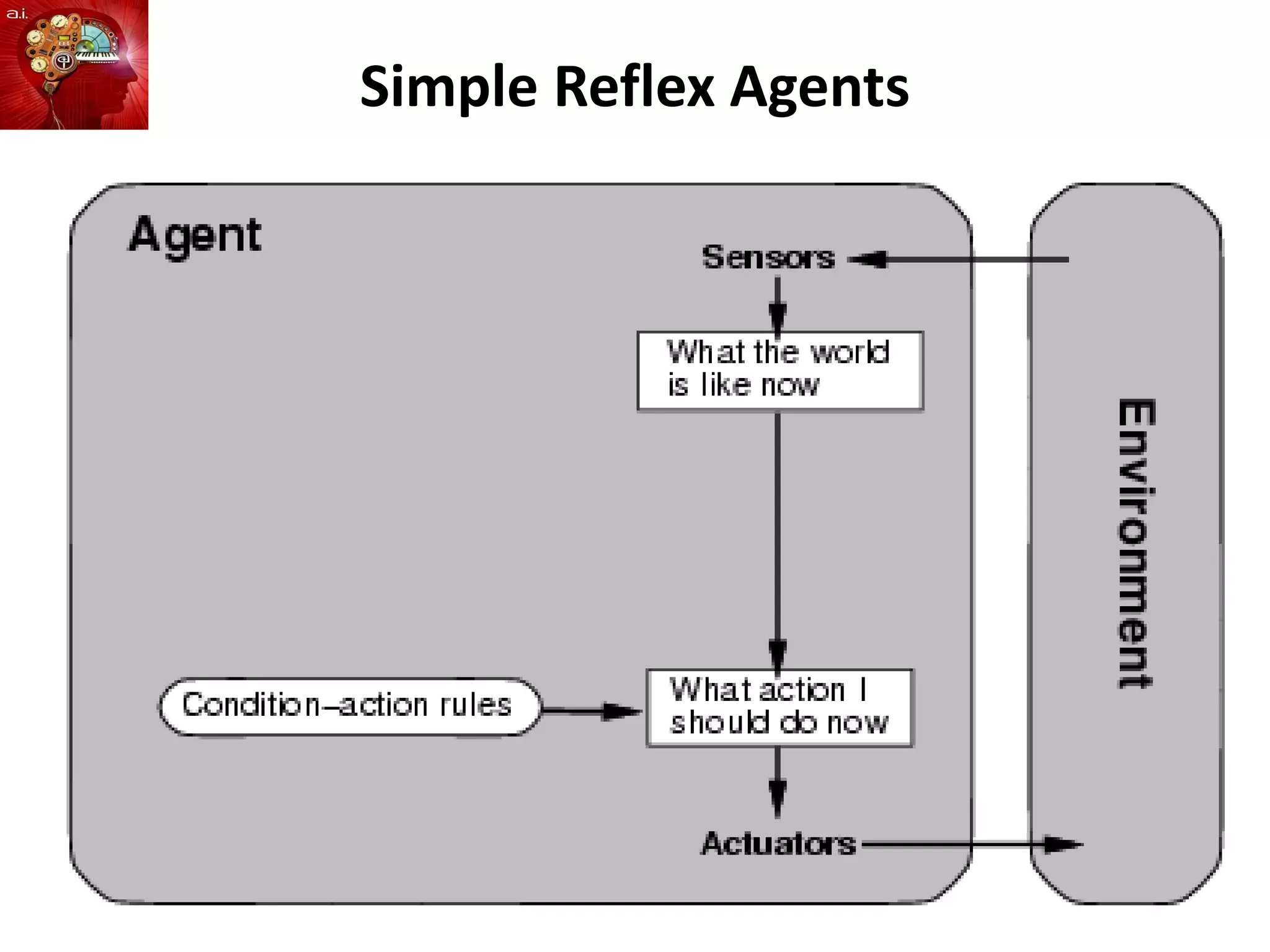

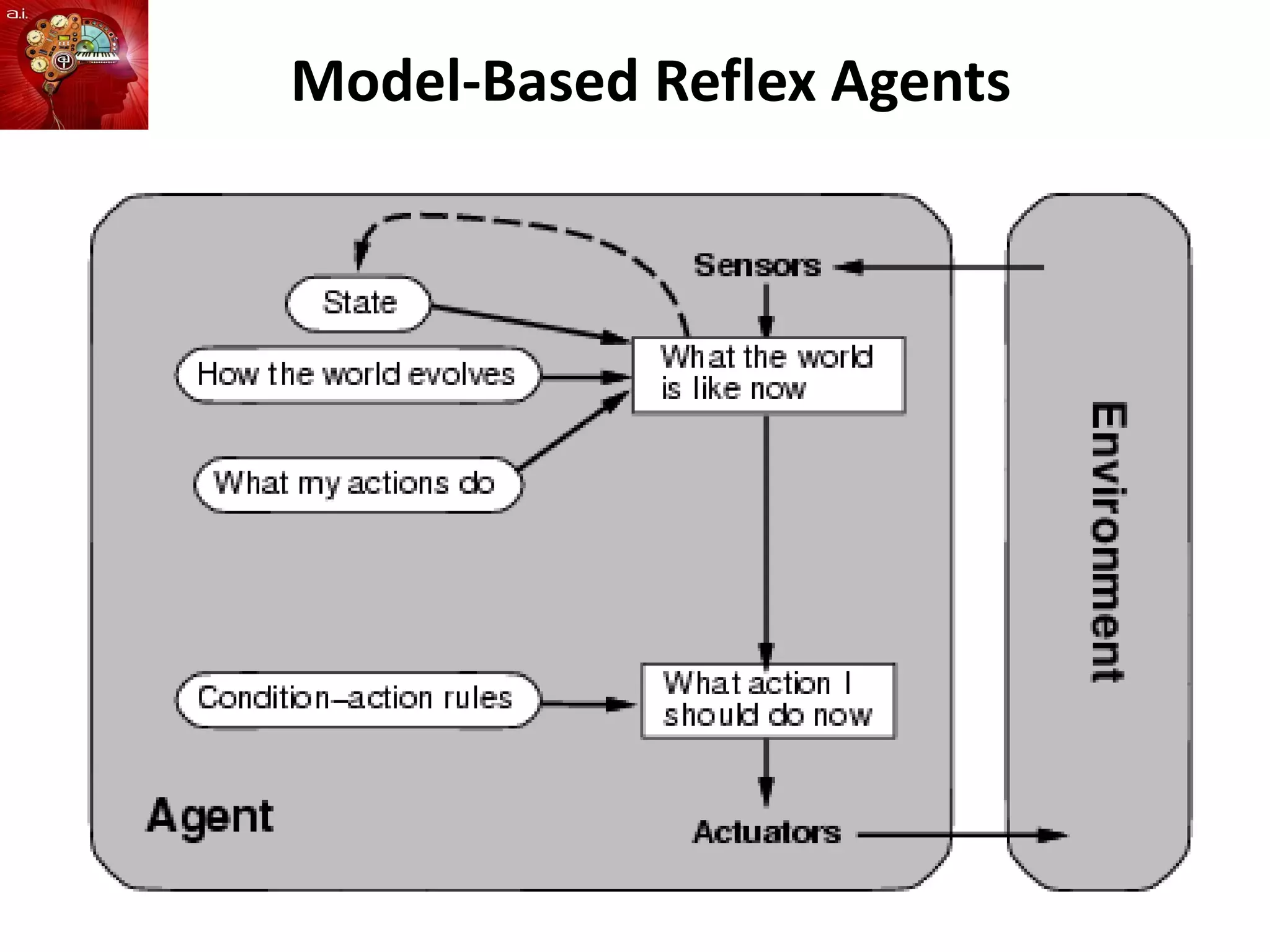

This document provides a general introduction to artificial intelligence (AI), covering definitions of AI, different views of AI, a brief history of AI, core issues in AI, and applications of AI. In discussing what AI is, it contrasts strong AI, the view that intelligent agents can become self-aware, with weak AI, the view that agents can only simulate some human behaviors. It also summarizes several types of intelligent agents: simple reflex agents, model-based reflex agents, goal-based agents, and utility-based agents.

![Rational Agents

• An agent is an entity that perceives and acts

• This course is about designing rational agents

• Abstractly, an agent is a function from percept histories to actions:

[f: P* → A]

• For any given class of environments and tasks, we seek the agent (or class of agents) with the best performance

• Caveat: computational limitations make perfect rationality unachievable

⇒ design the best program for the given machine resources.](https://image.slidesharecdn.com/lecture1-230117205756-4d227ea0/75/Lecture-1-ppt-13-2048.jpg)
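The agent-function view above can be made concrete with a small Python sketch. It is illustrative only: the percept format `(location, status)`, the two candidate agents `react_to_latest` and `always_suck`, the test histories in `TESTS`, and the scoring function `score` are hypothetical stand-ins chosen for this example, not part of the slides. Each agent is a callable from a tuple of percepts (a percept history, P*) to an action (A), and `best_agent` picks the candidate with the highest value under a given performance measure.

```python
from typing import Callable, Sequence, Tuple

Percept = Tuple[str, str]  # hypothetical percept format: (location, status)
Action = str
AgentFunction = Callable[[Tuple[Percept, ...]], Action]  # f: P* -> A


def react_to_latest(history: Tuple[Percept, ...]) -> Action:
    """Hypothetical agent function that only inspects the most recent percept."""
    location, status = history[-1]
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def always_suck(history: Tuple[Percept, ...]) -> Action:
    """Hypothetical agent function that ignores its percept history entirely."""
    return "Suck"


def best_agent(agents: Sequence[AgentFunction],
               performance: Callable[[AgentFunction], float]) -> AgentFunction:
    """Return the candidate agent that scores highest under the performance measure."""
    return max(agents, key=performance)


# Hypothetical performance measure: fraction of test histories answered
# with the expected action.
TESTS = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("B", "Clean"),): "Left",
}


def score(agent: AgentFunction) -> float:
    return sum(agent(h) == a for h, a in TESTS.items()) / len(TESTS)


print(best_agent([always_suck, react_to_latest], score).__name__)  # react_to_latest
```

Enumerating an explicit list of candidates like this only works for toy problems, which is one face of the computational caveat on the slide.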

![Agents and Environments

• The agent function maps from percept histories to actions:

[f: P* → A]

• The agent program runs on the physical architecture to produce f

• agent = architecture + program](https://image.slidesharecdn.com/lecture1-230117205756-4d227ea0/75/Lecture-1-ppt-30-2048.jpg)
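One way to picture "agent = architecture + program" is the following Python sketch, under the assumption that the architecture can be reduced to a loop that reads sensors and drives actuators. The names `make_program`, `run_architecture`, and `stop_when_all_clean` are hypothetical. The program receives only one percept per call, but because it stores the history itself it still implements an agent function over whole percept sequences; running it inside the architecture loop is what produces f.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

P = TypeVar("P")  # percept type
A = TypeVar("A")  # action type


def make_program(agent_function: Callable[[Tuple[P, ...]], A]) -> Callable[[P], A]:
    """Agent program: receives one percept per call, keeps the history itself,
    and so realises the agent function f over whole percept histories."""
    history: List[P] = []

    def program(percept: P) -> A:
        history.append(percept)
        return agent_function(tuple(history))

    return program


def run_architecture(program: Callable[[P], A], sensor_readings: Iterable[P],
                     actuator: Callable[[A], None]) -> None:
    """Hypothetical architecture: feed each sensor reading to the program and
    hand the chosen action to the actuator."""
    for percept in sensor_readings:
        actuator(program(percept))


def stop_when_all_clean(history):
    """Hypothetical agent function over percept histories (vacuum-world style):
    stop once both squares have been seen clean."""
    location, status = history[-1]
    if status == "Dirty":
        return "Suck"
    seen_clean = {loc for loc, st in history if st == "Clean"}
    if seen_clean >= {"A", "B"}:  # set superset test
        return "NoOp"
    return "Right" if location == "A" else "Left"


actions: List[str] = []
readings = [("A", "Dirty"), ("A", "Clean"), ("B", "Clean"), ("B", "Clean")]
run_architecture(make_program(stop_when_all_clean), readings, actions.append)
print(actions)  # ['Suck', 'Right', 'NoOp', 'NoOp']
```

Swapping in a different sensor source or actuator changes the architecture without touching the program, and vice versa.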

![Vacuum-Cleaner World

• Percepts: location and contents, e.g., [A, Dirty]

• Actions: Left, Right, Suck, NoOp](https://image.slidesharecdn.com/lecture1-230117205756-4d227ea0/75/Lecture-1-ppt-31-2048.jpg)
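A minimal Python model of this two-square world might look like the following. The class name `VacuumWorld`, the movement rules at the edges (moving Left from A or Right from B leaves the agent where it is), and the `clean_squares` performance helper are assumptions made for illustration; the slide fixes only the percept format and the four actions.

```python
from typing import Dict, Tuple

Percept = Tuple[str, str]  # (location, contents), e.g. ("A", "Dirty")


class VacuumWorld:
    """Hypothetical two-square vacuum world: square "A" on the left, "B" on the right."""

    ACTIONS = ("Left", "Right", "Suck", "NoOp")

    def __init__(self, dirty: Dict[str, bool], location: str = "A") -> None:
        self.dirty = dict(dirty)  # True means the square is dirty
        self.location = location

    def percept(self) -> Percept:
        """Return the agent's percept: its location and that square's contents."""
        return (self.location, "Dirty" if self.dirty[self.location] else "Clean")

    def execute(self, action: str) -> None:
        """Apply one of the four actions to the world."""
        if action not in self.ACTIONS:
            raise ValueError(f"unknown action: {action}")
        if action == "Suck":
            self.dirty[self.location] = False
        elif action == "Right":
            self.location = "B"  # moving Right from B keeps the agent at B
        elif action == "Left":
            self.location = "A"  # moving Left from A keeps the agent at A
        # "NoOp" leaves the world unchanged

    def clean_squares(self) -> int:
        """Hypothetical performance helper: how many squares are clean right now."""
        return sum(not d for d in self.dirty.values())


world = VacuumWorld({"A": True, "B": False})
print(world.percept())        # ('A', 'Dirty')
world.execute("Suck")
world.execute("Right")
print(world.percept())        # ('B', 'Clean')
print(world.clean_squares())  # 2
```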

![A Vacuum-Cleaner Agent

Percept sequence | Action

[A, Clean] | Right

[A, Dirty] | Suck

[B, Clean] | Left

[B, Dirty] | Suck

[A, Clean], [A, Clean] | Right

[A, Clean], [A, Dirty] | Suck

… | …

[A, Clean], [A, Clean], [A, Clean] | Right

[A, Clean], [A, Clean], [A, Dirty] | Suck

… | …](https://image.slidesharecdn.com/lecture1-230117205756-4d227ea0/75/Lecture-1-ppt-32-2048.jpg)
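The table above is an explicit agent function. A hedged Python sketch of an agent that looks its action up in such a table is shown below; it stores only the rows listed on the slide and leaves the elided rows out, so any unlisted history maps to `None`. The helper name `make_table_driven_agent` is hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Percept = Tuple[str, str]

# Only the rows shown on the slide; the elided rows are deliberately not filled in.
TABLE: Dict[Tuple[Percept, ...], str] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("B", "Dirty"),): "Suck",
    (("A", "Clean"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("A", "Dirty")): "Suck",
    (("A", "Clean"), ("A", "Clean"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("A", "Clean"), ("A", "Dirty")): "Suck",
}


def make_table_driven_agent(
    table: Dict[Tuple[Percept, ...], str]
) -> Callable[[Percept], Optional[str]]:
    """Build an agent that appends each percept to its history and looks the
    whole history up in the table (None if the row is missing)."""
    percepts: List[Percept] = []

    def agent(percept: Percept) -> Optional[str]:
        percepts.append(percept)
        return table.get(tuple(percepts))

    return agent


agent = make_table_driven_agent(TABLE)
print(agent(("A", "Clean")))  # Right
print(agent(("A", "Dirty")))  # Suck
print(agent(("B", "Clean")))  # None: this history is not in the partial table
```

Because the key is the entire percept history, a complete table would need one row for every possible percept sequence, so its size grows exponentially with the agent's lifetime.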

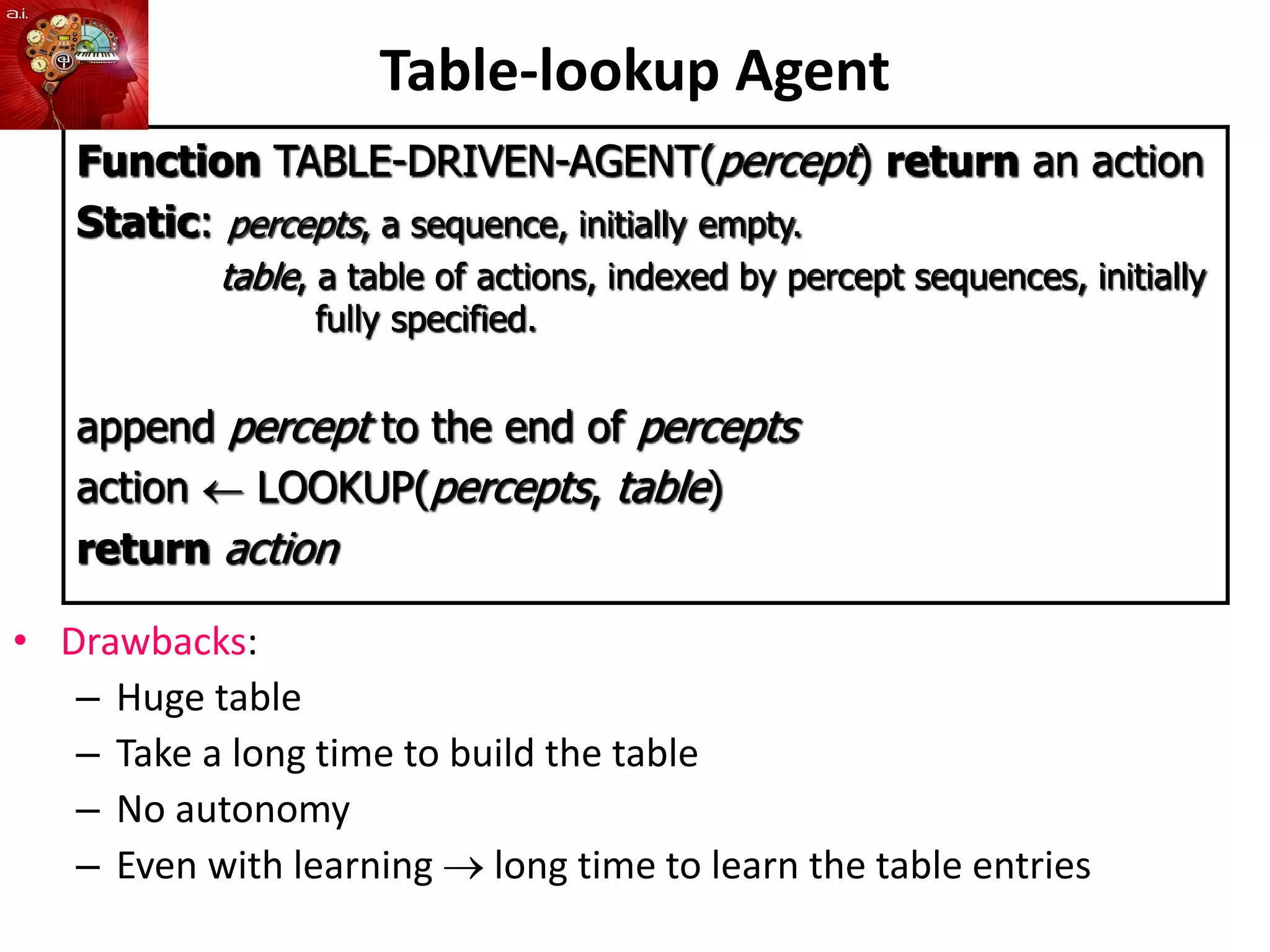

![Simple Reflex Agents

function SIMPLE-REFLEX-AGENT(percept) returns an action

static: rules, a set of condition-action rules

state ← INTERPRET-INPUT(percept)

rule ← RULE-MATCH(state, rules)

action ← RULE-ACTION[rule]

return action](https://image.slidesharecdn.com/lecture1-230117205756-4d227ea0/75/Lecture-1-ppt-49-2048.jpg)
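The SIMPLE-REFLEX-AGENT pseudocode translates almost line for line into Python. In this sketch the condition-action rules are `(condition, action)` pairs matched in order, and `INTERPRET-INPUT` is the identity because a vacuum-world percept already describes the relevant state; both choices, and the rule set `VACUUM_RULES`, are assumptions for illustration.

```python
from typing import Callable, List, Tuple

Percept = Tuple[str, str]  # (location, status)
State = Tuple[str, str]
# A condition-action rule: a test on the interpreted state plus the action to take.
Rule = Tuple[Callable[[State], bool], str]


def interpret_input(percept: Percept) -> State:
    # Assumption: in the vacuum world the percept already is the relevant state.
    return percept


def rule_match(state: State, rules: List[Rule]) -> Rule:
    """Return the first rule whose condition holds for the state."""
    for rule in rules:
        condition, _ = rule
        if condition(state):
            return rule
    raise LookupError(f"no rule matches {state}")


def rule_action(rule: Rule) -> str:
    return rule[1]


# Hypothetical rule set for the two-square vacuum world, checked top to bottom.
VACUUM_RULES: List[Rule] = [
    (lambda s: s[1] == "Dirty", "Suck"),
    (lambda s: s[0] == "A", "Right"),
    (lambda s: s[0] == "B", "Left"),
]


def simple_reflex_agent(percept: Percept, rules: List[Rule] = VACUUM_RULES) -> str:
    state = interpret_input(percept)   # state <- INTERPRET-INPUT(percept)
    rule = rule_match(state, rules)    # rule <- RULE-MATCH(state, rules)
    action = rule_action(rule)         # action <- RULE-ACTION[rule]
    return action


print(simple_reflex_agent(("A", "Dirty")))  # Suck
print(simple_reflex_agent(("B", "Clean")))  # Left
```

Ordering the rules makes conflicts explicit: a dirty square triggers `Suck` before any movement rule is considered.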

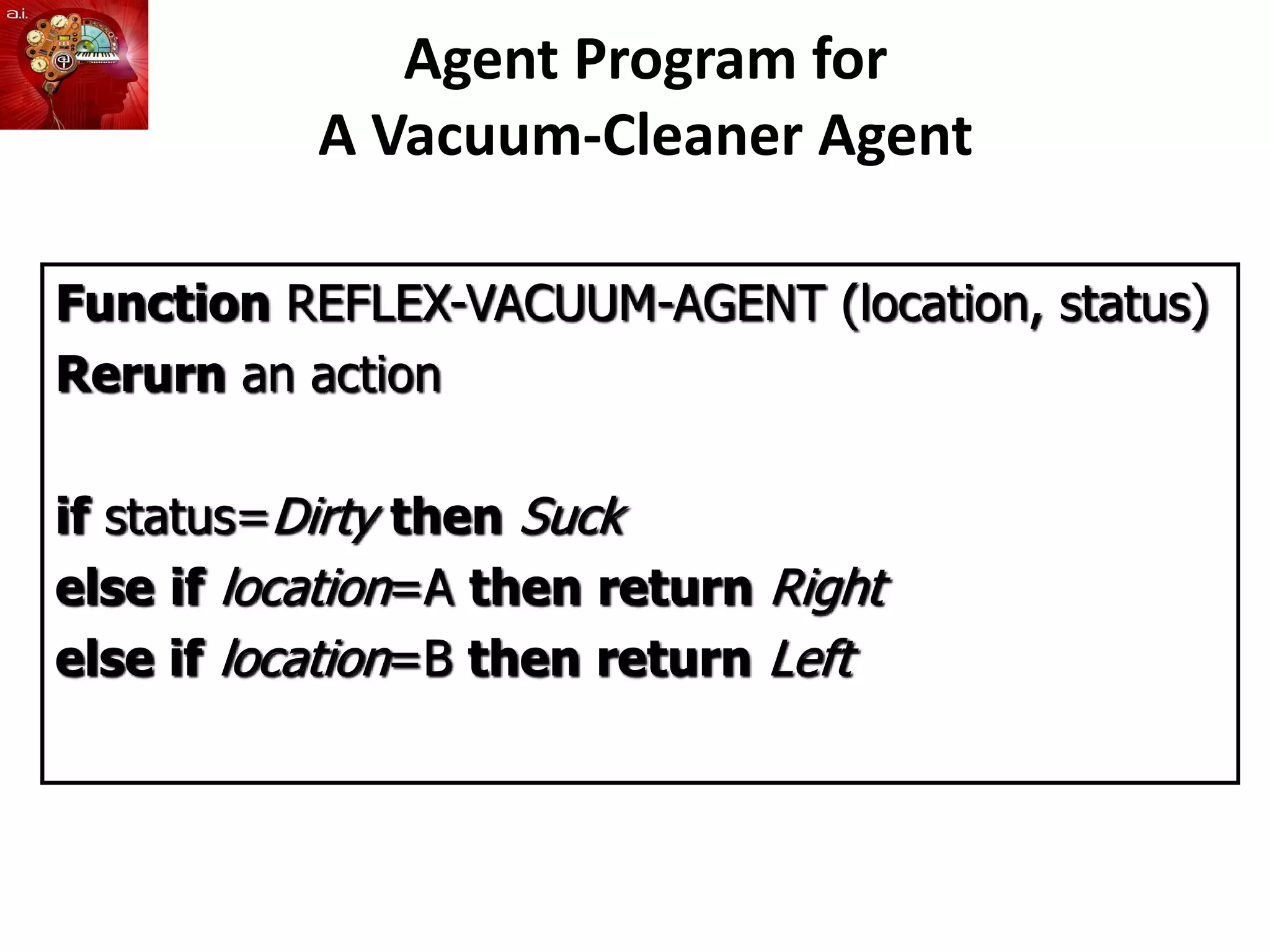

![Model-based Reflex Agents

function REFLEX-AGENT-WITH-STATE(percept) returns an action

static: state, a description of the current world state

rules, a set of condition-action rules

action, the most recent action, initially none

state ← UPDATE-STATE(state, action, percept)

rule ← RULE-MATCH(state, rules)

action ← RULE-ACTION[rule]

return action](https://image.slidesharecdn.com/lecture1-230117205756-4d227ea0/75/Lecture-1-ppt-51-2048.jpg)
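A possible Python rendering of REFLEX-AGENT-WITH-STATE for the vacuum world is sketched below. The internal state (a belief about each square, `None` until observed), the simple model that `Suck` leaves the current square clean, and the rule that returns `NoOp` once both squares are believed clean are all assumptions chosen to show why remembered state matters; the class name `ModelBasedReflexAgent` is hypothetical.

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, str]  # (location, status)


class ModelBasedReflexAgent:
    """Sketch of REFLEX-AGENT-WITH-STATE for the two-square vacuum world."""

    def __init__(self) -> None:
        # state: belief about each square's status; None means not yet observed
        self.state: Dict[str, Optional[str]] = {"A": None, "B": None}
        self.location: Optional[str] = None
        self.action: Optional[str] = None  # the most recent action, initially none

    def update_state(self, percept: Percept) -> None:
        """state <- UPDATE-STATE(state, action, percept)"""
        location, status = percept
        # Assumed model of the last action's effect: Suck cleans the square it ran on.
        if self.action == "Suck" and self.location is not None:
            self.state[self.location] = "Clean"
        self.location = location
        self.state[location] = status  # the fresh percept overrides any prediction

    def rule_match(self) -> str:
        """Condition-action rules applied to the internal state, checked in order."""
        if self.state[self.location] == "Dirty":
            return "Suck"
        if all(v == "Clean" for v in self.state.values()):
            return "NoOp"
        return "Right" if self.location == "A" else "Left"

    def __call__(self, percept: Percept) -> str:
        self.update_state(percept)
        self.action = self.rule_match()
        return self.action


agent = ModelBasedReflexAgent()
percepts = [("A", "Dirty"), ("A", "Clean"), ("B", "Dirty"), ("B", "Clean")]
print([agent(p) for p in percepts])  # ['Suck', 'Right', 'Suck', 'NoOp']
```

A simple reflex agent with only the current percept would keep shuttling between the two clean squares; the remembered state is what lets this agent stop once it believes both squares are clean.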