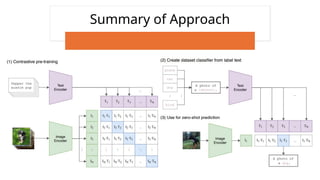

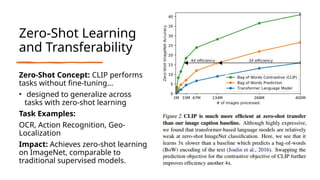

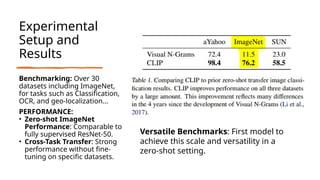

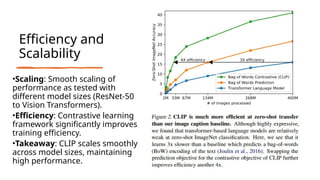

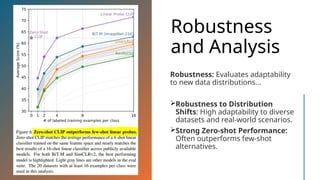

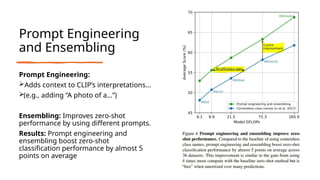

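The document discusses CLIP (Contrastive Language-Image Pre-training), a framework designed to overcome a key limitation of traditional computer vision models, which are trained to predict a fixed set of predetermined categories, by learning visual concepts from natural language paired with images. CLIP is trained on a dataset of 400 million image-text pairs with a contrastive objective: within each batch, it maximizes the similarity between the embeddings of matching image-text pairs and minimizes it for mismatched pairs. This yields strong performance across a range of tasks, including zero-shot transfer, where new categories are specified in text rather than learned from labeled examples. While CLIP shows strong generalization and scalability, it faces challenges such as data bias and underperformance in specialized domains.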
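The contrastive strategy can be illustrated with a minimal pure-Python sketch under stated assumptions. It does not include CLIP's image and text encoders. Instead, it takes already-computed embedding vectors as input and shows two things: the symmetric cross-entropy loss over an N×N matrix of image-text similarities, and zero-shot classification by picking the class whose text embedding is closest to the image embedding. The function names (`clip_contrastive_loss`, `zero_shot_predict`) are hypothetical. The fixed temperature of 0.07 is an assumption for illustration. CLIP learns this value during training, and 0.07 is its reported initialization.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits_row, target):
    """-log softmax(logits_row)[target], computed with a stable log-sum-exp."""
    m = max(logits_row)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits_row))
    return log_sum_exp - logits_row[target]


def clip_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss over a batch of N matched image-text pairs.

    Pair i (image_emb[i], text_emb[i]) is the positive; the other N-1 texts
    (or images) in the batch act as negatives. The temperature is fixed here
    as a simplifying assumption (CLIP learns it).
    """
    imgs = [l2_normalize(v) for v in image_emb]
    txts = [l2_normalize(v) for v in text_emb]
    n = len(imgs)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image -> text: each row should put its mass on the diagonal entry.
    loss_images = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: same check over the columns (the transposed matrix).
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_texts = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (loss_images + loss_texts) / 2


def zero_shot_predict(image_vec, class_text_embs):
    """Return the index of the class text embedding most similar to the image.

    Assumes class_text_embs were produced by encoding one text prompt per
    class (e.g. "a photo of a {label}."); the encoder itself is out of scope.
    """
    img = l2_normalize(image_vec)
    scores = [sum(a * b for a, b in zip(img, l2_normalize(t))) for t in class_text_embs]
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    aligned_texts = [[1.0, 0.0], [0.0, 1.0]]     # text i matches image i
    mismatched_texts = [[0.0, 1.0], [1.0, 0.0]]  # texts swapped
    print("aligned loss:", clip_contrastive_loss(images, aligned_texts))
    print("mismatched loss:", clip_contrastive_loss(images, mismatched_texts))
    print("zero-shot class:", zero_shot_predict([0.9, 0.1], [[1.0, 0.0], [0.0, 1.0]]))
```

With the toy vectors above, the loss is close to zero when each image's embedding already matches its own caption, and large when the captions are swapped. Training therefore drives the encoders toward the aligned configuration. Zero-shot prediction reuses the same similarity score, which is why no task-specific classifier head is needed.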