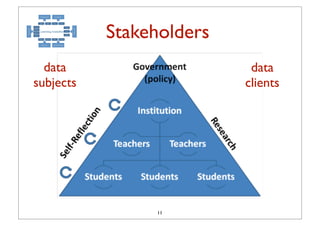

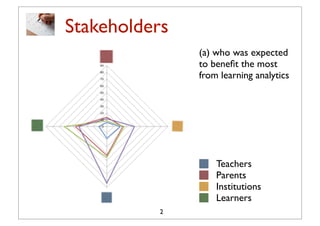

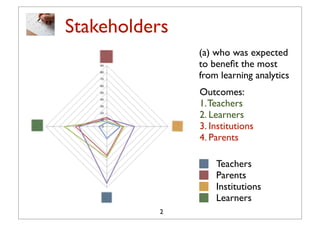

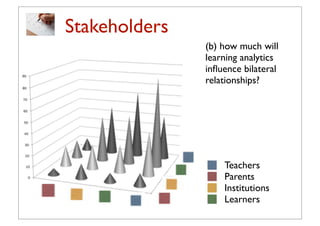

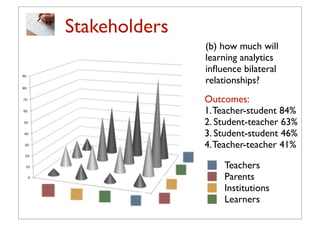

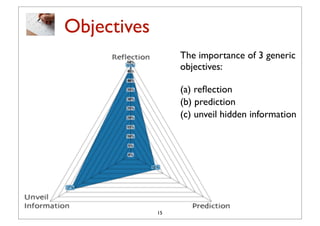

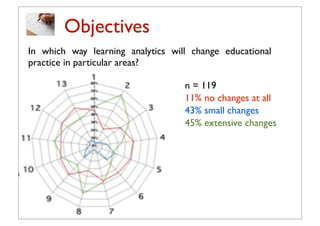

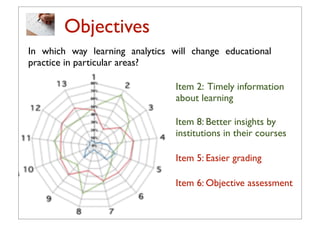

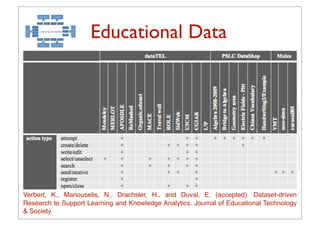

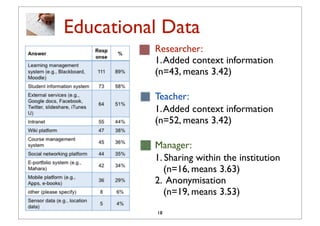

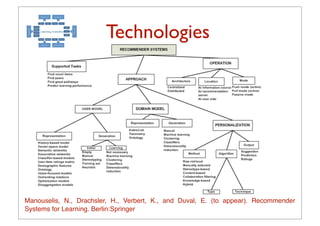

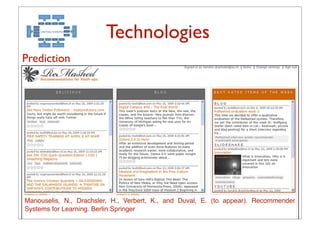

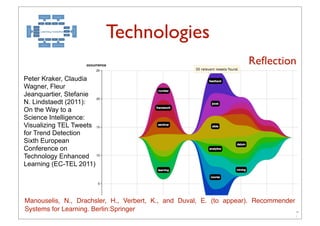

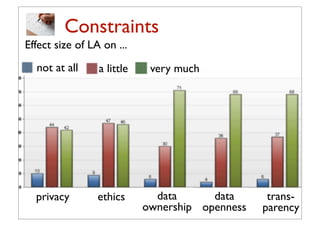

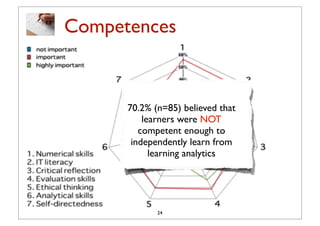

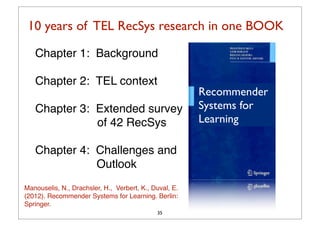

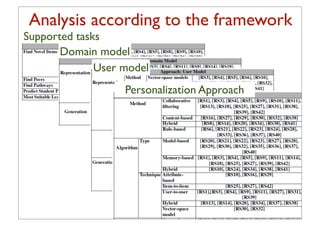

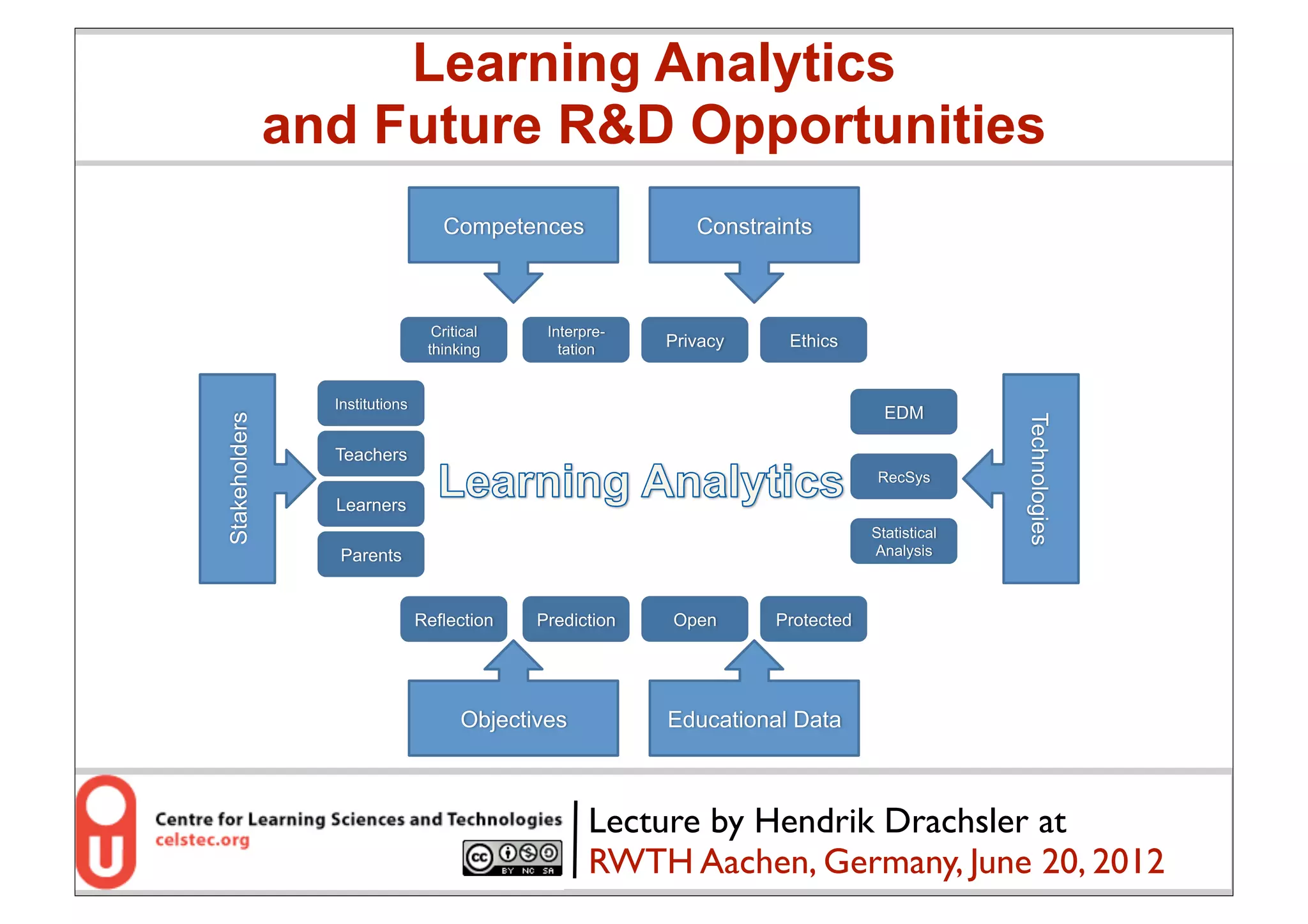

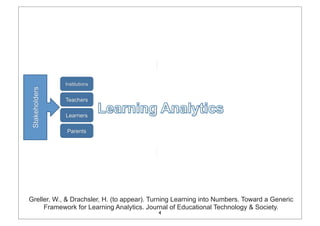

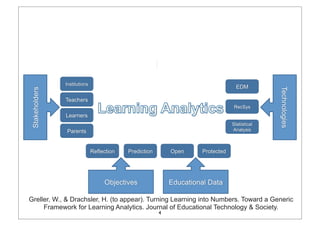

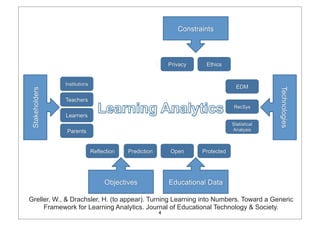

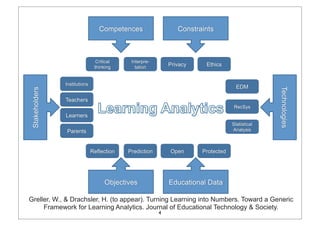

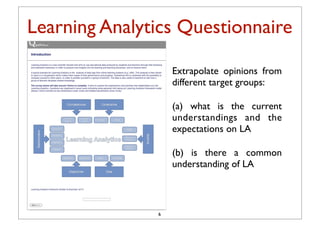

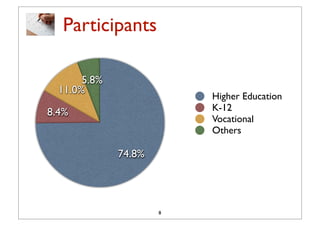

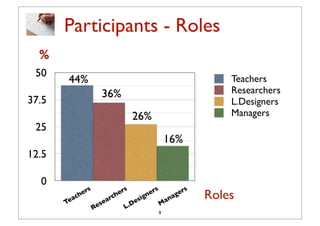

The lecture by Hendrik Drachsler discusses a framework for learning analytics and related survey findings, highlighting the roles of stakeholders including teachers, learners, and institutions. Key survey results indicate that learners and teachers are the main beneficiaries of learning analytics, with teachers expected to benefit the most from improved teacher-student relationships. The lecture also addresses future research directions, privacy concerns, and the competencies needed to use learning analytics effectively.

![Participants - Reach: Responses from 31 countries [UK (38), US (30), NL (22)]](https://image.slidesharecdn.com/rwthlaklecture-120620151653-phpapp02/85/Learning-Analytics-and-Future-R-D-at-CELSTEC-16-320.jpg)