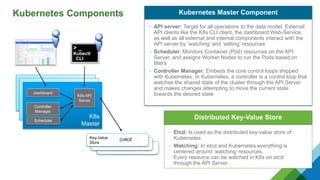

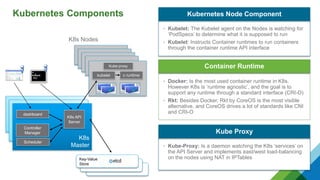

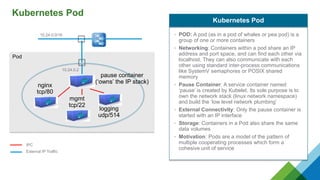

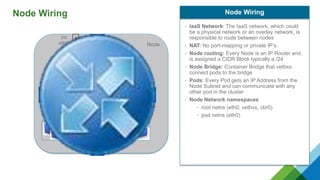

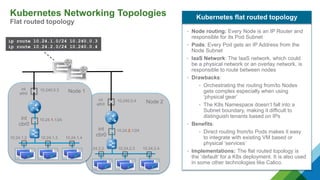

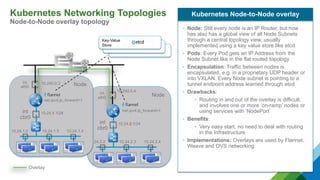

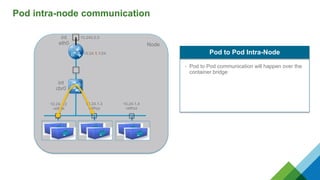

The document gives an overview of Kubernetes, an open-source platform for automating the deployment and management of application containers, designed for scalability and efficient resource use. It describes the Kubernetes architecture and its major components, such as the API server, scheduler, and controller manager, then covers networking topologies, service abstractions, and external connectivity options. It also discusses the role of CNI (Container Network Interface) and the use of namespaces to build separate network topologies for routing and management.

![What is Kubernetes?](https://image.slidesharecdn.com/kubernetes-meetup-170413160043/85/KuberNETes-meetup-3-320.jpg)

Kubernetes Roots

- Kubernetes: Kubernetes, or K8s for short, is the ancient Greek word for helmsman.
- K8s roots: Kubernetes was championed by Google and is now backed by major enterprise IT vendors and users (including VMware).
- Borg: Google's internal task scheduling system, Borg, served as the blueprint for Kubernetes, but the code base is different [1].

What Kubernetes is [0]

- Mission statement: Kubernetes is an open-source platform for automating deployment, scaling, and operations of application containers across clusters of hosts, providing container-centric infrastructure.
- Capabilities:
  - Deploy your applications quickly and predictably
  - Scale your applications on the fly
  - Seamlessly roll out new features
  - Optimize use of your hardware by using only the resources you need
- Role: K8s sits in the Container as a Service (CaaS) or container orchestration layer.

[0] http://static.googleusercontent.com/media/research.google.com/de//pubs/archive/43438.pdf

[1] http://kubernetes.io/docs/whatisk8s/
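The capabilities listed on the slide map fairly directly onto fields of a Kubernetes Deployment object. The following is a minimal sketch, not taken from the slides: it builds a Deployment manifest as a plain Python dict and prints it as JSON. The helper names `build_deployment` and `scale`, the application name `web`, the image `nginx:1.25`, and the resource values are all illustrative assumptions, and the sketch assumes the current `apps/v1` API version rather than whatever version the deck was written against.

```python
import json


def build_deployment(name, image, replicas=3,
                     cpu_request="100m", memory_request="128Mi"):
    """Return an apps/v1 Deployment manifest as a plain dict (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # "Scale on the fly": the desired number of pod replicas.
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            # "Seamlessly roll out new features": replace pods gradually.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # "Use only the resources you need": the scheduler
                            # places pods based on these requests.
                            "resources": {
                                "requests": {
                                    "cpu": cpu_request,
                                    "memory": memory_request,
                                }
                            },
                        }
                    ]
                },
            },
        },
    }


def scale(manifest, replicas):
    """Return a deep copy of the manifest with a new replica count."""
    updated = json.loads(json.dumps(manifest))
    updated["spec"]["replicas"] = replicas
    return updated


if __name__ == "__main__":
    # Example values only; any name and container image would do.
    deployment = build_deployment("web", "nginx:1.25")
    print(json.dumps(deployment, indent=2))
```

Since `kubectl` accepts JSON as well as YAML, the printed manifest could in principle be piped into `kubectl apply -f -` against a test cluster. Changing the replica count or the image and re-applying is then how scaling and rollouts would be requested declaratively.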