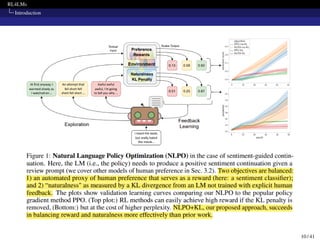

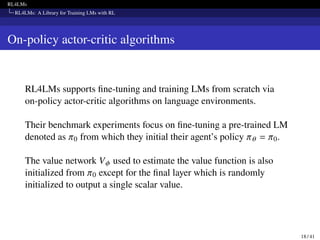

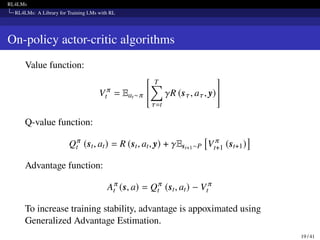

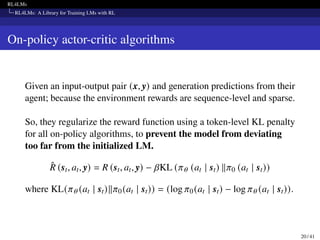

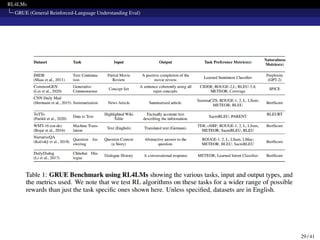

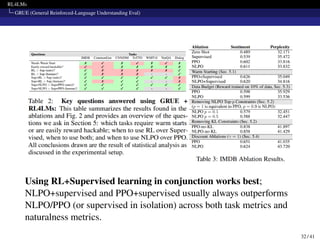

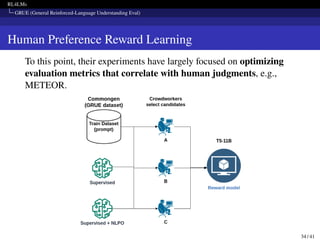

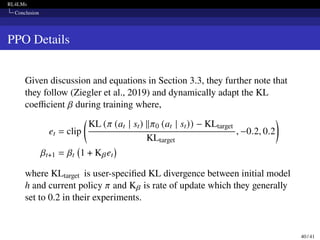

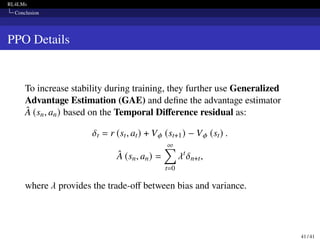

The document presents RL4LMs, an open-source library for training language models with reinforcement learning, which lets generative models be optimized directly with RL algorithms. It also introduces the GRUE (General Reinforced-language Understanding Evaluation) benchmark, which pairs NLP tasks with reward functions intended to capture human preferences, and the NLPO (Natural Language Policy Optimization) algorithm, which dynamically learns task-specific constraints that shrink the large action space of language generation. The goal is to facilitate research on RL methods that better align language models with human preferences.
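To make "learning constraints to reduce the action space" more concrete, here is a minimal sketch. It assumes the constraint takes the form of top-p (nucleus) masking: a separate masking policy, taken to be a periodically synced copy of the current policy, selects the smallest set of tokens whose cumulative probability reaches `top_p`. Every other token is then given zero probability when actions are sampled from the current policy. The function names `top_p_token_set` and `masked_policy_probs` are hypothetical and are not part of the RL4LMs API. The sketch also covers only a single decoding step over a toy vocabulary.

```python
import math
from typing import List, Set


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_token_set(masking_logits: List[float], top_p: float) -> Set[int]:
    """Hypothetical helper: indices of the smallest token set whose cumulative
    probability under the masking policy reaches top_p."""
    probs = softmax(masking_logits)
    # Visit tokens from most to least probable and stop once the nucleus is full.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep: Set[int] = set()
    cum = 0.0
    for i in order:
        keep.add(i)
        cum += probs[i]
        if cum >= top_p:
            break
    return keep


def masked_policy_probs(policy_logits: List[float],
                        masking_logits: List[float],
                        top_p: float) -> List[float]:
    """Hypothetical helper: zero out tokens outside the masking policy's top-p
    set, then renormalise the current policy over the remaining tokens."""
    keep = top_p_token_set(masking_logits, top_p)
    m = max(policy_logits[i] for i in keep)
    exps = [math.exp(policy_logits[i] - m) if i in keep else 0.0
            for i in range(len(policy_logits))]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Toy 5-token vocabulary. The masking policy's nucleus at top_p=0.9 is {0, 1, 2},
    # so tokens 3 and 4 are masked even though the current policy prefers them.
    masking = [2.0, 1.0, 0.5, -1.0, -3.0]
    policy = [0.0, 0.0, 0.0, 5.0, 5.0]
    print(masked_policy_probs(policy, masking, top_p=0.9))
```

Because the mask comes from a separate, less frequently updated policy rather than from the current one, the set of allowed tokens stays relatively stable between syncs. This is one plausible reading of how a learned, task-specific constraint could keep exploration focused. It is not the reference implementation.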