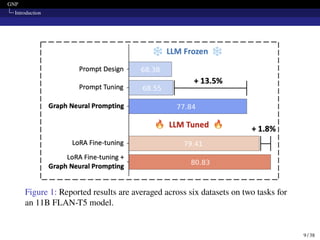

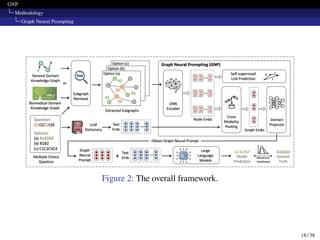

The document presents Graph Neural Prompting (GNP), a novel plug-and-play method for enhancing large language models (LLMs) with beneficial knowledge learned from knowledge graphs (KGs). It addresses two challenges of combining KGs with LLMs: the noise introduced when raw KG content is fed to the model, and the complexity of customized architectures that jointly train on text and KGs. Through experiments on commonsense and biomedical reasoning tasks, the authors show that GNP improves LLM performance.

![GNP, slide 6 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-6-320.jpg)

Introduction

Knowledge graphs (KGs) store an enormous number of facts and serve as a systematic way of representing knowledge [2].

Existing methods [4] have incorporated KGs into language models by designing customized model architectures that accommodate both textual data and KGs.

Jointly training such a model becomes challenging because of the large parameter size of the language model.

![Slide 7 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-7-320.jpg)

Introduction (Cont.)

A direct way to combine the benefits of KGs and language models is to feed KG triples¹ into LLMs [1].

However, this method can introduce substantial noise, since KGs may contain various extraneous contexts.

Can we learn beneficial knowledge from KGs and integrate it into pre-trained LLMs?

¹ KG triples are structured data elements consisting of a subject, a predicate, and an object, representing relationships between entities in a knowledge graph.
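To illustrate the direct approach attributed to [1], the sketch below verbalizes KG triples and prepends them to the question. It is a minimal sketch, assuming a hypothetical `(subject, predicate, object)` line format and a hypothetical prompt template. Neither is taken from [1] or from GNP. The last example triple is deliberately unrelated to the question, showing how extraneous KG context ends up in the prompt as noise.

```python
from typing import List, Tuple

# A KG triple: (subject, predicate, object).
Triple = Tuple[str, str, str]


def verbalize_triples(triples: List[Triple]) -> str:
    """Turn each (subject, predicate, object) triple into one line of text."""
    return "\n".join(f"({s}, {p}, {o})" for s, p, o in triples)


def build_prompt(question: str, triples: List[Triple]) -> str:
    """Prepend the verbalized triples to the question (hypothetical template)."""
    facts = verbalize_triples(triples)
    return f"Facts:\n{facts}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    triples = [
        ("ice", "is_a", "frozen water"),
        ("frozen water", "has_property", "cold"),
        ("Paris", "capital_of", "France"),  # unrelated to the question: noise
    ]
    print(build_prompt("Why does ice feel cold?", triples))
```

Nothing in this template filters out irrelevant triples. That is the motivation for learning which KG knowledge is beneficial instead of feeding triples in verbatim.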

![Slide 15 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-15-320.jpg)

Prompting LLMs for Question Answering

The steps are:

1. Tokenize the concatenation of the context C, the question Q, and the answer options A into a sequence of input text tokens X.
2. Design a series of prompt tokens P and prepend them to the input tokens X. Feed [P, X] to the LLM to generate the prediction $y' = f([P, X])$.
3. Train the model for downstream task adaptation with the standard maximum likelihood objective, using teacher forcing and the cross-entropy loss $\mathcal{L}_{\text{llm}} = -\log p(y \mid X, \Theta)$.
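The three steps can be sketched in plain Python under heavy simplifications. The frozen LLM f is replaced by a hypothetical `toy_llm_logits` that mean-pools its input vectors and projects them to vocabulary logits. The answer y is a single token, so teacher forcing reduces to one cross-entropy term. Only P is assumed trainable in this sketch, so the loss is conditioned on the prompted input [P, X]. Gradients and the optimizer are omitted, and all names are illustrative.

```python
import math
from typing import List

Vector = List[float]


def embed(token_ids: List[int], table: List[Vector]) -> List[Vector]:
    """Look up the embedding of each input token in X."""
    return [table[i] for i in token_ids]


def toy_llm_logits(inputs: List[Vector], w_out: List[Vector]) -> Vector:
    """Hypothetical stand-in for the frozen LLM f: mean-pool, then project to vocab logits."""
    dim = len(inputs[0])
    pooled = [sum(vec[d] for vec in inputs) / len(inputs) for d in range(dim)]
    return [sum(w[d] * pooled[d] for d in range(dim)) for w in w_out]


def log_softmax(logits: Vector) -> Vector:
    """Numerically stable log-softmax over the vocabulary."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def prompt_tuning_loss(prompt: List[Vector], token_ids: List[int], target: int,
                       table: List[Vector], w_out: List[Vector]) -> float:
    """Cross-entropy -log p(y | [P, X]) for a single target token y."""
    x = embed(token_ids, table)
    inputs = prompt + x  # prepend the prompt vectors P to the token embeddings X
    logits = toy_llm_logits(inputs, w_out)
    return -log_softmax(logits)[target]


if __name__ == "__main__":
    table = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3-token vocabulary, dimension 2
    w_out = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
    prompt = [[0.2, -0.1]]  # one trainable prompt vector (P)
    print(prompt_tuning_loss(prompt, [0, 2], target=0, table=table, w_out=w_out))
```

In real prompt tuning, P is optimized by backpropagating this loss through the frozen model while the LLM weights stay fixed.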

![Slide 22 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-22-320.jpg)

Graph Neural Prompting: Cross-modality Pooling (Cont.)

Then, they calculate the cross-modality attention using $H_2$ and $T'$:

$$H_3 = \mathrm{softmax}\left[\frac{H_2 \cdot (T')^{\top}}{\sqrt{d_g}}\right] \cdot T'$$

where $T' = \mathrm{FFN}_1(\sigma(\mathrm{FFN}_2(T)))$ and $\sigma$ is the GELU activation function.

Finally, they generate the graph-level embedding by average pooling the node embeddings $H_3$ in $G'$:

$$H_4 = \mathrm{POOL}(H_3)$$
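The attention and pooling steps can be traced with plain lists. This is a minimal sketch, assuming H2 is an n × d_g matrix of node embeddings and T is an m × d_t matrix of text embeddings. It also assumes FFN1 and FFN2 are each a single bias-free linear layer (hypothetical weights `w1`, `w2`). Each node attends over the transformed text tokens T′, and POOL is the average over nodes.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax, so each node's attention weights sum to 1."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def cross_modality_pool(h2: Matrix, t: Matrix, w2: Matrix, w1: Matrix) -> List[float]:
    # T' = FFN1(GELU(FFN2(T))), with each FFN assumed to be one linear layer.
    hidden = [[gelu(v) for v in row] for row in matmul(t, w2)]
    t_prime = matmul(hidden, w1)
    d_g = len(h2[0])
    # H3 = softmax(H2 T'^T / sqrt(d_g)) T'
    scores = [[s / math.sqrt(d_g) for s in row] for row in matmul(h2, transpose(t_prime))]
    h3 = matmul(softmax_rows(scores), t_prime)
    # H4 = POOL(H3): average the node embeddings.
    n = len(h3)
    return [sum(row[j] for row in h3) / n for j in range(d_g)]
```

For example, with a single text token every node attends to it with weight 1, so H4 equals that token's row of T′.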

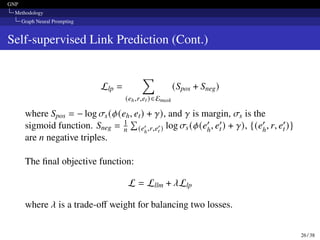

![Slide 24 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-24-320.jpg)

Graph Neural Prompting: Self-supervised Link Prediction

In this section, they design an objective function that enables the model to learn and adapt to the target dataset.

They mask some edges of $G'$ and train the model to predict them; the set of masked-out edges is denoted $E_{\text{mask}} \subseteq E$.

They adopt DistMult [3], a widely used knowledge graph embedding method, to map the entities and relations in the KG to vectors $h$, $r$, $t$, where the entity embeddings are taken from $H_3$.
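The sketch below illustrates edge masking and DistMult scoring. DistMult [3] scores a triple by the trilinear product of h, r, and t (equivalently h^T diag(r) t). The loss is an assumption: a standard negative-sampling logistic loss that pushes masked (true) edges toward high scores and randomly corrupted tails toward low scores. The objective actually used by GNP may differ in its details. Node embeddings are assumed to be rows of H3 keyed by node id. The names `mask_edges` and `link_prediction_loss`, the masking ratio, and `num_neg` are hypothetical.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Edge = Tuple[int, str, int]  # (head node id, relation, tail node id)


def mask_edges(edges: Sequence[Edge], ratio: float, seed: int = 0) -> Tuple[List[Edge], List[Edge]]:
    """Split edges into (kept, masked); the masked list plays the role of E_mask."""
    rng = random.Random(seed)
    shuffled = list(edges)
    rng.shuffle(shuffled)
    k = int(len(shuffled) * ratio)
    return shuffled[k:], shuffled[:k]


def distmult(h: List[float], r: List[float], t: List[float]) -> float:
    """DistMult score: the trilinear product sum_i h_i * r_i * t_i."""
    return sum(a * b * c for a, b, c in zip(h, r, t))


def log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)).
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def link_prediction_loss(masked: List[Edge], node_emb: Dict[int, List[float]],
                         rel_emb: Dict[str, List[float]], num_neg: int = 2,
                         seed: int = 0) -> float:
    """Assumed negative-sampling logistic loss, averaged over masked edges."""
    rng = random.Random(seed)
    nodes = list(node_emb)
    total = 0.0
    for h, r, t in masked:
        # Positive term: the masked edge should score high.
        total -= log_sigmoid(distmult(node_emb[h], rel_emb[r], node_emb[t]))
        for _ in range(num_neg):
            # Corrupt the tail with a random node (may occasionally hit the true tail).
            t_neg = rng.choice(nodes)
            total -= log_sigmoid(-distmult(node_emb[h], rel_emb[r], node_emb[t_neg]))
    return total / max(len(masked), 1)
```

Because DistMult's score is symmetric in h and t, it cannot distinguish a relation from its inverse. It is still a cheap, common choice for scoring edges.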

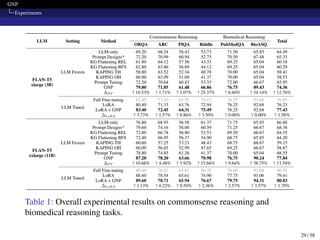

![Slide 31 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-31-320.jpg)

Experiments: Model Design Comparison

For prompt tuning, they use a dataset-level prompt (DLP). For modeling relations, they use a Relational GNN (RGNN) [5].

Table 3: Results of integrating different model designs.

![Slide 37 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-37-320.jpg)

References

[1] Jinheon Baek, Alham Fikri Aji, et al. “Knowledge-Augmented Language Model Prompting for Zero-Shot Knowledge Graph Question Answering”. In: Proceedings of the 1st Workshop on Natural Language Reasoning and Structured Explanations (NLRSE). Association for Computational Linguistics, 2023, pp. 78–106.

[2] Shaoxiong Ji, Shirui Pan, et al. “A Survey on Knowledge Graphs: Representation, Acquisition, and Applications”. In: IEEE Transactions on Neural Networks and Learning Systems (2022), pp. 494–514.

![Slide 38 / 38](https://image.slidesharecdn.com/20240604-240528061224-750087f6/85/Graph-Neural-Prompting-with-Large-Language-Models-pdf-38-320.jpg)

[3] Bishan Yang, Scott Wen-tau Yih, et al. “Embedding Entities and Relations for Learning and Inference in Knowledge Bases”. In: Proceedings of the International Conference on Learning Representations (ICLR). 2015.

[4] Michihiro Yasunaga, Antoine Bosselut, et al. “Deep Bidirectional Language-Knowledge Graph Pretraining”. In: Advances in Neural Information Processing Systems. 2022, pp. 37309–37323.

[5] Xikun Zhang et al. “GreaseLM: Graph REASoning Enhanced Language Models”. In: International Conference on Learning Representations. 2021.