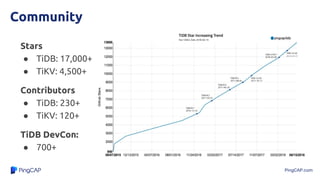

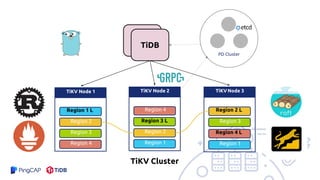

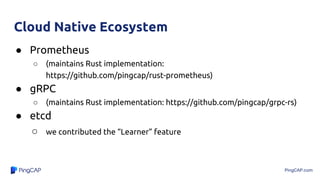

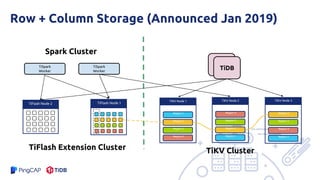

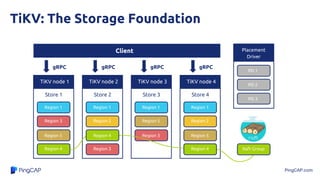

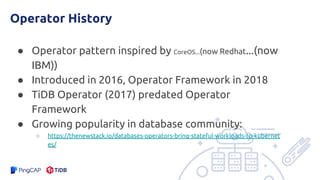

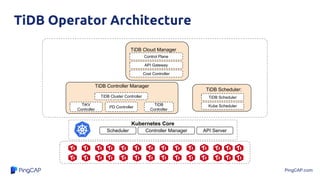

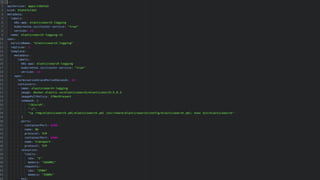

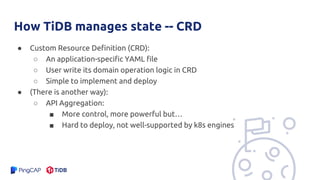

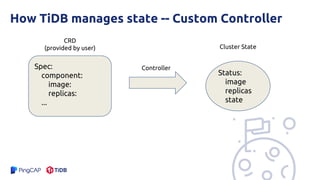

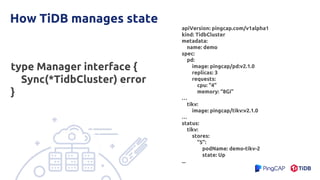

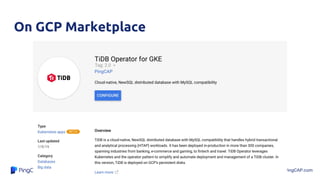

This presentation covers the history and architecture of TiDB, a NewSQL database developed by PingCAP. It highlights TiDB's growing adoption, with over 300 deployments, and its cloud-native features, including state management through the operator pattern and a custom resource definition for managing clusters. It also outlines how TiDB is used across various applications, emphasizing its scalability and strong consistency.