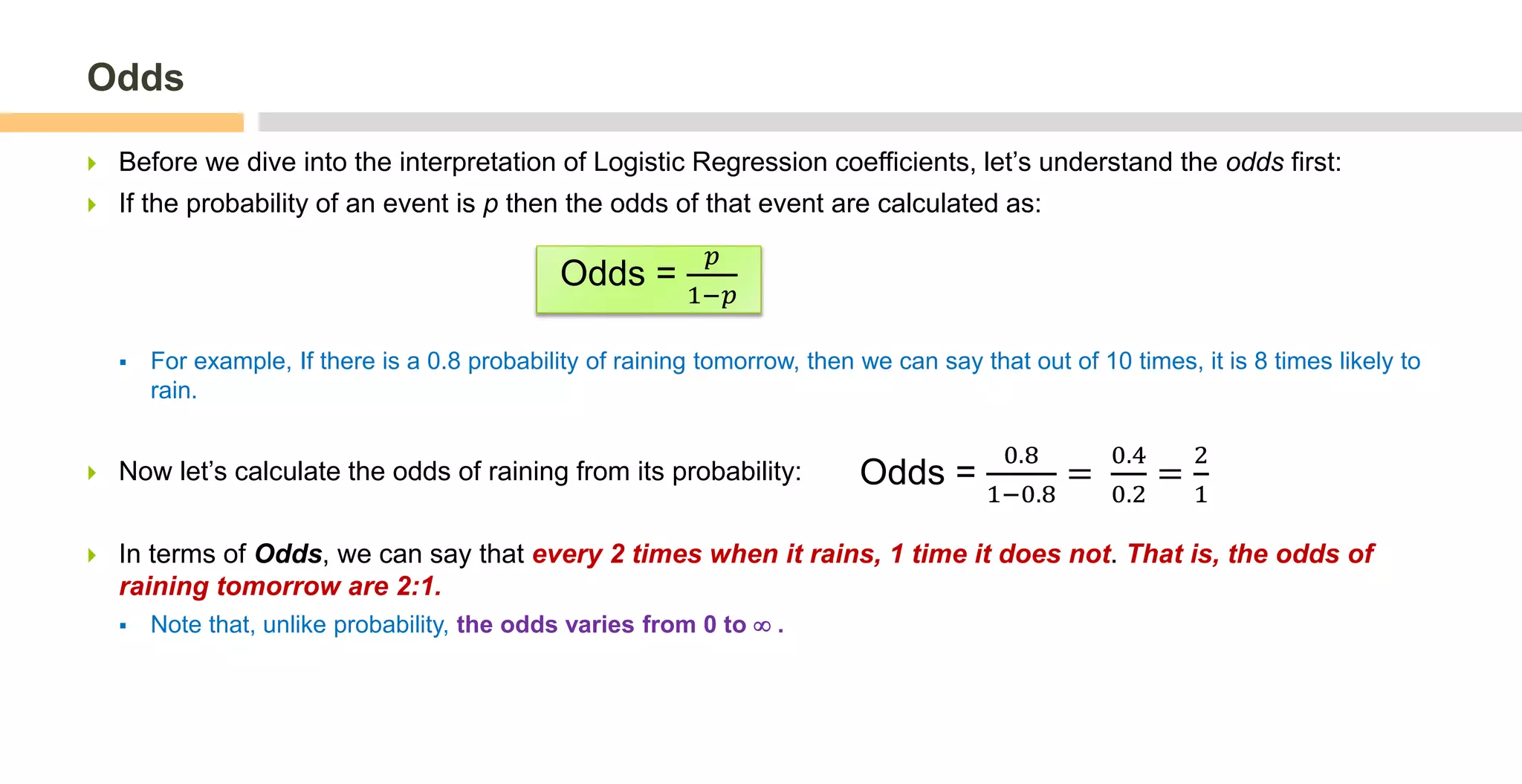

This document discusses the interpretation of coefficients in linear and logistic regression models.

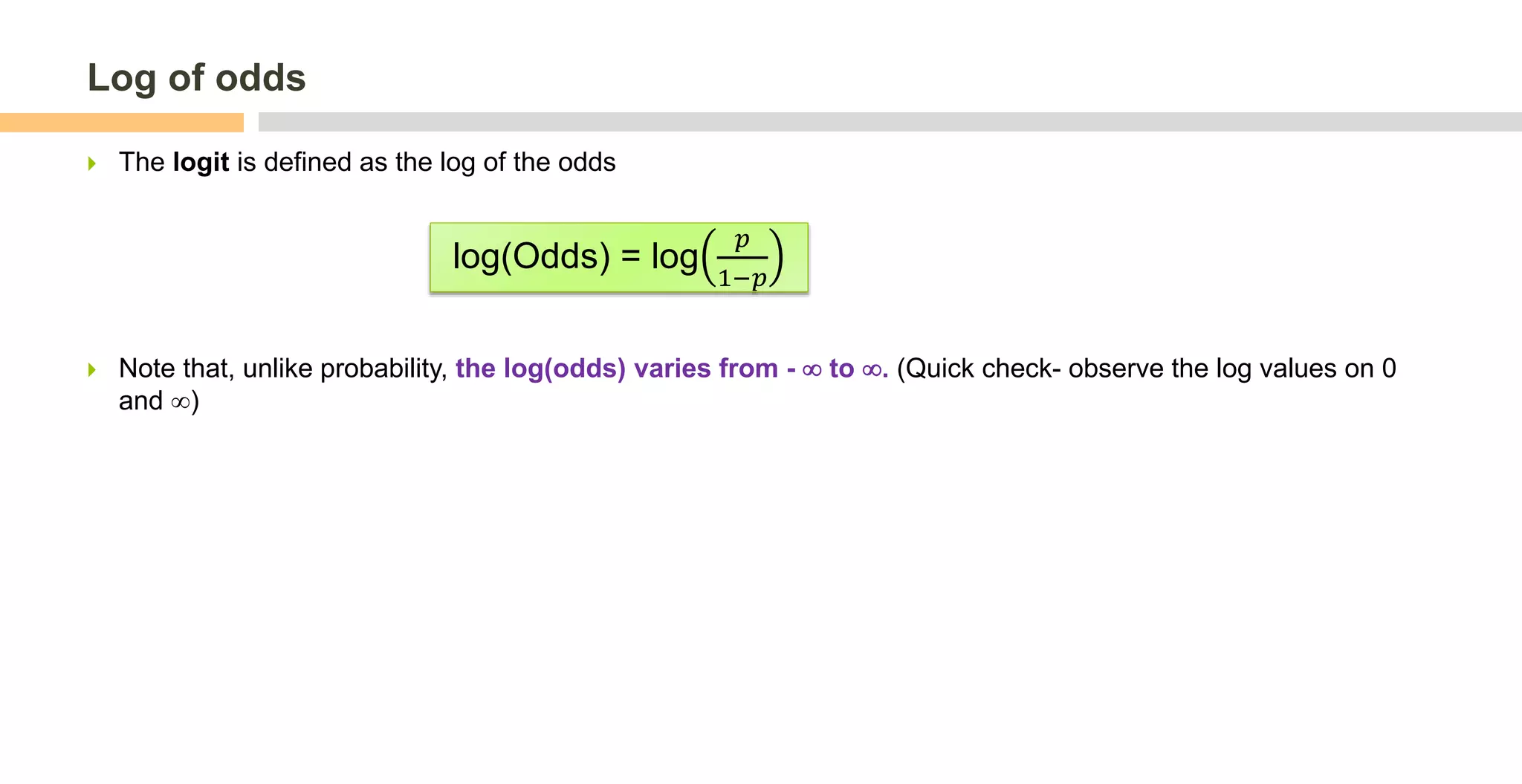

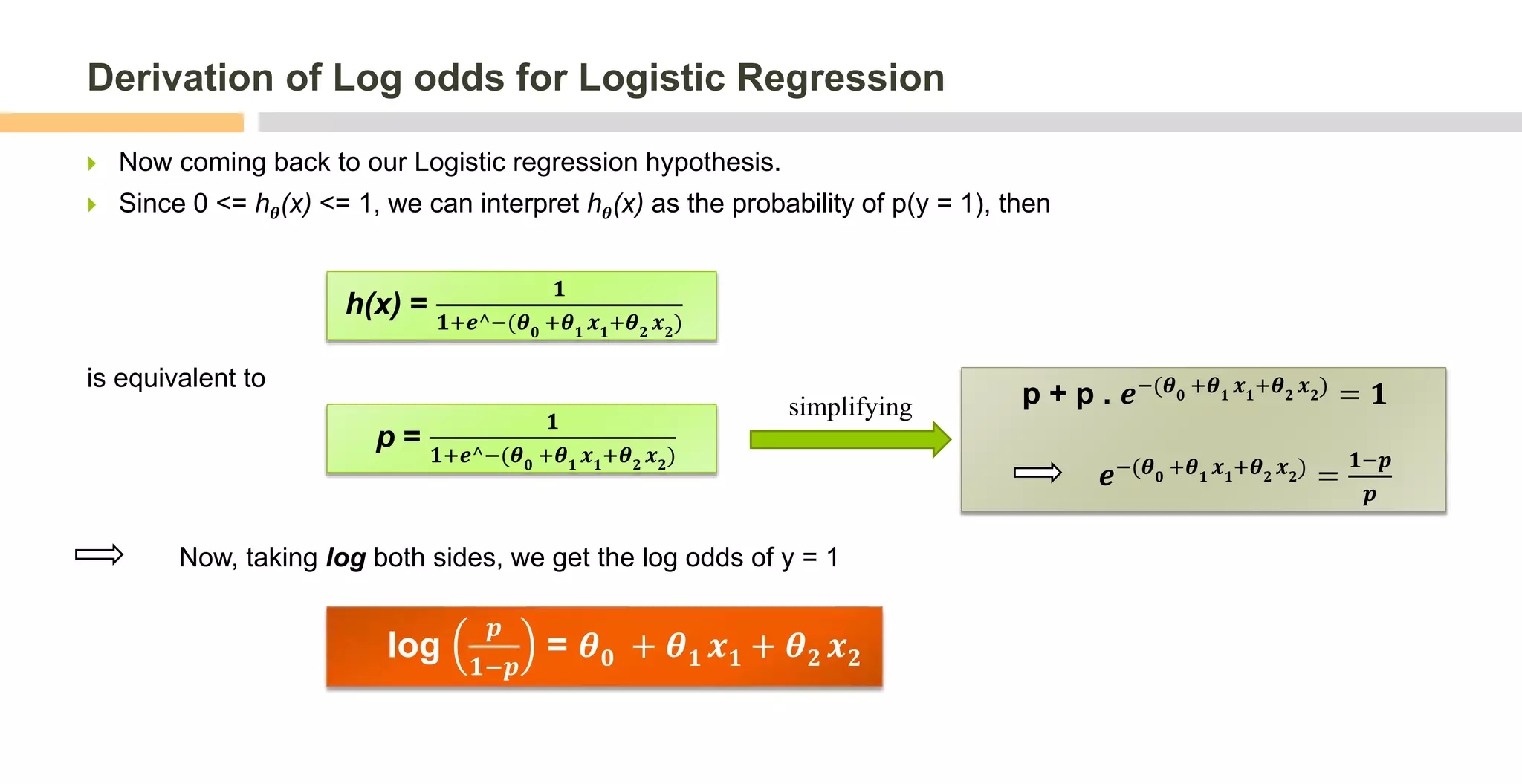

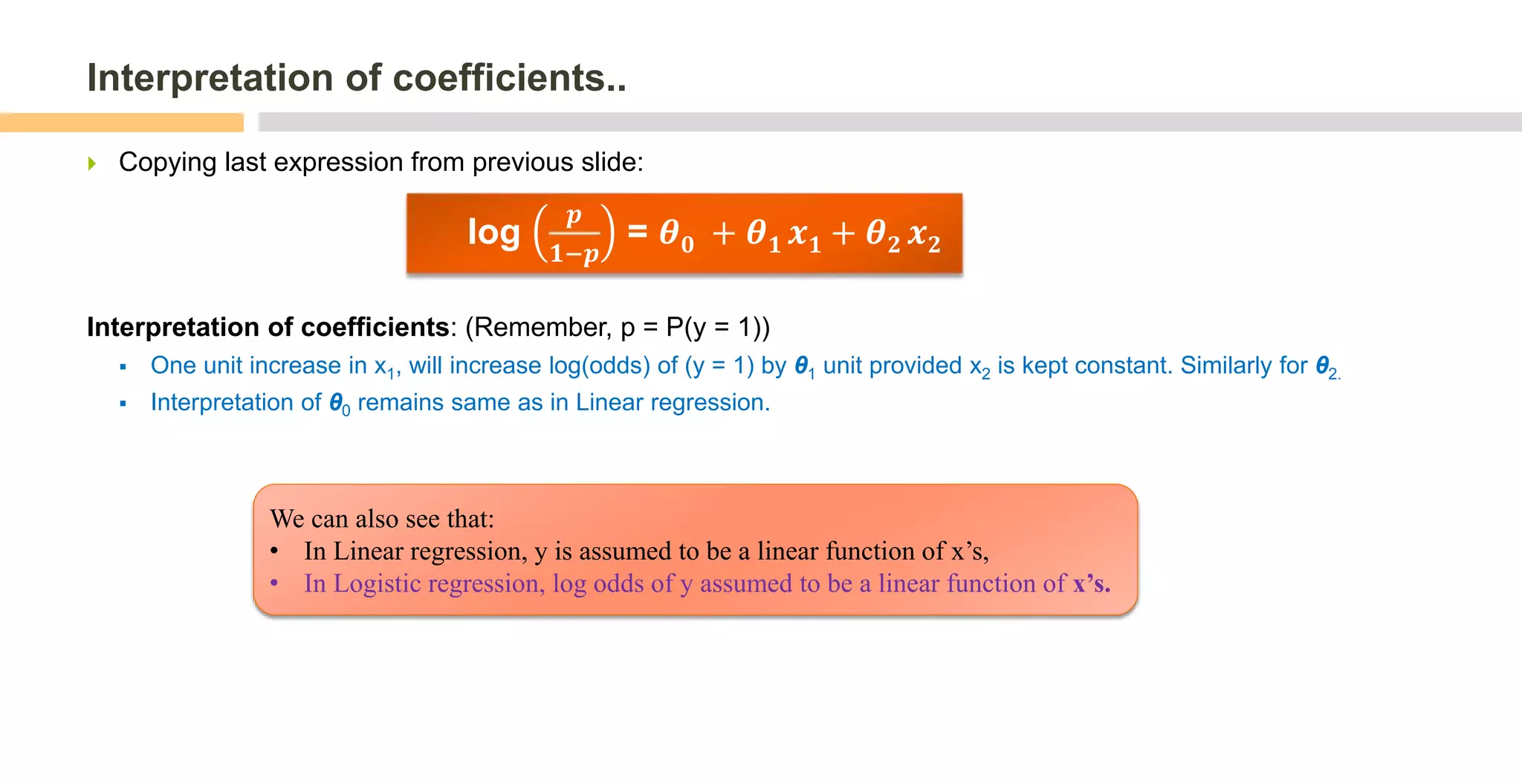

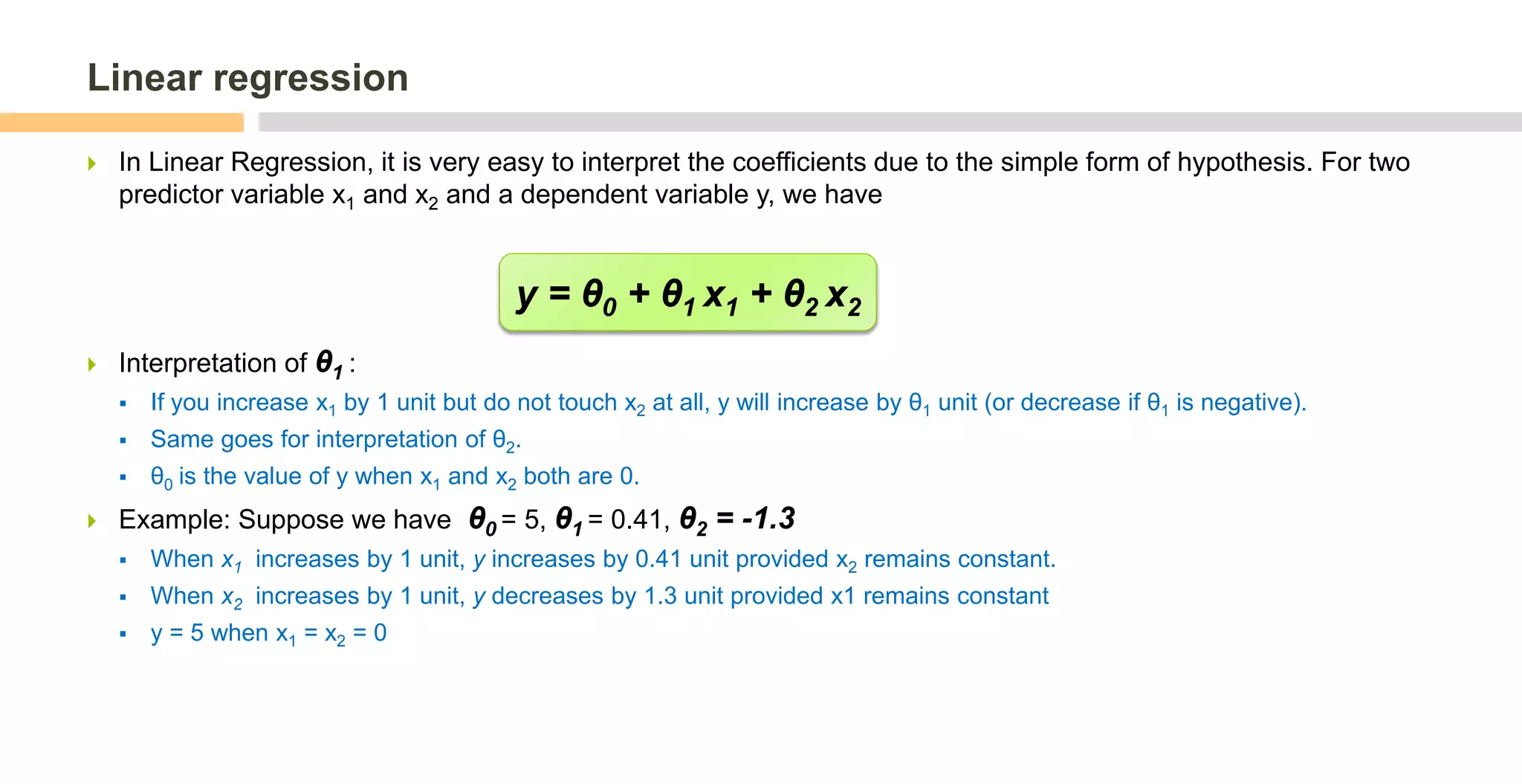

For linear regression, each coefficient is the expected change in the response for a one-unit increase in the corresponding predictor, with the other predictors held fixed. For logistic regression, each coefficient is the change in the log odds of the response being 1 for a one-unit increase in the corresponding predictor. Exponentiating the linear combination of coefficients and predictors gives the odds, which convert to a probability between 0 and 1 as odds / (1 + odds); this is the same as applying the sigmoid function to the linear combination.
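The chain from log odds to odds to probability can be checked numerically. The following is a minimal sketch; the coefficient values and predictor values are made up purely for illustration and do not come from the slides.

```python
import math


def sigmoid(z: float) -> float:
    """Map a real-valued log odds z to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical coefficients (theta0 is the intercept) and predictor values.
theta0, theta1, theta2 = -1.0, 0.5, 0.25
x1, x2 = 2.0, 4.0

# The linear combination is the log odds of the response being 1.
log_odds = theta0 + theta1 * x1 + theta2 * x2

# Exponentiating the log odds gives the odds.
odds = math.exp(log_odds)

# Odds convert to a probability as odds / (1 + odds),
# which matches applying the sigmoid to the log odds.
prob_from_odds = odds / (1.0 + odds)
prob_from_sigmoid = sigmoid(log_odds)

# Raising x1 by one unit adds theta1 to the log odds,
# so the odds are multiplied by exp(theta1).
odds_after = math.exp(theta0 + theta1 * (x1 + 1.0) + theta2 * x2)
odds_ratio = odds_after / odds

print(log_odds, odds, prob_from_odds, prob_from_sigmoid, odds_ratio)
```

The last quantity shows why `exp(theta1)` is usually read as an odds ratio: it is the factor by which the odds change per one-unit increase in `x1`, regardless of the starting values of the predictors.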

![Logistic regression

In logistic regression, we pass the linear regression hypothesis (θ0 + θ1 x1 + θ2 x2) through the sigmoid function to squash it into [0, 1].

Now we have 0 ⩽ hθ(x) ⩽ 1.

Prediction

We set a "threshold" at 0.5 (a better threshold can also be derived from the ROC curve) and:

- if hθ(x) ⩾ 0.5, we predict y = 1
- if hθ(x) < 0.5, we predict y = 0

hθ(x) = 1 / (1 + e^(−(θ0 + θ1 x1 + θ2 x2)))

Image source: saedsayad.com

Because the process of getting y from the x’s is complex, we cannot easily interpret the effect of a change in the x’s on y. Therefore, we try to simplify this expression.](https://image.slidesharecdn.com/interpretationofcoefficients-linearlogisticregressionbyankitsharma-181213154139/75/Interpretation-of-coefficients-Linear-and-Logistic-regression-3-2048.jpg)
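The hypothesis and the 0.5 threshold rule from the slide can be sketched in a few lines. This is an illustrative sketch only: the function names `hypothesis` and `predict` and the coefficient values are assumptions chosen for the example, and the code is not hardened against overflow for very large negative inputs.

```python
import math


def hypothesis(theta, x):
    """Sigmoid of theta[0] + theta[1]*x[0] + theta[2]*x[1] + ..."""
    z = theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def predict(theta, x, threshold=0.5):
    """Predict y = 1 when the hypothesis reaches the threshold, else y = 0."""
    return 1 if hypothesis(theta, x) >= threshold else 0


# Hypothetical coefficients (theta0, theta1, theta2), for illustration only.
theta = (-3.0, 1.0, 1.0)

above = predict(theta, (2.0, 2.0))     # linear part is  1.0, so h > 0.5
below = predict(theta, (1.0, 1.0))     # linear part is -1.0, so h < 0.5
boundary = predict(theta, (1.5, 1.5))  # linear part is  0.0, so h = 0.5

print(above, below, boundary)
```

With the threshold at 0.5, the prediction flips exactly where the linear part crosses zero, so the decision boundary is the line θ0 + θ1 x1 + θ2 x2 = 0. A different threshold, for example one chosen from an ROC curve, can be passed through the `threshold` argument.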