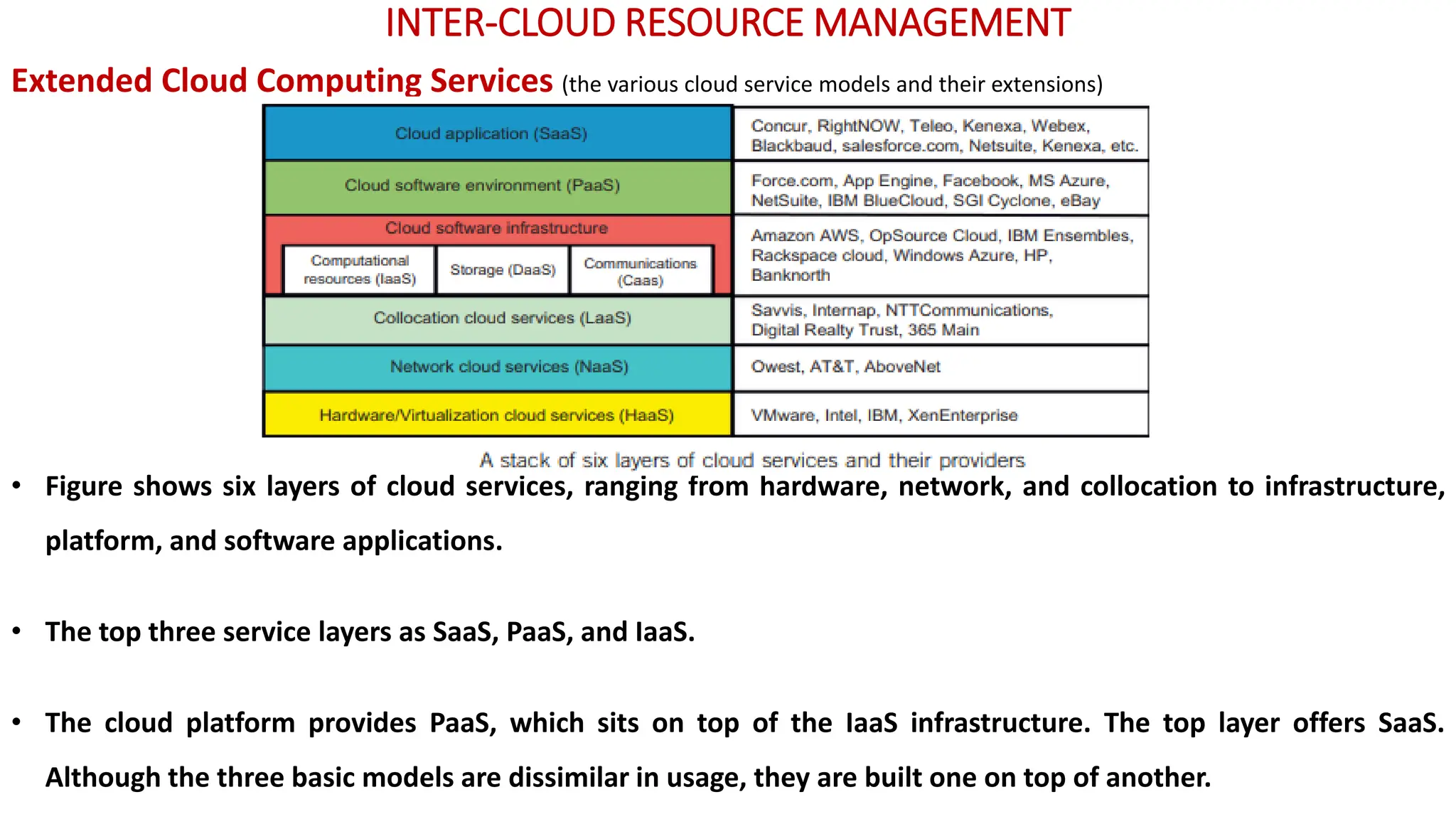

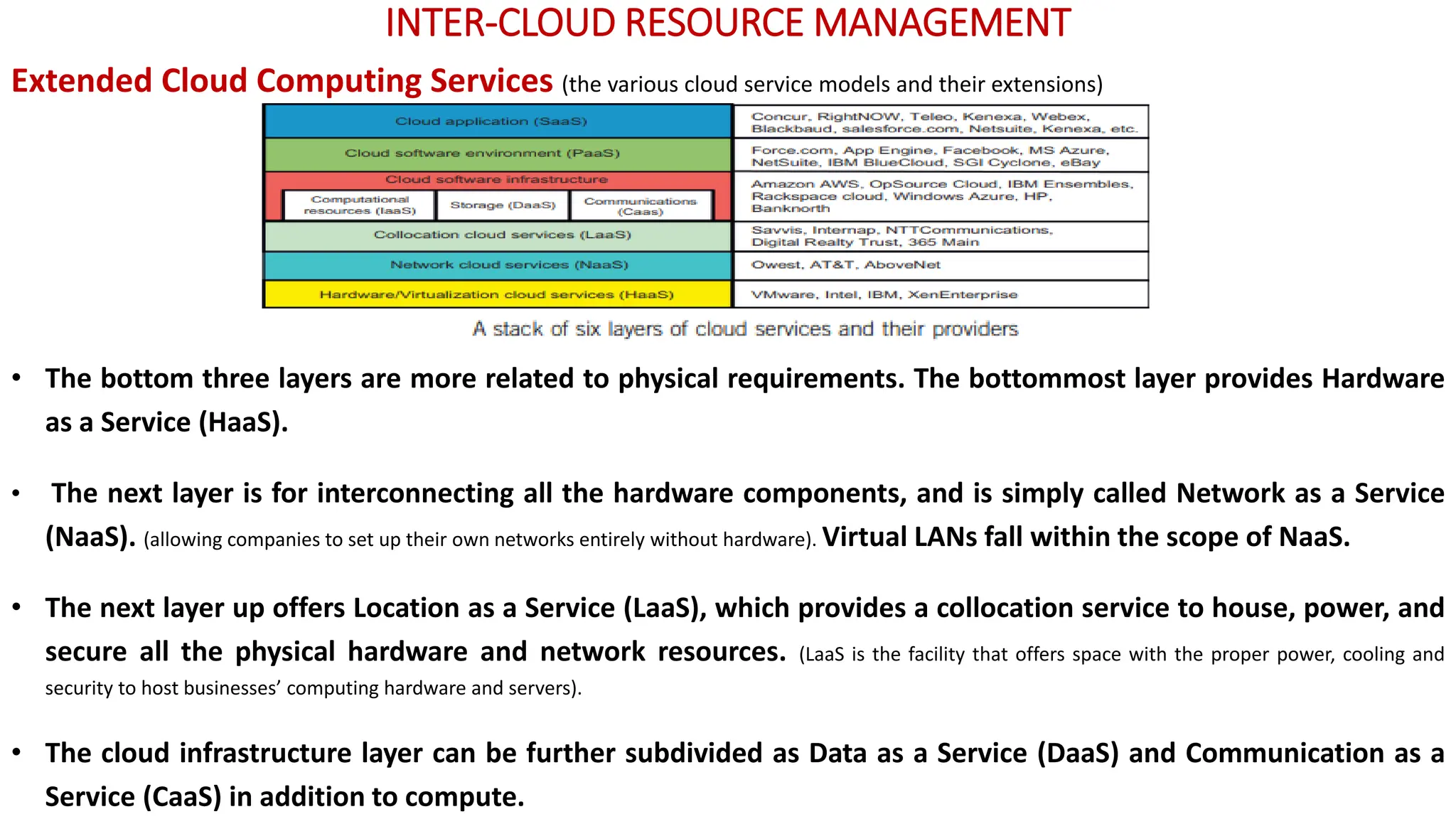

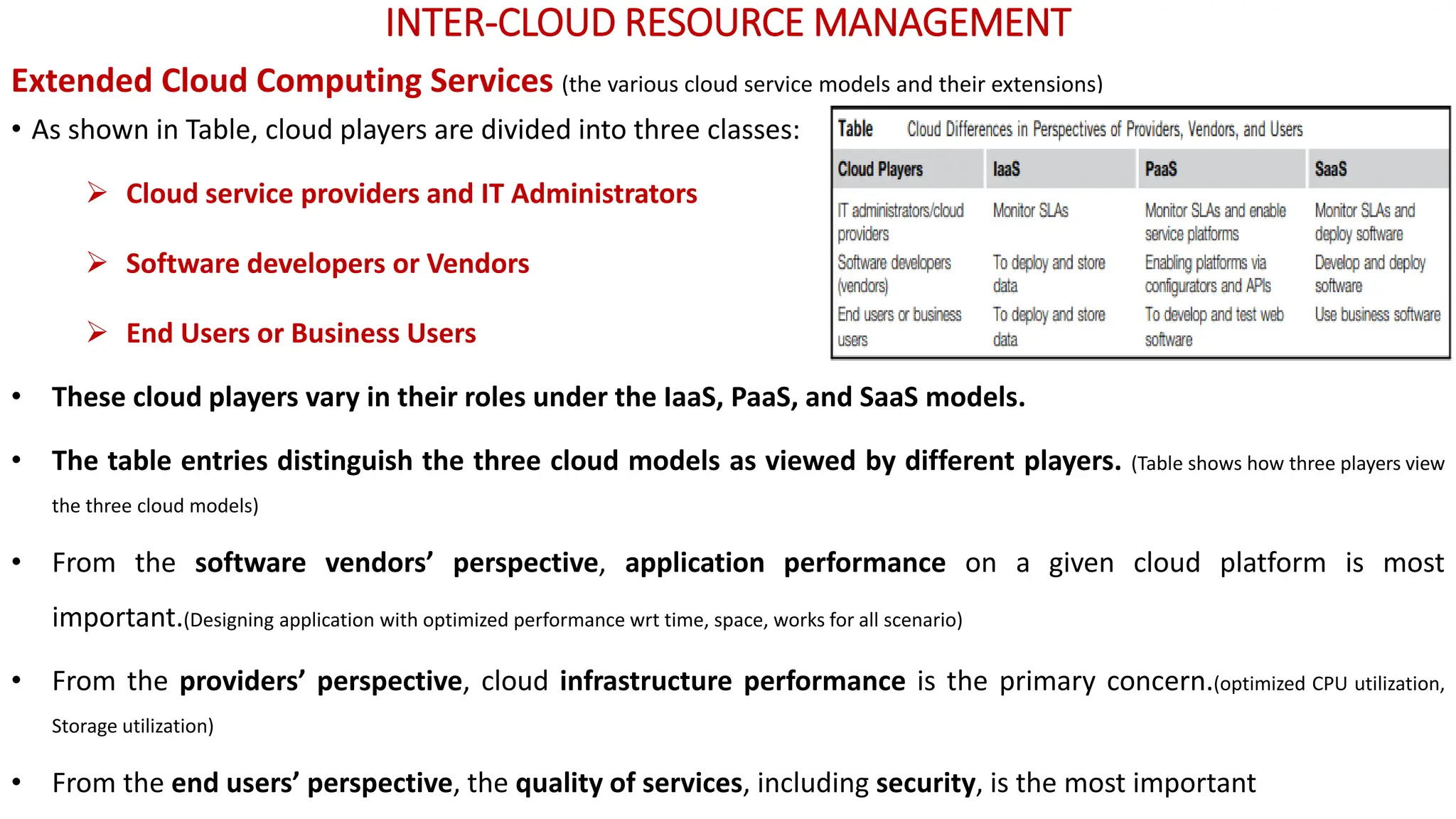

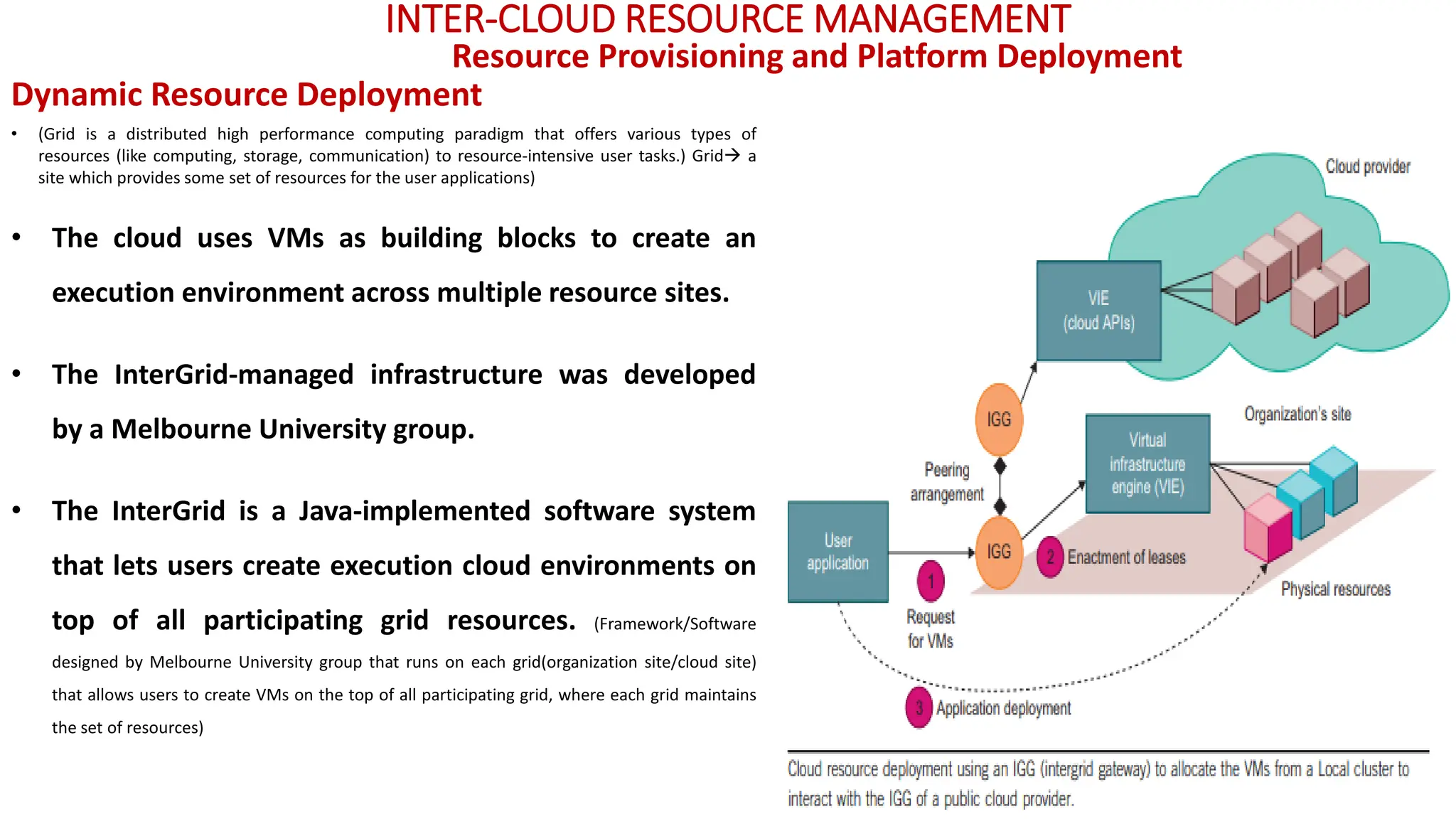

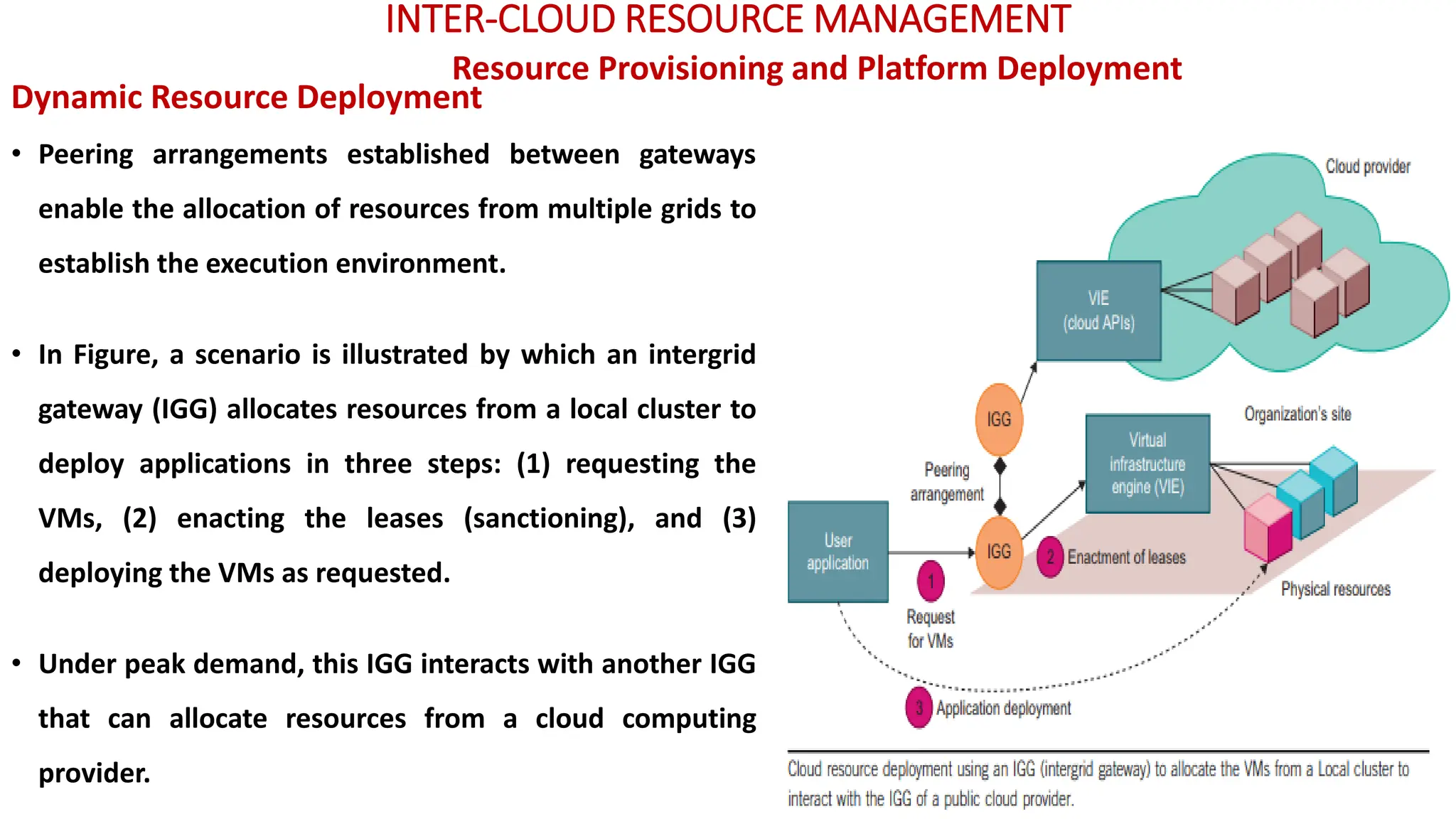

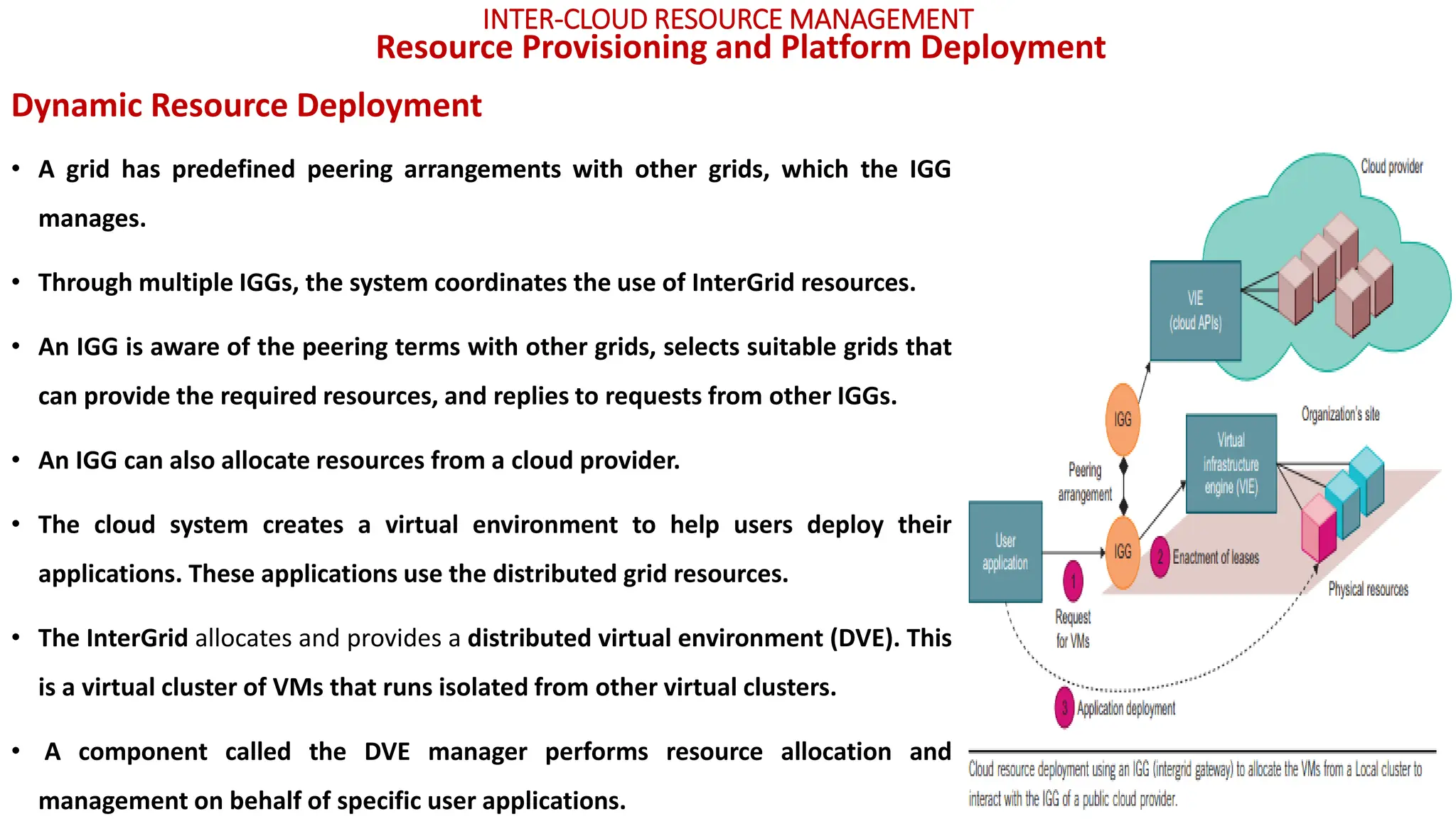

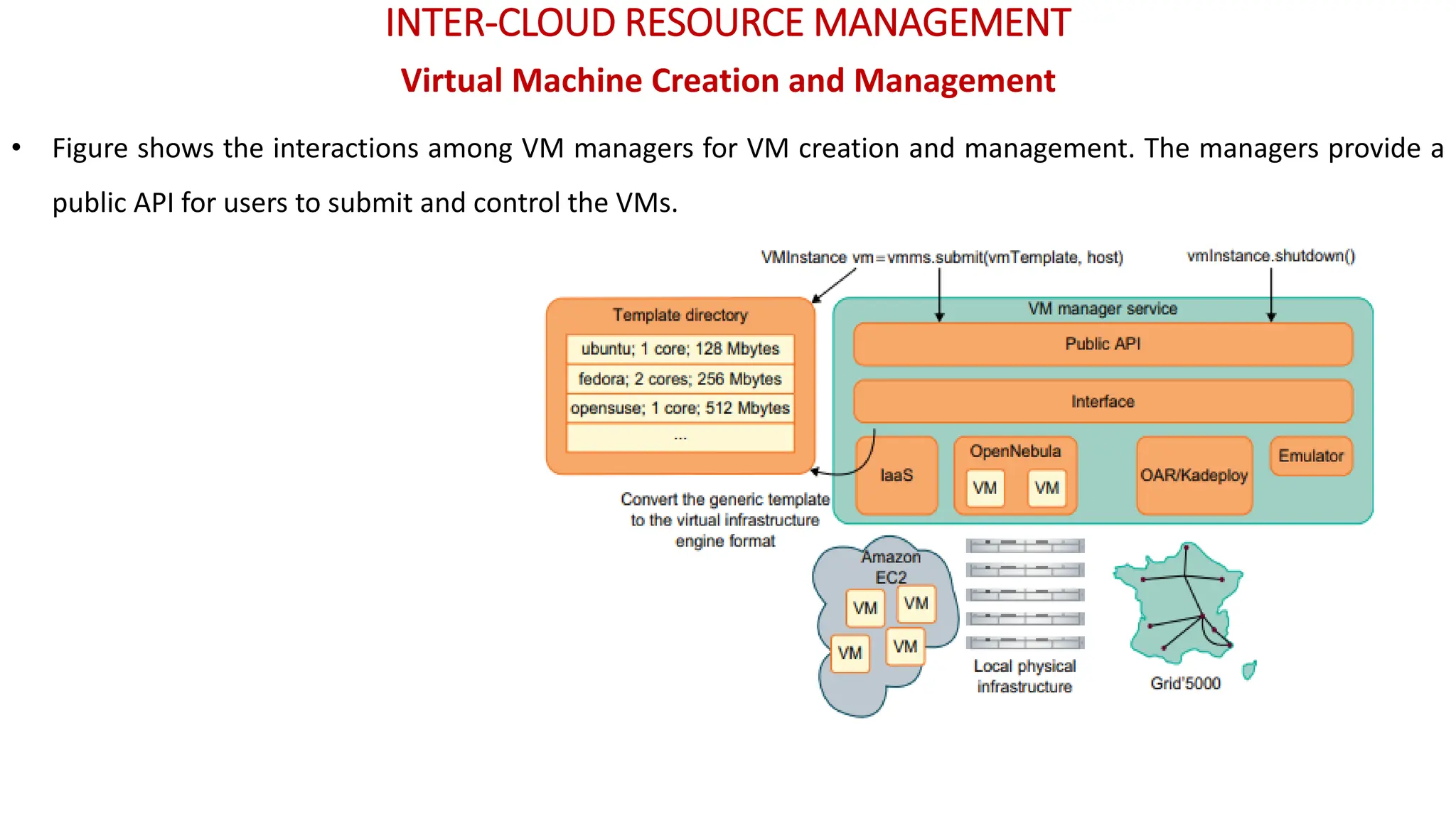

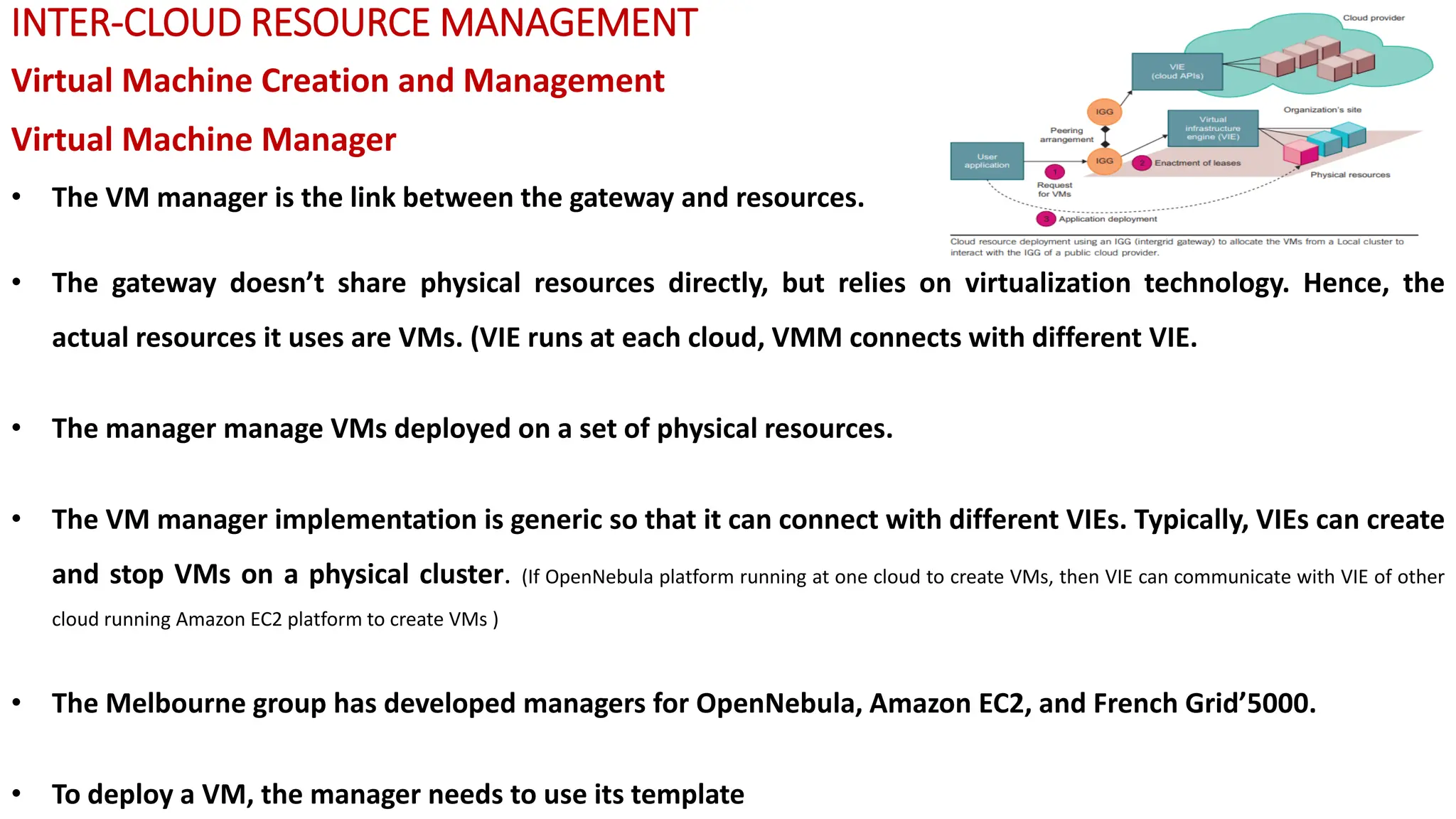

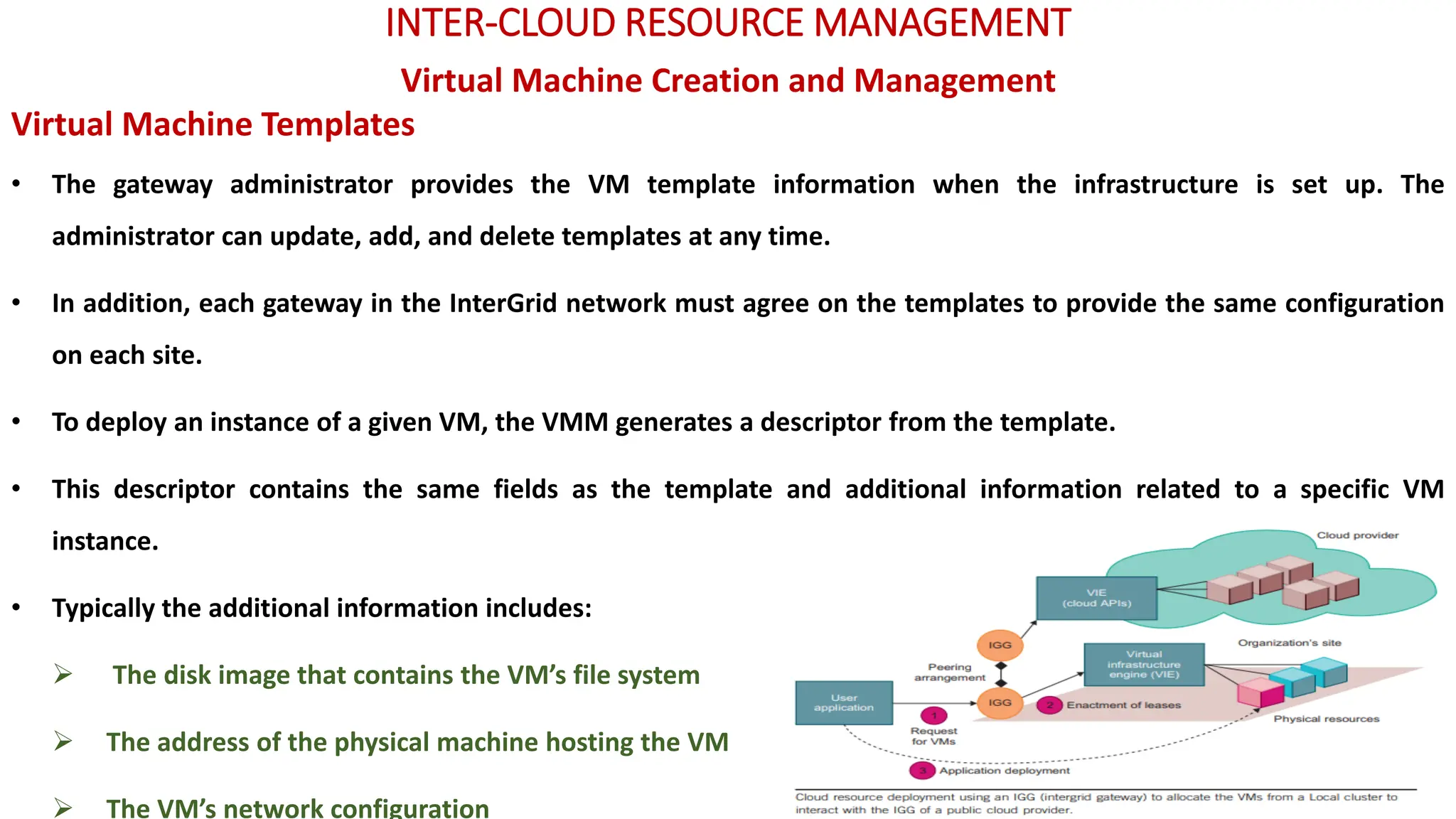

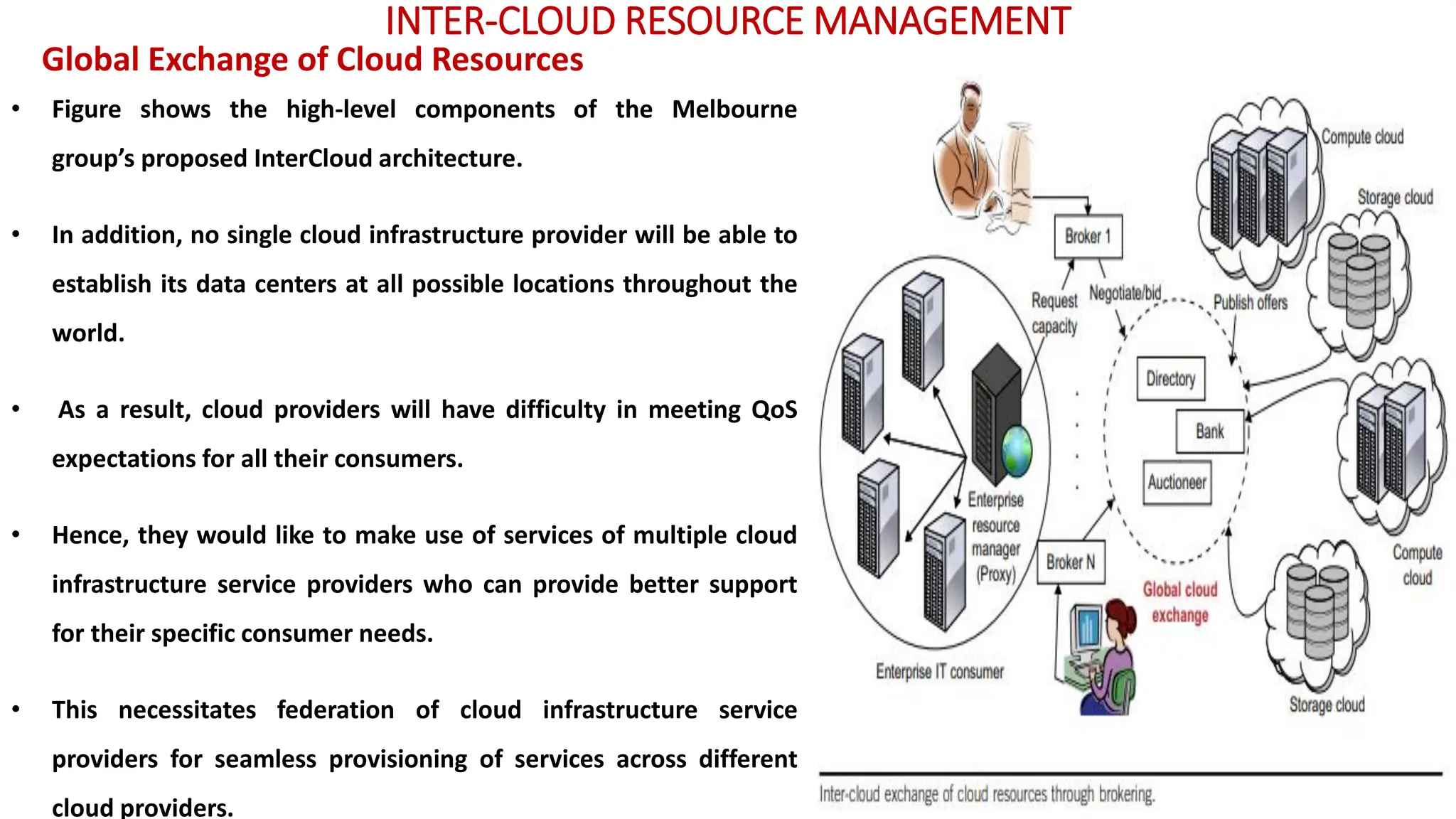

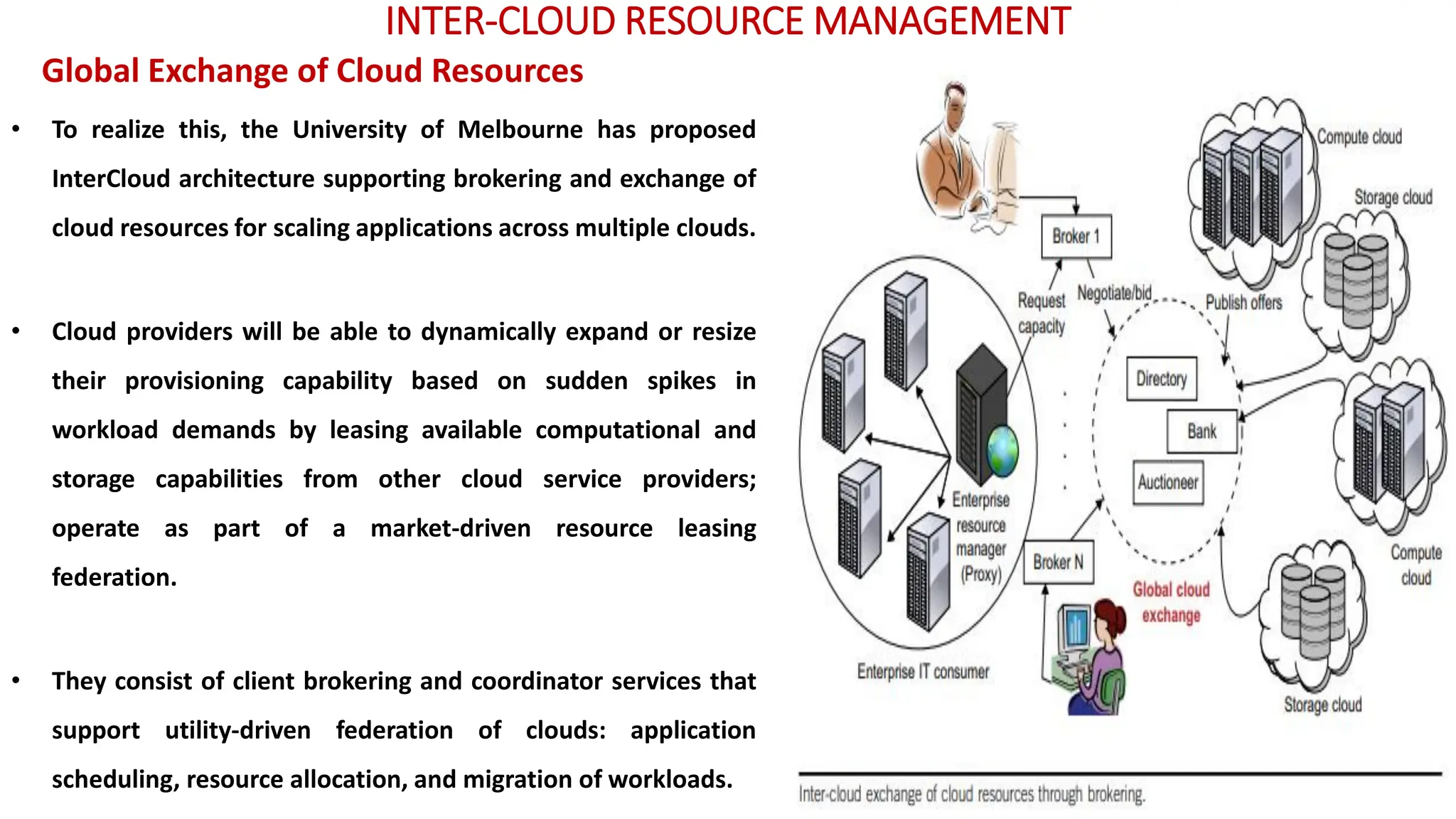

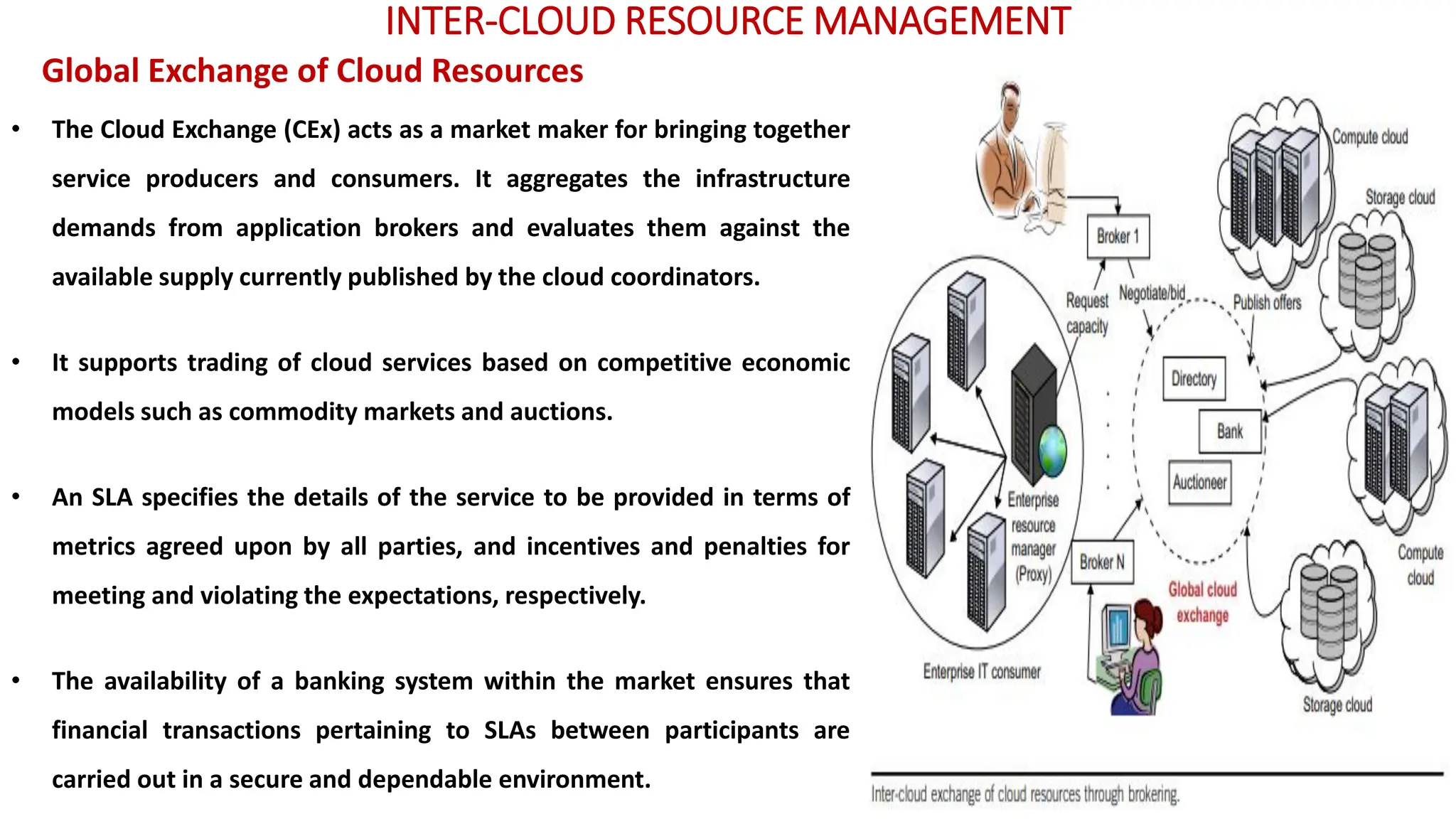

The document discusses inter-cloud resource management within extended cloud computing services. It describes the cloud service models (IaaS, PaaS, SaaS) and their hierarchical relationships, then explains the infrastructure layers, resource provisioning methods, VM management, and the global exchange of cloud resources, emphasizing the need for optimal performance and for meeting quality-of-service (QoS) expectations. It presents provisioning methods that allocate resources according to demand, time events, and application popularity, and it covers the challenges of maintaining service level agreements (SLAs).
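
The three provisioning triggers named above (demand, time events, application popularity) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration and is not taken from the source: the `Pool` type, the function names, the utilization thresholds, the peak-hour set, and the requests-per-node figure are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pool:
    """Hypothetical pool of VM nodes with fixed lower and upper bounds."""
    nodes: int
    min_nodes: int = 1
    max_nodes: int = 10


def clamp(pool: Pool, target: int) -> int:
    """Keep a proposed node count within the pool's bounds."""
    return max(pool.min_nodes, min(pool.max_nodes, target))


def demand_driven(pool: Pool, utilization: float,
                  high: float = 0.8, low: float = 0.3) -> int:
    """Add one node above the high threshold, remove one below the low one.

    The thresholds are assumed values, not figures from the source.
    """
    if utilization > high:
        return clamp(pool, pool.nodes + 1)
    if utilization < low:
        return clamp(pool, pool.nodes - 1)
    return pool.nodes


def event_driven(pool: Pool, hour: int,
                 peak_hours: set[int], peak_nodes: int) -> int:
    """Scale to a pre-planned size during a scheduled time window,
    and fall back to the minimum size outside it (an assumed policy)."""
    if hour in peak_hours:
        return clamp(pool, peak_nodes)
    return pool.min_nodes


def popularity_driven(pool: Pool, requests_per_min: int,
                      requests_per_node: int = 100) -> int:
    """Size the pool from observed request volume for an application.

    requests_per_node is an assumed per-node capacity.
    """
    needed = math.ceil(requests_per_min / requests_per_node)
    return clamp(pool, needed)


if __name__ == "__main__":
    pool = Pool(nodes=4)
    print(demand_driven(pool, utilization=0.9))
    print(event_driven(pool, hour=20, peak_hours={19, 20, 21}, peak_nodes=8))
    print(popularity_driven(pool, requests_per_min=250))
```

Each function returns a target node count rather than changing the pool, so a caller could compare the policies on the same input before committing to one. A real provisioner would also need to account for VM start-up delay and SLA penalties, which this sketch leaves out.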