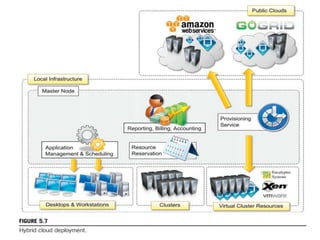

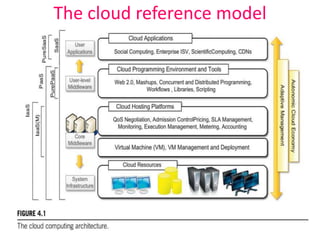

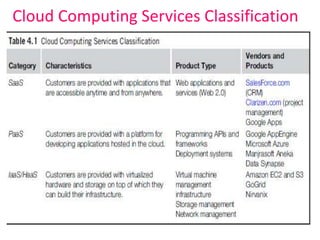

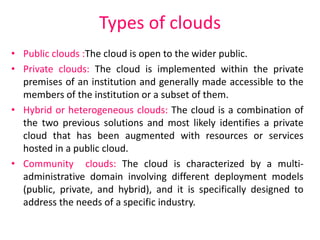

Cloud computing provides on-demand IT services over the internet on a pay-as-you-go basis. The document discusses the cloud computing architecture including the cloud reference model, infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS). It also covers different types of clouds such as public clouds, private clouds, and hybrid clouds.

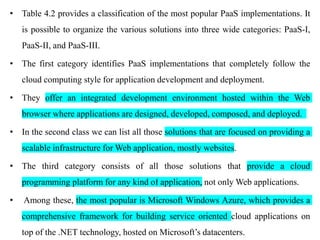

![Open challenges

• Cloud interoperability and standards

• Cloud computing is a service-based model for delivering IT infrastructure and

applications like utilities such as power, water, and electricity.

• Vendor lock-in constitutes one of the major strategic barriers against the

seamless adoption of cloud computing at all stages.

• The presence of standards that are actually implemented and adopted in the

cloud computing community could give room for interoperability and then

lessen the risks resulting from vendor lock-in.

• Few organizations, such as the Cloud Computing Interoperability Forum

(CCIF) and the DMTF Cloud Standards Incubator are leading the path.

• DMTF (formerly known as the Distributed Management Task Force) creates

open manageability standards on both sides of diverse emerging and traditional

IT infrastructures including cloud, virtualization, network, servers and storage.

• The Open Virtualization Format (OVF) [51] is an attempt to provide a common

format for storing the information and metadata describing a virtual machine

image](https://image.slidesharecdn.com/cloudcomputingmodule-2-240201191249-c4ea8dd0/85/Cloud-Computing-MODULE-2-to-understand-the-cloud-computing-concepts-ppt-42-320.jpg)