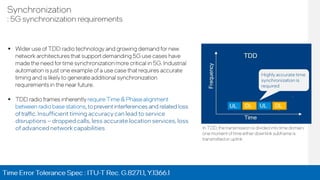

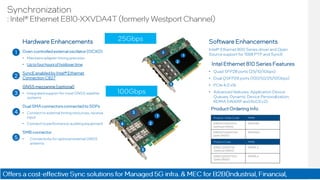

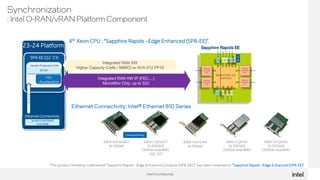

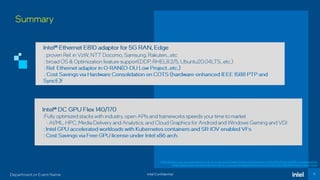

This document discusses network timing synchronization solutions and the Intel Ethernet 810 network interface card (NIC).

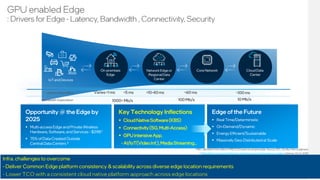

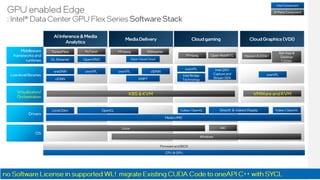

It compares three timing synchronization methods: GPS, Synchronous Ethernet (SyncE), and the Precision Time Protocol (PTP). It then describes the hardware and software features of the Intel Ethernet 810 NIC for high-accuracy timing, including an oven-controlled crystal oscillator (OCXO) and GNSS support. Product codes and specifications are provided for the 810 series cards. Finally, the document outlines Intel's edge computing strategy and its software stack for edge applications.