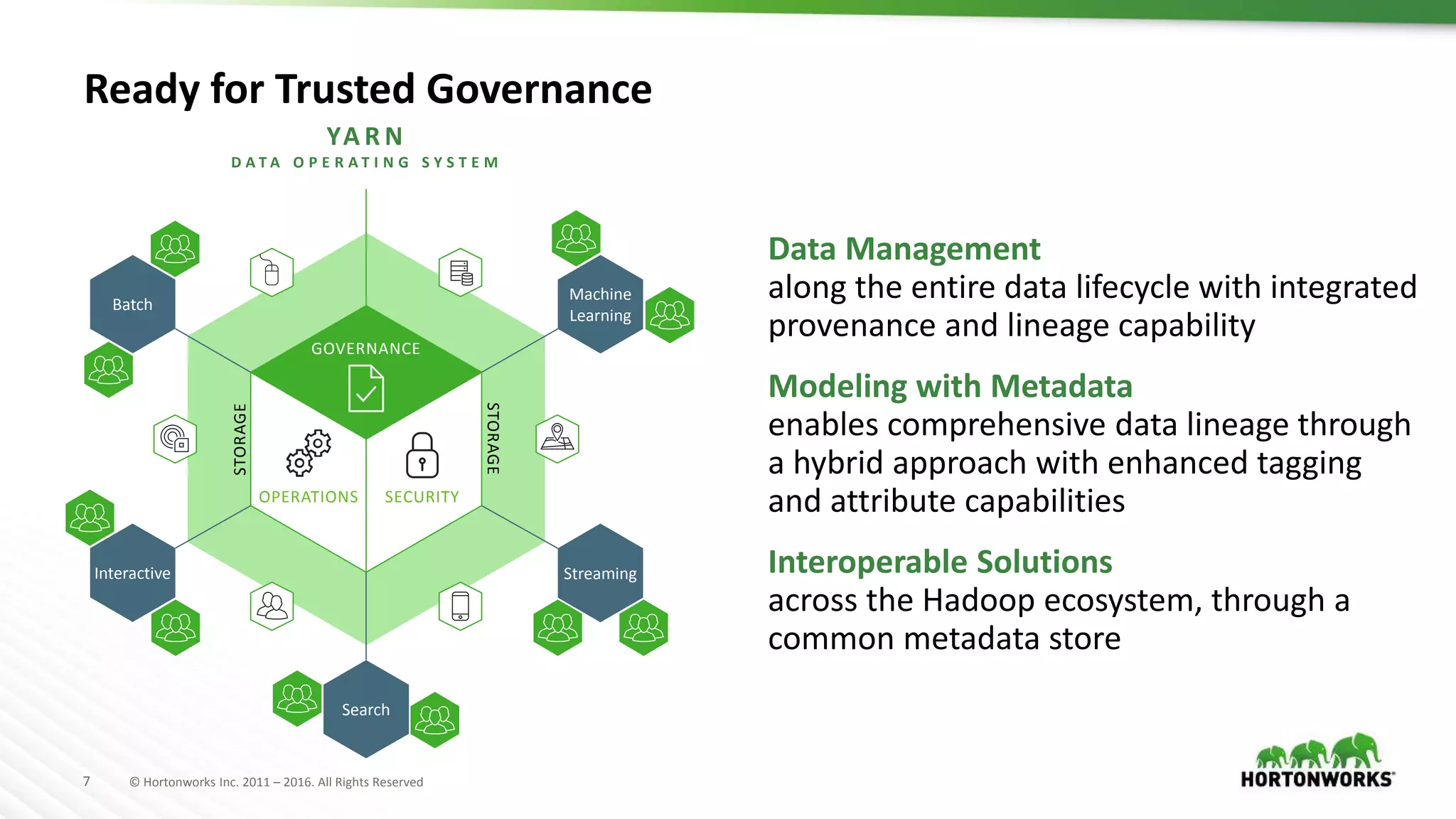

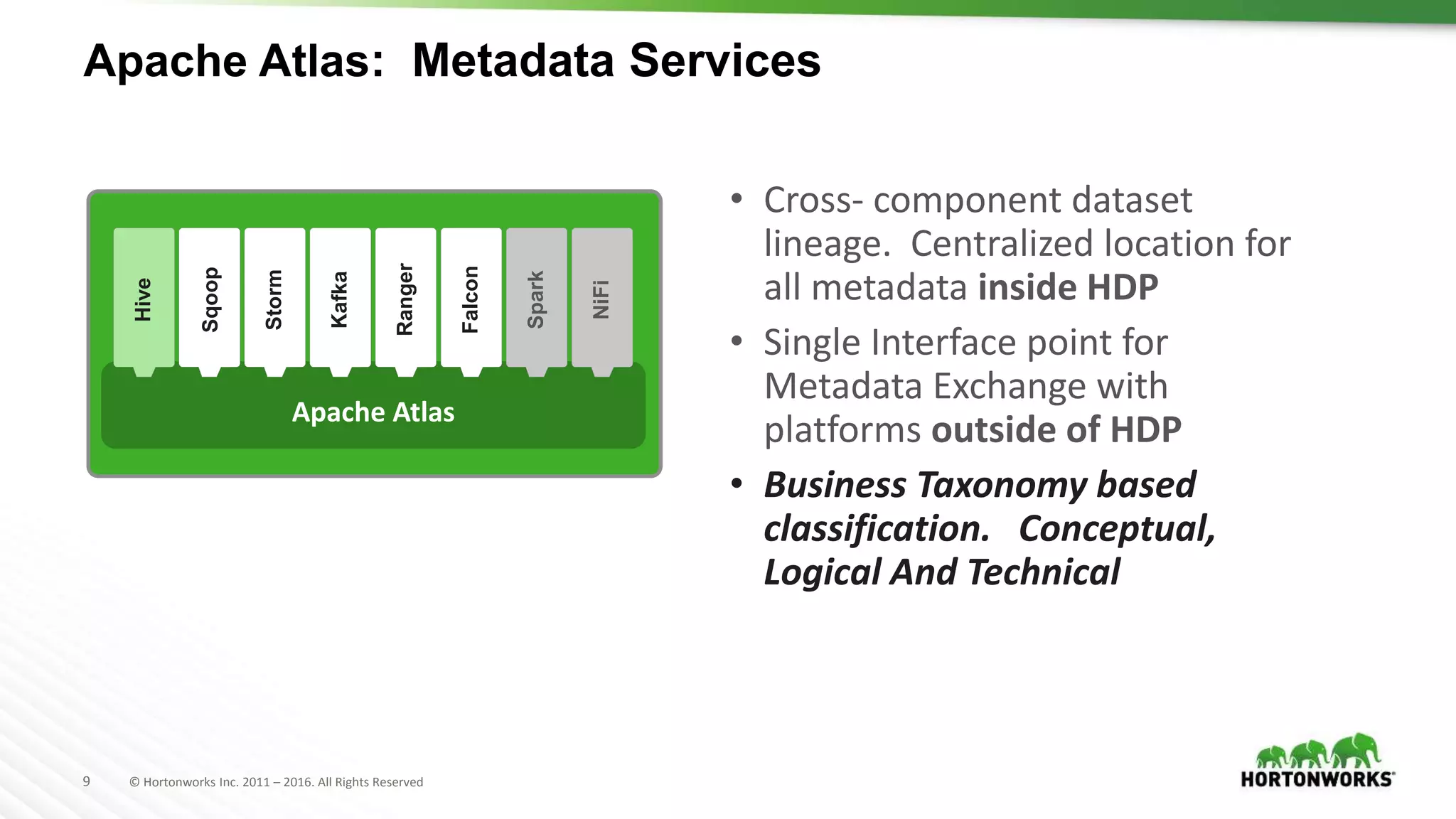

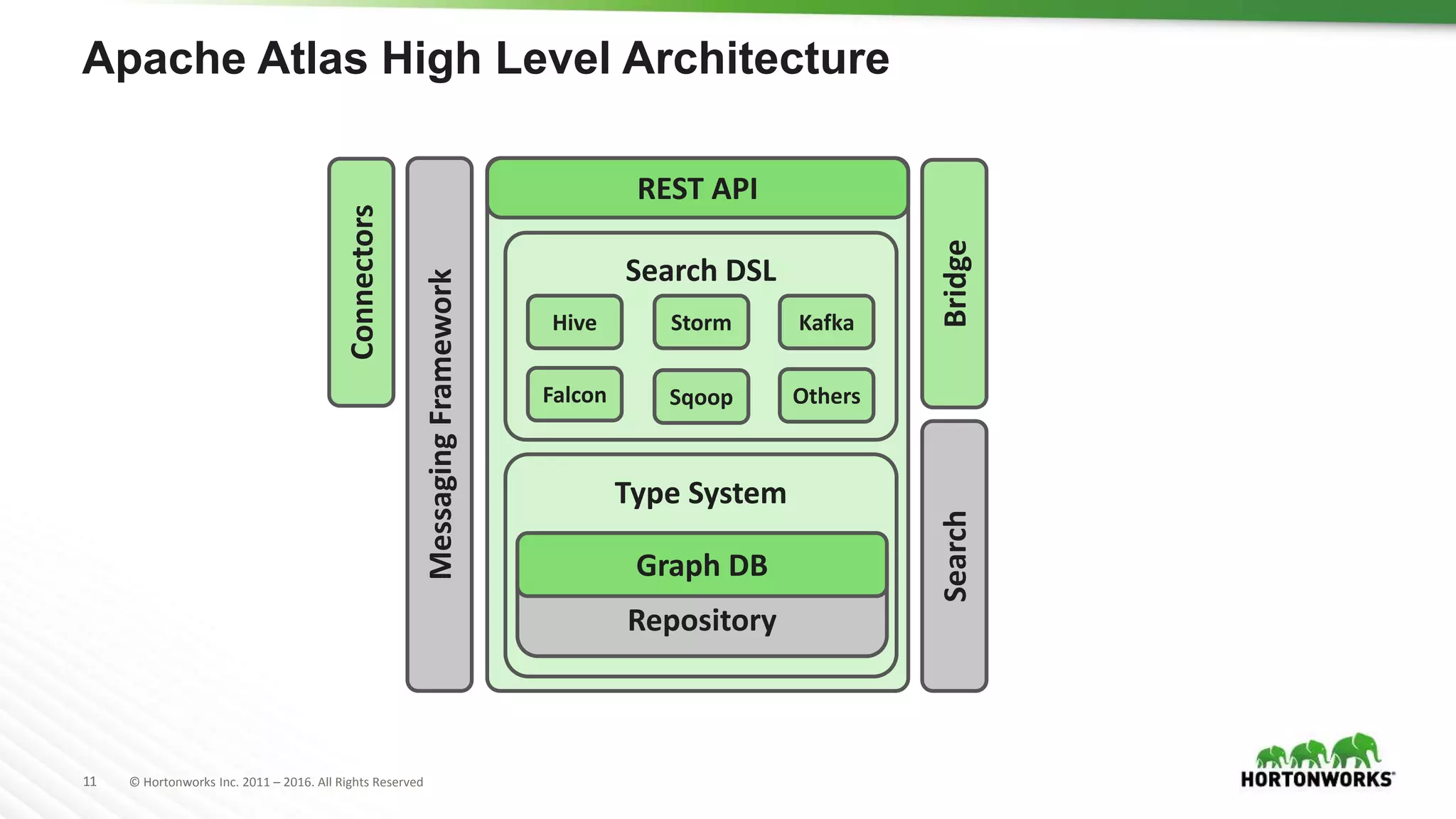

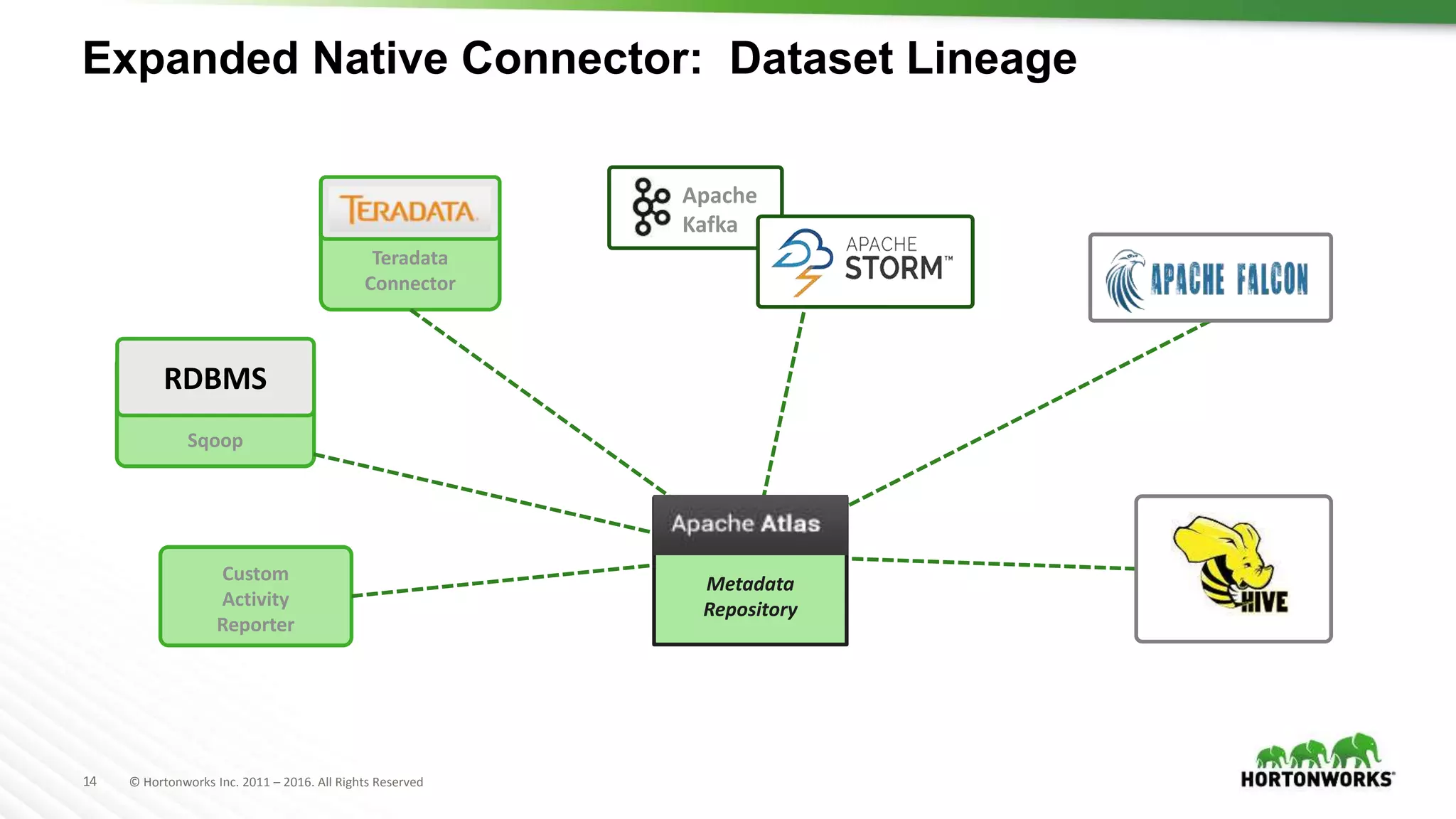

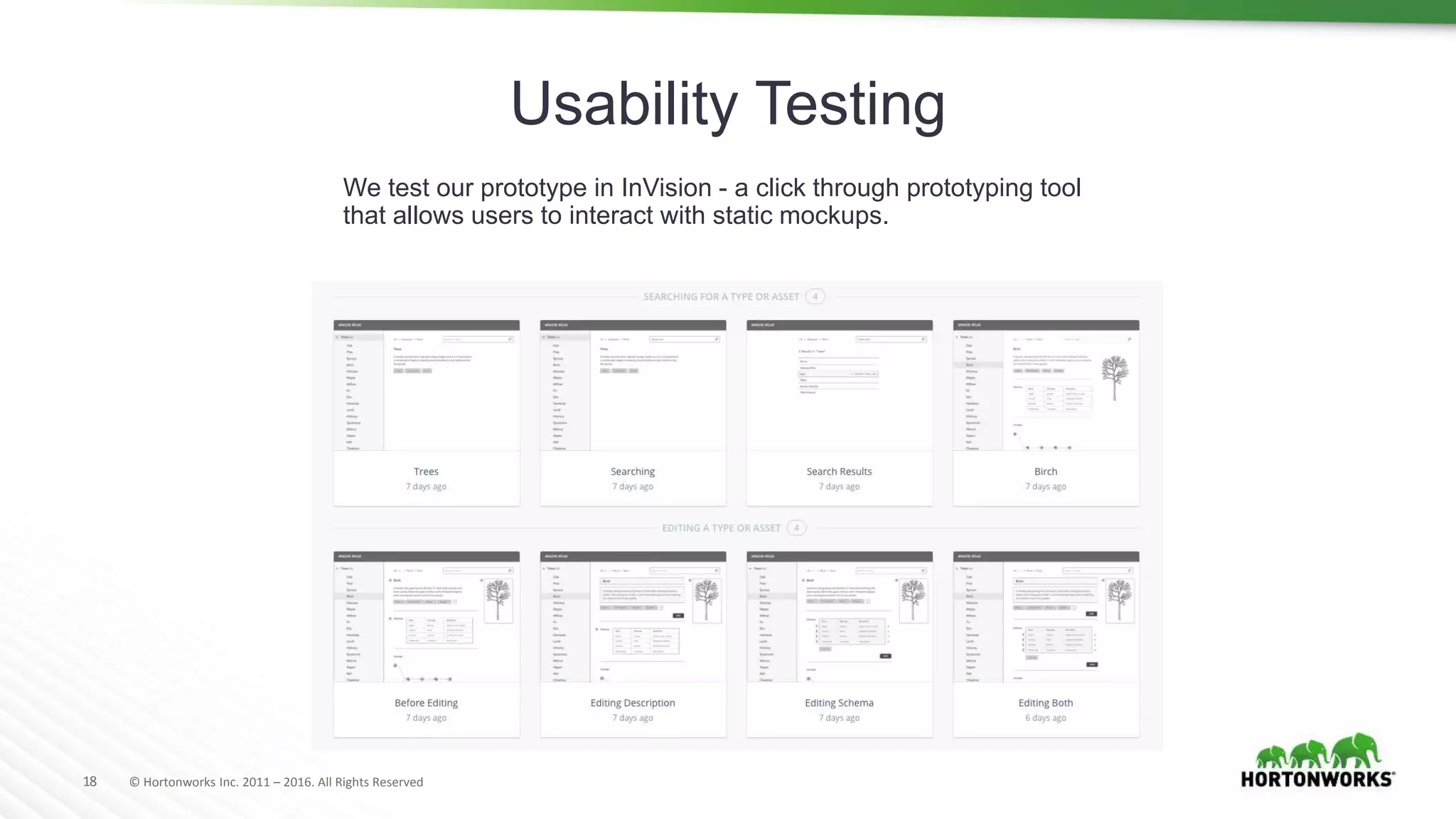

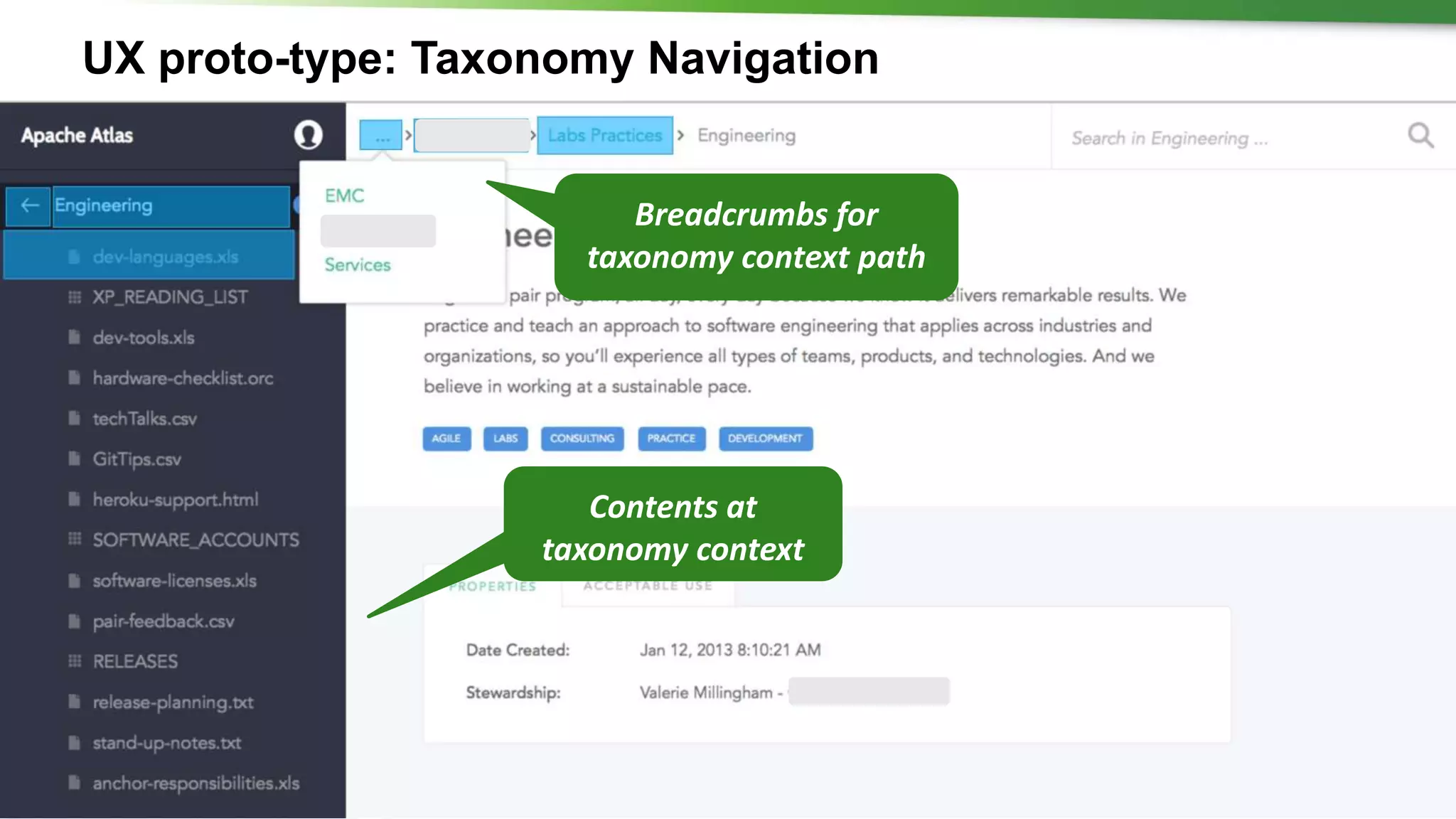

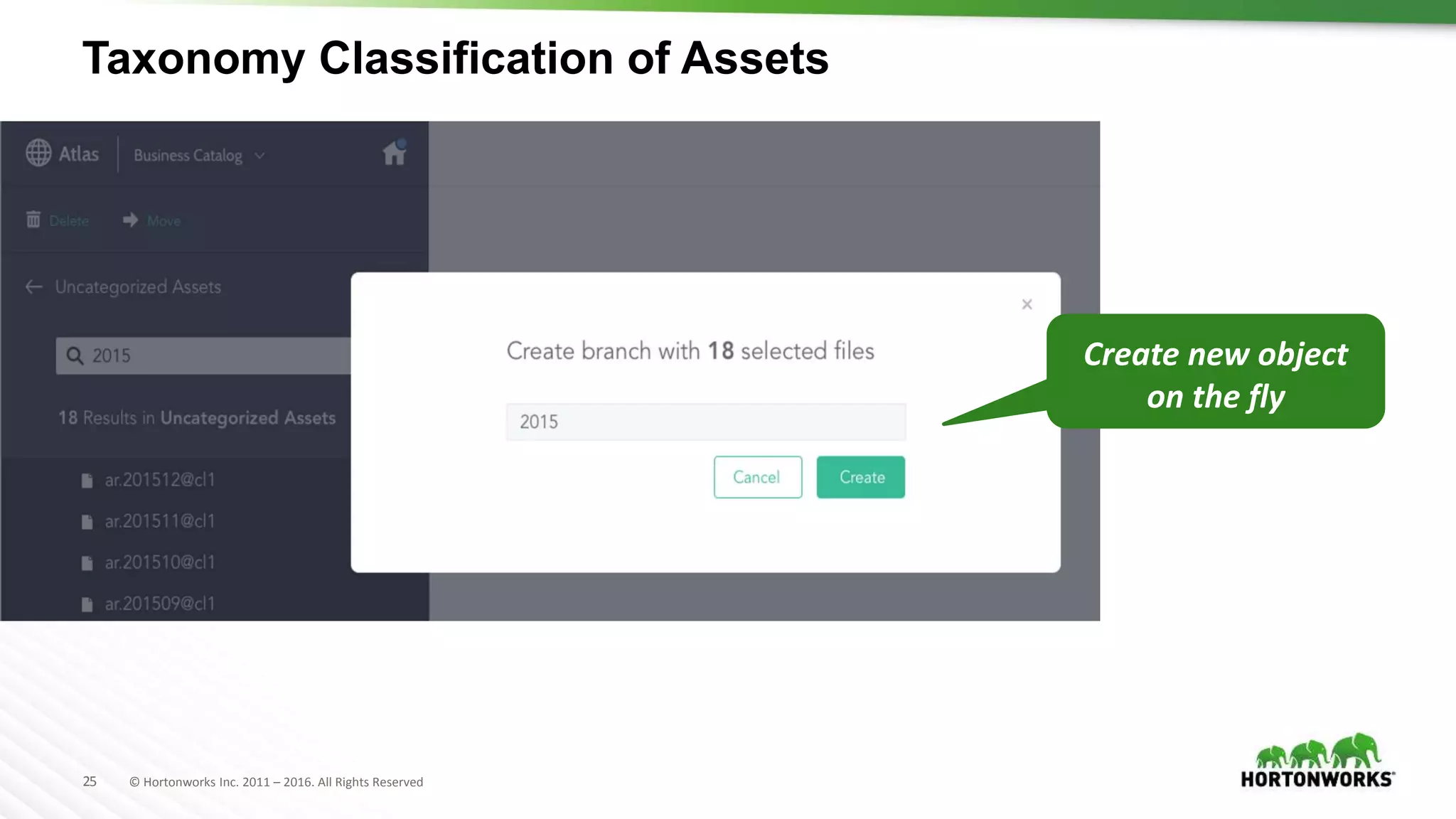

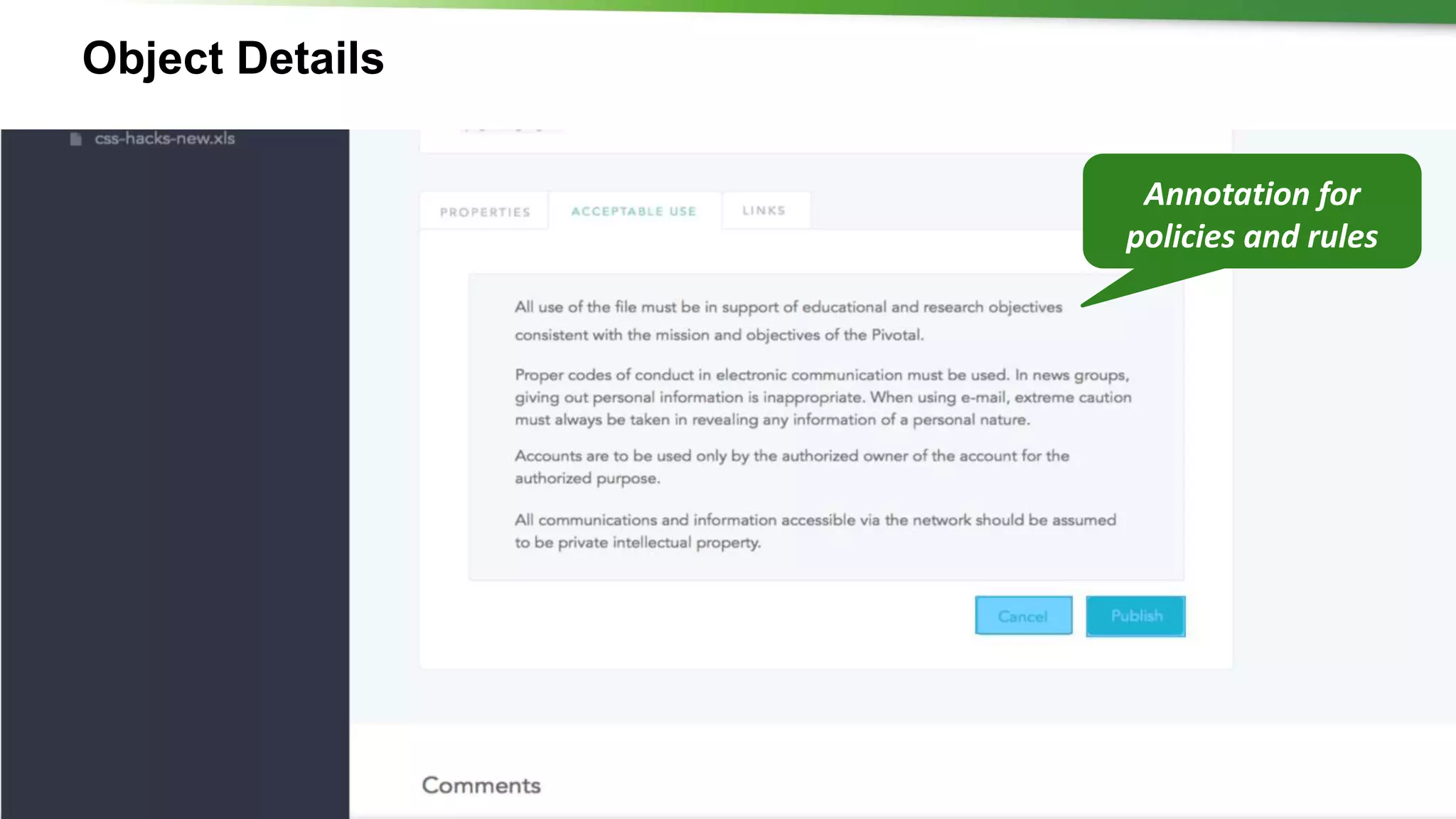

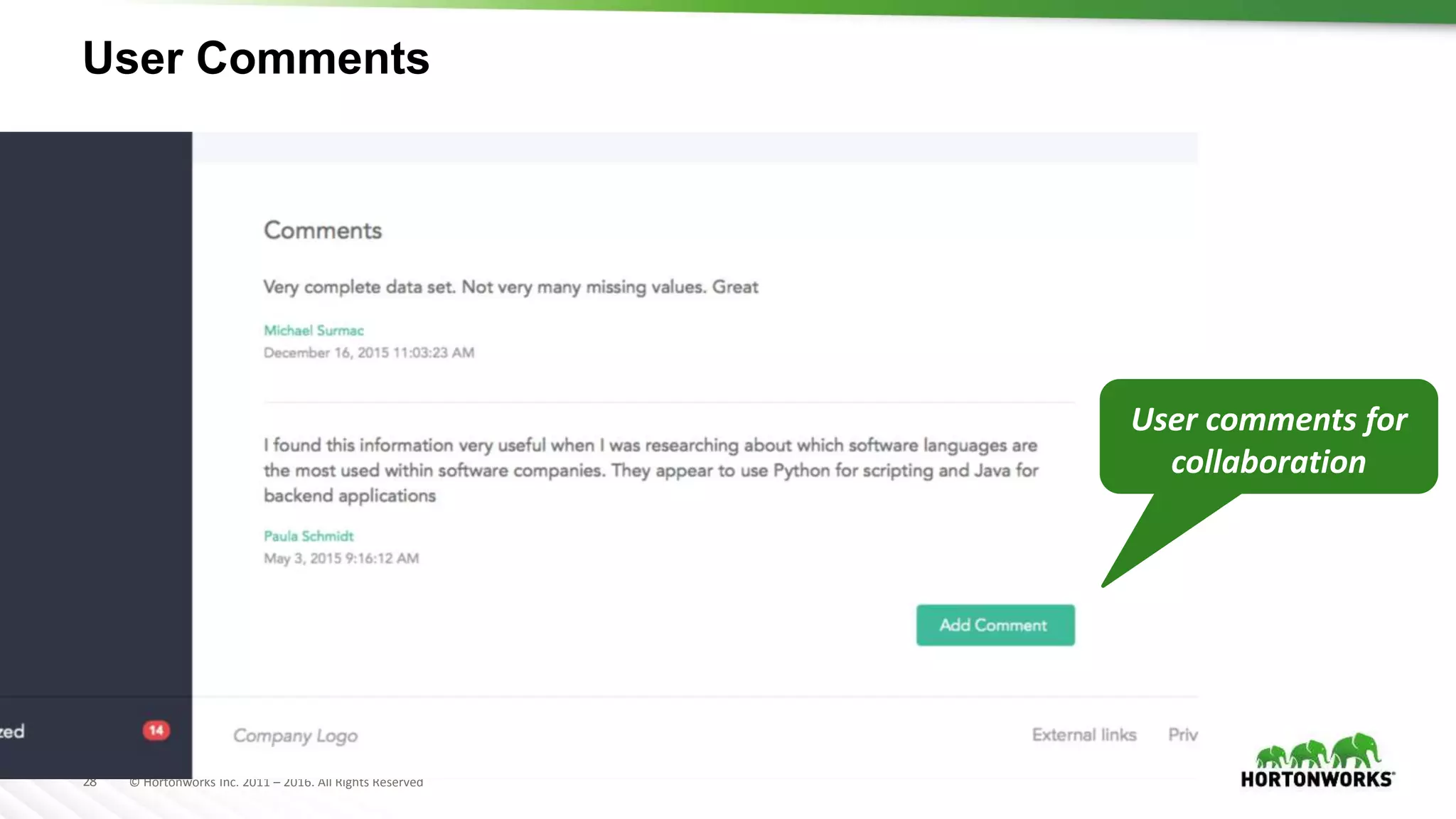

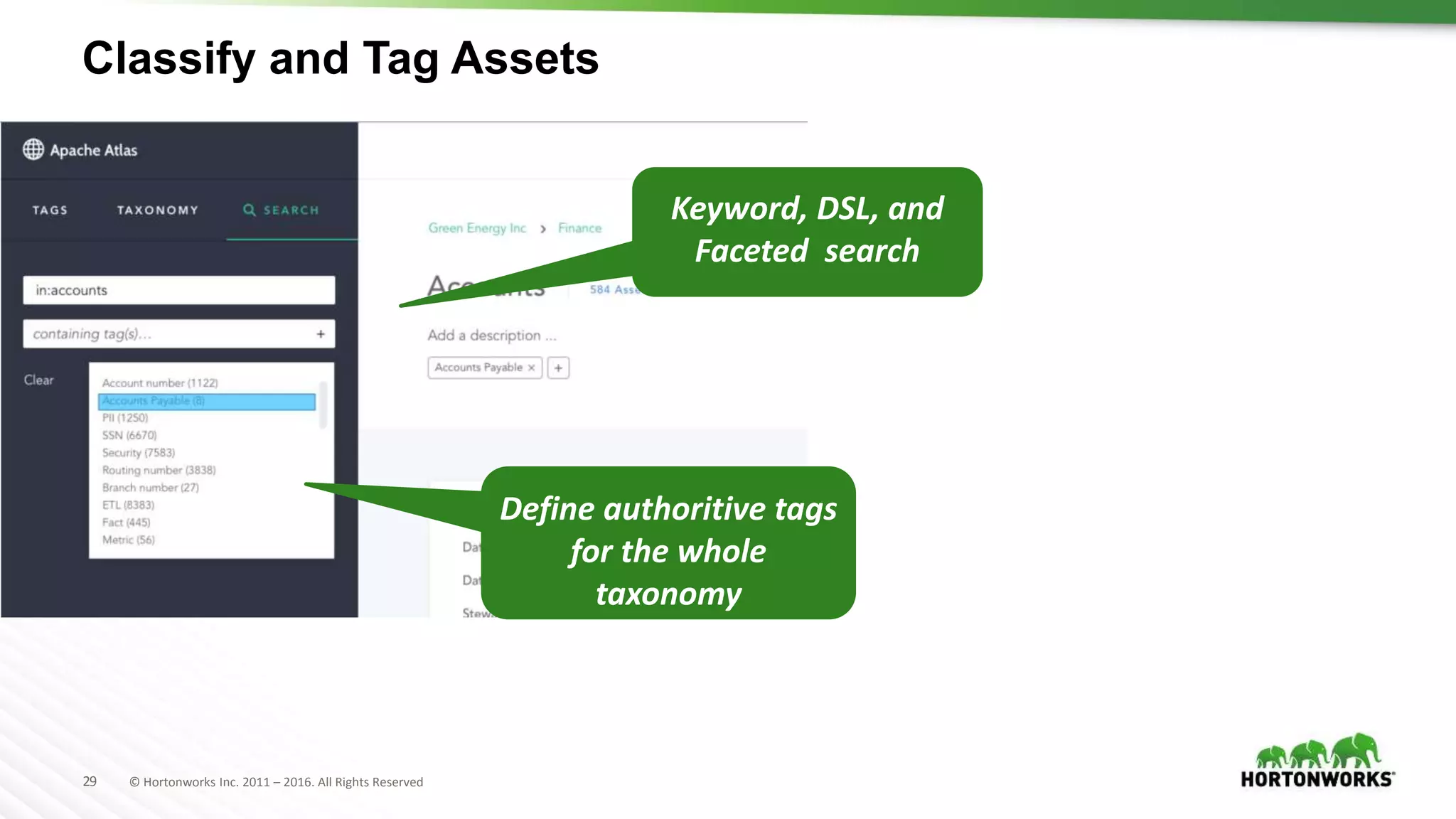

The document presents Apache Atlas as an enterprise data governance solution that bridges traditional data warehousing and modern Hadoop environments. It outlines a roadmap of features and capabilities for metadata management and data lineage, along with their implications for business taxonomy, and emphasizes user engagement through interviews and usability testing. It also cautions that the described features are subject to change and should not be relied upon for purchasing decisions.