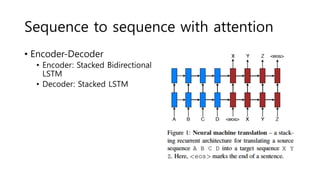

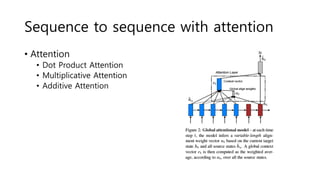

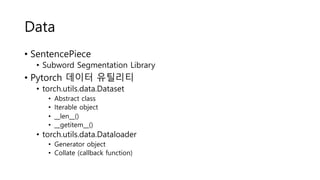

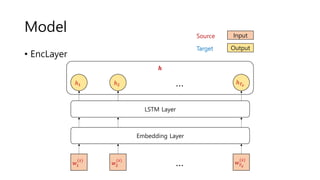

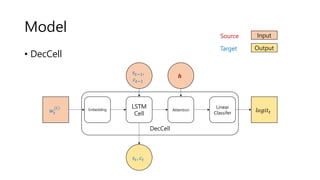

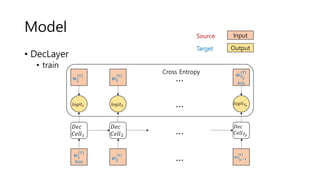

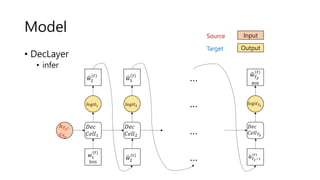

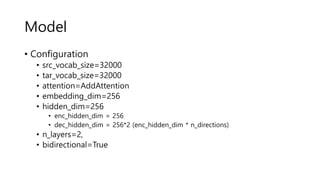

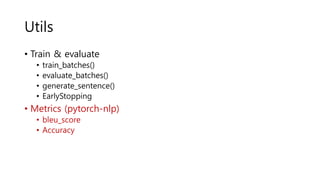

This document summarizes a neural machine translation (NMT) model built as a sequence-to-sequence network with attention. It describes the encoder-decoder architecture, which uses stacked LSTMs and dot-product attention, and explains how the model is trained on the WMT'14 English-German data and evaluated on newstest2013. It also covers the tokenizer, dataset creation, model configuration, and training utilities.
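As a rough illustration of the dot-product attention mentioned above, the sketch below scores each encoder state against the current decoder state, normalizes the scores with a softmax, and forms a weighted sum as the context vector. It is a minimal pure-Python sketch, not the presenter's implementation: the unscaled dot-product form, the function names `softmax` and `dot_product_attention`, and the toy vectors are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(decoder_state, encoder_states):
    """Hypothetical helper: unscaled dot-product attention for one decoder step.

    decoder_state:  list of floats, the current decoder hidden state (query).
    encoder_states: list of lists of floats, one hidden state per source token.
    Returns (weights, context).
    """
    # Score each source position by its dot product with the decoder state.
    scores = [
        sum(q * k for q, k in zip(decoder_state, h)) for h in encoder_states
    ]
    weights = softmax(scores)
    # The context vector is the attention-weighted sum of encoder states.
    dim = len(decoder_state)
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Toy example (assumed values): three source positions, hidden size 2.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [1.0, 0.0]
weights, context = dot_product_attention(decoder_state, encoder_states)
```

In a stacked-LSTM decoder, the context vector would typically be combined with the top-layer decoder state before predicting the next target token; how the presented model combines them is not stated in this summary.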

![[IMPL] Neural Machine Translation, 장재호, 2019-09-06 (Fri)](https://image.slidesharecdn.com/implneuralmachinetranslation-191030053355/85/Impl-neural-machine-translation-1-320.jpg)