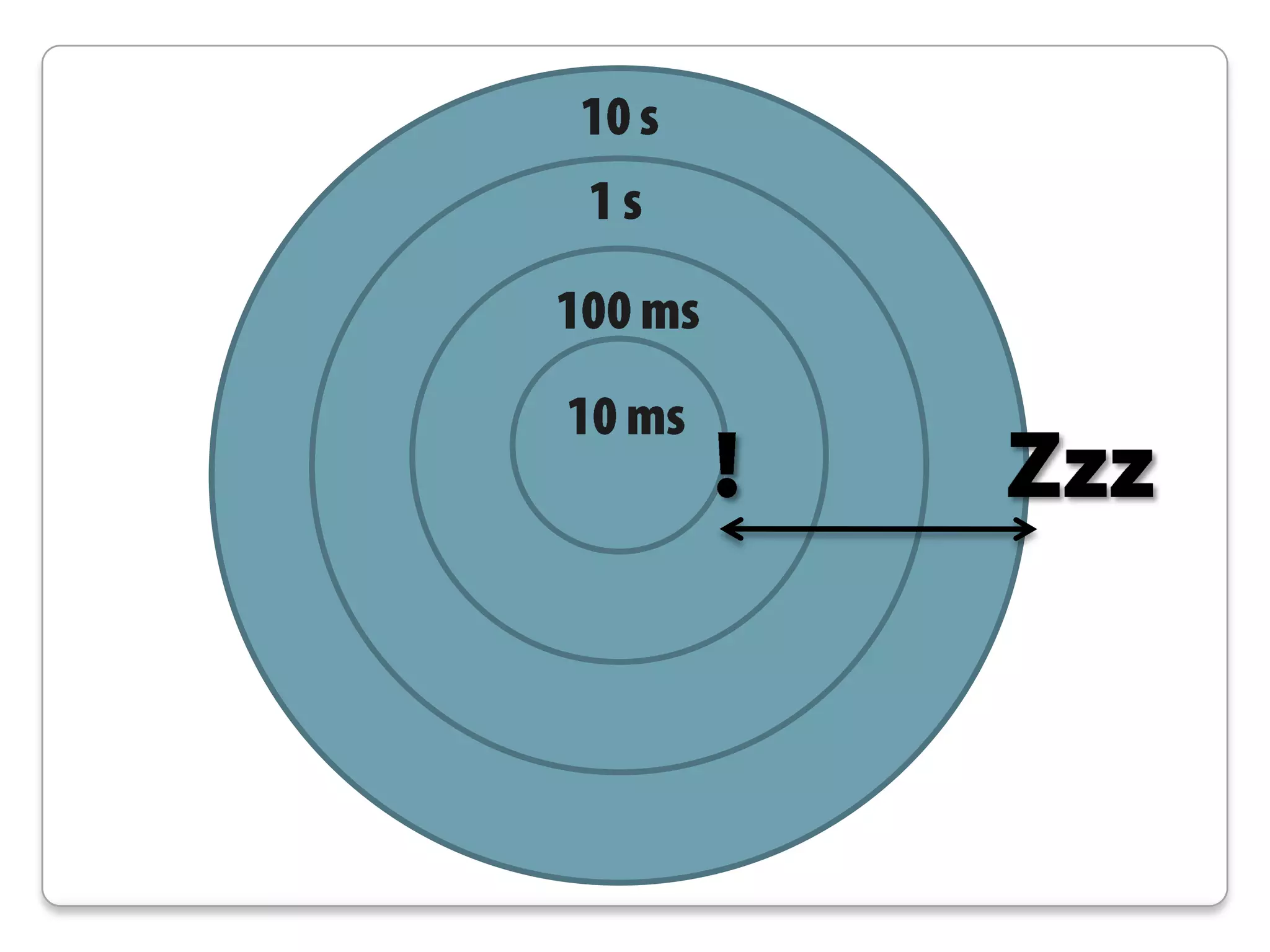

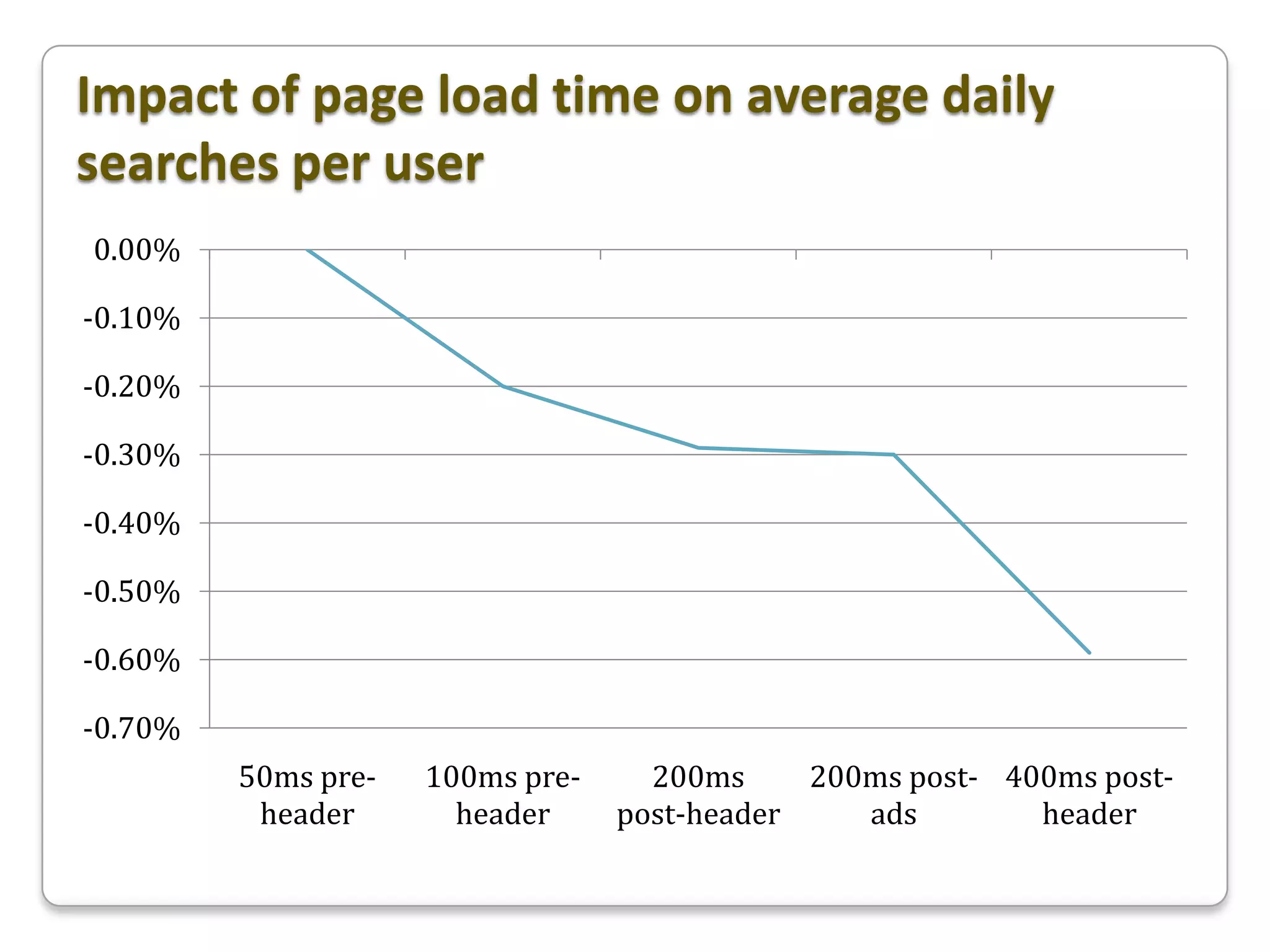

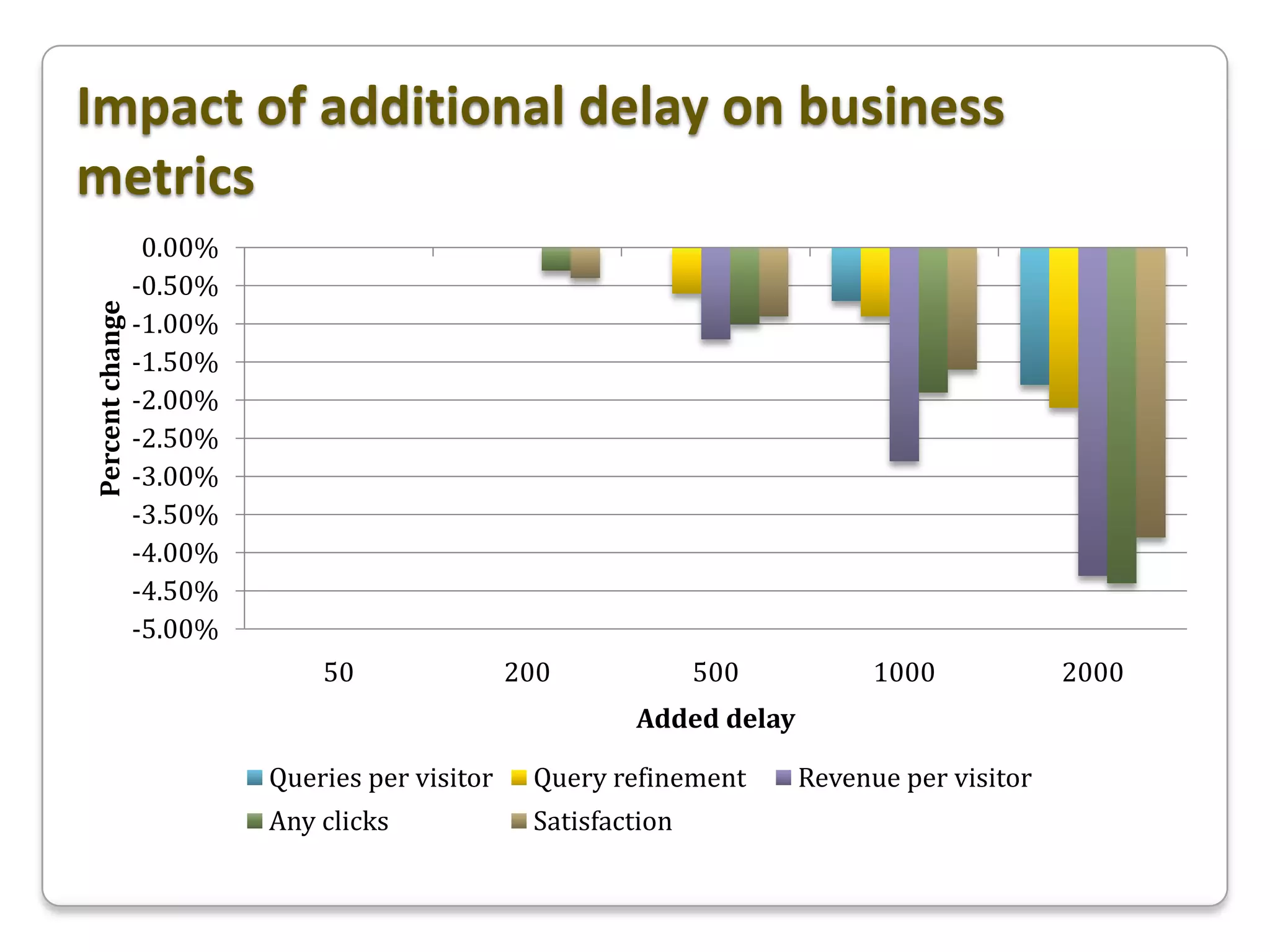

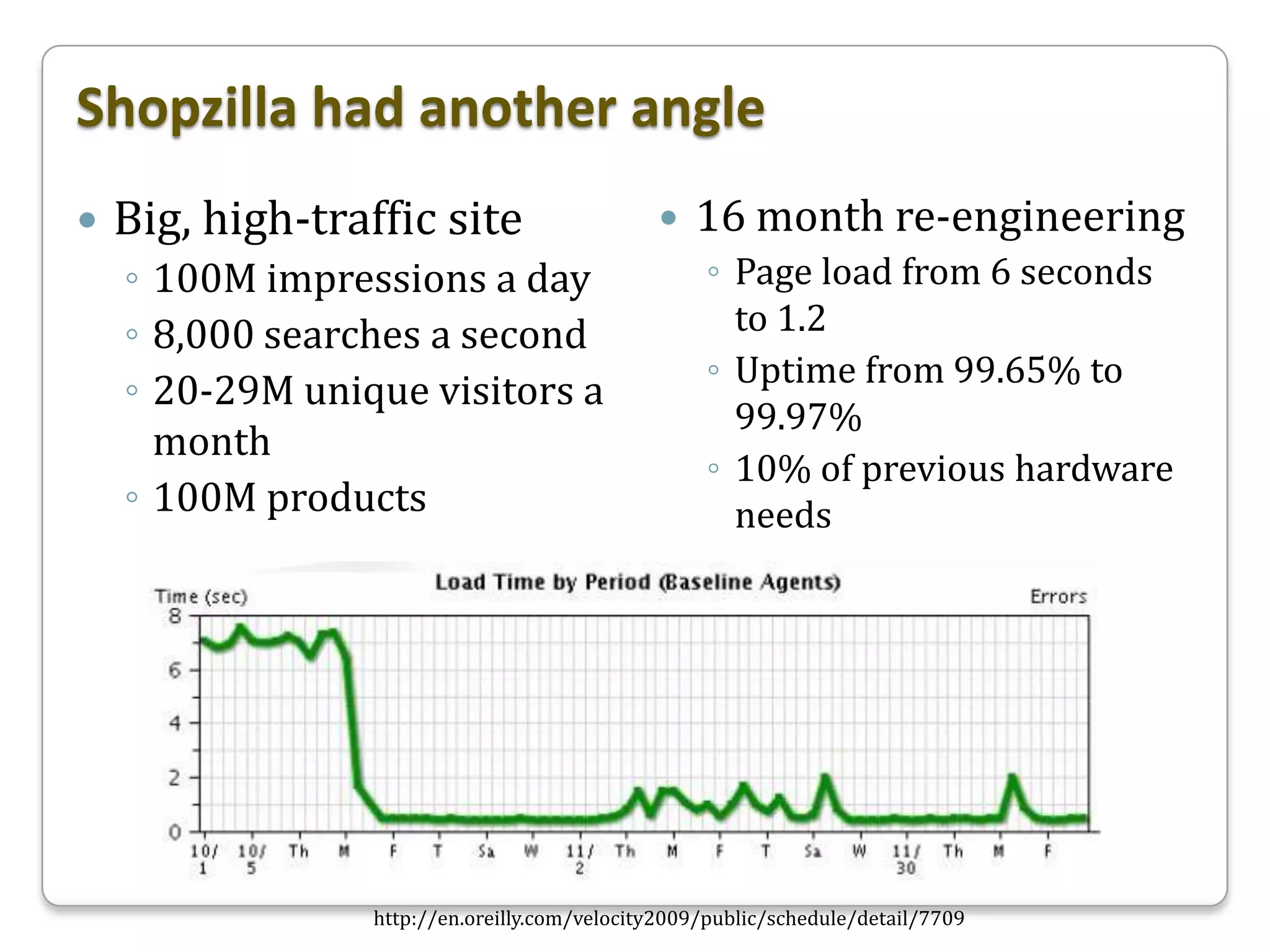

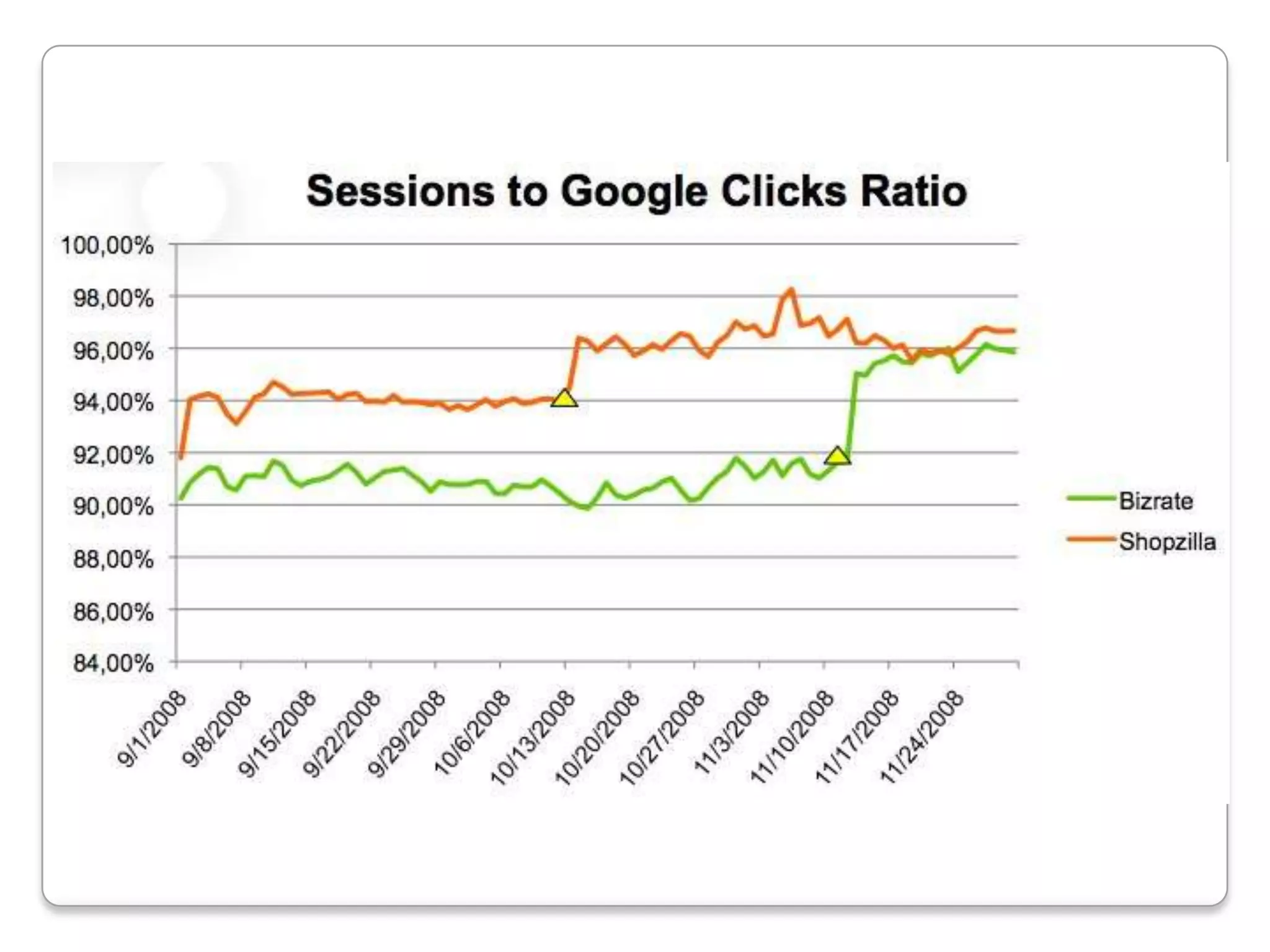

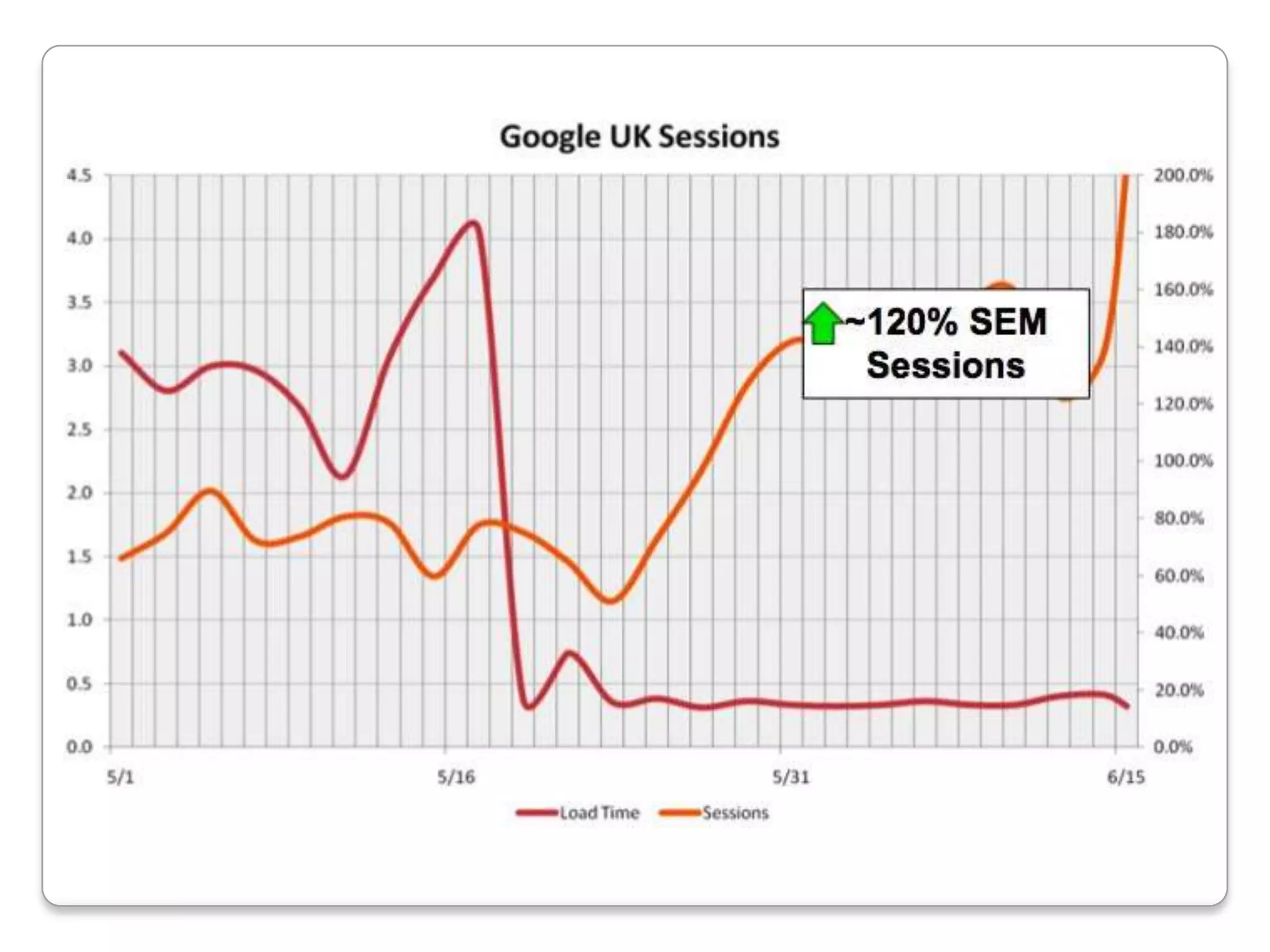

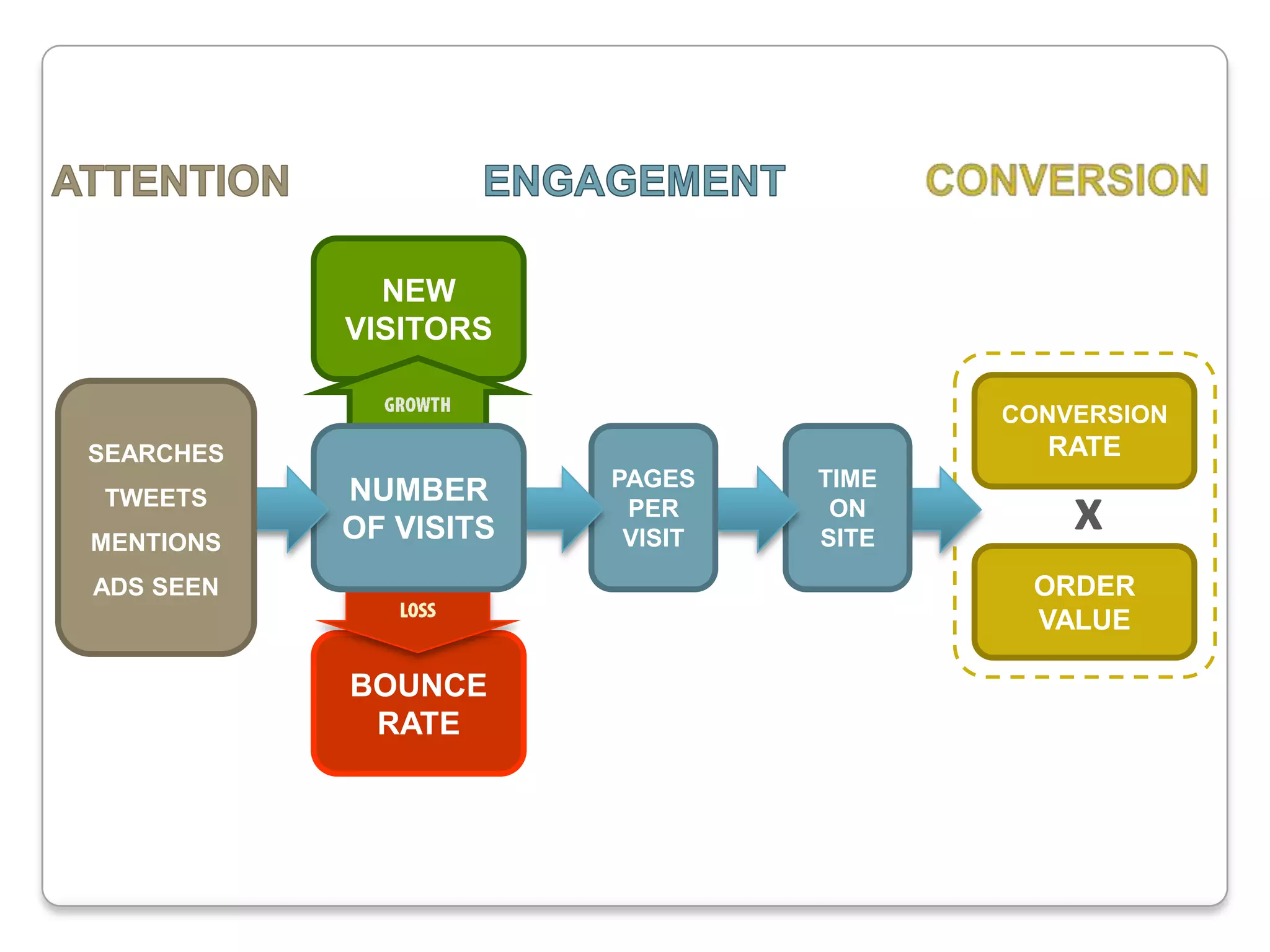

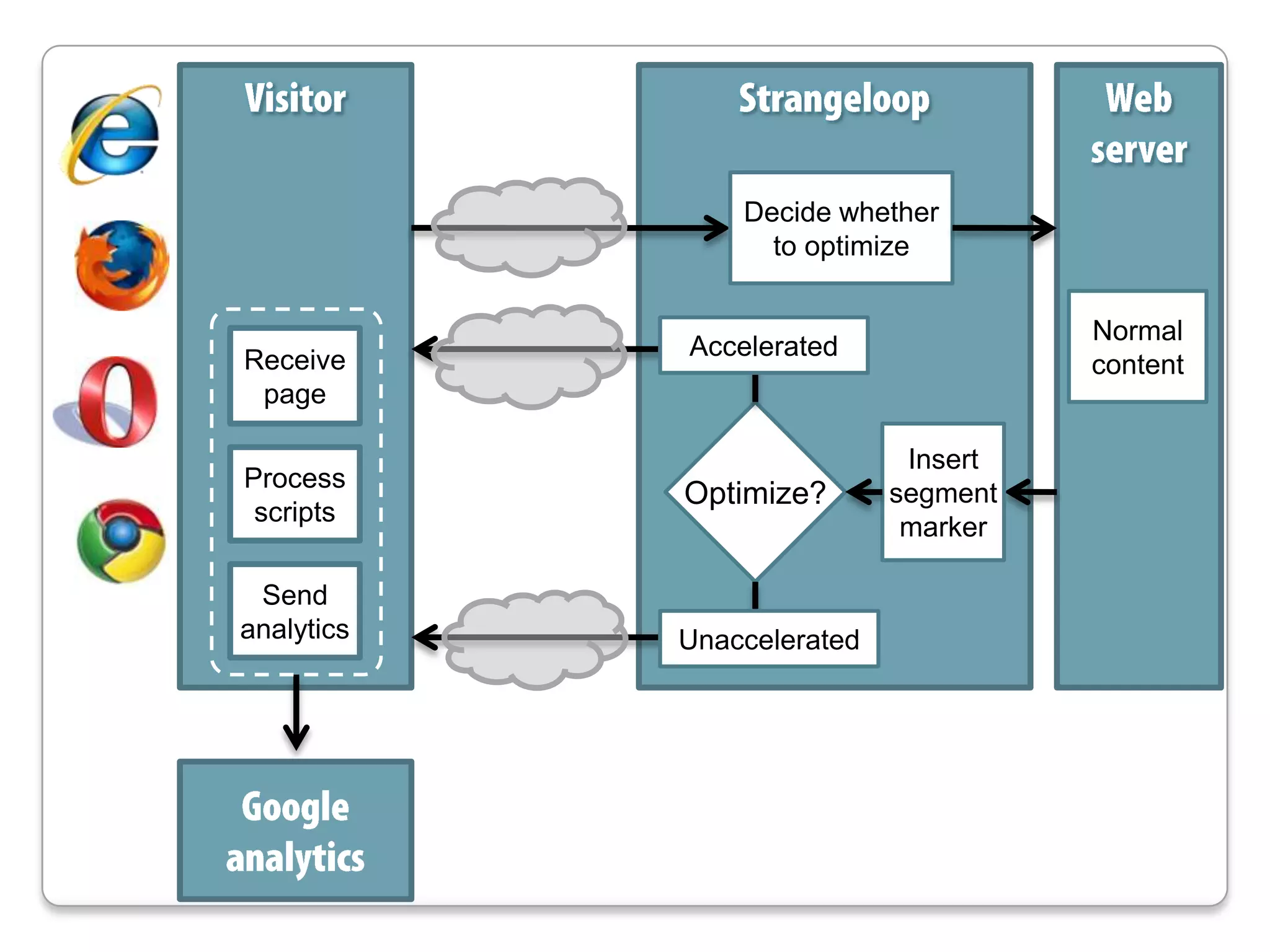

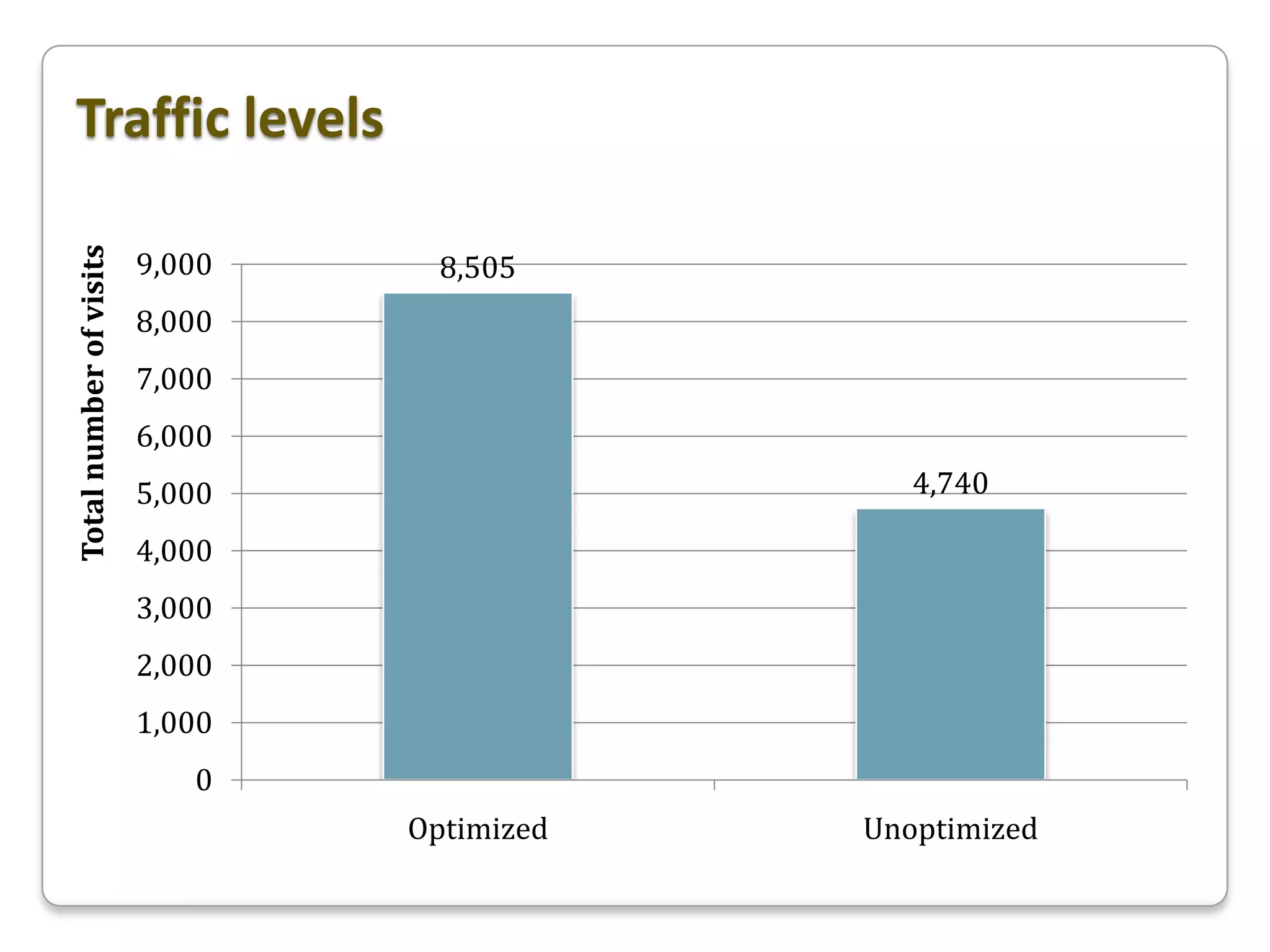

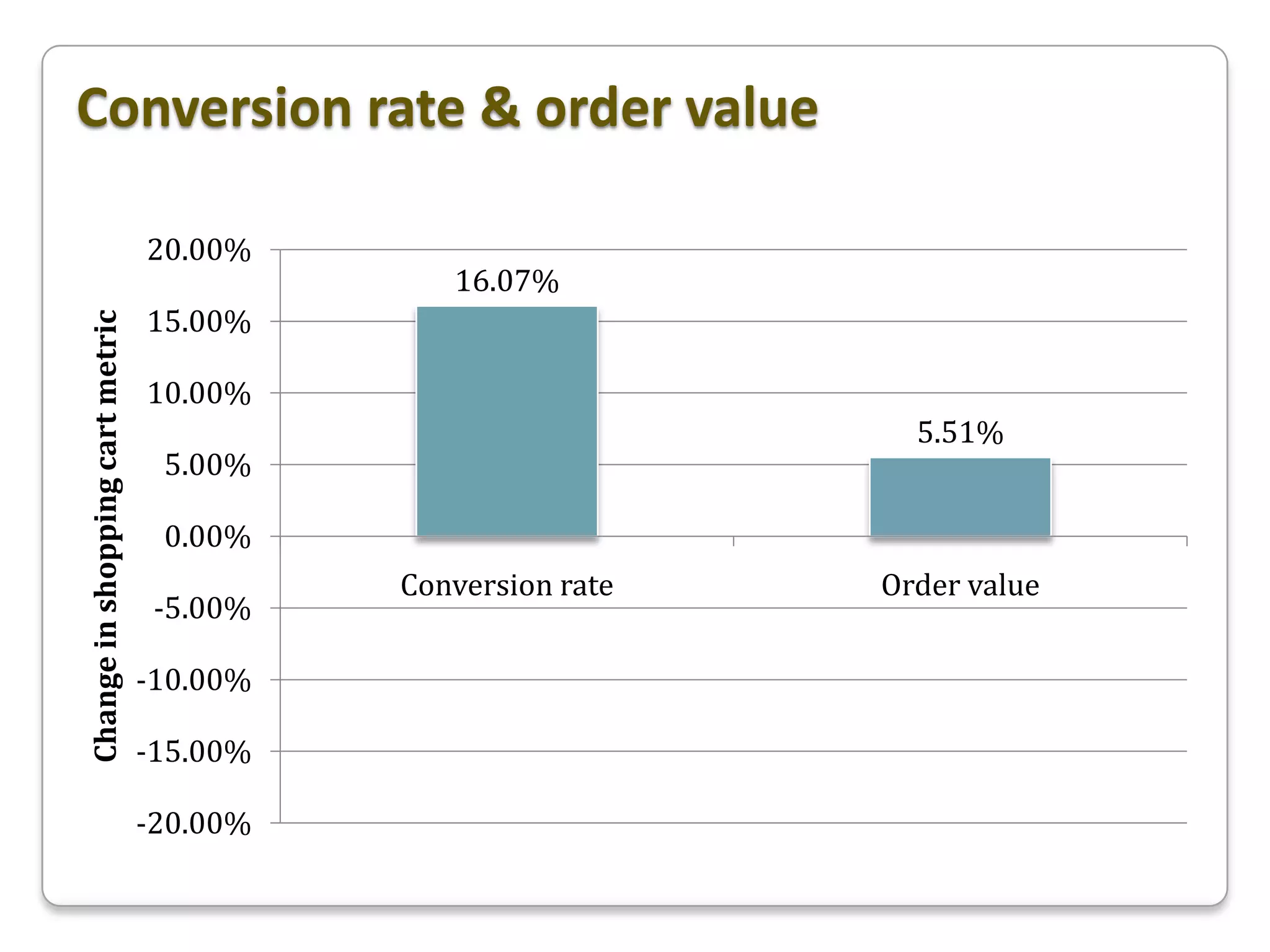

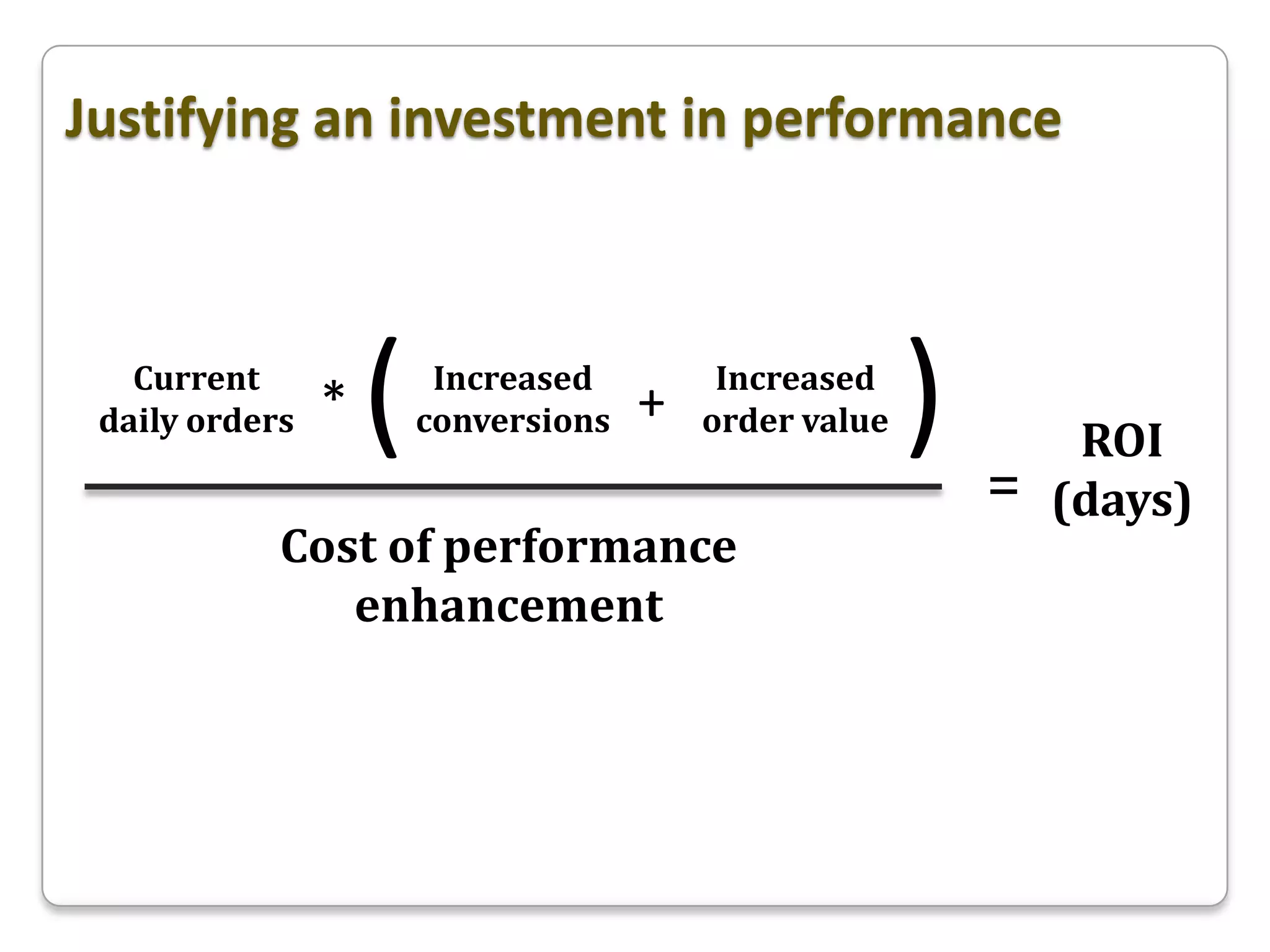

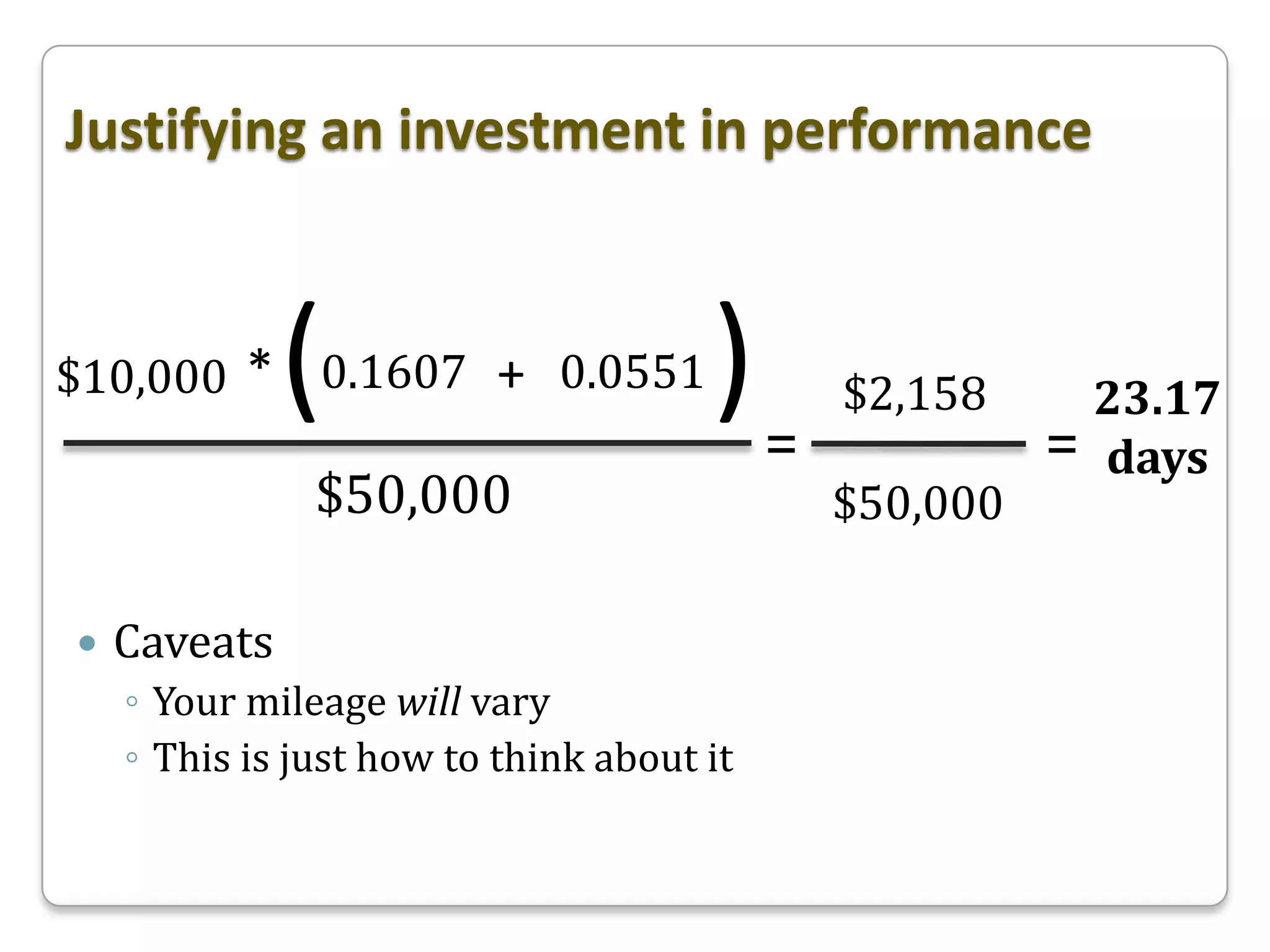

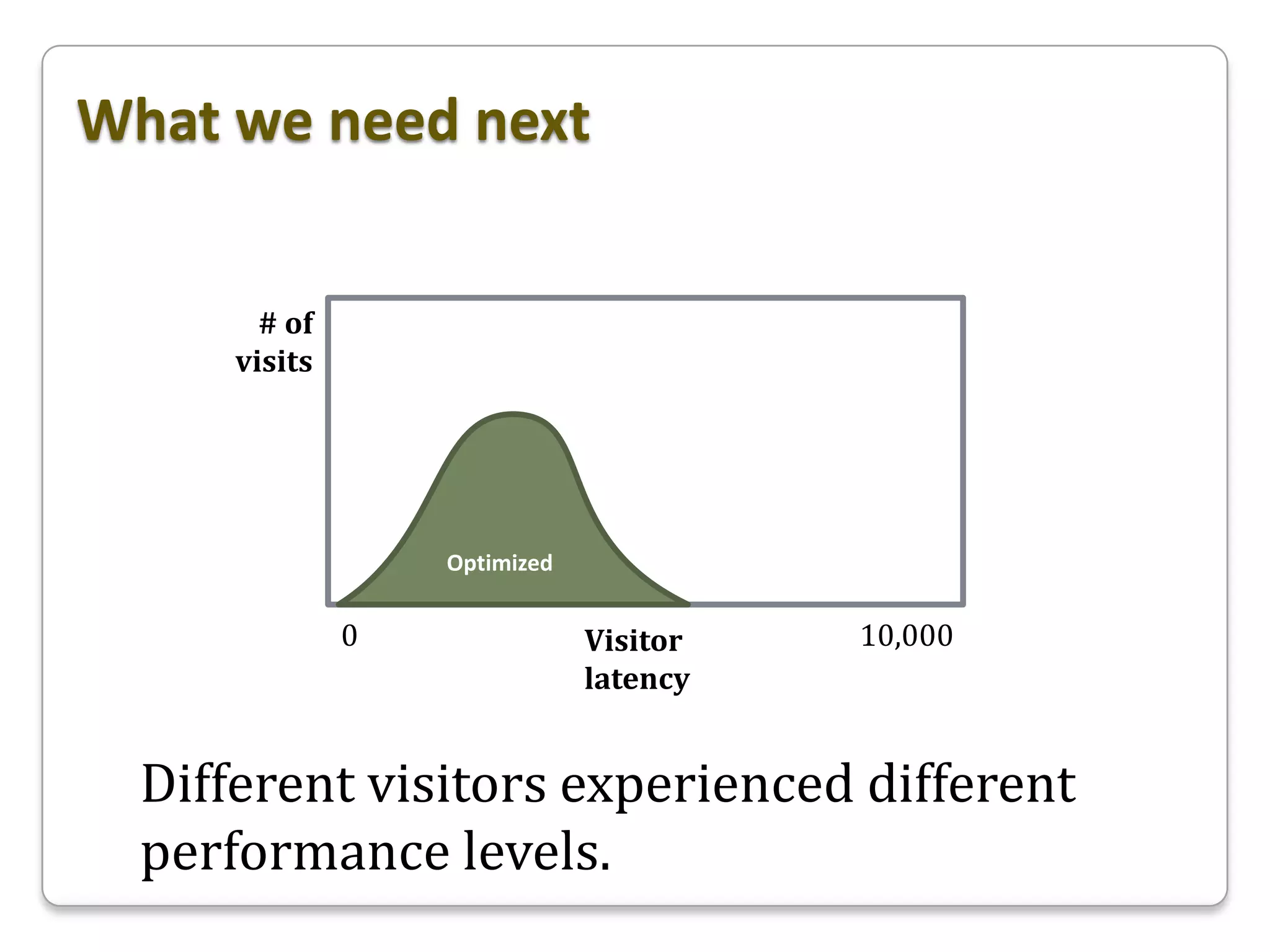

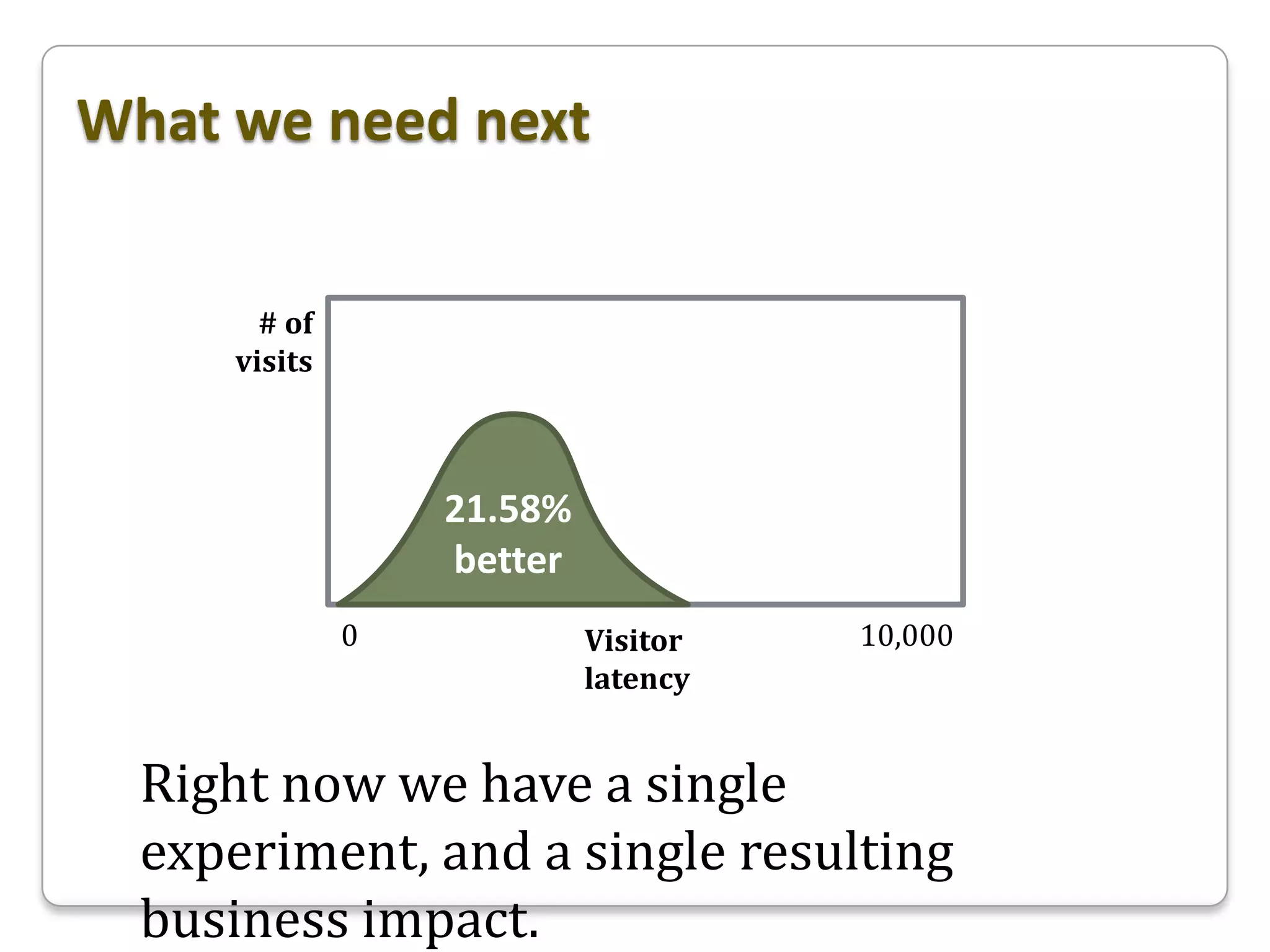

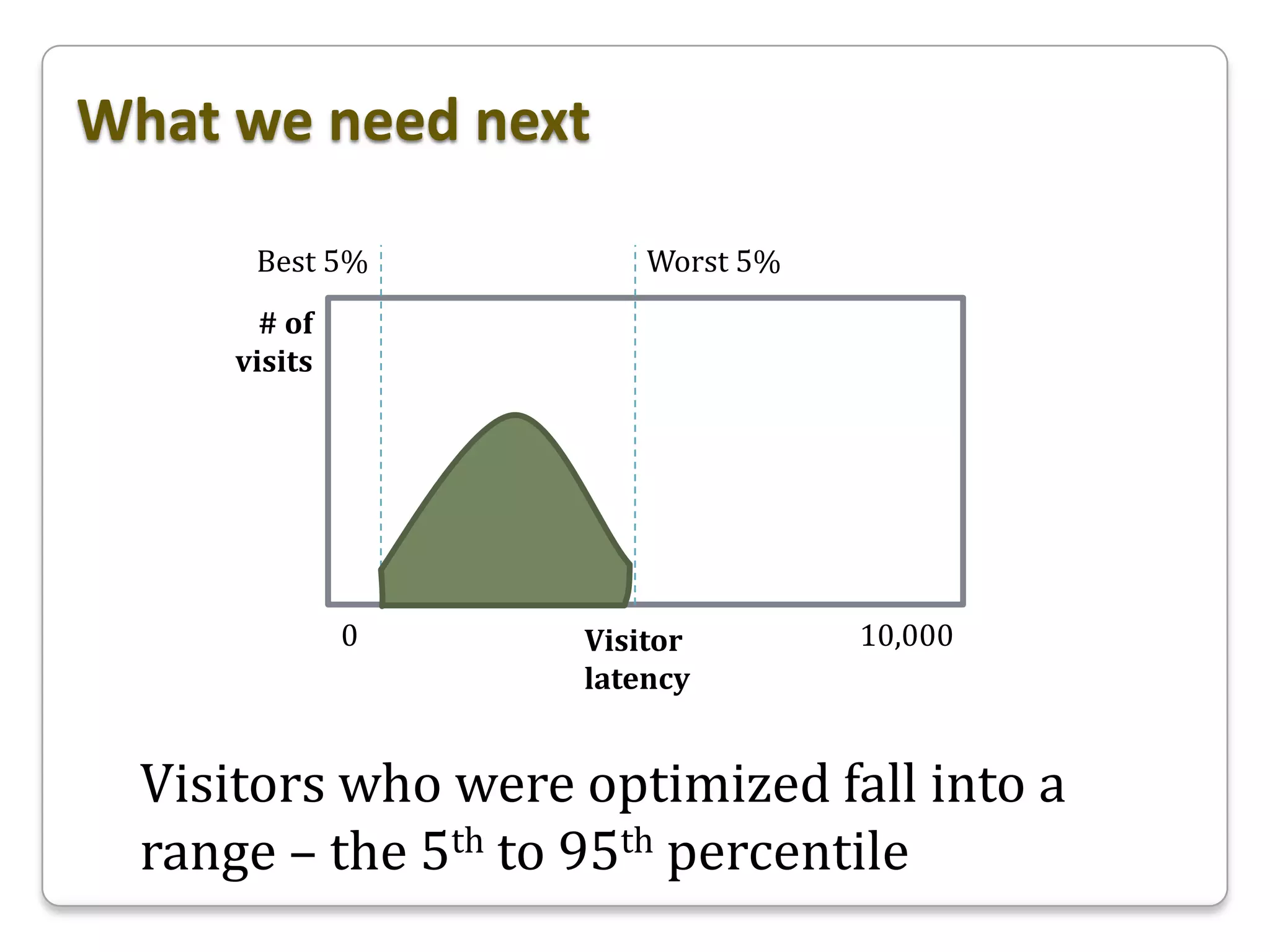

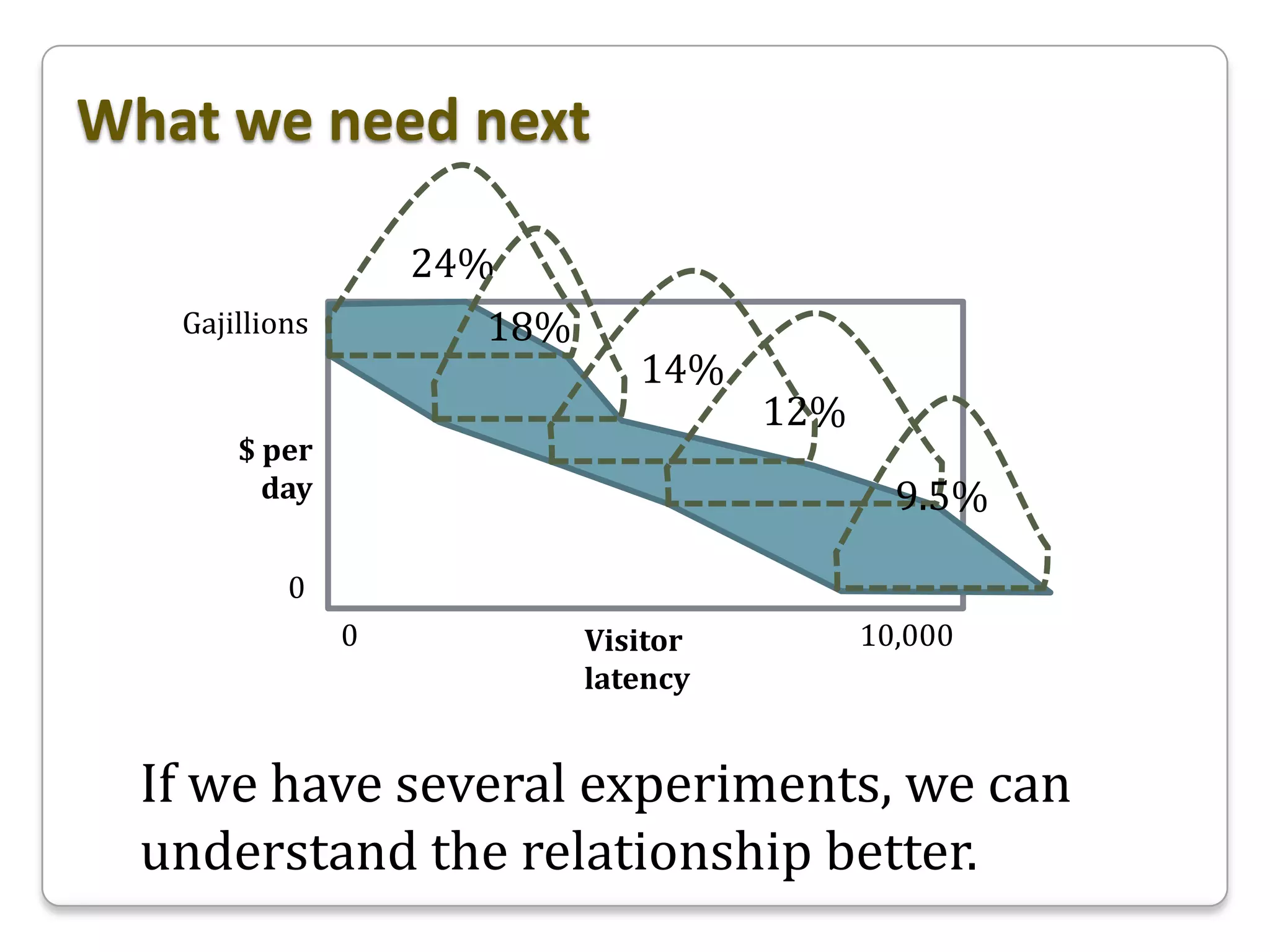

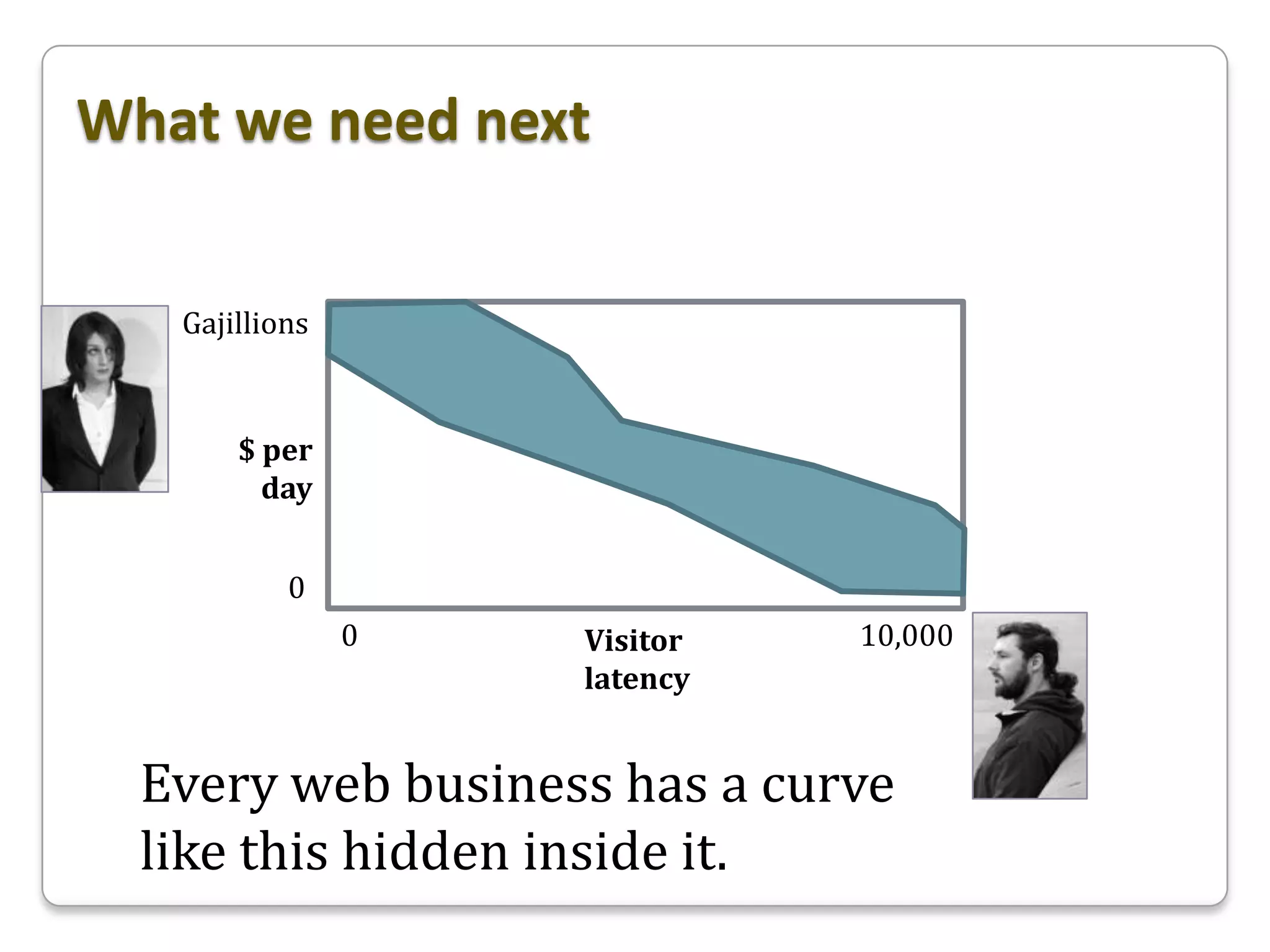

This document discusses how website speed affects key performance indicators (KPIs) for online businesses. It gives examples of companies that improved website speed and saw gains in metrics such as conversion rate and revenue. It argues that investments in performance should be justified by their expected return, calculated from increased conversions and order values, rather than by page load times alone. It also calls for more experiments to clarify how website speed relates to business outcomes across different types of sites and user experiences.
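
The expected-return argument can be made concrete with a little arithmetic. The following is a minimal sketch, not a method taken from the slides: the function names, parameters, and the simple multiplicative revenue model (sessions × conversion rate × average order value) are all assumptions made for illustration, and any uplift figures fed into it would have to come from your own experiments.

```python
def expected_return(sessions, conversion_rate, avg_order_value,
                    conversion_uplift, order_value_uplift=0.0):
    """Estimate extra revenue from a speed improvement.

    Assumes revenue = sessions * conversion_rate * avg_order_value.
    Uplifts are relative changes (0.10 means +10%) and are hypothetical
    inputs, ideally measured in an A/B test rather than guessed.
    """
    baseline = sessions * conversion_rate * avg_order_value
    improved = (sessions
                * conversion_rate * (1 + conversion_uplift)
                * avg_order_value * (1 + order_value_uplift))
    return improved - baseline


def return_on_investment(extra_revenue, investment_cost):
    """Net gain relative to the cost of the performance work."""
    if investment_cost <= 0:
        raise ValueError("investment_cost must be positive")
    return (extra_revenue - investment_cost) / investment_cost


if __name__ == "__main__":
    # Placeholder inputs for illustration only; replace with measured values.
    gain = expected_return(sessions=1000, conversion_rate=0.02,
                           avg_order_value=50.0, conversion_uplift=0.10)
    print(f"extra revenue: {gain:.2f}")
    print(f"ROI: {return_on_investment(gain, investment_cost=50.0):.2f}")
```

Framing the case this way keeps the discussion on the business outcome: the page load time only enters through the uplift it is expected to produce, which is exactly the quantity further experiments would need to measure.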