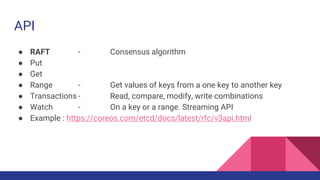

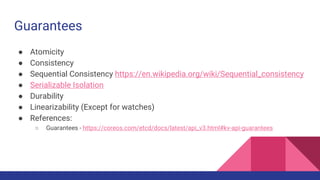

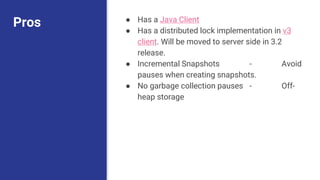

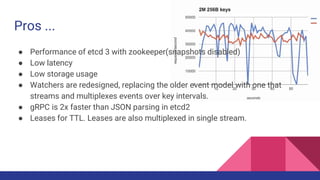

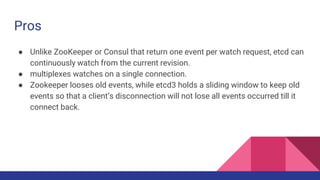

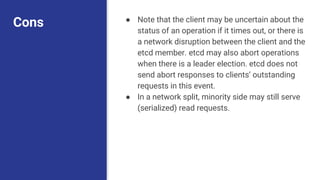

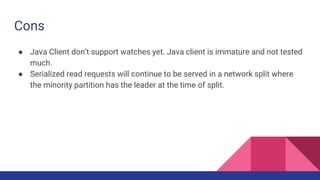

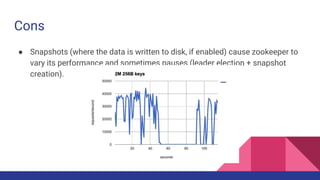

The document compares four distributed coordination systems: etcd v3, ZooKeeper, Consul, and Hazelcast. It outlines the pros and cons of each, covering performance, client support, and snapshot behavior. Which system is appropriate depends on the project's specific requirements and on the developer ecosystem it targets.