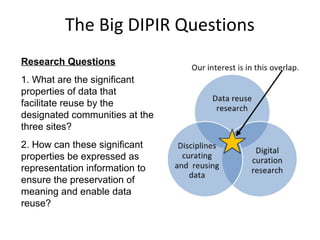

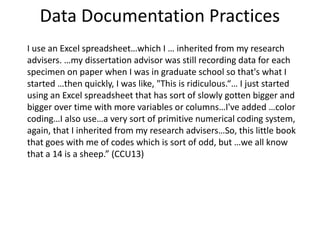

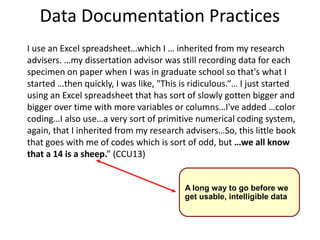

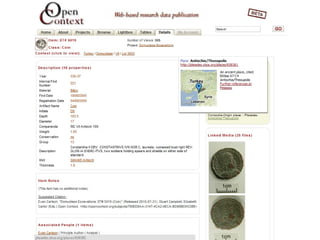

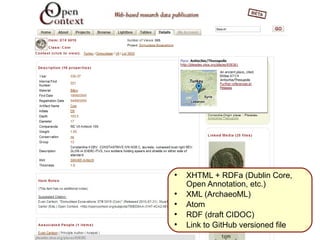

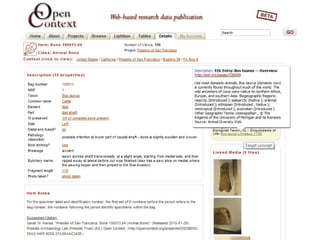

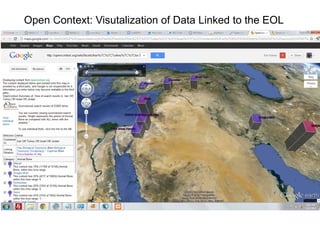

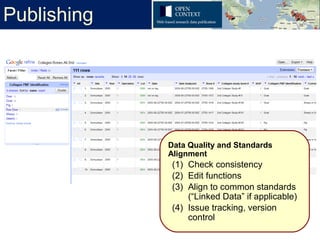

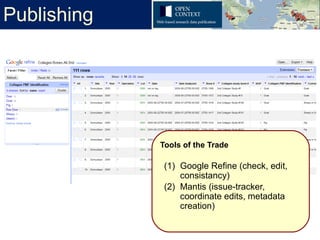

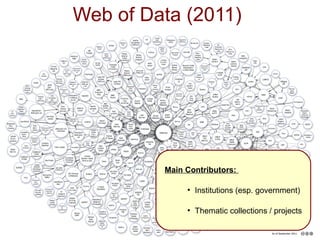

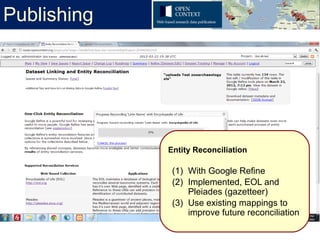

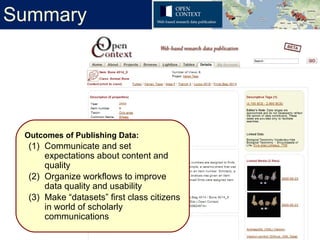

The document discusses the challenges of data sharing in archaeology, focusing on the 'Thousand Flowers' project, which aims to publish high-quality, open-access archaeological data. It highlights issues such as complexity, scalability, and ethics, as well as the need for better data documentation and usability practices. The project emphasizes linking data to broader networks, improving workflows, and evolving publication standards to better fit modern research needs.