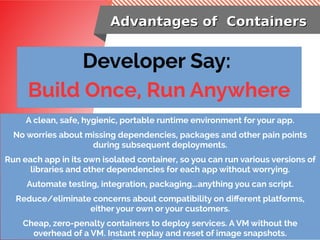

The document discusses running a JBoss EAP cluster on OpenShift. It introduces containers and Docker as a way to package and run applications. OpenShift is presented as a way to automate deployment and scaling of containerized applications on Kubernetes. The document demonstrates deploying a simple Java application using JBoss EAP on OpenShift, configuring the cluster, and enabling auto-scaling based on CPU usage.
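The CPU-based auto-scaling mentioned above can be illustrated with a minimal sketch of the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents: the desired replica count is the current count scaled by the ratio of observed to target CPU utilization, rounded up and clamped to the configured bounds. The function name, parameter names, and the numbers below are hypothetical and do not come from the slides. The real autoscaler also applies a tolerance band and stabilization logic that this sketch omits.

```python
import math


def desired_replicas(current_replicas: int,
                     current_cpu_percent: float,
                     target_cpu_percent: float,
                     min_replicas: int,
                     max_replicas: int) -> int:
    """Return the replica count suggested by the proportional scaling rule.

    Simplified illustration only: no tolerance band, no cooldown handling.
    """
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    # Scale the current replica count by observed/target utilization, rounding up.
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    # Clamp the result to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical values: 2 pods averaging 90% CPU against a 60% target.
scale_up = desired_replicas(2, 90, 60, min_replicas=1, max_replicas=5)
# Hypothetical values: 4 pods averaging 20% CPU against the same target.
scale_down = desired_replicas(4, 20, 60, min_replicas=1, max_replicas=5)
print(scale_up, scale_down)
```

Clamping after rounding keeps the result inside the configured bounds even when load spikes far above the target.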

Setting up Cluster

ports:

- containerPort: 8080
  protocol: TCP
- containerPort: 8443
  protocol: TCP
- containerPort: 8778
  protocol: TCP
- name: ping
  containerPort: 8888
  protocol: TCP

[org.jgroups.protocols.openshift.KUBE_PING] (MSC service thread 1-5) namespace [haexample] set; clustering enabled

[org.jgroups.protocols.openshift.KUBE_PING] (MSC service thread 1-4) namespace [haexample] set; clustering enabled

[org.infinispan.remoting.transport.jgroups.JGroupsTransport] (MSC service thread 1-5) ISPN000094: Received new cluster view for channel server: [haexample-2-pxsd9|0] (1) [haexample-2-pxsd9]

Configuring Cluster on OpenShift

It's running!

![Setting up Cluster](https://image.slidesharecdn.com/i3-workshopedbredhat-19-july-2017-170719155026/85/create-auto-scale-jboss-cluster-with-openshift-41-320.jpg)
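The ISPN000094 log line is the quickest way to confirm that the pods actually formed a cluster. JGroups prints a view as `[coordinator|view id] (member count) [member list]`, so the line on the slide shows a single-member cluster coordinated by `haexample-2-pxsd9`. The following is a minimal sketch of extracting those fields from a log line. The helper name `parse_cluster_view` and the `ClusterView` type are hypothetical, and the regular expression assumes the view format shown on the slide.

```python
import re
from typing import List, NamedTuple


class ClusterView(NamedTuple):
    coordinator: str
    view_id: int
    size: int
    members: List[str]


# Matches the view portion, e.g. "[haexample-2-pxsd9|0] (1) [haexample-2-pxsd9]".
VIEW_RE = re.compile(r"\[([^|\]]+)\|(\d+)\]\s*\((\d+)\)\s*\[([^\]]*)\]")


def parse_cluster_view(log_line: str) -> ClusterView:
    """Extract coordinator, view id, size, and members from a view log line."""
    match = VIEW_RE.search(log_line)
    if match is None:
        raise ValueError("no JGroups view found in log line")
    coordinator, view_id, size, members = match.groups()
    member_list = [m.strip() for m in members.split(",") if m.strip()]
    return ClusterView(coordinator, int(view_id), int(size), member_list)


# The log line from the slide, joined back onto one line.
line = (
    "[org.infinispan.remoting.transport.jgroups.JGroupsTransport] "
    "(MSC service thread 1-5) ISPN000094: Received new cluster view "
    "for channel server: [haexample-2-pxsd9|0] (1) [haexample-2-pxsd9]"
)
view = parse_cluster_view(line)
print(view.coordinator, view.view_id, view.size, view.members)
```

After scaling up, the member count in parentheses should grow to match the number of running pods. If each pod keeps reporting a view of size 1, the pods are not discovering each other.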