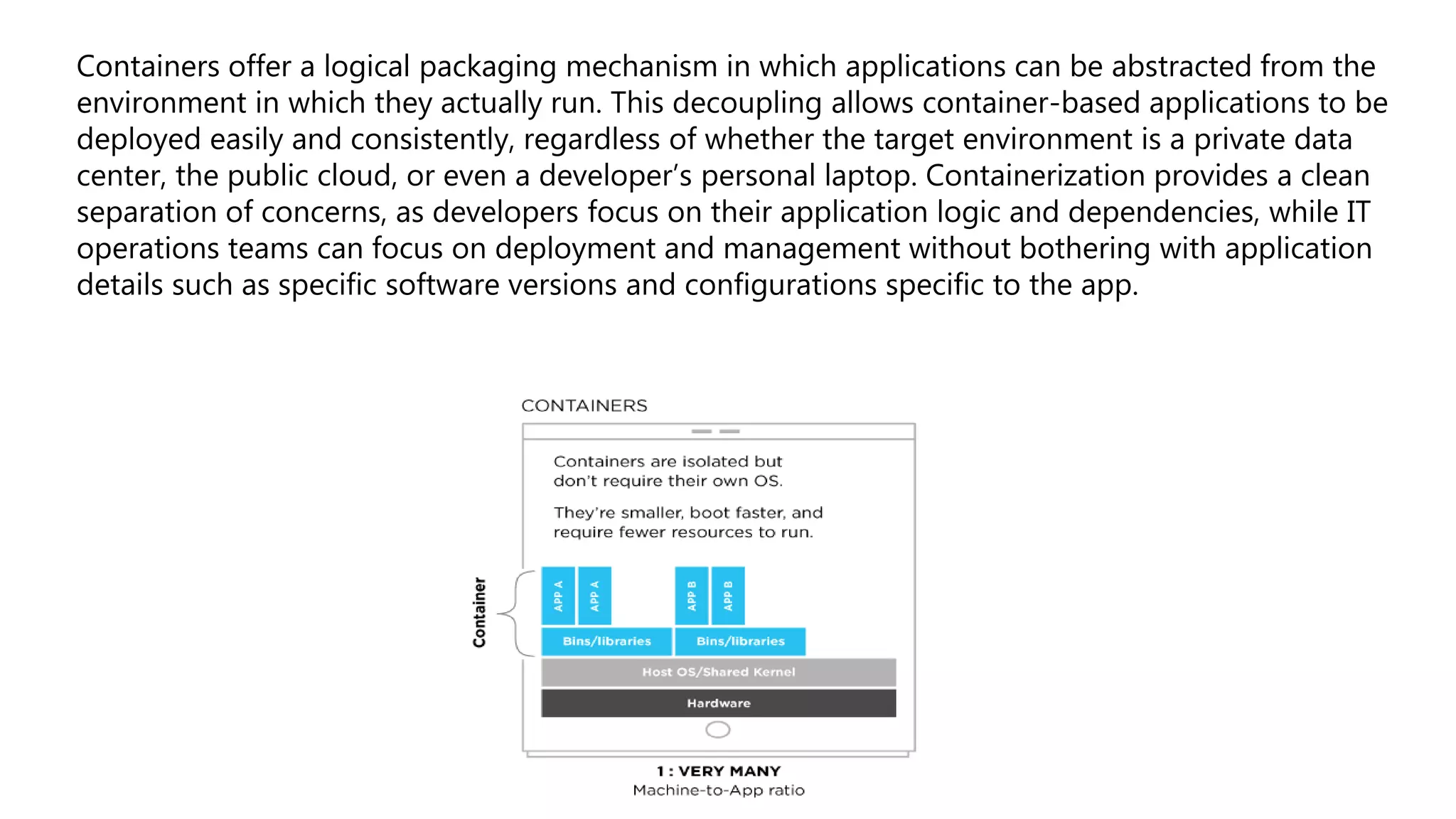

Containers provide a lightweight, consistent way to package applications and decouple them from the environments they run in, which speeds up deployment and improves productivity. Developers manage application dependencies inside the container, while IT operations teams focus on deployment and management; this separation reduces bugs caused by differences between environments. Containerization suits service-based architectures, where splitting an application into components with independently managed resources improves reliability and efficiency.

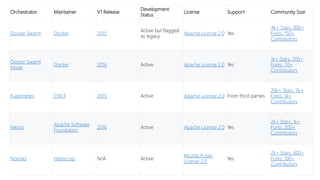

![Comparison of Docker Swarm, Docker Swarm mode, Kubernetes, Mesos and Nomad](https://image.slidesharecdn.com/containerization-180406141131/85/Containerization-6-320.jpg)

| Features | Docker Swarm | Docker Swarm mode | Kubernetes | Mesos | Nomad |
| --- | --- | --- | --- | --- | --- |
| Scheduler architecture | Monolithic | Shared state [source required] | Shared state | Two-level | Shared state |
| Container agnostic | No | No | Yes, Docker and rkt | Yes, Docker, rkt and other drivers | Yes, Docker, rkt and other drivers |
| Service discovery | No native support, requires third parties | Native support | Native support with an intra-cluster DNS, or using environment variables | Native support with Mesos-DNS | Requires Consul, but easy integration is provided |
| Secret management | No native support [source required] | Native support with Docker Secret Management | Native support with secret objects | No native support, depends on the framework | Requires Vault, but easy integration is provided |
| Configuration management | Through env variables in Compose files | Through env variables in Compose files | Native support through ConfigMap, or by injecting configuration using environment variables | No native support, depends on the framework | Through the env stanza in the job specifications |
| Logging | Requires configuring a logging driver and forwarding to a third party such as the ELK stack | Requires configuring a logging driver and forwarding to a third party such as the ELK stack | Requires forwarding logs to a third party such as the ELK stack | With ContainerLogger or provided by the frameworks | With the logs stanza, which configures where to store the container logs |
| Monitoring | Requires a third party to keep track of the status of all containers | Requires a third party to keep track of the status of all containers | With Heapster providing a base platform that sends data to a storage backend | Sends observability metrics to a third-party monitoring dashboard | By outputting resource data to statsite and statsd |
| High availability | Native support by creating multiple managers | Native support from the distribution of manager nodes | Native support by replicating the master in an HA cluster | Native support from having multiple masters with ZooKeeper | Native support from the distribution of server nodes |
| Load balancing | No native support [source required] | By exposing service ports to a load balancer | External load balancer placed automatically in front of a service configured for it | Provided by the selected framework, such as Marathon | Can use the Consul integration to do load balancing through third parties |
| Networking | Uses Docker networking facilities | Uses Docker networking facilities | Requires third parties to form an overlay network | Requires third parties for networking solutions | No native support for overlay networks, but handles the exposed services through the network stanza |
| Application definition | Uses Docker Compose files | Can use the experimental stack command to read the Docker Compose format | Uses YAML to define the different objects e.g. | Depends on the framework | Uses HCL, a HashiCorp-specific language similar to JSON |
| Deployment | No deployment strategies, only applies the Docker Compose file on a cluster [source required] | Supports rolling updates in a service, applied on image update; supports roll-back | Native support for deployment with the Deployment definition | Depends on the framework | Natively supports multiple deployment strategies such as rolling upgrades and canary deployments |
| Auto-scaling | No [source required] | No, but easy manual scaling is available | Native support for autoscaling pods within a given range | Depends on the framework | Only available through HashiCorp's private platform Atlas |
| Self-healing | No [source required] | Yes | Yes | Depends on the framework | Yes |
| Stateful support | Through the use of data volumes | Through the use of data volumes | Through StatefulSets, or using persistent volumes | Through the creation of persistent volumes | Using Docker volumes |
| Development environment | Can use the same or similar Docker Compose files to create the dev environment | Can use the same or similar Docker Compose files to create the dev environment | Can use minikube to quickly create a single-node Kubernetes cluster as the dev environment | Depends on the framework, but a Mesos cluster can be installed locally | By running a single Nomad agent that is both server and client to create the dev environment |
| Documentation | Initially confusing due to the change from Docker Swarm to Docker Engine Swarm mode | Initially confusing due to the change from Docker Swarm to Docker Engine Swarm mode | Sometimes difficult to search due to the change of documentation platform (GitHub to kubernetes.io) and then of organisation (from user-guide/ to tasks/, tutorials/ and concepts/ | | |
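
The Auto-scaling row notes that Kubernetes can autoscale pods "within a given range". As a rough illustration of what that means, the sketch below applies the replica formula described in the Kubernetes Horizontal Pod Autoscaler documentation (desired = ceil(current replicas × current metric / target metric)) and then clamps the result to a minimum and maximum. The function name, parameters and sample numbers are hypothetical, and the real autoscaler adds details such as a tolerance band and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Simplified sketch of range-bounded autoscaling (hypothetical helper).

    Scales the replica count in proportion to how far the observed metric
    is from its target, then clamps the result to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")

    # Proportional scaling, rounded up so load is never under-provisioned.
    raw = math.ceil(current_replicas * current_metric / target_metric)

    # Enforce the configured range.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods at 90% average CPU with a 60% target, range 2..10.
    print(desired_replicas(4, 90, 60, 2, 10))
```

With these sample values the load is 1.5 times the target, so the sketch asks for 6 pods; a much higher load would be capped at the maximum of 10, and a very low load would stop at the minimum of 2.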