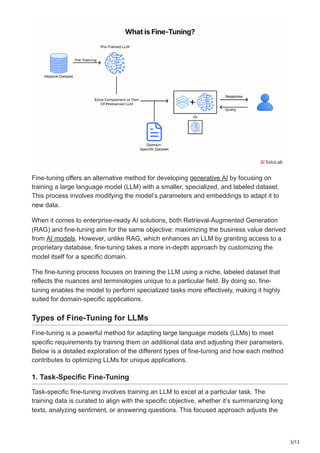

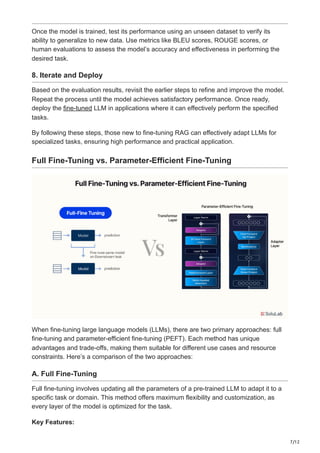

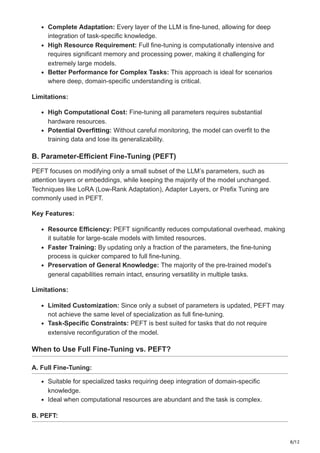

The document compares retrieval-augmented generation (RAG) and fine-tuning, two techniques for enhancing large language models (LLMs), and describes how each works and where each applies. RAG retrieves relevant information from external data sources at query time and passes it to the model, which supports up-to-date, accurate responses without retraining. Fine-tuning instead continues training the model on a specialized dataset, updating its parameters to adapt it to a specific task or domain. The choice between the two depends on project requirements and available resources, and the approaches can also be combined in a single system.
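A minimal sketch of the retrieve-then-prompt step that distinguishes RAG, under stated assumptions: a toy bag-of-words cosine similarity stands in for a real embedding model, the names `embed`, `retrieve`, and `build_prompt` are hypothetical, and the actual LLM call is omitted. The model's parameters are never modified here; external knowledge reaches the model only through the prompt, whereas fine-tuning would update the weights themselves.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for an embedding model: lowercase term counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank the external documents by similarity to the query; keep the top k.
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Inject the retrieved passages into the prompt sent to the (unchanged) LLM.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "the refund policy allows returns within 30 days",
        "our office opens at 9 am",
        "shipping takes 5 business days",
    ]
    print(build_prompt("what is the refund policy", docs, k=1))
```

Updating the knowledge base here means editing `docs`, with no retraining; a production system would swap in a real embedding model and a vector index.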