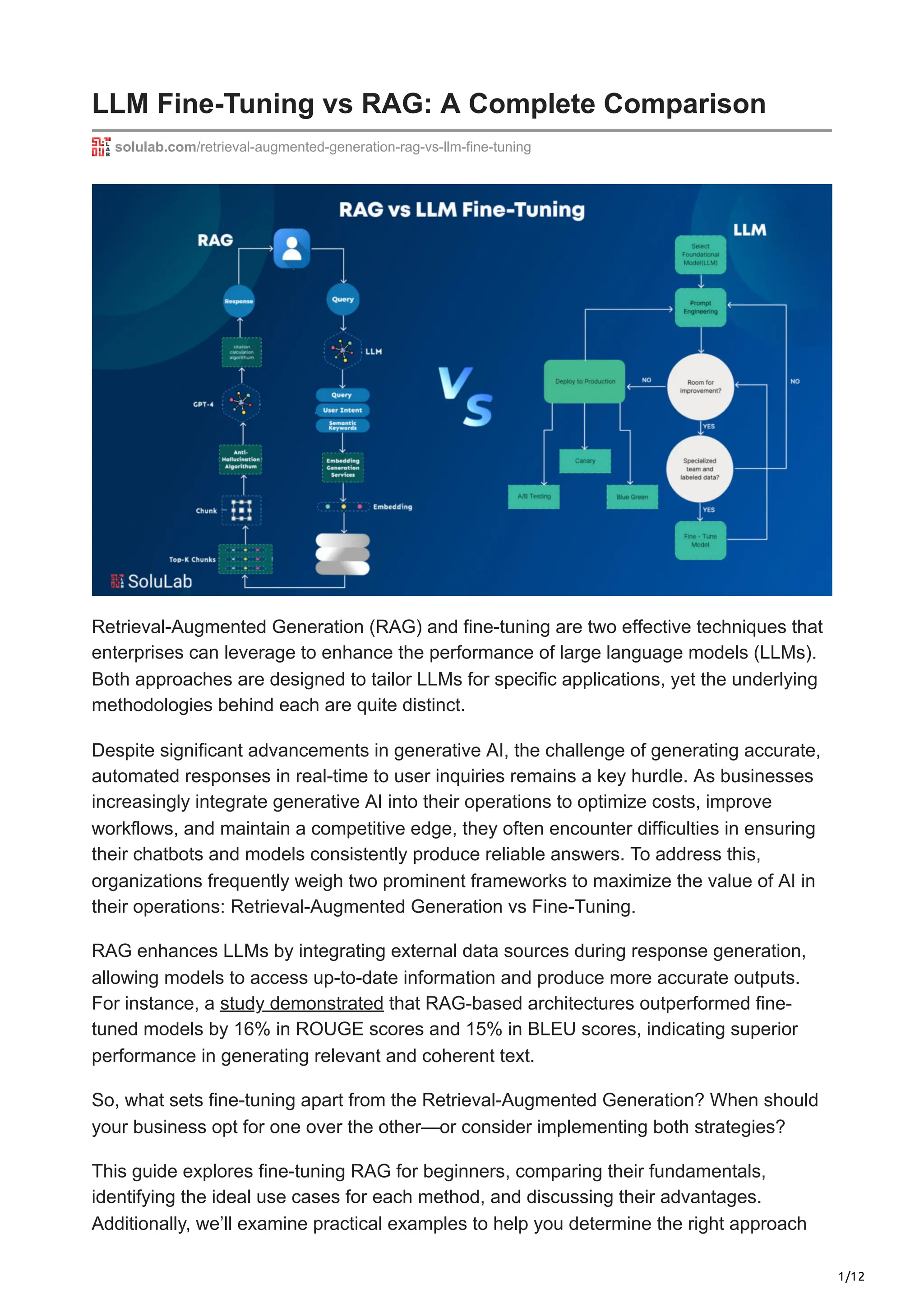

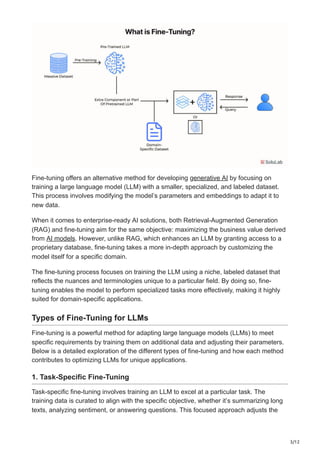

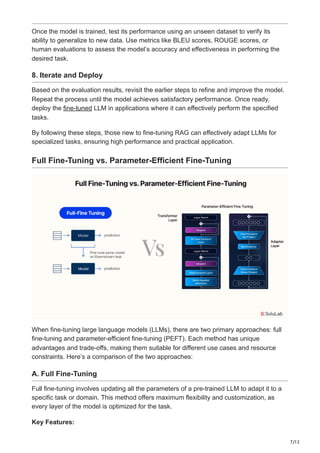

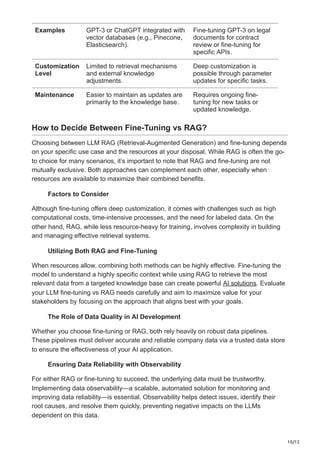

The document compares retrieval-augmented generation (RAG) and fine-tuning as techniques for enhancing large language models (LLMs) in enterprise applications. RAG retrieves relevant information from external data sources at query time and supplies it to the model as context for generating a response, while fine-tuning adapts an LLM by further training it on specialized datasets for domain-specific tasks. It discusses the complexities and advantages of each method, offers guidance on when to choose one over the other, and emphasizes the importance of data quality.
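To make the RAG flow concrete, here is a minimal sketch of its retrieval step: score stored documents against a query, keep the top matches, and prepend them to the prompt as context. It assumes a toy bag-of-words cosine similarity in place of the embedding model and vector store a production system would typically use. `retrieve` and `build_prompt` are hypothetical helpers, and the call to the LLM itself is omitted. Fine-tuning is not shown because it changes model weights through training rather than through prompt construction.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric tokens (toy tokenizer, an assumption).
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Hypothetical retriever: rank documents by similarity to the query, keep top k.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Augment the user's question with the retrieved passages as grounding context.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    knowledge_base = [
        "The refund window is 30 days from delivery.",
        "Support is available 9am to 5pm on weekdays.",
        "Enterprise plans include single sign-on.",
    ]
    print(build_prompt("How many days is the refund window?", knowledge_base))
```

Because the knowledge base is consulted at query time, updating a document changes the next answer without retraining, which is the main operational contrast with fine-tuning. It also means answer quality depends directly on the quality of the stored documents.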