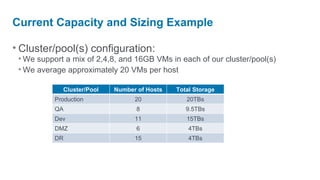

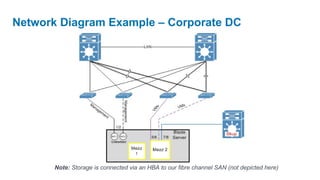

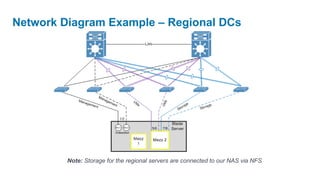

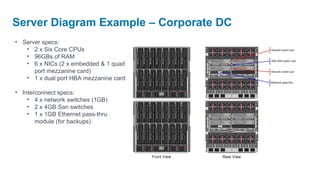

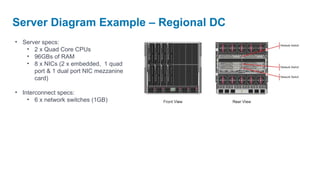

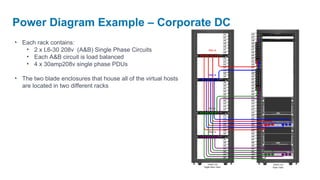

The document discusses the design and implementation of scalable private and public cloud environments, emphasizing critical design areas such as capacity planning, virtual platforms, and datacenter locations. It outlines specific guidelines for network and storage configurations, server hardware requirements, and power design, and explains why monitoring and management solutions matter. It also stresses the need to document the design process and describes the challenges of managing virtual machine sprawl.