1) Autonomous vehicles must balance supercomputer-level computational complexity, real-time performance, and functional safety.

2) Cyber-physical systems rely on four pillars: connectivity, monitoring, prediction, and self-optimization.

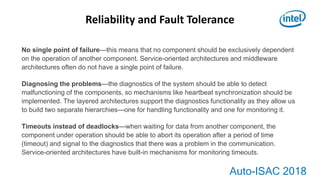

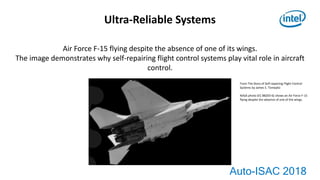

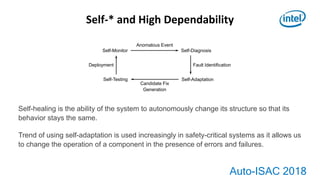

3) Ultra-reliable systems require properties such as self-healing: the ability to autonomously change their own structure so that they keep their intended behavior despite component failures.
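As an illustration only, the self-healing idea can be sketched as a channel that detects a failed component and promotes a redundant spare, so callers keep receiving readings. The names `Component` and `SelfHealingChannel` and the `lidar_a`/`lidar_b` sensors are hypothetical. Simple failover to a spare is one assumed way of "changing structure"; real vehicle systems use far more elaborate fault detection and reconfiguration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Component:
    """Hypothetical redundant component, e.g. one sensor channel."""
    name: str
    read: Callable[[], Optional[float]]  # returns None when the component has failed


@dataclass
class SelfHealingChannel:
    """Keeps delivering readings by swapping failed components for spares at runtime."""
    active: Component
    spares: List[Component] = field(default_factory=list)
    log: List[str] = field(default_factory=list)

    def sample(self) -> float:
        while True:
            value = self.active.read()
            if value is not None:
                return value  # healthy path: observable behavior is unchanged
            # Failure detected: reconfigure by promoting the next spare.
            if not self.spares:
                raise RuntimeError("no healthy components left")
            failed = self.active
            self.active = self.spares.pop(0)
            self.log.append(f"{failed.name} failed; switched to {self.active.name}")


# Usage: the primary sensor is simulated as permanently failed.
primary = Component("lidar_a", lambda: None)
backup = Component("lidar_b", lambda: 12.5)
channel = SelfHealingChannel(active=primary, spares=[backup])
print(channel.sample())  # 12.5
print(channel.log)       # ['lidar_a failed; switched to lidar_b']
```

The caller of `sample()` never sees the failure; only the internal structure (which component is active) changes, which mirrors the "change structure to maintain behavior" definition above.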