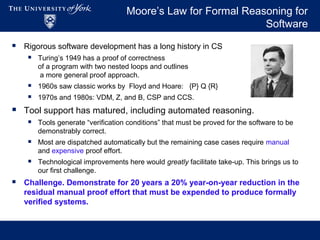

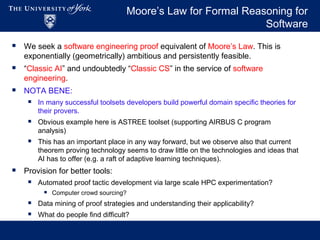

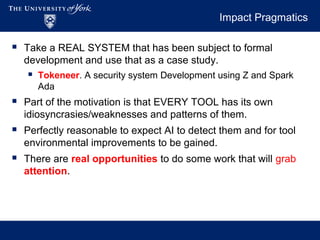

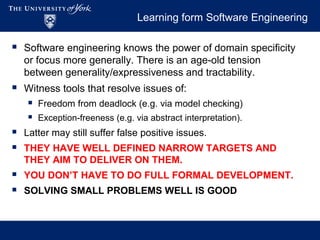

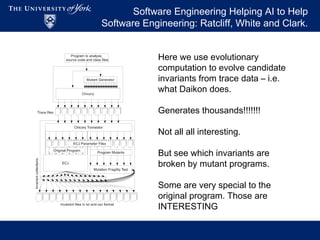

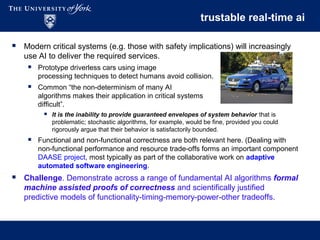

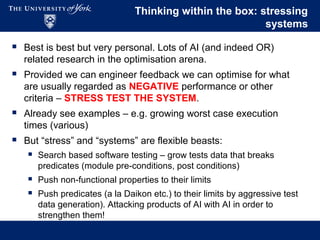

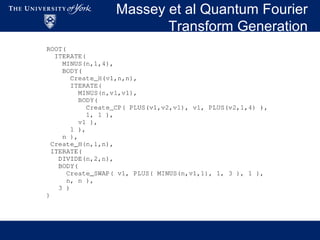

This document discusses challenges at the intersection of software engineering (SE) and artificial intelligence (AI) over the next 20 years. It proposes several challenges, including 1) demonstrating a 20% year-over-year reduction in the manual proof effort required for formally verified systems; 2) developing trustworthy real-time AI algorithms with proofs of correctness; and 3) identifying high-impact uses of abundant cloud computing resources for SE and AI problems. It also discusses challenges around low-power systems, stress-testing systems, and generating new algorithms for quantum computing.
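The first challenge compounds, so its long-run effect is larger than "20%" suggests. The sketch below assumes the 20% reduction is sustained every year across the full 20-year horizon. The document does not say how long the rate must hold, so that is an assumption. It also assumes today's effort is normalized to 1.0. The function name `remaining_effort` is hypothetical and used only for illustration.

```python
def remaining_effort(initial_effort: float, annual_reduction: float, years: int) -> float:
    """Manual proof effort left after `years` of compounding reductions.

    Assumption (not from the source): each year's effort is
    (1 - annual_reduction) times the previous year's effort.
    """
    if not 0.0 <= annual_reduction < 1.0:
        raise ValueError("annual_reduction must be in [0, 1)")
    # Geometric decay: E_n = E_0 * (1 - r) ** n
    return initial_effort * (1.0 - annual_reduction) ** years


if __name__ == "__main__":
    # Hypothetical normalization: today's manual proof effort = 1.0 unit.
    fraction_left = remaining_effort(1.0, 0.20, 20)
    print(f"effort remaining after 20 years: {fraction_left:.4f}")
    print(f"overall reduction factor: {1 / fraction_left:.1f}x")
```

Under these assumptions, 0.8^20 leaves roughly 1.15% of today's manual effort, about an 87-fold overall reduction. A linear reading ("20 percentage points per year") would instead reach zero after five years, which is why the compounding interpretation is the one modeled here.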