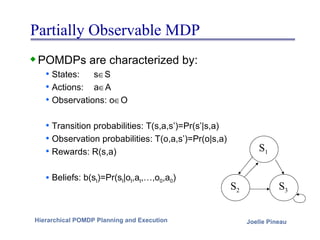

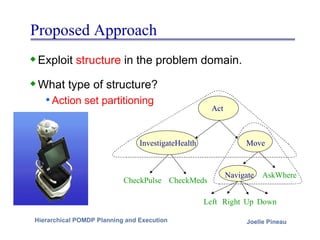

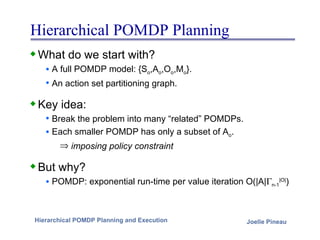

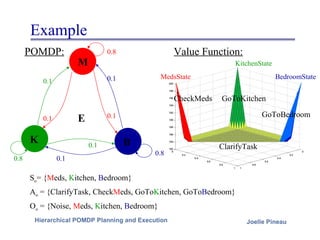

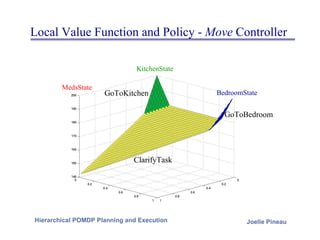

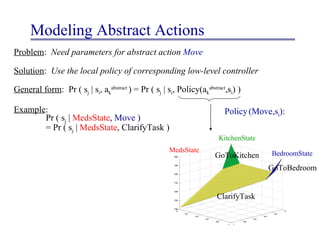

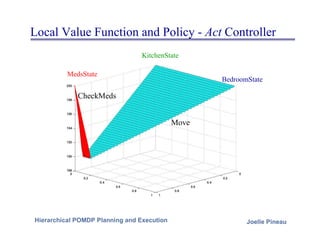

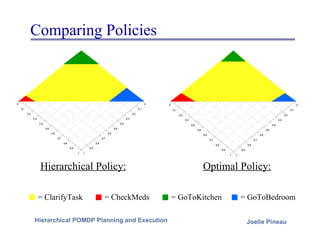

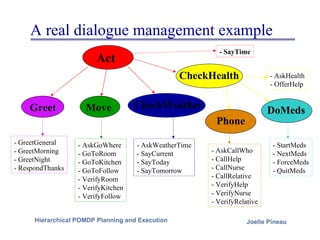

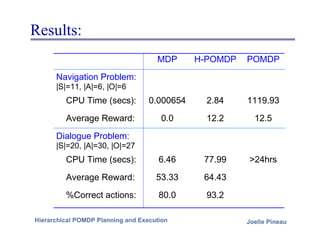

The document proposes a hierarchical approach to planning in partially observable Markov decision processes (POMDPs) that addresses the exponential complexity of exact POMDP planning. The approach breaks the original problem into a hierarchy of smaller, related POMDPs, each restricted to a subset of the full action space, and imposes constraints on the policies across them. By exploiting structure in the problem domain, it aims to find near-optimal policies without solving the full POMDP directly. As an example, a medical-assistance POMDP is split into controllers for different areas. These controllers communicate through abstract actions, each modeled from the local policy of the lower-level controller it invokes.
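The structural part of this decomposition can be illustrated with a small sketch. It is not the document's algorithm. It only shows how a task hierarchy might give each subtask a local action set (its own primitive actions plus one abstract action per child), and why planning proceeds bottom-up: a parent's abstract actions are modeled from its children's local policies, so those policies must be computed first. The class `Subtask`, the functions `solve_order` and `check_partition`, the `do_<child>` naming for abstract actions, and all action names are hypothetical. The sketch also assumes, for simplicity, that the hierarchy is a tree in which every primitive action belongs to exactly one subtask. The actual POMDP solving and abstract-action modeling are left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Subtask:
    """One node of a hypothetical task hierarchy (one small POMDP controller)."""
    name: str
    primitives: list[str] = field(default_factory=list)
    children: list[Subtask] = field(default_factory=list)

    def local_actions(self) -> list[str]:
        # Local action set: own primitive actions plus one abstract action
        # per child (hypothetical "do_<child>" naming).
        return self.primitives + [f"do_{c.name}" for c in self.children]


def solve_order(root: Subtask) -> list[str]:
    """Post-order traversal: every child is planned before its parent.

    A parent's abstract actions are modeled from its children's local
    policies, so those policies must exist before the parent is solved.
    """
    order: list[str] = []

    def visit(node: Subtask) -> None:
        for child in node.children:
            visit(child)
        order.append(node.name)

    visit(root)
    return order


def check_partition(root: Subtask, full_actions: set[str]) -> bool:
    """Assumption of this sketch: each primitive action is owned by exactly
    one subtask, and together the subtasks cover the full action space."""
    seen: list[str] = []

    def collect(node: Subtask) -> None:
        seen.extend(node.primitives)
        for child in node.children:
            collect(child)

    collect(root)
    return len(seen) == len(set(seen)) and set(seen) == full_actions


# Hypothetical medical-assistance hierarchy; all names are illustrative only.
remind = Subtask("remind", primitives=["remind_medication", "remind_appointment"])
navigate = Subtask("navigate", primitives=["move_left", "move_right", "stop"])
root = Subtask("assist", primitives=["ask_user"], children=[remind, navigate])

print(root.local_actions())  # ['ask_user', 'do_remind', 'do_navigate']
print(solve_order(root))     # ['remind', 'navigate', 'assist']
```

Each controller in this sketch reasons over only a handful of actions. This reflects the source of the savings the document describes, although the actual gains depend on how the state and observation spaces are also reduced for each subtask.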