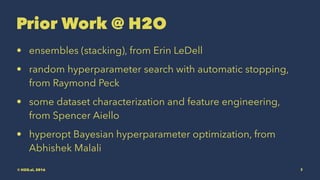

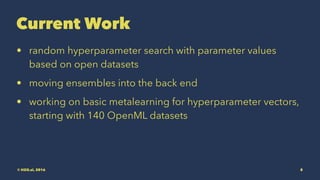

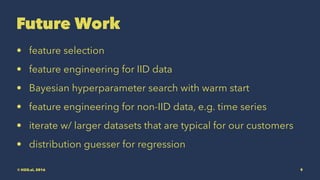

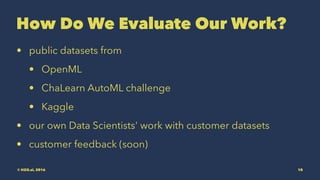

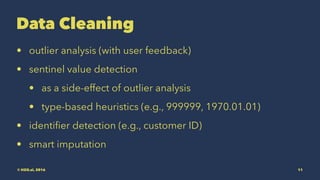

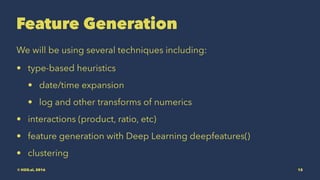

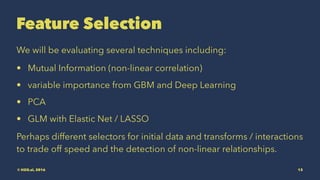

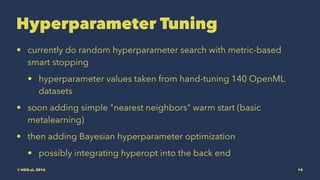

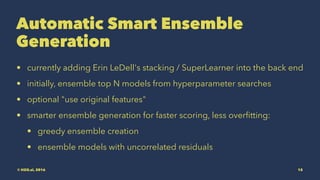

The H2O AutoML roadmap outlines how H2O plans to automate data preparation and model training for both novice and expert users. It focuses on feature generation, feature selection, and hyperparameter tuning. Current and planned work includes refining feature engineering techniques, guided by customer feedback, and evaluating them on public datasets. The roadmap also describes plans to extend H2O's AutoML offerings with metalearning and the automatic creation of ensemble models.