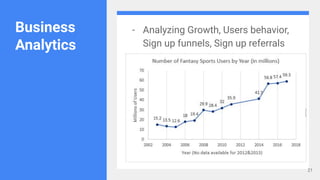

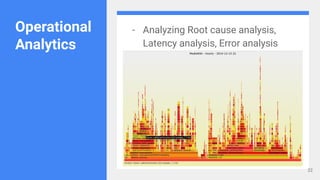

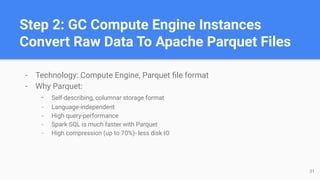

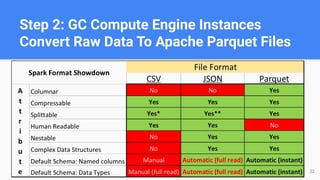

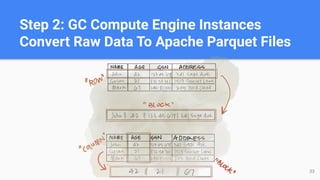

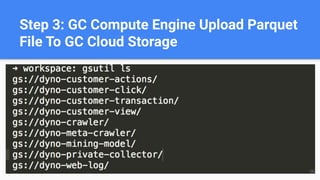

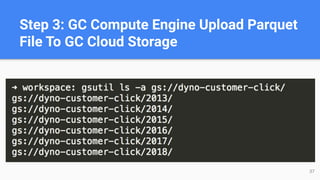

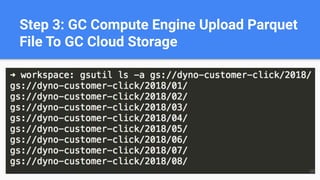

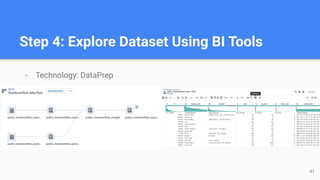

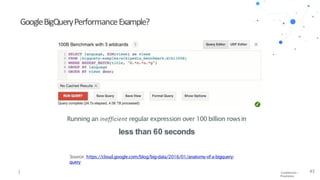

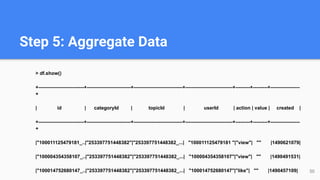

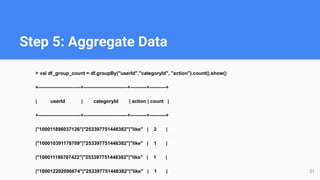

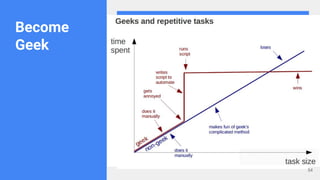

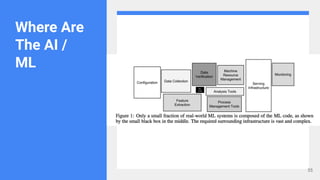

This document summarizes Tu Pham's presentation on building an end-to-end business intelligence system on Google Cloud. The pipeline collects raw user data from partners on Compute Engine, converts it into Apache Parquet files, stores those files in Cloud Storage, and analyzes them with tools such as Dataprep, BigQuery, and Grafana. The system aggregates the data into metrics such as unique users per topic and average user engagement. Tu Pham emphasizes principles such as keeping things simple, separating real-time and batch workflows, and optimizing costs.
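As a rough illustration of the aggregation step, the sketch below computes the two metrics named in the summary from a handful of in-memory records. It is not the presenter's implementation: the field names (`user_id`, `topic`, `engagement_sec`), the sample rows, and the definition of "average user engagement" (total engagement time per user, averaged over users) are all assumptions made for this example. In the system described, the equivalent logic would presumably run over the Parquet data in BigQuery rather than in plain Python.

```python
from collections import defaultdict

# Hypothetical raw events; the schema is an assumption, not taken from the talk.
events = [
    {"user_id": "u1", "topic": "sports", "engagement_sec": 30},
    {"user_id": "u2", "topic": "sports", "engagement_sec": 90},
    {"user_id": "u1", "topic": "sports", "engagement_sec": 60},
    {"user_id": "u3", "topic": "news", "engagement_sec": 20},
]


def unique_users_per_topic(rows):
    """Count distinct user IDs seen for each topic."""
    users_by_topic = defaultdict(set)
    for row in rows:
        users_by_topic[row["topic"]].add(row["user_id"])
    return {topic: len(ids) for topic, ids in users_by_topic.items()}


def average_engagement_per_user(rows):
    """Average of per-user total engagement (assumed definition)."""
    total_by_user = defaultdict(int)
    for row in rows:
        total_by_user[row["user_id"]] += row["engagement_sec"]
    if not total_by_user:
        return 0.0
    return sum(total_by_user.values()) / len(total_by_user)


if __name__ == "__main__":
    print(unique_users_per_topic(events))
    print(average_engagement_per_user(events))
```

Using a set per topic removes repeat visits by the same user, which is what separates a unique-user count from a raw event count.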