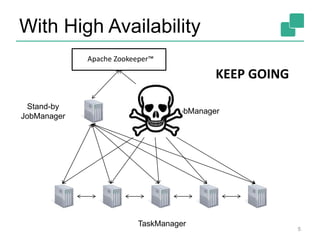

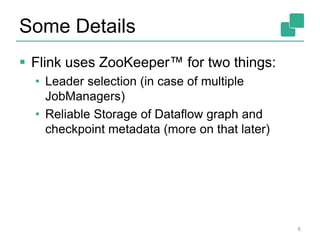

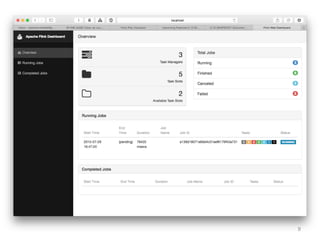

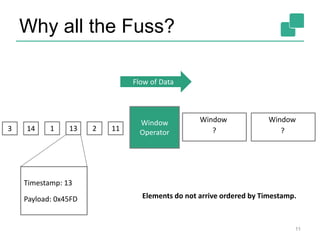

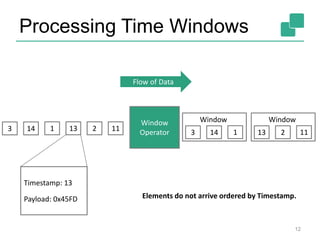

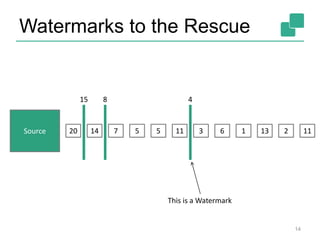

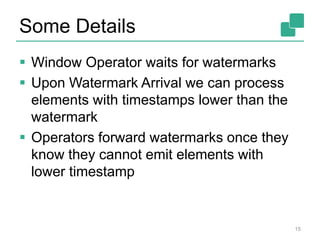

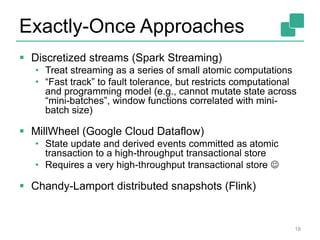

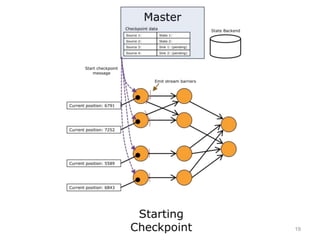

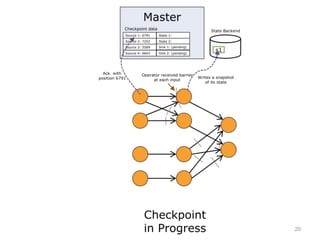

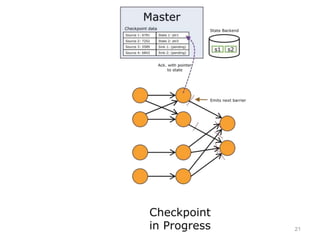

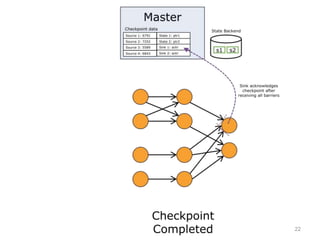

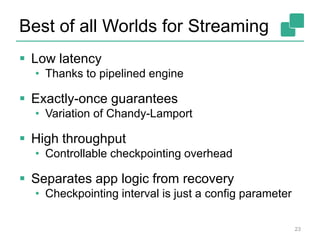

The upcoming Apache Flink 0.10 release will include features such as high availability for the JobManager through ZooKeeper, live monitoring of accumulators and metrics, improved event-time and windowing support based on watermarks, and exactly-once fault tolerance through distributed snapshots. A demo will also show how this fault tolerance keeps state consistent when failures occur. Further improvements are still being worked on for this release.
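The watermark idea behind event-time windowing can be illustrated without Flink itself. The following is a minimal plain-Python sketch, not Flink's API. It assumes tumbling windows, a "bounded out-of-orderness" watermark (the largest timestamp seen so far minus a fixed delay), and that elements arriving after their window has already closed are simply dropped. The function name `tumbling_event_time_sums` and its parameters are hypothetical.

```python
from collections import defaultdict


def tumbling_event_time_sums(events, window_size, max_out_of_orderness):
    """Hypothetical sketch: sum values per (key, tumbling window) in event time.

    events: iterable of (timestamp, key, value) in *arrival* order, which may
    differ from timestamp order. A window [start, start + window_size) is
    emitted once the watermark reaches its end; elements for windows that
    have already closed are treated as late and dropped.
    """
    pending = defaultdict(int)     # (key, window_start) -> running sum
    fired = []                     # (key, start, end, sum) in firing order
    dropped = []                   # late elements
    watermark = float("-inf")      # assumption: watermark starts at -infinity

    for ts, key, value in events:
        start = ts - ts % window_size
        end = start + window_size
        if end <= watermark:       # window already closed: element is late
            dropped.append((ts, key, value))
            continue
        pending[(key, start)] += value
        # Bounded out-of-orderness: trail the max timestamp by a fixed delay.
        watermark = max(watermark, ts - max_out_of_orderness)
        # Fire every pending window whose end the watermark has reached.
        ready = sorted((k for k in pending if k[1] + window_size <= watermark),
                       key=lambda k: (k[1], k[0]))
        for k in ready:
            fired.append((k[0], k[1], k[1] + window_size, pending.pop(k)))

    # End of input acts like a final watermark at +infinity: flush the rest.
    for k in sorted(pending, key=lambda k: (k[1], k[0])):
        fired.append((k[0], k[1], k[1] + window_size, pending.pop(k)))
    return fired, dropped


if __name__ == "__main__":
    # The element with timestamp 7 arrives after 12 but is still counted;
    # the element with timestamp 3 arrives after its window has closed.
    events = [(1, "a", 1), (4, "a", 2), (12, "a", 3), (7, "a", 4),
              (16, "a", 5), (3, "a", 6), (25, "a", 7)]
    fired, dropped = tumbling_event_time_sums(events, window_size=10,
                                              max_out_of_orderness=5)
    print(fired)    # [('a', 0, 10, 7), ('a', 10, 20, 8), ('a', 20, 30, 7)]
    print(dropped)  # [(3, 'a', 6)]
```

The delay parameter trades latency for completeness. A larger `max_out_of_orderness` holds windows open longer and drops fewer late elements, while a smaller one emits results sooner.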