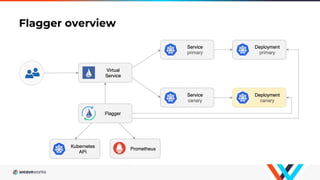

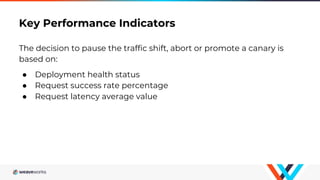

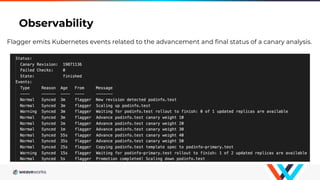

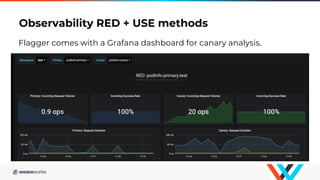

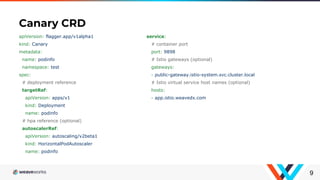

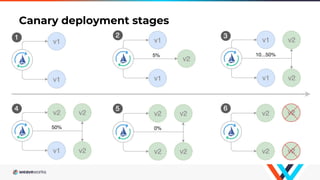

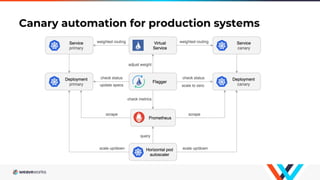

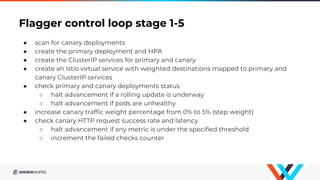

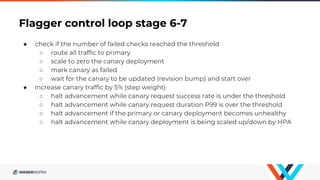

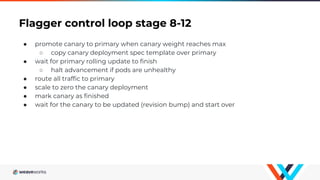

Flagger is a Kubernetes operator that automates canary deployments using Istio routing and Prometheus metrics. It gradually shifts traffic to the canary while measuring key performance indicators such as success rate and latency. If the canary meets the thresholds, it is promoted to primary; if not, traffic is shifted back to the primary and the canary is aborted. To do this, Flagger runs a control loop that advances the canary's share of traffic in steps from 0% to 100%, checking the metrics at each step so that the deployment is fully promoted only once it has proven stable.
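The control loop described above can be illustrated with a small simulation. This is a minimal sketch, not Flagger's actual implementation: the `Metrics` type, the `run_canary` function, and every parameter name and default value (`step_weight`, `max_weight`, `min_success_rate`, `max_latency_ms`, `failure_threshold`) are hypothetical and chosen only for illustration. The sketch also assumes a failed check holds the traffic weight in place until a failure budget is exhausted, and it omits the wait between checks as well as the real calls to Istio and Prometheus.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Metrics:
    success_rate: float  # percentage of successful requests, 0-100
    latency_ms: float    # request latency in milliseconds


def run_canary(
    fetch_metrics: Callable[[int], Metrics],  # hypothetical stand-in for a Prometheus query
    step_weight: int = 10,
    max_weight: int = 100,
    min_success_rate: float = 99.0,
    max_latency_ms: float = 500.0,
    failure_threshold: int = 3,
) -> Tuple[str, List[int]]:
    """Simulate a stepwise canary rollout.

    Returns the final status ("promoted" or "aborted") and the history of
    canary traffic weights, starting at 0.
    """
    if step_weight <= 0:
        raise ValueError("step_weight must be positive")

    weight = min(step_weight, max_weight)  # first traffic shift
    history = [0, weight]
    failed_checks = 0

    while True:
        # A real controller would wait for an analysis interval here.
        m = fetch_metrics(weight)
        healthy = (
            m.success_rate >= min_success_rate
            and m.latency_ms <= max_latency_ms
        )

        if not healthy:
            failed_checks += 1
            if failed_checks >= failure_threshold:
                history.append(0)  # shift all traffic back to primary
                return "aborted", history
            continue  # hold the current weight and check again

        if weight >= max_weight:
            return "promoted", history

        weight = min(weight + step_weight, max_weight)
        history.append(weight)


if __name__ == "__main__":
    # Canary that stays healthy at every step.
    print(run_canary(lambda w: Metrics(99.9, 120.0), step_weight=25))
    # Canary whose success rate drops once it receives half the traffic.
    degrading = lambda w: Metrics(99.9, 120.0) if w < 50 else Metrics(90.0, 120.0)
    print(run_canary(degrading, step_weight=25, failure_threshold=2))
```

In this sketch a healthy canary walks through the weights 0, 25, 50, 75, 100 and is promoted, while the degrading one reaches 50%, fails two consecutive checks, and is rolled back to 0%. Holding the weight on a failed check rather than aborting immediately is a design choice made here so that a single noisy measurement does not cancel the rollout.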