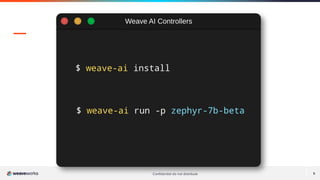

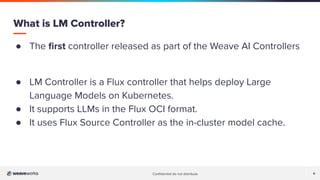

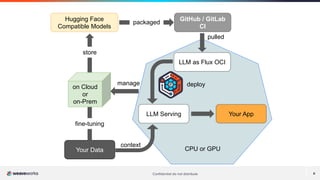

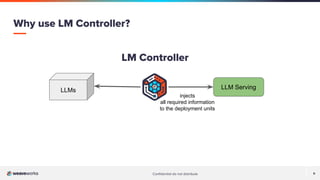

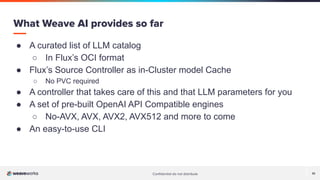

This document describes how to deploy large language models (LLMs) on Kubernetes with the Weave AI platform. It introduces the LM Controller, which simplifies deploying and managing LLMs and gives development teams self-service access to them. Key features include integration with existing DevOps pipelines, a curated LLM catalog, and pre-built engines for various CPU architectures.