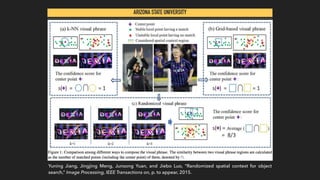

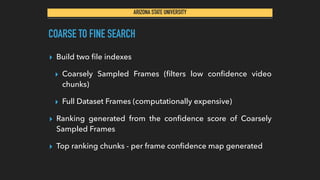

The document presents a method for fast object instance search in videos from a single query example. The method first generates a frame-wise confidence map with Randomized Visual Phrases (RVP), indicating where the target object is likely to appear in each frame. It then applies Max-Path search to improve localization accuracy. This step handles scale variation and remains robust without relying on image segmentation. The algorithm ranks the resulting object trajectories across video chunks by their confidence scores. Coarse-to-fine frame indexing and filtering make it possible to search large video datasets in under 30 seconds. Evaluation on standard datasets shows that the method finds object instances accurately and efficiently from a single example.
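The slides do not show how RVP builds its confidence map. The sketch below illustrates the randomized-partition voting idea under several assumptions:

- Each frame is repeatedly split into a random grid of patches.
- Each patch's bag of visual words is compared with the query's using histogram intersection, which is an assumed similarity measure.
- Every pixel averages the scores of the patches that covered it.
- Local features are already quantized into visual-word IDs.

The function names and parameters (`rvp_confidence_map`, `rows`, `cols`, `rounds`) are hypothetical. The original formulation may normalize or weight patch scores differently.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

Feature = Tuple[int, int, int]  # (x, y, visual_word_id); quantization assumed done


def histogram_intersection(patch: Counter, query: Dict[int, int]) -> int:
    """Similarity of two bag-of-visual-words histograms (assumed measure)."""
    return sum(min(n, query.get(w, 0)) for w, n in patch.items())


def random_cuts(length: int, parts: int, rng: random.Random) -> List[int]:
    """Boundaries that split [0, length) into `parts` random intervals."""
    return [0] + sorted(rng.sample(range(1, length), parts - 1)) + [length]


def rvp_confidence_map(features: List[Feature], query: Dict[int, int],
                       width: int, height: int, rows: int = 3, cols: int = 3,
                       rounds: int = 50, seed: int = 0) -> List[List[float]]:
    """Per-pixel confidence: average patch score over `rounds` random partitions."""
    rng = random.Random(seed)
    conf = [[0.0] * width for _ in range(height)]
    for _ in range(rounds):
        xs = random_cuts(width, cols, rng)
        ys = random_cuts(height, rows, rng)
        for r in range(rows):
            for c in range(cols):
                x0, x1, y0, y1 = xs[c], xs[c + 1], ys[r], ys[r + 1]
                # Each patch bundles the visual words that fall inside it.
                words = Counter(w for x, y, w in features
                                if x0 <= x < x1 and y0 <= y < y1)
                score = histogram_intersection(words, query)
                # Every pixel covered by the patch receives the patch's score.
                for y in range(y0, y1):
                    for x in range(x0, x1):
                        conf[y][x] += score
    # Average the votes over all random partitions.
    return [[v / rounds for v in row] for row in conf]


# Toy 10x10 frame: the query object (visual word 1) sits in the bottom-right corner.
obj = [(x, y, 1) for x in (7, 8, 9) for y in (7, 8, 9)]
clutter = [(1, 1, 2), (2, 3, 2)]
cmap = rvp_confidence_map(obj + clutter, {1: 3}, width=10, height=10)
```

Pixels on the object share a patch with matching features in every round. Background pixels do so only occasionally, so the averaged map peaks around the object.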

![WHY?](https://image.slidesharecdn.com/fastobjectinstancesearchfromoneexample-151111224704-lva1-app6891/85/Fast-Object-Instance-Search-From-One-Example-3-320.jpg)

ARIZONA STATE UNIVERSITY

WHY?

▸ Surveillance

▸ Combining it with AI technologies to help improve crop productivity

▸ Autonomous cars

▸ Robots that can catch moving objects

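![ALGORITHM](https://image.slidesharecdn.com/fastobjectinstancesearchfromoneexample-151111224704-lva1-app6891/85/Fast-Object-Instance-Search-From-One-Example-10-320.jpg)

ALGORITHM

▸ A video is a sequence of frames: $\mathcal{V} = \{F_1, F_2, \dots, F_n\}$, where $F_i \in \mathcal{V}$.

▸ Assumption: object trajectories do not overlap.

▸ The video is divided into chunks: $\mathcal{V} = \{V_1, V_2, \dots, V_m\}$, where $V_i \in \mathcal{V}$.

▸ $\mathcal{T}_i = \{T_{i1}, T_{i2}, \dots, T_{il}\}$ is the set of object trajectories in chunk $V_i$, where $l$ is the total number of object trajectories.

▸ $T_{ij} = \{B_{ij1}, B_{ij2}, \dots, B_{ijk}\}$ is a sequence of per-frame bounding boxes, where $k$ is the total number of frames in trajectory $T_{ij}$.

▸ The best trajectory $T_i^*$ in each chunk is

$$T_i^* = \arg\max_{T_{ij} \in \mathcal{T}_i} s(T_{ij}) \qquad (1)$$

▸ The score of a trajectory is the sum of the scores of its boxes:

$$s(T_{ij}) = \sum_{B \in T_{ij}} s(B) \qquad (2)$$

▸ Once we have the best trajectory $T_i^*$ for each video chunk, we rank these trajectories by score and return the ranked results.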
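Because the trajectory score in equation 2 is a plain sum, the argmax in equation 1 need not enumerate every trajectory. It can be computed with dynamic programming, which is the idea behind Max-Path search. The sketch below is a simplified version in that spirit, not the authors' implementation, and it rests on these assumptions:

- Each chunk supplies a short list of candidate boxes per frame with scores $s(B)$, for example taken from the confidence maps.
- A trajectory uses one box in each of a run of consecutive frames.
- Linked boxes must overlap by at least `min_iou`. This is an illustrative linking rule, not one stated on the slides.

`best_trajectory`, `rank_chunks` and `min_iou` are hypothetical names.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]   # (x0, y0, x1, y1)
Candidate = Tuple[Box, float]             # (box B, score s(B))


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def best_trajectory(chunk: List[List[Candidate]], min_iou: float = 0.3):
    """Return (s(T*), [(frame, box), ...]) for one non-empty chunk."""
    acc: List[List[float]] = []          # acc[t][c]: best score of a path ending at candidate c of frame t
    ptr: List[List[Optional[int]]] = []  # ptr[t][c]: predecessor index in frame t-1, or None
    for t, frame in enumerate(chunk):
        acc.append([])
        ptr.append([])
        for box, score in frame:
            link, gain = None, 0.0       # gain 0.0 means "start a new path here"
            if t > 0:
                for j, (prev_box, _) in enumerate(chunk[t - 1]):
                    if acc[t - 1][j] > gain and iou(box, prev_box) >= min_iou:
                        link, gain = j, acc[t - 1][j]
            acc[t].append(score + gain)  # equation 2: scores add along the path
            ptr[t].append(link)
    # Equation 1: the path may end at any frame, so take the global maximum.
    end_t, end_c = max(
        ((t, c) for t in range(len(acc)) for c in range(len(acc[t]))),
        key=lambda tc: acc[tc[0]][tc[1]],
    )
    boxes = []
    t, c = end_t, end_c
    while c is not None:                 # follow back-pointers to recover T*
        boxes.append((t, chunk[t][c][0]))
        c, t = ptr[t][c], t - 1
    return acc[end_t][end_c], boxes[::-1]


def rank_chunks(chunks: List[List[List[Candidate]]], min_iou: float = 0.3):
    """Best trajectory T_i* of every chunk, ranked by score (highest first)."""
    results = []
    for i, chunk in enumerate(chunks):
        score, boxes = best_trajectory(chunk, min_iou)
        results.append((score, i, boxes))
    results.sort(key=lambda r: r[0], reverse=True)
    return results


# Two toy chunks; each inner list holds one frame's candidate boxes.
chunks = [
    [[((0, 0, 10, 10), 1.0)],
     [((1, 1, 11, 11), 2.0), ((50, 50, 60, 60), 0.5)],
     [((2, 2, 12, 12), 1.5)]],
    [[((0, 0, 10, 10), 0.8)], [((0, 0, 10, 10), -1.0)]],
]
for score, i, boxes in rank_chunks(chunks):
    print(i, score, len(boxes))
```

A path starts fresh whenever no compatible predecessor has a positive score (the `gain = 0.0` case). Combined with taking the global maximum, this lets a trajectory begin and end at any frame. Low-scoring frames at either end are therefore trimmed automatically, as with the second chunk's final box above.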

![OTHER METHODS DEPLOYED](https://image.slidesharecdn.com/fastobjectinstancesearchfromoneexample-151111224704-lva1-app6891/85/Fast-Object-Instance-Search-From-One-Example-14-320.jpg)

OTHER METHODS DEPLOYED

▸ Hessian-Affine detectors [4]

▸ FLANN (Fast Library for Approximate Nearest Neighbors) [5]