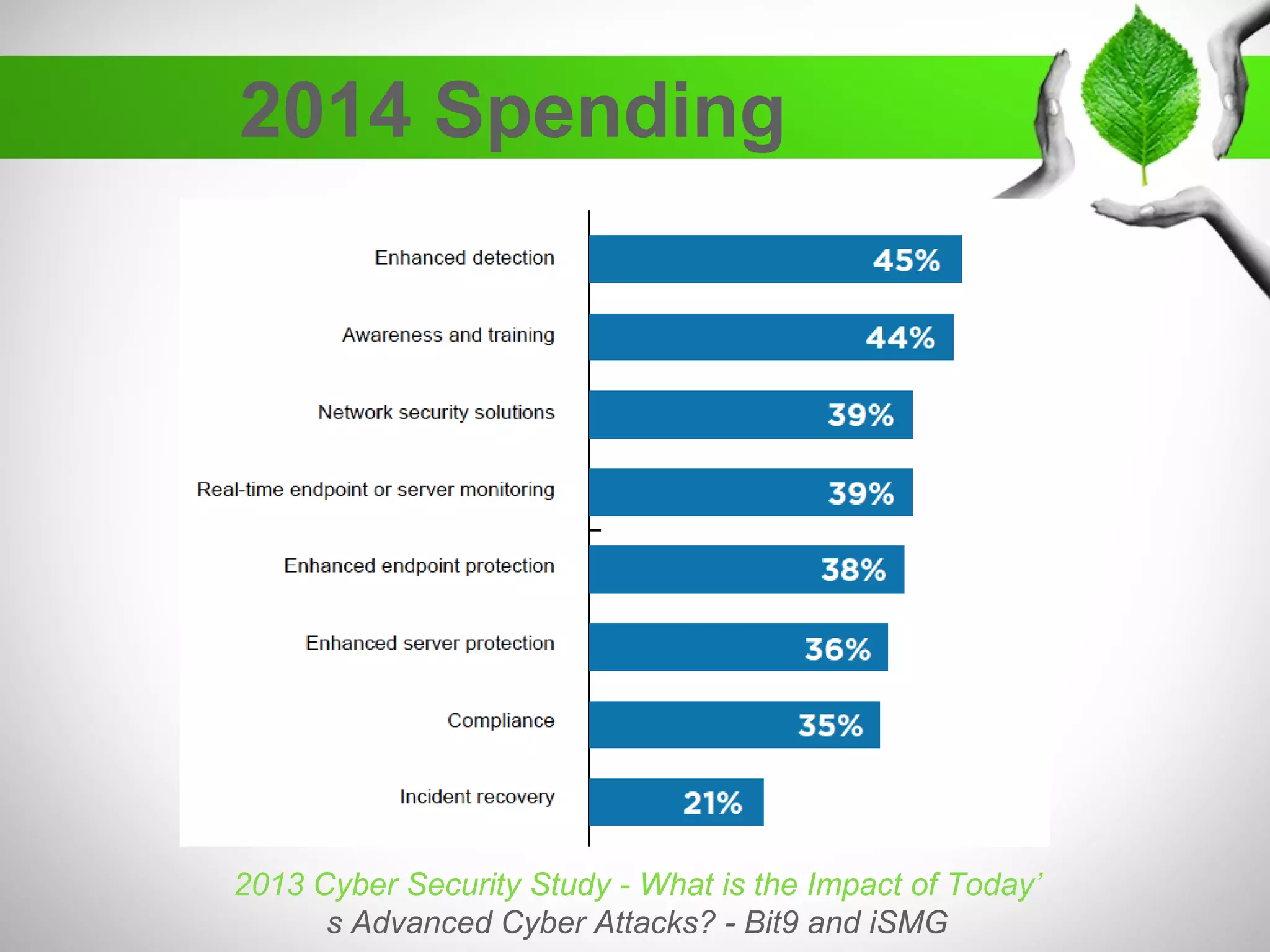

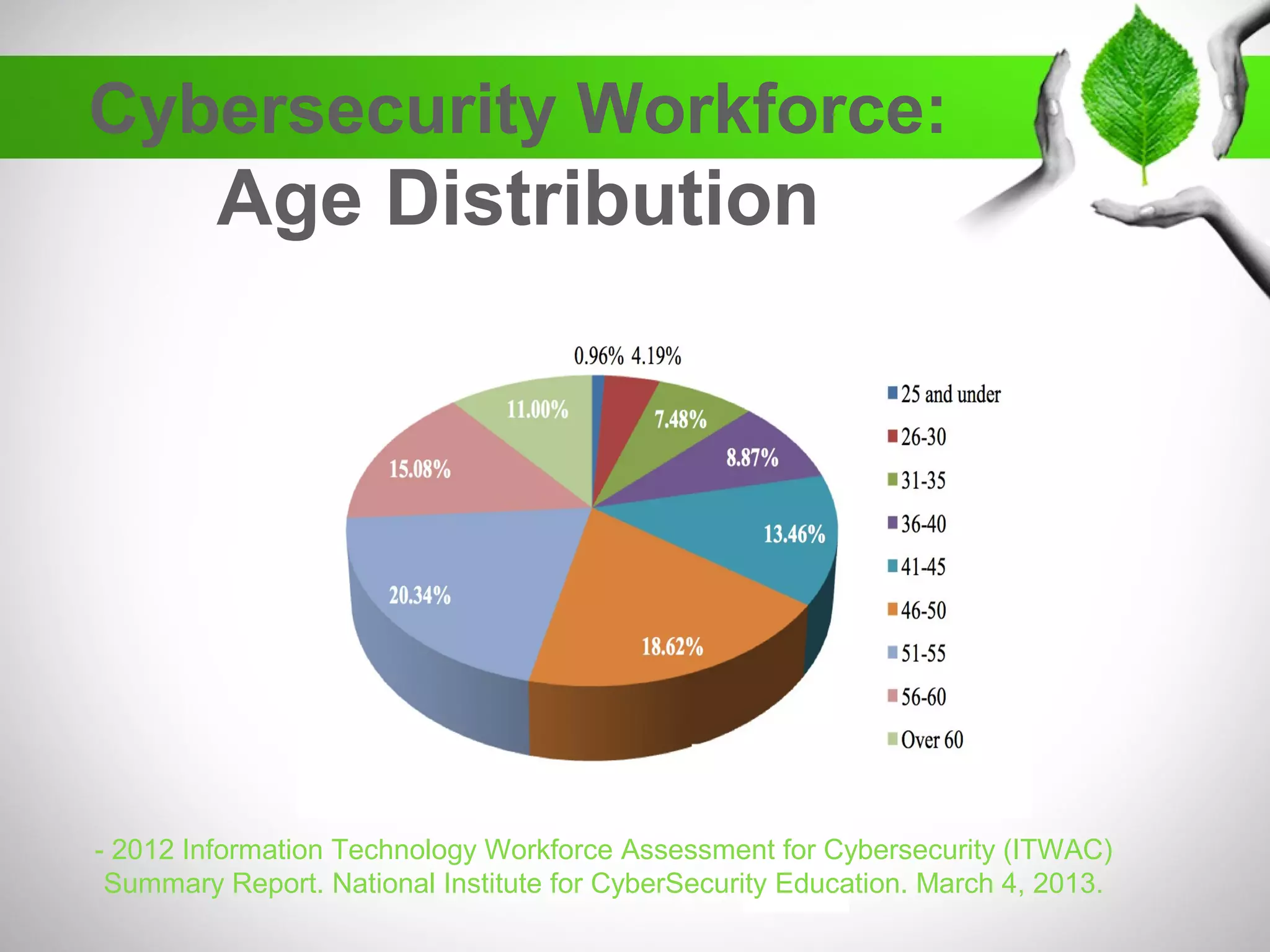

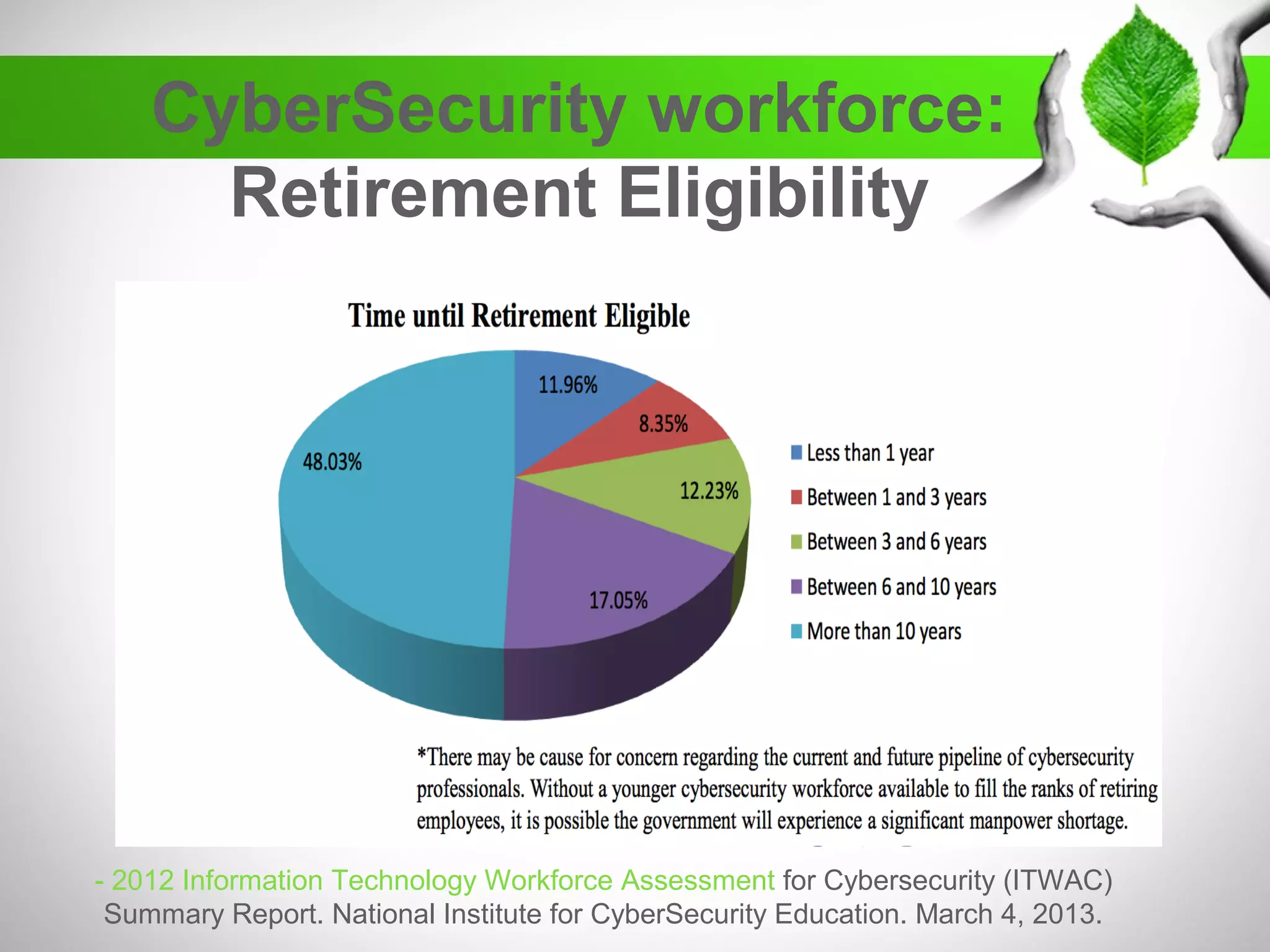

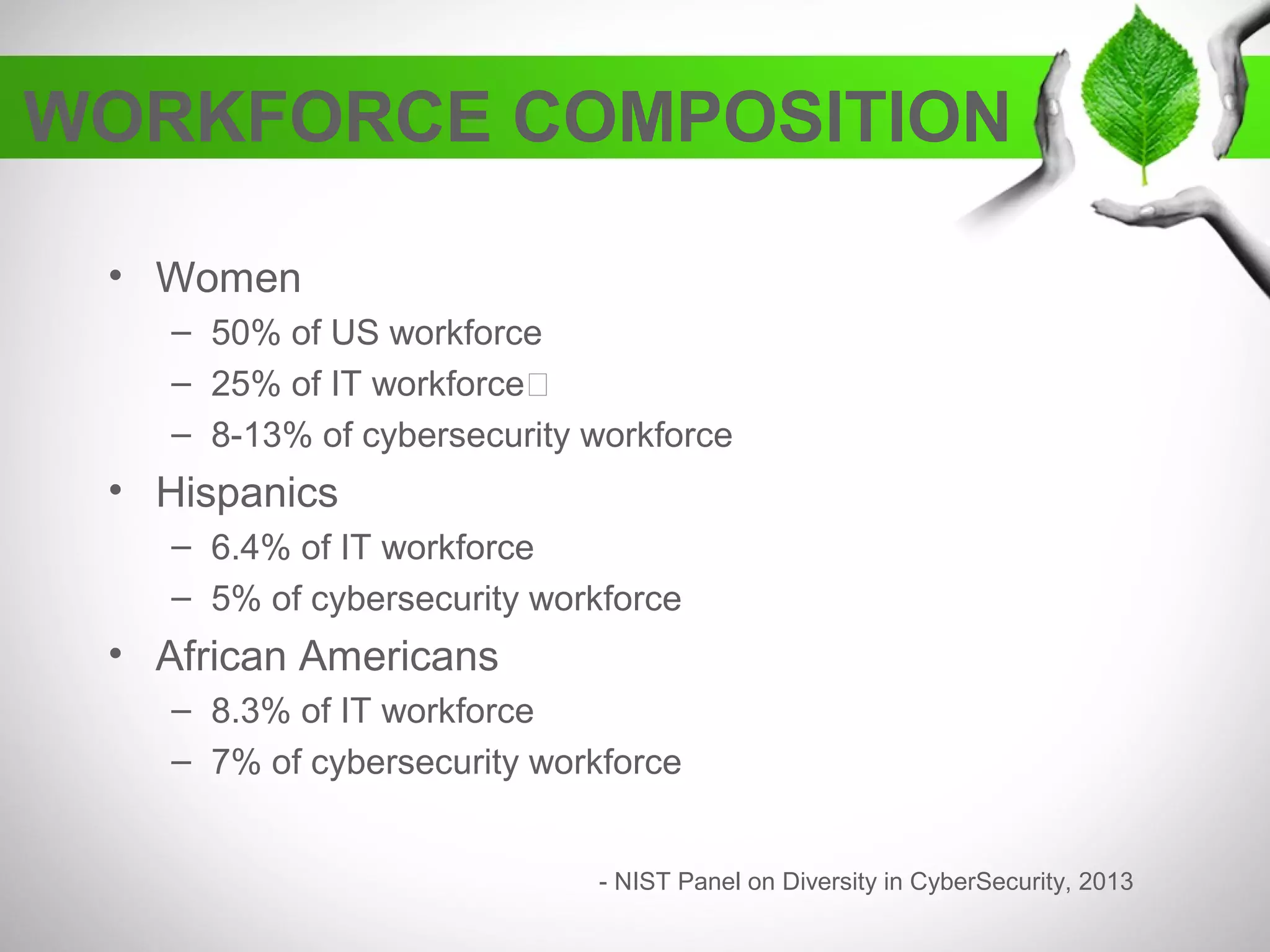

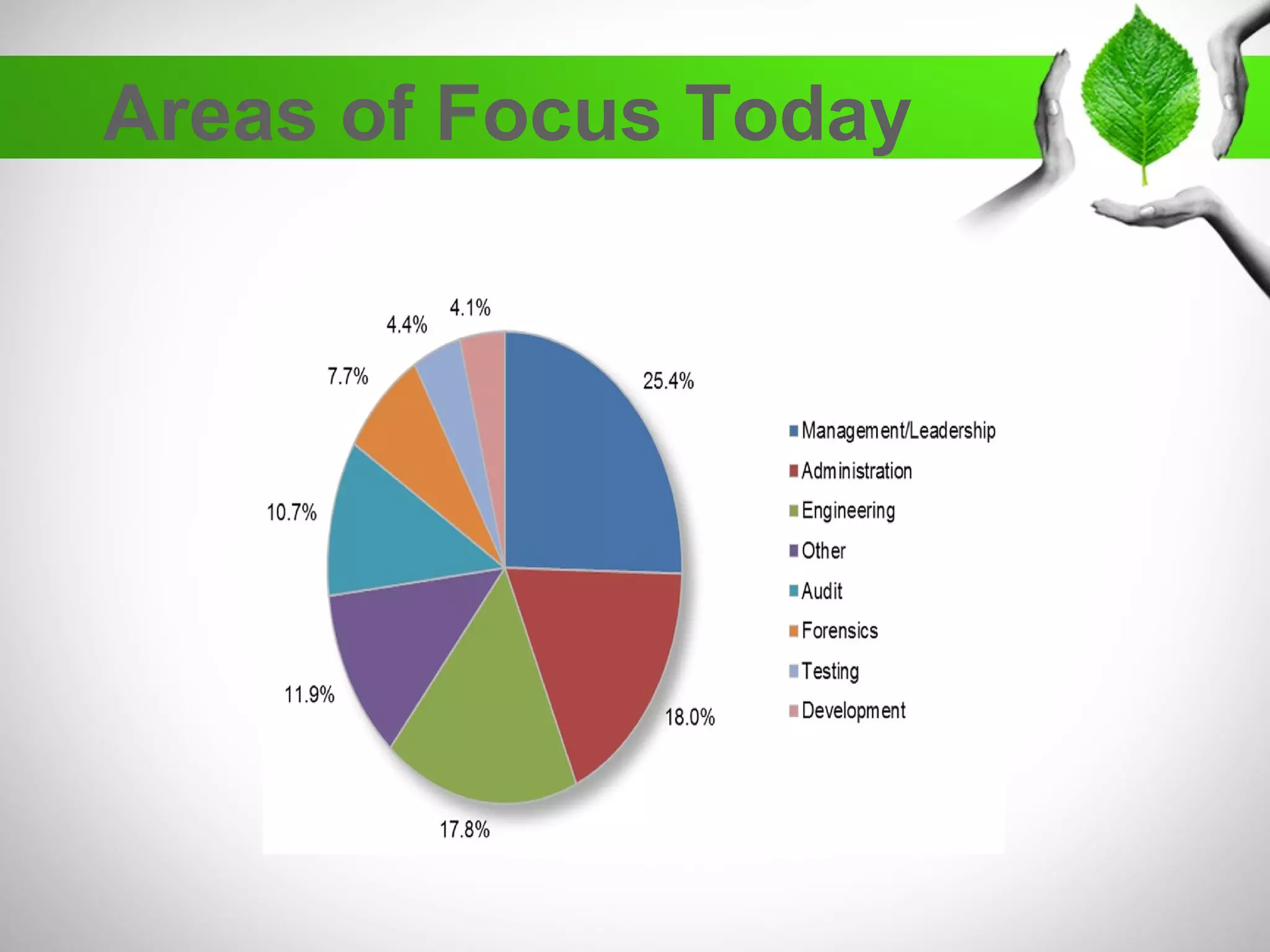

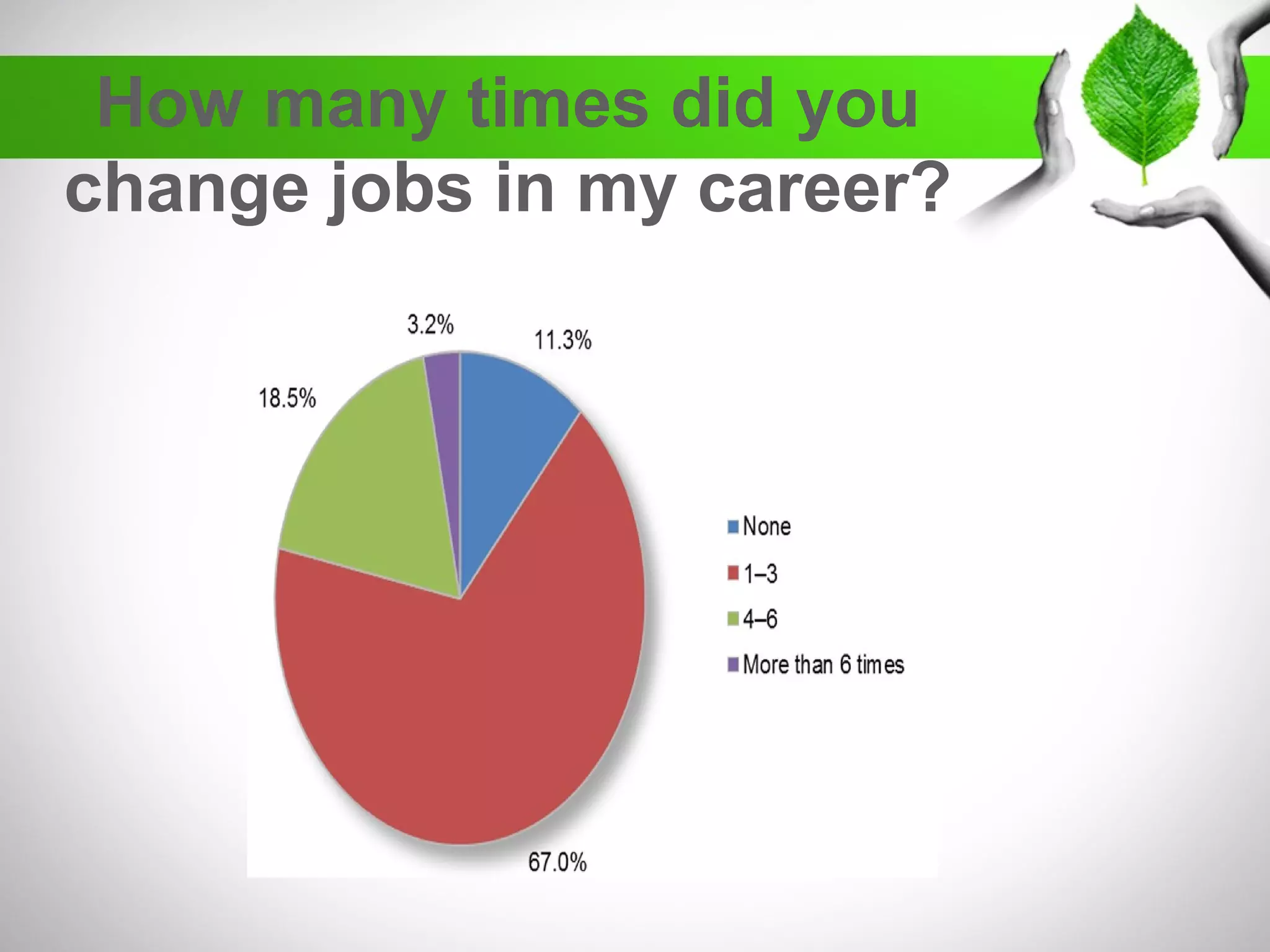

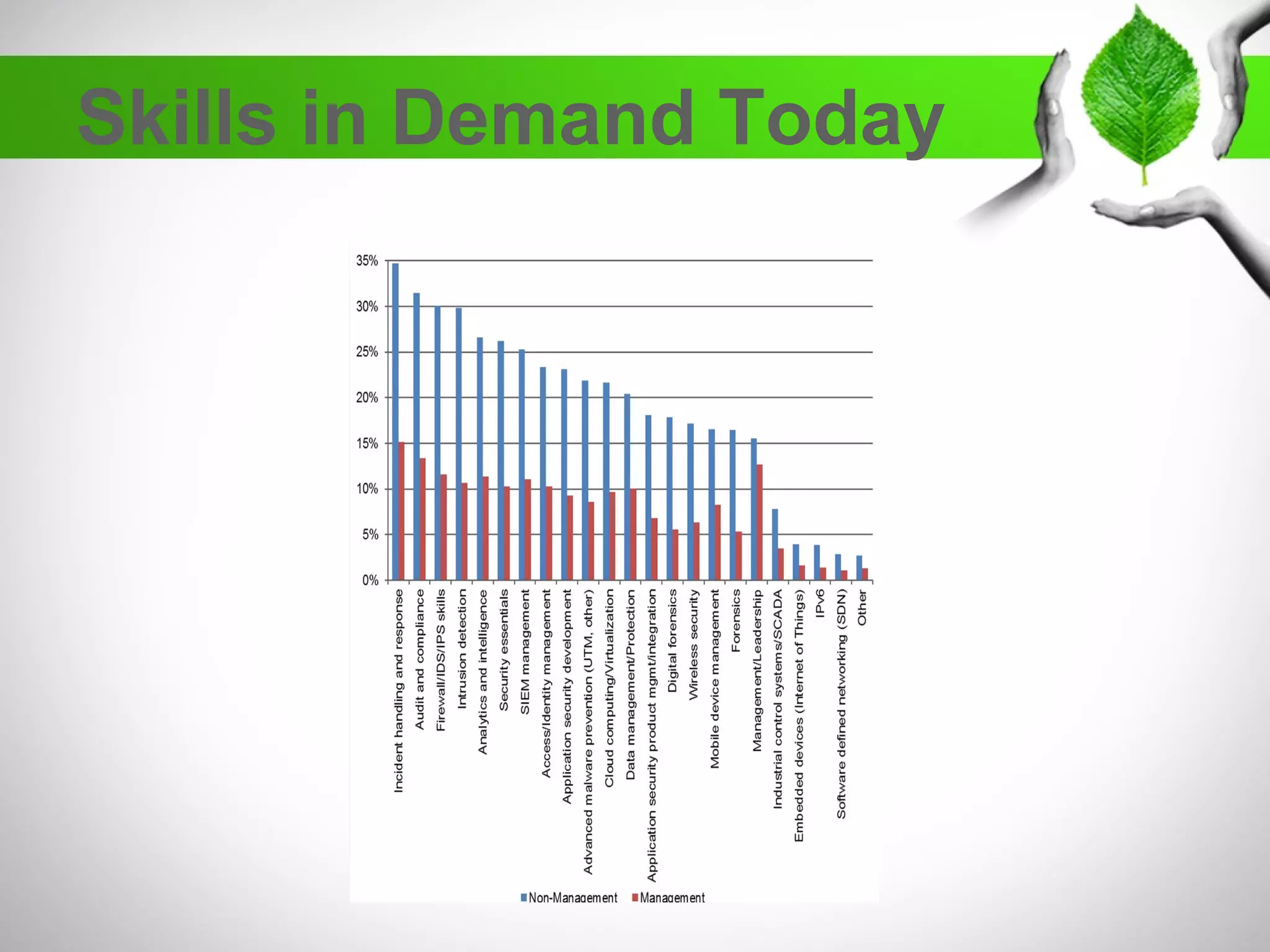

The document discusses how to build a diverse cybersecurity program, emphasizing the value of varied perspectives and backgrounds in addressing cybersecurity challenges. It covers current workforce demographics, the challenges to diversity, and the significant role a varied team plays in improving innovation and effectiveness in cybersecurity. The author, Dr. Tyrone W.A. Grandison, calls for a holistic approach to problem-solving in cybersecurity and advocates inclusive strategies that bring in people from different backgrounds.