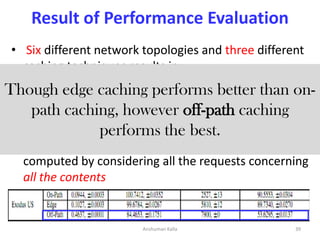

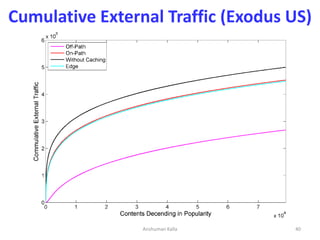

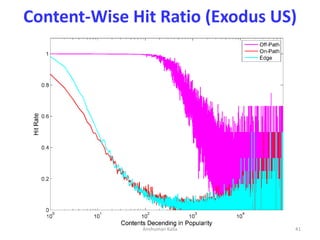

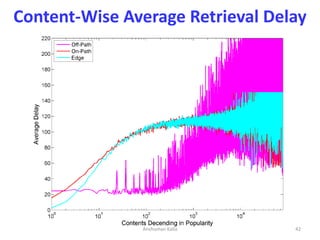

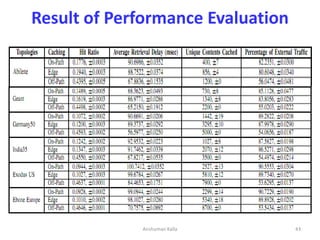

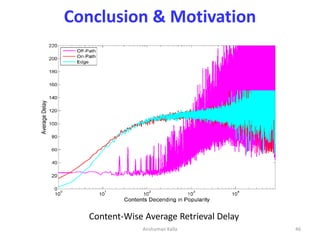

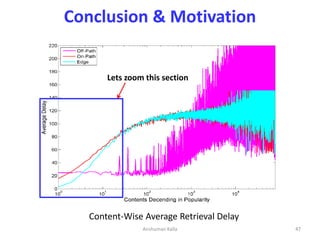

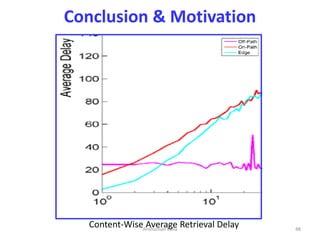

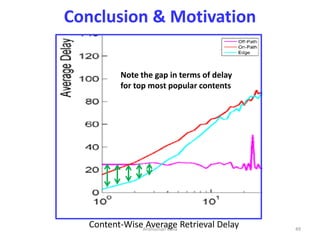

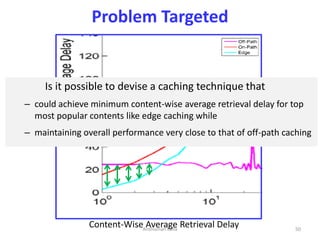

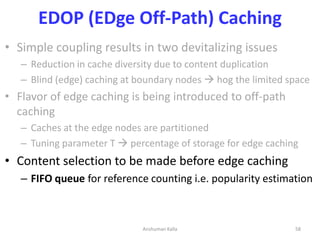

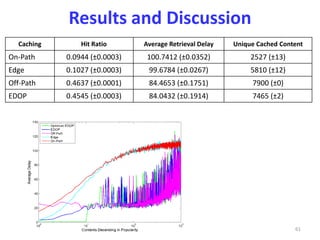

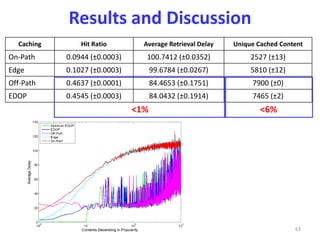

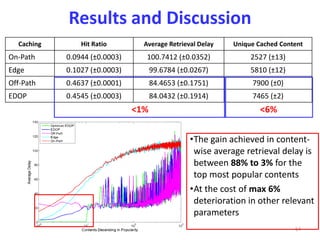

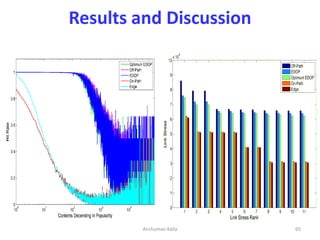

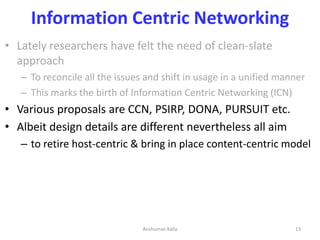

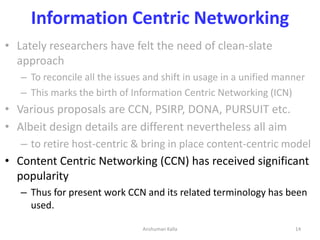

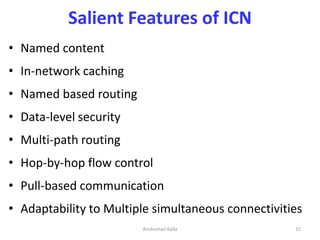

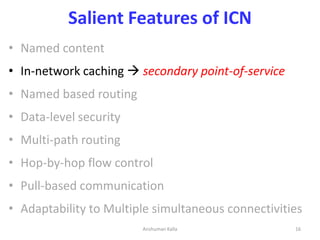

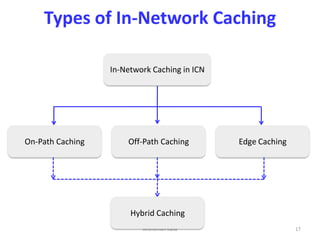

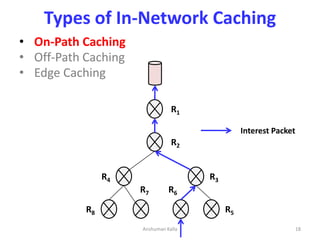

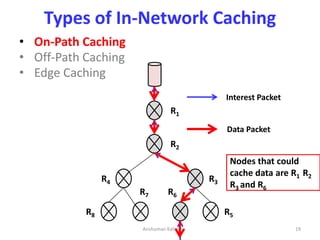

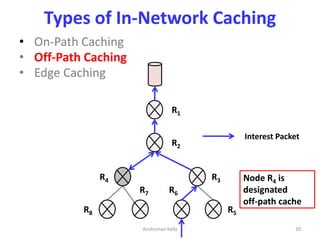

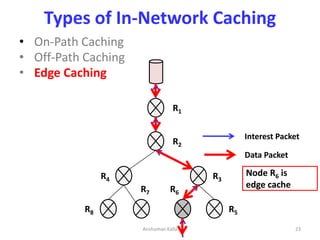

This slide deck presents a study of off-path caching combined with edge caching in information-centric networking (ICN). The study is motivated by the need to move from host-centric to content-centric networking because of the limitations of current TCP/IP networking. Performance comparisons of on-path, off-path, and edge caching show that off-path caching performs best overall. Building on this, the deck proposes a hybrid approach that combines off-path and edge caching to optimize performance for popular content. The key metrics evaluated include cache hit ratio, average retrieval delay, and the number of unique contents cached, and the results lead to recommendations for improved caching strategies.
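The three metrics named above can be computed from a per-request log. The following is a minimal sketch that assumes a hypothetical `Request` record (content ID, whether an in-network cache served it, and its retrieval delay). The exact definitions are assumptions based on the metric names, not taken from the deck. In particular, "unique contents cached" is read here as the number of distinct content IDs held across all caches, with redundant copies counted once.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass
class Request:
    """One consumer request in a hypothetical simulation log."""
    content_id: int
    cache_hit: bool   # True if some in-network cache served it, False if the origin did
    delay_ms: float   # time from issuing the request to receiving the content


def cache_hit_ratio(requests: List[Request]) -> float:
    """Fraction of requests served by an in-network cache rather than the origin."""
    if not requests:
        return 0.0
    return sum(r.cache_hit for r in requests) / len(requests)


def average_retrieval_delay(requests: List[Request]) -> float:
    """Mean retrieval delay over all requests, counting hits and misses alike."""
    if not requests:
        return 0.0
    return sum(r.delay_ms for r in requests) / len(requests)


def unique_contents_cached(caches: Dict[str, Iterable[int]]) -> int:
    """Distinct content IDs stored across all caches; redundant copies count once."""
    distinct: Set[int] = set()
    for stored in caches.values():
        distinct.update(stored)
    return len(distinct)


log = [
    Request(1, True, 10.0),
    Request(2, False, 50.0),
    Request(1, True, 12.0),
    Request(3, False, 48.0),
]
print(cache_hit_ratio(log))                                  # 0.5
print(average_retrieval_delay(log))                          # 30.0
print(unique_contents_cached({"r1": [1, 2], "r2": [1, 3]}))  # 3
```

Counting distinct IDs rather than stored copies is what separates a placement that spreads content across caches from one that duplicates the same popular items everywhere.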

Aim - First

• To empirically compare the performance of on-path, off-path and edge caching (all three)

– Researchers have already compared the performance of on-path and edge caching techniques

• If a marginal performance gap is affordable, edge caching is preferable, as it involves only edge nodes (refer to this paper for references)

– However, a comparison of all three would answer two questions:

• Which of the three caching techniques performs best?

• Is pervasive caching (i.e., caching at all nodes) really beneficial?

![Aim - First (slide 26, Anshuman Kalla)](https://image.slidesharecdn.com/exploringoff-pathcachingwithedgecachingininformationcentricnetworkingslides-161202091503/85/Exploring-off-path-caching-with-edge-caching-in-information-centric-networking-slides-26-320.jpg)
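The questions on the Aim slide (which technique performs best, and whether pervasive caching pays off) are the kind a simulation can explore. The following is a toy sketch, not the simulator used in the study. Several parts of the model are assumptions:

- The topology has four edge routers sharing one core router, with the origin six hops from the consumer.
- Caches use LRU replacement, and requests follow a Zipf popularity distribution. Every parameter value is illustrative.
- Hop count stands in for retrieval delay.
- On-path caching is modeled as leave-copy-everywhere at the edge and the core, i.e., pervasive caching.
- The off-path branch uses a simple hash-routing placement as a stand-in for whatever off-path scheme the deck actually evaluates. Each content has exactly one home cache, reached via the core.

```python
import random
from collections import OrderedDict
from typing import Tuple

# All parameters below are illustrative assumptions, not values from the study.
N_CONTENTS = 1000    # catalogue size
N_EDGE = 4           # edge (access) routers, all attached to one core router
CACHE_SIZE = 50      # per-router LRU capacity, in content items
ZIPF_ALPHA = 0.8     # popularity skew of requests
N_REQUESTS = 20000
# Hop distance from the consumer to each kind of serving node (proxy for delay).
EDGE_HOPS, CORE_HOPS, REMOTE_EDGE_HOPS, ORIGIN_HOPS = 1, 2, 3, 6
STRATEGIES = ("on-path", "edge", "off-path")


class LRUCache:
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items: "OrderedDict[int, None]" = OrderedDict()

    def lookup(self, content: int) -> bool:
        """Return True on a hit and mark the item as most recently used."""
        if content in self.items:
            self.items.move_to_end(content)
            return True
        return False

    def insert(self, content: int) -> None:
        """Store a copy, evicting the least recently used item when full."""
        self.items[content] = None
        self.items.move_to_end(content)
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)


def simulate(strategy: str, seed: int = 1) -> Tuple[float, float, int]:
    """Return (cache hit ratio, mean hops, unique contents cached)."""
    if strategy not in STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy}")
    rng = random.Random(seed)  # same seed -> same request stream for every strategy
    weights = [1 / rank ** ZIPF_ALPHA for rank in range(1, N_CONTENTS + 1)]
    contents = rng.choices(range(N_CONTENTS), weights=weights, k=N_REQUESTS)
    access = [rng.randrange(N_EDGE) for _ in range(N_REQUESTS)]
    edges = [LRUCache(CACHE_SIZE) for _ in range(N_EDGE)]
    core = LRUCache(CACHE_SIZE)
    hits = total_hops = 0
    for content, edge in zip(contents, access):
        if strategy == "off-path":
            # Hash-routing stand-in: each content has one home cache, reached via the core.
            home = content % N_EDGE
            to_home = EDGE_HOPS if home == edge else REMOTE_EDGE_HOPS
            if edges[home].lookup(content):
                hits += 1
                hops = to_home
            else:
                # Detour to the home cache, which then fetches from the origin.
                hops = to_home + (ORIGIN_HOPS - EDGE_HOPS)
                edges[home].insert(content)
        elif edges[edge].lookup(content):
            hits += 1
            hops = EDGE_HOPS
        elif strategy == "on-path" and core.lookup(content):
            hits += 1
            hops = CORE_HOPS
            edges[edge].insert(content)  # leave a copy at the edge on the way back
        else:
            hops = ORIGIN_HOPS
            edges[edge].insert(content)  # the edge always keeps a copy
            if strategy == "on-path":
                core.insert(content)     # pervasive caching: the core keeps one too
        total_hops += hops
    caches = edges + ([core] if strategy == "on-path" else [])
    unique = len(set().union(*(c.items.keys() for c in caches)))
    return hits / N_REQUESTS, total_hops / N_REQUESTS, unique


if __name__ == "__main__":
    for name in STRATEGIES:
        ratio, hops, unique = simulate(name)
        print(f"{name:8s} hit ratio={ratio:.3f}  mean hops={hops:.2f}  unique cached={unique}")
```

All three strategies replay the same seeded request stream, so differences come only from placement. In this model, pervasive on-path caching gets the core's cache on top of the edge caches, so part of its advantage over edge caching is extra storage. The off-path branch stores each content in at most one cache, so every cached copy is a unique content.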