![‘Google’ Detector: Feature Mapping

Options for building X[ ]:

Input: Audio file (WAV, 16-bit mono, 44.1 kHz)

Output: 1 if it contains the word ‘Google’, otherwise 0

1. Use raw waveform as a feature vector.

But: will have 66150 features for a 1.5 second file.

Kinda scary, and easy to overfit.

2. Use Mel-Frequency Cepstral Coefficients (MFCC).

Believed to be closer to human auditory response.

Depending on parameters, can give about 80 features per file.](https://image.slidesharecdn.com/experimentswithmachinelearning-150813193329-lva1-app6892/85/Experiments-with-Machine-Learning-GDG-Lviv-19-320.jpg)
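The feature counts on this slide follow from simple arithmetic, sketched below. The raw-waveform figure uses the slide's own numbers (44.1 kHz, 1.5 seconds, mono). The MFCC figure depends on parameters the slide does not state, so `n_frames=6` and `n_coeffs=13` are purely illustrative assumptions, picked only because they land near the "about 80" mentioned; both function names are hypothetical.

```python
def raw_feature_count(sample_rate_hz: int, duration_s: float) -> int:
    """Number of samples in a mono recording; each sample is one feature."""
    return round(sample_rate_hz * duration_s)


def mfcc_feature_count(n_frames: int, n_coeffs: int) -> int:
    """Features obtained by stacking n_coeffs coefficients from each of n_frames frames."""
    return n_frames * n_coeffs


# Figures taken from the slide: 44.1 kHz sampling rate, 1.5 second file.
raw = raw_feature_count(44_100, 1.5)

# Assumed parameters (not from the slides), chosen only to illustrate the scale.
mfcc = mfcc_feature_count(n_frames=6, n_coeffs=13)

print(raw)   # 66150
print(mfcc)  # 78
```

Under these assumptions the MFCC representation is several hundred times smaller than the raw waveform, which is the motivation the slide gives for option 2.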

![[cepstra, aSpectrum, pSpectrum] = MFCC(waveform);

x = [cepstra(1); cepstra(2); ...; cepstra(n)];](https://image.slidesharecdn.com/experimentswithmachinelearning-150813193329-lva1-app6892/85/Experiments-with-Machine-Learning-GDG-Lviv-20-320.jpg)
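The stacking step on this slide can be sketched in Python as follows. This assumes each `cepstra(i)` is a vector of coefficients (for example, one per analysis frame), which the slide does not spell out; `flatten_cepstra` is a hypothetical helper, and the numbers are made up to stand in for real MFCC output.

```python
from typing import List, Sequence


def flatten_cepstra(cepstra: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate the coefficient vectors, in order, into one flat feature vector."""
    return [coeff for vector in cepstra for coeff in vector]


# Made-up values: 3 vectors of 2 coefficients each (not real MFCC output).
cepstra = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
x = flatten_cepstra(cepstra)
print(x)  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
```

Every file must yield the same number of coefficients for the resulting vectors to be usable as rows of one feature matrix, so in practice the recordings would need equal length or some padding or trimming.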

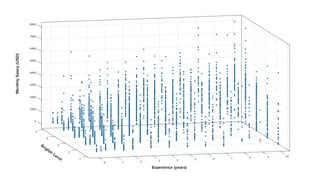

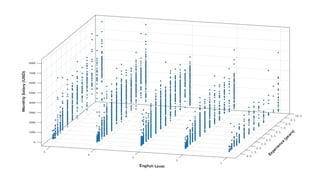

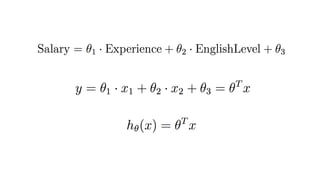

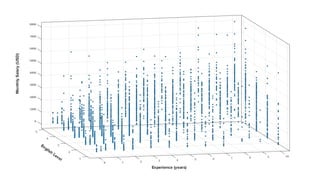

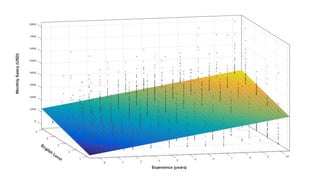

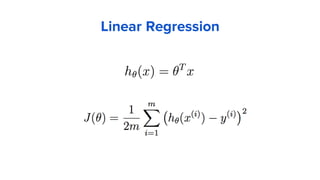

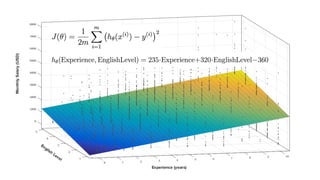

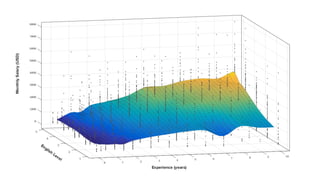

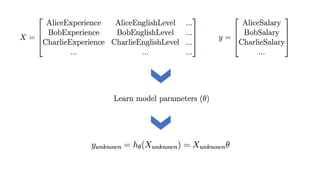

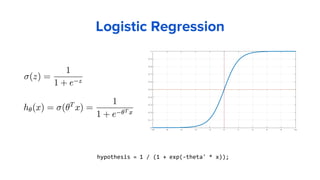

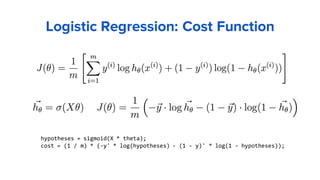

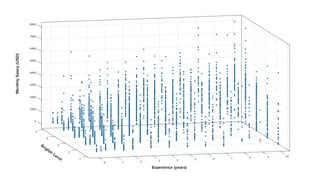

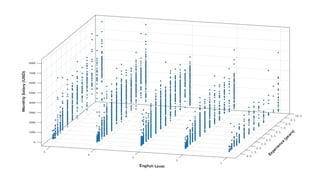

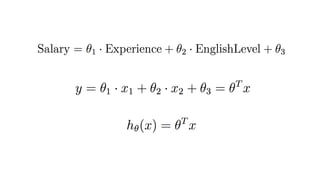

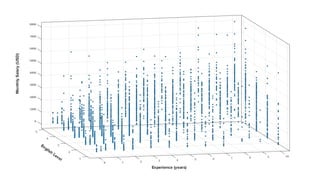

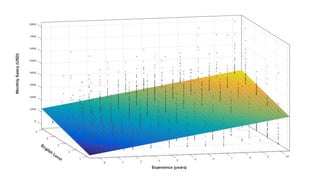

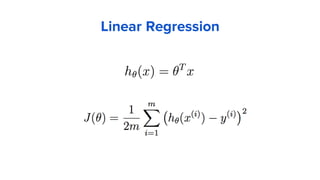

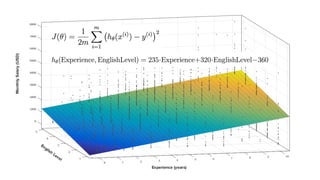

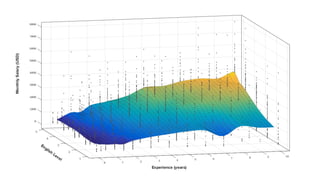

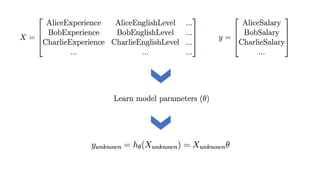

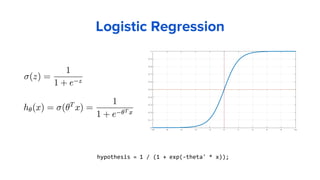

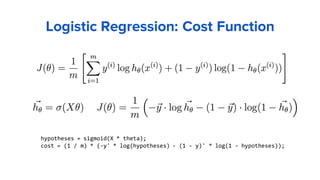

This document discusses experiments with machine learning. It begins by defining machine learning as a program that improves its performance on a task through experience, then divides the field into supervised, unsupervised, and reinforcement learning. It goes on to explain regression, classification, linear regression, and logistic regression. As a worked example, it classifies human speech to detect whether a phrase contains the word "Google". Features are extracted from the audio files using Mel-Frequency Cepstral Coefficients rather than the raw waveform. The document concludes with the author's contact information for questions.
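To connect logistic regression with the detector's 1/0 output, here is a minimal sketch of the prediction step. It assumes the usual formulation, a sigmoid applied to a weighted sum of features with a 0.5 threshold; the summary does not confirm that the slides use exactly this setup. The weights in `theta` and the feature values are hypothetical and chosen only to exercise both outcomes.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(theta: Sequence[float], x: Sequence[float], threshold: float = 0.5) -> int:
    """Return 1 if sigmoid(theta[0] + theta[1:] . x) >= threshold, otherwise 0."""
    if len(theta) != len(x) + 1:
        raise ValueError("theta needs one weight per feature plus a bias term")
    z = theta[0] + sum(t * xi for t, xi in zip(theta[1:], x))
    return 1 if sigmoid(z) >= threshold else 0


# Hypothetical weights: bias first, then one weight per feature.
theta = [-1.0, 2.0, 0.5]
print(predict(theta, [1.0, 0.0]))  # z = 1.0, so the output is 1
print(predict(theta, [0.0, 0.0]))  # z = -1.0, so the output is 0
```

In the detector, `x` would be the MFCC feature vector of one audio file and `theta` would come from training on labeled recordings.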
