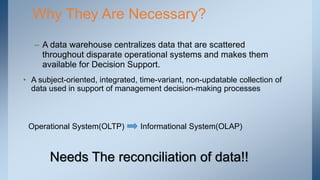

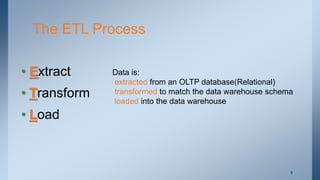

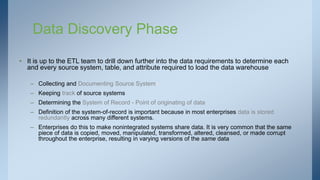

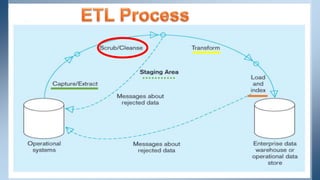

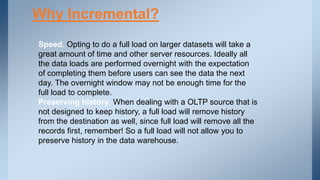

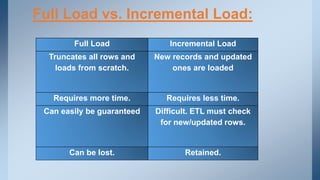

The document provides an overview of data warehouses, emphasizing their role in centralizing cleansed and standardized enterprise data for decision-making. It details the ETL (Extract, Transform, Load) process used to build a data warehouse: extracting data from operational systems, transforming it so that it satisfies dimensional integrity, and loading it into the warehouse efficiently without compromising data quality. The document also discusses data extraction techniques, transformation functions, staging areas, and the trade-offs between incremental and full data loads.
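As a rough illustration of the ETL flow and of the difference between a full and an incremental load, here is a minimal Python sketch. It is not taken from the slides: the function names (`extract`, `transform`, `load`), the `customer_id`, `name`, `country`, and `updated_at` fields, and the use of a plain dictionary as the "warehouse" are all assumptions made for the example. A real pipeline would normally read from operational databases, pass through a staging area, and write to warehouse tables.

```python
from datetime import datetime


def extract(source_rows, since=None):
    """Full extract when `since` is None, otherwise only rows changed after `since`."""
    if since is None:
        return list(source_rows)
    return [row for row in source_rows if row["updated_at"] > since]


def transform(rows):
    """Cleanse and standardize: drop rows without a key, tidy names and country codes."""
    cleaned = []
    for row in rows:
        if row.get("customer_id") is None:
            continue  # reject rows that cannot be matched to a dimension key
        cleaned.append({
            "customer_id": row["customer_id"],
            "name": row["name"].strip().title(),
            "country": row["country"].strip().upper(),
            "updated_at": row["updated_at"],
        })
    return cleaned


def load(warehouse, rows, full=False):
    """Full load replaces the target; incremental load upserts by key."""
    if full:
        warehouse.clear()
    for row in rows:
        warehouse[row["customer_id"]] = row
    return len(rows)


# Hypothetical operational data, including one row with a missing key.
source = [
    {"customer_id": 1, "name": " alice smith ", "country": "us",
     "updated_at": datetime(2024, 1, 1)},
    {"customer_id": 2, "name": "BOB JONES", "country": " de ",
     "updated_at": datetime(2024, 1, 5)},
    {"customer_id": None, "name": "orphan", "country": "fr",
     "updated_at": datetime(2024, 1, 6)},
]

warehouse = {}

# Initial full load: every valid source row is extracted and loaded.
full_count = load(warehouse, transform(extract(source)), full=True)

# A source row changes later; the incremental load picks up only that change.
source[0] = dict(source[0], name="alice cooper", updated_at=datetime(2024, 2, 1))
incr_count = load(warehouse, transform(extract(source, since=datetime(2024, 1, 10))))
```

In this sketch the full load processes every source row, while the incremental load touches only the row modified after the cutoff timestamp, which is the usual reason incremental loads are cheaper once the warehouse has been populated.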