The document discusses data warehouses and database management systems (DBMS). It provides information on:

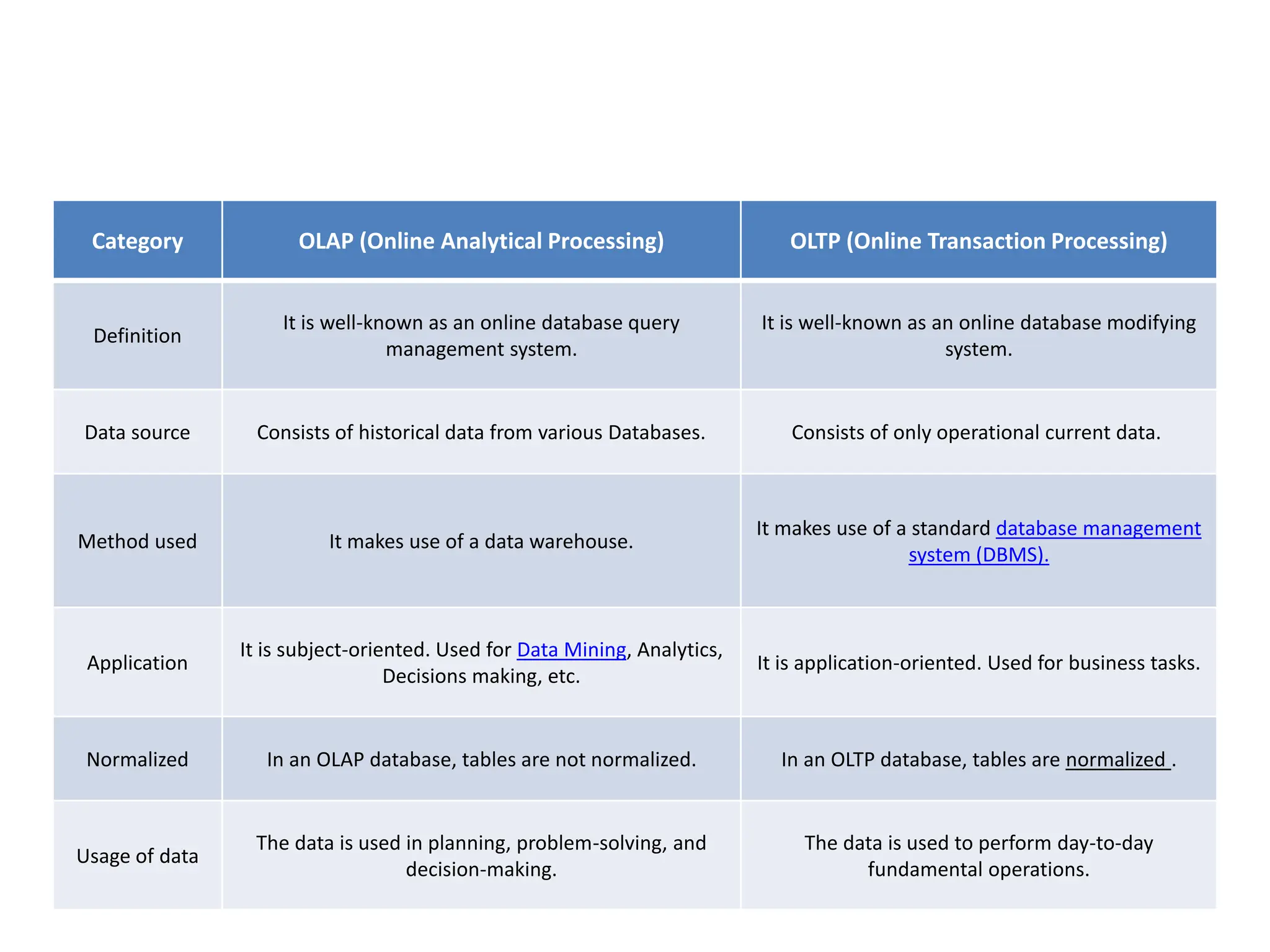

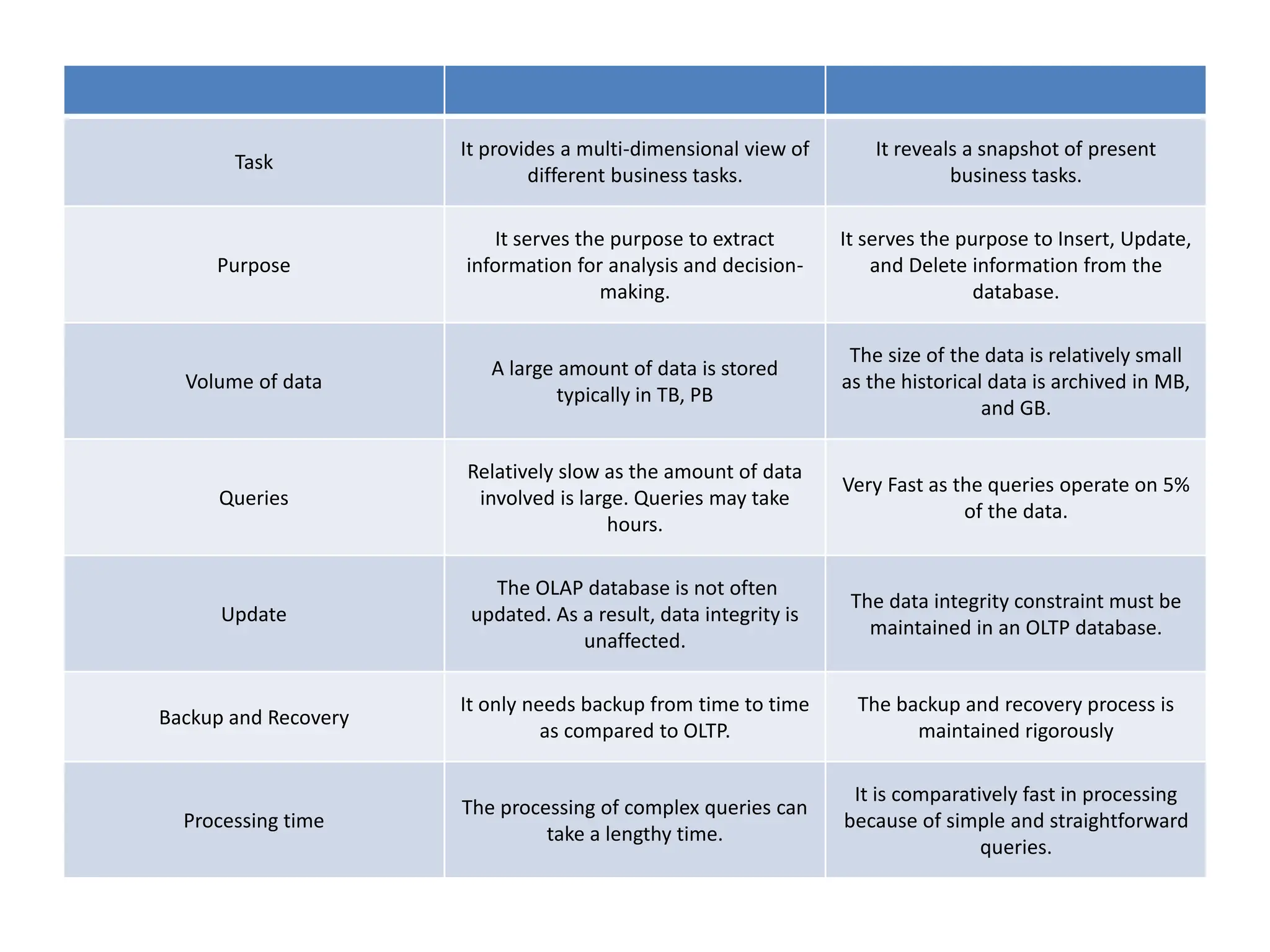

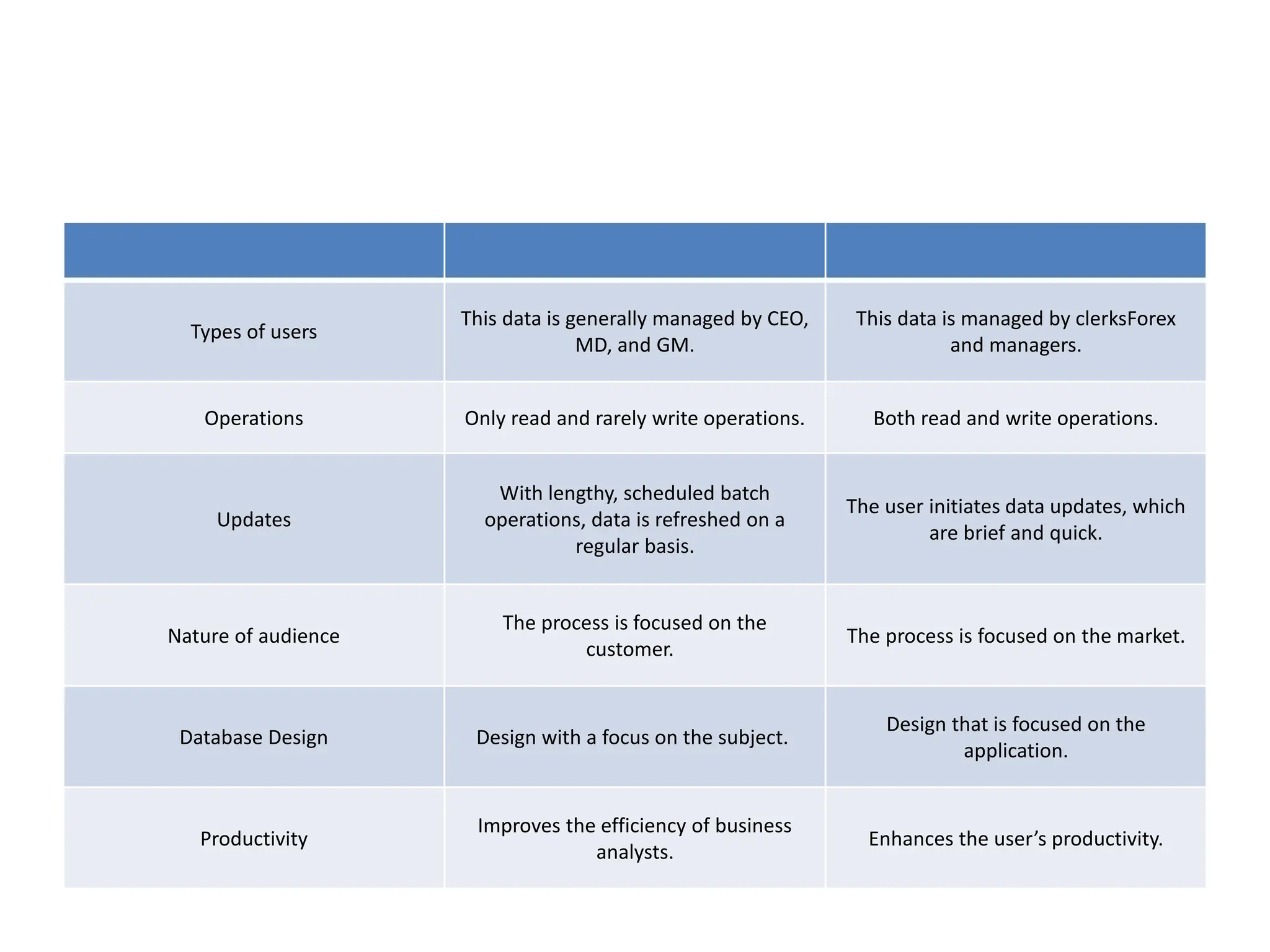

- The key difference between online analytical processing (OLAP) and online transaction processing (OLTP) databases: OLAP databases hold historical data for analysis, while OLTP databases hold current operational data for day-to-day transactions.

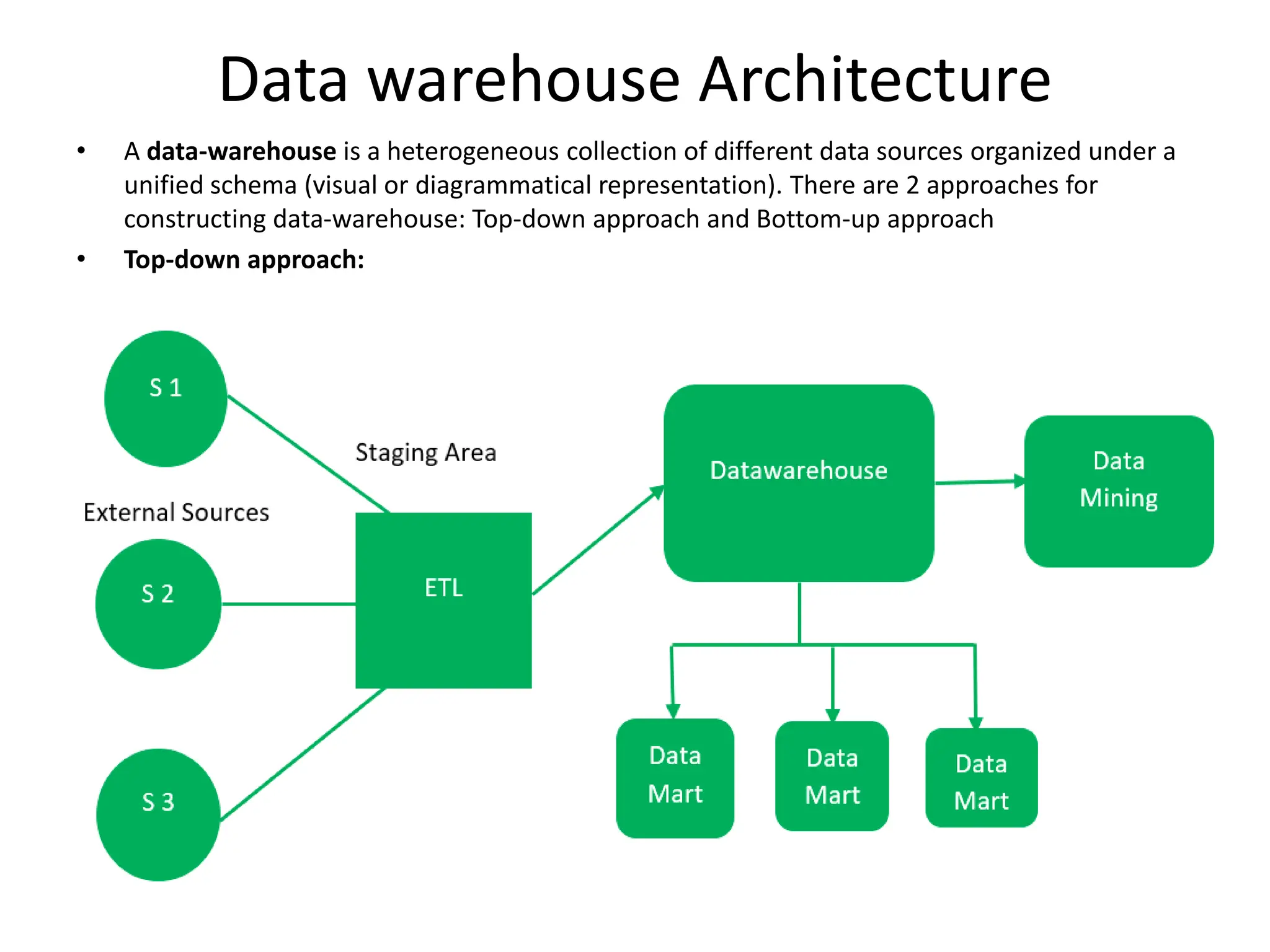

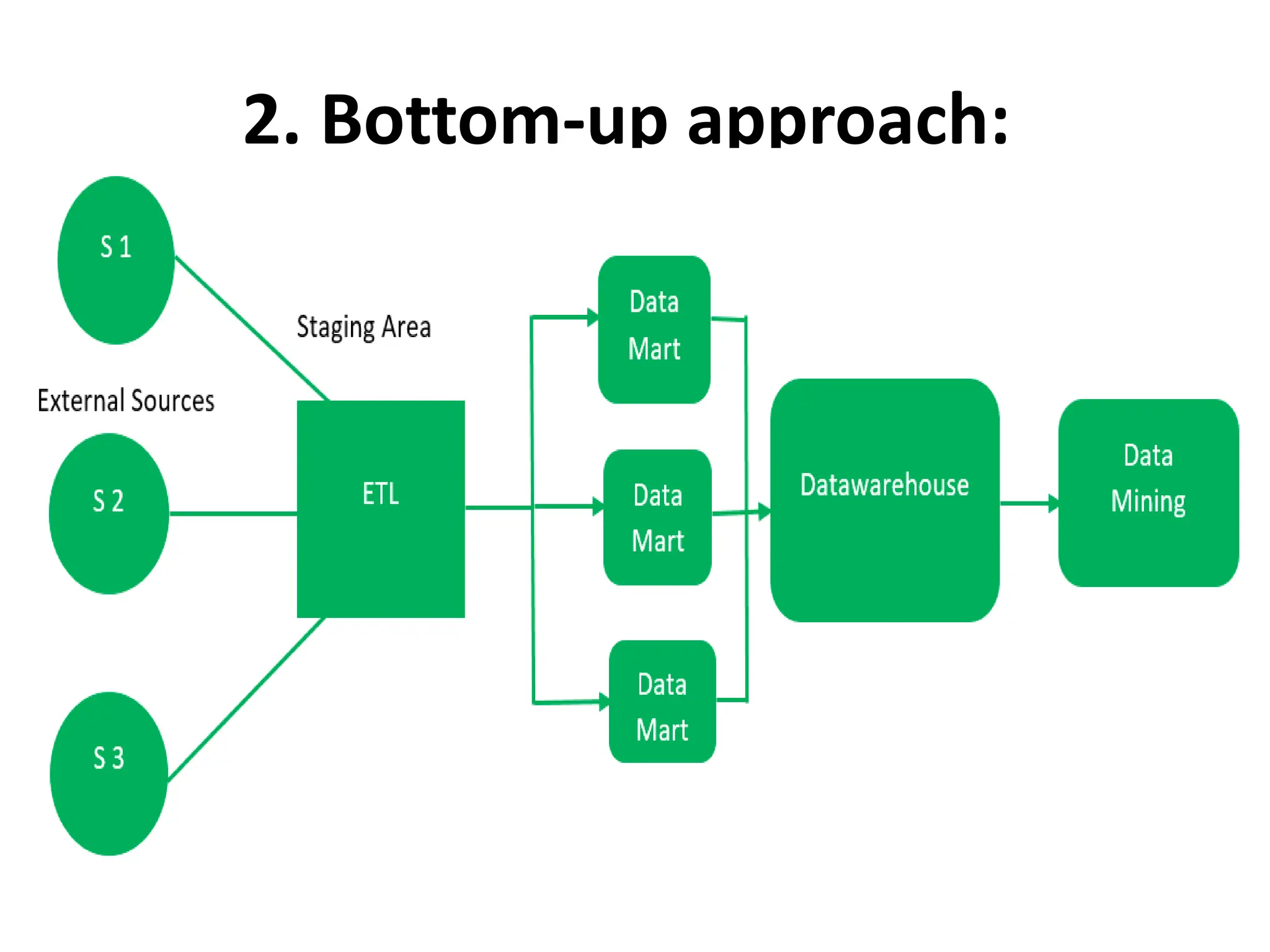

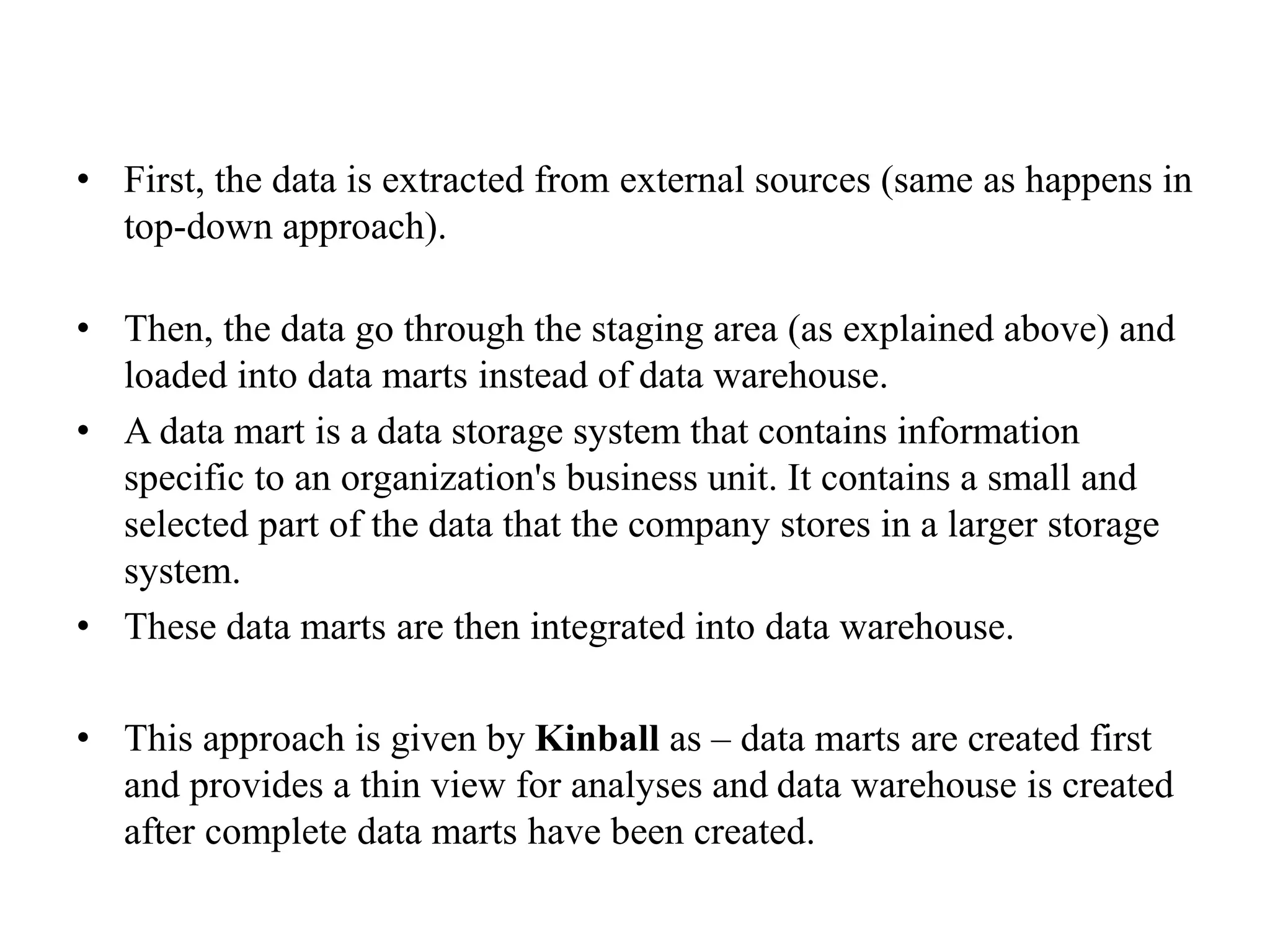

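- The top-down and bottom-up approaches to constructing a data warehouse. Both extract data from external sources, then transform and load it. The top-down approach stores the data in a centralized data warehouse that feeds the data marts, while the bottom-up approach builds the data marts first and then integrates them into the warehouse.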

- Common components of a data warehouse architecture: external sources, a staging area, the data warehouse itself, data marts, and data mining.

- Properties and features of a DB
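
The extract, transform, and load flow named in the list above can be illustrated with a minimal sketch. It assumes a top-down layout (central warehouse first, data mart derived from it) and uses Python's built-in `sqlite3` module with an in-memory database. The sample rows, the `sales_fact` table, and all function names are hypothetical and chosen only for illustration; they do not come from the summarized document.

```python
import sqlite3

# Hypothetical operational (OLTP-style) rows: one record per transaction.
SOURCE_ROWS = [
    ("2024-01-05", "north", "120.50"),
    ("2024-01-07", "south", "80.00"),
    ("2024-02-02", "north", "45.25"),
]


def extract():
    """Copy raw rows from the external source into a staging list."""
    return list(SOURCE_ROWS)


def transform(staged):
    """Clean staged rows: derive a month key and convert amounts to floats."""
    return [(day[:7], region, float(amount)) for day, region, amount in staged]


def load(conn, rows):
    """Store the cleaned rows in the central warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_fact "
        "(month TEXT, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales_fact VALUES (?, ?, ?)", rows)


def build_region_mart(conn):
    """Derive a small data mart: total sales per region and month."""
    cur = conn.execute(
        "SELECT region, month, SUM(amount) FROM sales_fact "
        "GROUP BY region, month ORDER BY region, month"
    )
    return cur.fetchall()


def run_pipeline():
    """Run extract -> transform -> load, then query the derived mart."""
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract()))
    mart = build_region_mart(conn)
    conn.close()
    return mart
```

Calling `run_pipeline()` returns one aggregated row per region and month. The aggregate query in `build_region_mart` is the kind of analytical (OLAP-style) read that a warehouse serves, in contrast to the per-transaction rows in `SOURCE_ROWS`.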