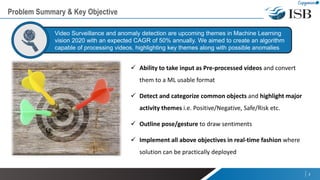

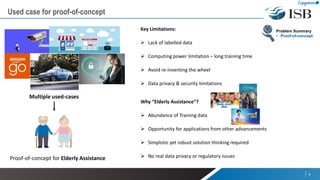

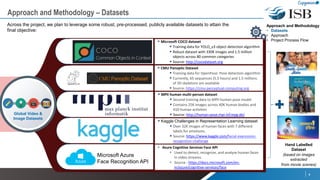

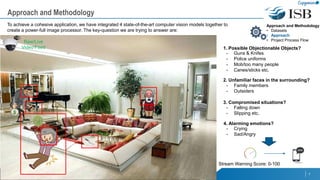

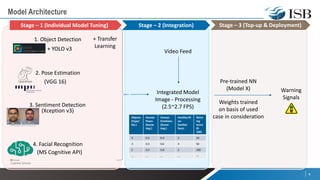

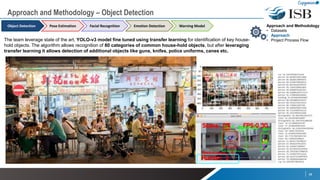

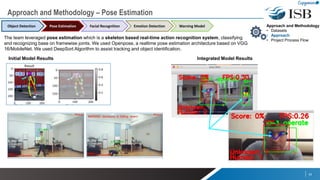

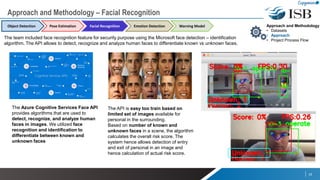

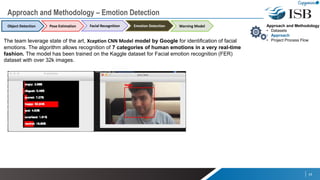

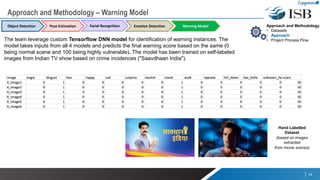

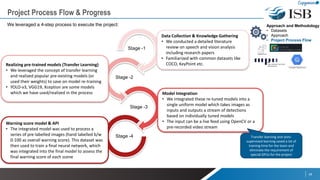

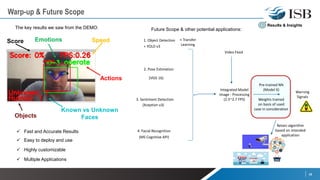

The document describes a capstone project that applies deep learning to video analytics, detecting and categorizing themes in video streams, with elderly assistance as the primary use case. The methodology combines multiple pre-trained models for object detection, pose estimation, emotion detection, and facial recognition, and addresses challenges such as data privacy and the need for labeled datasets. The goal is a real-time system that identifies potential risks and anomalies in video feeds.
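As a rough illustration of how several per-frame models might be combined into a single risk check, the sketch below runs a set of analyzers over one frame and applies simple rules to their merged findings. Everything in it is an assumption made for illustration: the names `analyze_frame`, `FrameReport`, `ANALYZERS`, and `RULES` are hypothetical, the analyzers are stubs standing in for real pre-trained models, the frames are plain dictionaries rather than image arrays, and the two rules are invented examples, not the project's actual risk criteria.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# An analyzer takes one frame and returns a dict of findings.
Analyzer = Callable[[Any], Dict[str, Any]]
# A rule inspects the merged findings and says whether a risk applies.
Rule = Callable[[Dict[str, Dict[str, Any]]], bool]


@dataclass
class FrameReport:
    frame_index: int
    findings: Dict[str, Dict[str, Any]] = field(default_factory=dict)
    risks: List[str] = field(default_factory=list)


def analyze_frame(frame_index: int, frame: Any,
                  analyzers: Dict[str, Analyzer],
                  rules: Dict[str, Rule]) -> FrameReport:
    """Run every analyzer on the frame, then evaluate every rule."""
    report = FrameReport(frame_index)
    for name, analyzer in analyzers.items():
        report.findings[name] = analyzer(frame)
    for label, rule in rules.items():
        if rule(report.findings):
            report.risks.append(label)
    return report


# Stub analyzers (hypothetical): a real system would call pre-trained
# models here. Frames are dicts so the sketch runs without any model.
def stub_objects(frame: dict) -> dict:
    return {"labels": frame.get("labels", ["person"])}


def stub_pose(frame: dict) -> dict:
    return {"posture": frame.get("posture", "standing")}


def stub_emotion(frame: dict) -> dict:
    return {"emotion": frame.get("emotion", "neutral")}


def stub_face(frame: dict) -> dict:
    return {"identity": frame.get("identity", "unknown")}


ANALYZERS: Dict[str, Analyzer] = {
    "objects": stub_objects,
    "pose": stub_pose,
    "emotion": stub_emotion,
    "face": stub_face,
}

# Example rules (assumed, for illustration only).
RULES: Dict[str, Rule] = {
    "possible_fall": lambda f: ("person" in f["objects"]["labels"]
                                and f["pose"]["posture"] == "lying"),
    "distress": lambda f: f["emotion"]["emotion"] in {"fear", "sad"},
}


if __name__ == "__main__":
    frame = {"posture": "lying", "emotion": "fear"}
    print(analyze_frame(0, frame, ANALYZERS, RULES).risks)
```

Keeping analyzers and rules as separate dictionaries means a model can be swapped or a rule added without touching the loop. In a real-time setting the same function would be called once per captured frame.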