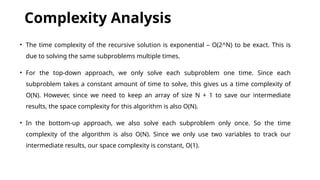

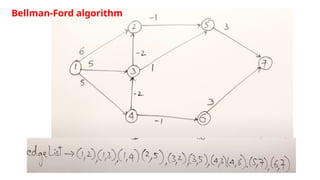

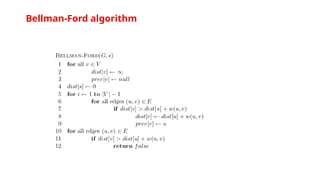

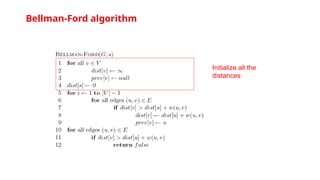

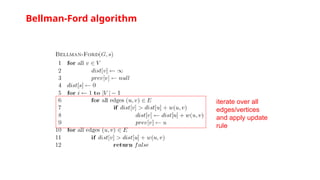

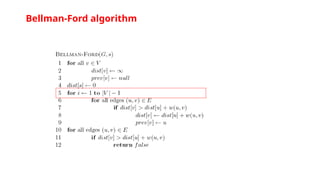

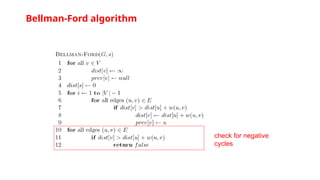

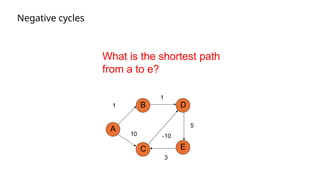

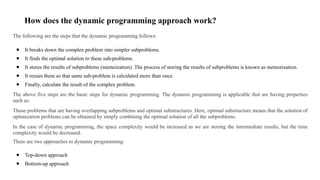

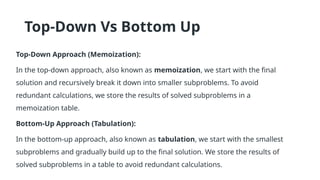

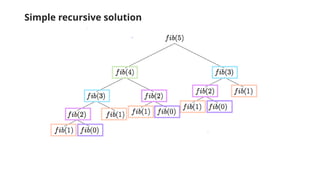

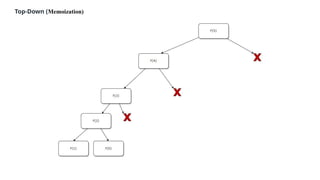

Dynamic programming is an optimization approach that breaks a problem into overlapping sub-problems, stores each sub-problem's result, and reuses it instead of recomputing it. Unlike divide and conquer, where the sub-problems are independent, it relies on previously computed results to solve larger sub-problems. There are two main approaches: top-down (recursion with memoization) and bottom-up (iteration with tabulation). Key applications include the Fibonacci series, the knapsack problem, and the Bellman-Ford algorithm for shortest paths.
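To make the top-down approach concrete, here is a minimal sketch of a memoized Fibonacci function. The name `memoFib` and the `Map`-based cache are assumptions chosen for illustration; they do not come from the slides.

```javascript
// Top-down Fibonacci: recurse from n toward the base cases,
// caching every result so each sub-problem is solved only once.
// memoFib and its Map cache are hypothetical names for this sketch.
function memoFib(n, memo = new Map()) {
  if (n <= 1) return n; // base cases: fib(0) = 0, fib(1) = 1
  if (memo.has(n)) return memo.get(n); // reuse a stored result
  const value = memoFib(n - 1, memo) + memoFib(n - 2, memo);
  memo.set(n, value);
  return value;
}

console.log(memoFib(5)); // 5
console.log(memoFib(10)); // 55
```

Passing the cache as a defaulted parameter keeps the function free of global state: each top-level call starts with a fresh `Map`.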

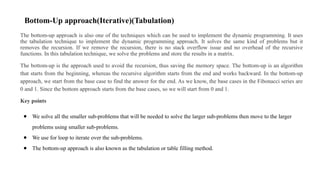

![Fibonacci Numbers using Dynamic Programming (Bottom Up)](https://image.slidesharecdn.com/dynamicprogramming-241123165653-fbf719a5/85/Dynamic-programming-in-Design-Analysis-and-Algorithms-11-320.jpg)

This is a bottom-up approach. We start from the bottom, finding fib(0) and fib(1), add them together to get fib(2), and so on until we reach fib(5).

```javascript
function tabulatedFib(n) {
  // Shortcut for the first two positive terms.
  if (n === 1 || n === 2) {
    return 1;
  }
  // Table seeded with fib(0), fib(1), and fib(2).
  const fibNums = [0, 1, 1];
  // Fill the table upward; each entry is the sum of the two before it.
  for (let i = 3; i <= n; i++) {
    fibNums[i] = fibNums[i - 1] + fibNums[i - 2];
  }
  return fibNums[n];
}
```

The time complexity of both the memoization and tabulation solutions is O(n): time grows linearly with n, because we calculate fib(4), fib(3), etc. exactly once each.
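One way to check the linear-time claim is to count function calls. The sketch below compares plain recursion with a memoized version. The helpers `countNaiveCalls` and `countMemoCalls` are hypothetical names introduced only for this comparison.

```javascript
// Count how many times plain recursion calls fib for a given n.
function countNaiveCalls(n) {
  let calls = 0;
  function fib(k) {
    calls++;
    return k <= 1 ? k : fib(k - 1) + fib(k - 2);
  }
  fib(n);
  return calls;
}

// Count calls when every result is cached after its first computation.
function countMemoCalls(n) {
  let calls = 0;
  const memo = new Map();
  function fib(k) {
    calls++;
    if (k <= 1) return k;
    if (memo.has(k)) return memo.get(k); // cache hit: no further recursion
    const value = fib(k - 1) + fib(k - 2);
    memo.set(k, value);
    return value;
  }
  fib(n);
  return calls;
}

console.log(countNaiveCalls(20)); // 21891
console.log(countMemoCalls(20)); // 39
```

For n ≥ 1 the memoized count is 2n − 1, which grows linearly, while the plain recursive count grows exponentially with n.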