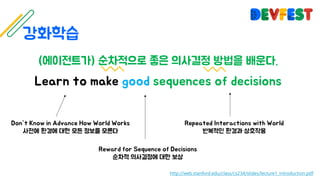

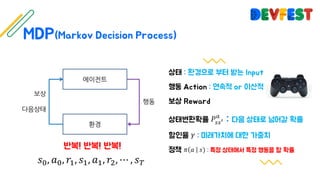

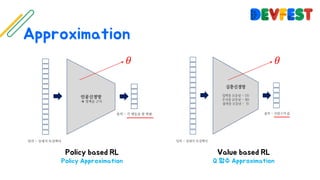

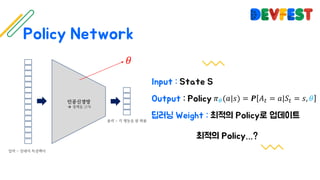

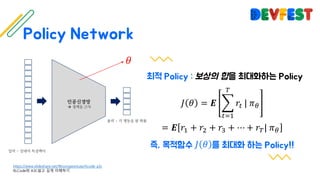

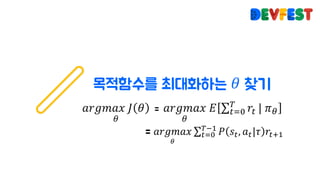

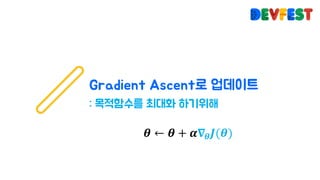

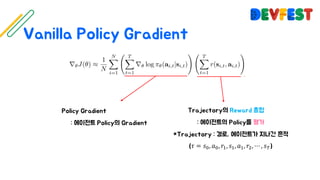

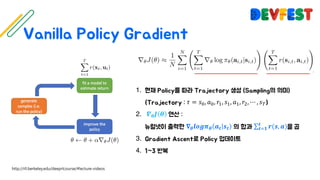

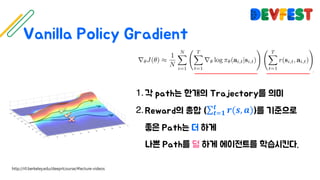

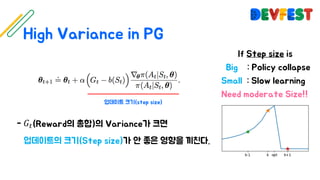

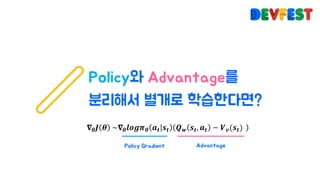

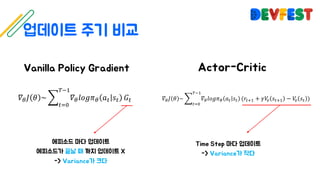

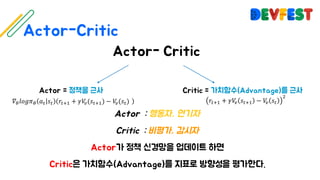

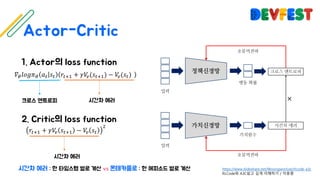

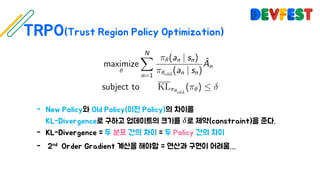

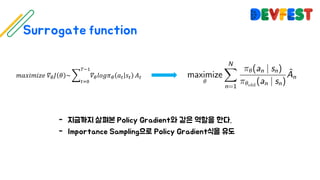

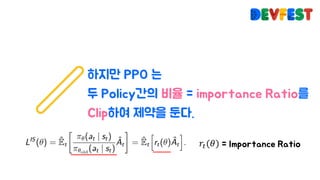

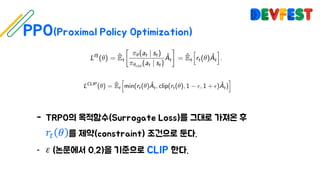

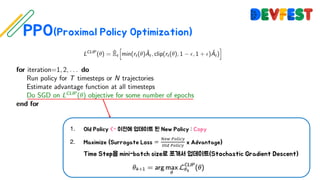

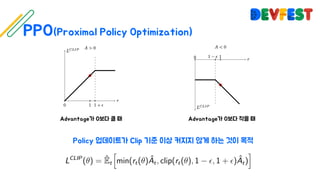

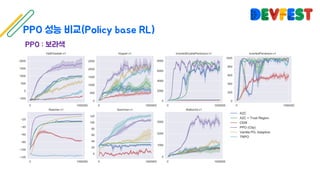

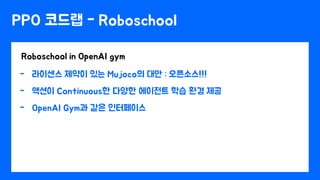

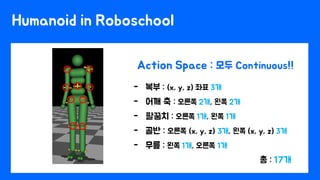

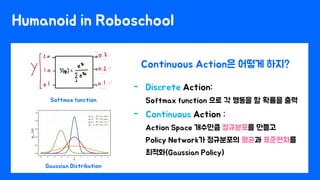

This document is a collection of resources and references on reinforcement learning, including tutorials, slides, and methods such as A3C (Asynchronous Advantage Actor-Critic). It covers the mathematical notation and core concepts behind reinforcement learning algorithms and lists URLs for further reading.
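A3C trains its actor-critic with n-step returns. Each worker collects a short rollout, bootstraps from the critic's value estimate of the final state, and then weights the policy gradient by the advantage, the n-step return minus the critic's estimate for each state. The sketch below shows only that return-and-advantage step, assuming the standard A3C formulation. The function name `nstep_returns_and_advantages` and its parameters are hypothetical. The critic values and the bootstrap value are taken as given, and the bootstrap value should be 0.0 when the rollout ended in a terminal state.

```python
from typing import List, Tuple


def nstep_returns_and_advantages(
    rewards: List[float],
    values: List[float],
    bootstrap_value: float,
    gamma: float = 0.99,
) -> Tuple[List[float], List[float]]:
    """Hypothetical helper: n-step discounted returns and advantages for one rollout.

    rewards[t]      -- reward received after acting in state s_t
    values[t]       -- critic estimate V(s_t), assumed precomputed
    bootstrap_value -- V(s_{t+n}) for the state after the rollout (0.0 if terminal)
    """
    if len(rewards) != len(values):
        raise ValueError("rewards and values must have the same length")

    returns = [0.0] * len(rewards)
    running = bootstrap_value
    # Walk backwards so each return accumulates discounted future rewards:
    # R_t = r_t + gamma * R_{t+1}, starting from the bootstrapped value.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running

    # Advantage estimate A_t = R_t - V(s_t), which scales the policy-gradient term.
    advantages = [ret - v for ret, v in zip(returns, values)]
    return returns, advantages


if __name__ == "__main__":
    rets, advs = nstep_returns_and_advantages(
        rewards=[1.0, 0.0, 1.0],
        values=[0.5, 0.5, 0.5],
        bootstrap_value=0.0,
        gamma=0.5,
    )
    print(rets)  # [1.25, 0.5, 1.0]
    print(advs)  # [0.75, 0.0, 0.5]
```

In full A3C, the critic is regressed toward these returns, and the advantages typically enter the actor loss together with an entropy bonus. Those pieces, and the asynchronous parameter sharing between workers, are omitted here.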