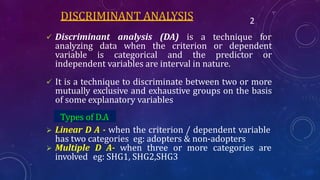

1. Discriminant analysis is a statistical technique for distinguishing between two or more groups, and for classifying cases into them, on the basis of a set of predictor variables when the dependent variable is categorical.

2. It derives one or more discriminant functions. Each is a linear combination of the predictor variables whose weights are chosen to maximize the separation between the group centroids relative to the variation within the groups.
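To make the weighting step concrete, here is a minimal sketch of Fisher's two-group linear discriminant, one common form of the technique. It assumes two groups, two predictors, and equal prior probabilities. The data and all names (`fisher_lda`, `project`, `classify`) are hypothetical. The weights are w = S_W⁻¹(m₁ − m₂), where m₁ and m₂ are the group centroids and S_W is the pooled within-group scatter matrix. A case is assigned to the group whose projected centroid its score is closer to.

```python
from statistics import mean

# Hypothetical toy data: two predictors (x1, x2) measured on two groups.
group_a = [(2.0, 3.0), (3.0, 3.5), (2.5, 2.0), (3.5, 4.0)]
group_b = [(5.0, 6.0), (6.0, 5.5), (5.5, 7.0), (6.5, 6.0)]


def centroid(points):
    """Mean vector (group centroid) of 2-D points."""
    return (mean(x for x, _ in points), mean(y for _, y in points))


def scatter(points, c):
    """Sums of squares and cross-products about centroid c (2x2 matrix)."""
    sxx = sum((x - c[0]) ** 2 for x, _ in points)
    syy = sum((y - c[1]) ** 2 for _, y in points)
    sxy = sum((x - c[0]) * (y - c[1]) for x, y in points)
    return [[sxx, sxy], [sxy, syy]]


def project(w, p):
    """Discriminant score: weighted sum of the predictors."""
    return w[0] * p[0] + w[1] * p[1]


def fisher_lda(g1, g2):
    m1, m2 = centroid(g1), centroid(g2)
    s1, s2 = scatter(g1, m1), scatter(g2, m2)
    # Pooled within-group scatter S_W = S_1 + S_2.
    sw = [[s1[i][j] + s2[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    d = (m1[0] - m2[0], m1[1] - m2[1])
    # Fisher weights w = S_W^{-1} (m1 - m2): maximize centroid separation
    # relative to within-group scatter.
    w = (inv[0][0] * d[0] + inv[0][1] * d[1],
         inv[1][0] * d[0] + inv[1][1] * d[1])
    # Cutoff halfway between the projected centroids (assumes equal priors).
    cutoff = (project(w, m1) + project(w, m2)) / 2
    return w, cutoff


w, cutoff = fisher_lda(group_a, group_b)


def classify(p):
    # Group A's centroid projects above the cutoff because
    # (m1 - m2)' S_W^{-1} (m1 - m2) > 0, so high scores mean group A.
    return "A" if project(w, p) > cutoff else "B"


print("weights:", w)
print(classify((2.8, 3.1)), classify((6.0, 6.2)))
```

The weights printed here are raw (unstandardized) coefficients. Statistical packages typically also report standardized coefficients, which make the relative contribution of predictors on different scales easier to compare.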

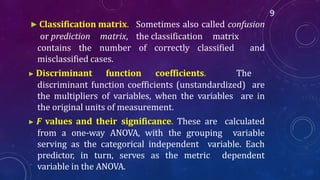

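3. Key outputs include canonical correlations, classification matrices, discriminant function coefficients, and Wilks' lambda. Wilks' lambda is the ratio of within-group to total variation and is used to test how well the functions separate cases into groups: values near 0 indicate strong separation, and values near 1 indicate little.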
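As an illustration of two of these outputs, the sketch below computes Wilks' lambda as Λ = |W| / |T|. Here W is the pooled within-group sums-of-squares-and-cross-products (SSCP) matrix and T is the total SSCP matrix about the grand centroid. It assumes hypothetical data with two groups and two predictors. With only two groups there is a single discriminant function, and in that case Λ = 1 − R², where R is the canonical correlation. The helper names (`sscp`, `det2`, `centroid`) are illustrative only.

```python
from math import sqrt
from statistics import mean

# Hypothetical toy data: two predictors for each of two groups.
groups = {
    "A": [(2.0, 3.0), (3.0, 3.5), (2.5, 2.0), (3.5, 4.0)],
    "B": [(5.0, 6.0), (6.0, 5.5), (5.5, 7.0), (6.5, 6.0)],
}


def centroid(points):
    """Mean vector of 2-D points."""
    return (mean(x for x, _ in points), mean(y for _, y in points))


def sscp(points, center):
    """Sums of squares and cross-products of 2-D points about `center`."""
    cx, cy = center
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    return [[sxx, sxy], [sxy, syy]]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


# W: pooled within-group SSCP (each group measured about its own centroid).
within = [[0.0, 0.0], [0.0, 0.0]]
for pts in groups.values():
    s = sscp(pts, centroid(pts))
    within = [[within[i][j] + s[i][j] for j in range(2)] for i in range(2)]

# T: total SSCP (all cases measured about the grand centroid).
all_pts = [p for pts in groups.values() for p in pts]
total = sscp(all_pts, centroid(all_pts))

wilks = det2(within) / det2(total)
# Two groups -> one discriminant function, so Lambda = 1 - Rc^2.
r_c = sqrt(1 - wilks)
print(f"Wilks' lambda = {wilks:.4f}, canonical correlation = {r_c:.4f}")
```

For this toy data, Λ works out to 1/11 (about 0.09). That value is close to 0, which is consistent with the two well-separated groups. With more than two groups, several functions are extracted, and the simple 1 − R² identity applies to each function's own eigenvalue rather than to the overall Λ.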