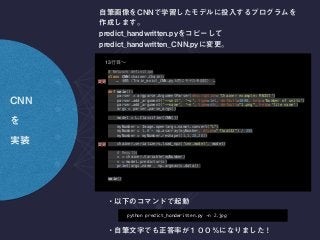

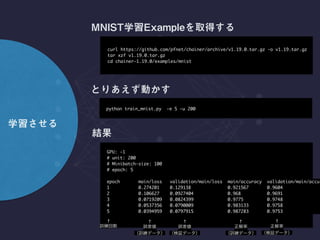

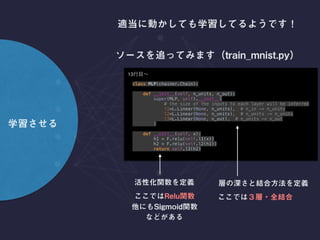

This document discusses using Chainer to classify handwritten digits from the MNIST dataset. It first shows how a trained multilayer perceptron (MLP) predicts the digit in an MNIST test image. It then moves to a convolutional neural network (CNN), whose architecture consists of convolution and max-pooling layers followed by a fully connected layer. The CNN is trained on the MNIST data for 3 epochs on a GPU and saved, and the trained model is then used to predict the digit in a new input image.
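The CNN described above is not defined in this section, so every layer size below is an assumption. The sketch traces the forward pass in plain NumPy: one 3×3 convolution with 8 hypothetical filters, a ReLU (also assumed), 2×2 max pooling, and a fully connected layer producing 10 digit scores. Random weights stand in for trained ones. It illustrates the data flow and shapes for a (1, 28, 28) image and is not Chainer's implementation.

```python
import numpy as np


def conv2d_valid(x, w, b):
    """Naive 'valid' 2-D convolution (cross-correlation, as deep learning libraries compute it).

    x: (C_in, H, W) image, w: (C_out, C_in, kh, kw) filters, b: (C_out,) biases.
    Returns feature maps of shape (C_out, H - kh + 1, W - kw + 1).
    """
    c_out, _, kh, kw = w.shape
    _, h, wd = x.shape
    out = np.empty((c_out, h - kh + 1, wd - kw + 1))
    for i in range(h - kh + 1):
        for j in range(wd - kw + 1):
            patch = x[:, i:i + kh, j:j + kw]  # (C_in, kh, kw) window under the filter
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out


def max_pool_2x2(x):
    """2x2 max pooling with stride 2 on (C, H, W); an odd last row/column is dropped."""
    c, h, w = x.shape
    h2, w2 = h // 2, w // 2
    x = x[:, :h2 * 2, :w2 * 2]
    return x.reshape(c, h2, 2, w2, 2).max(axis=(2, 4))


rng = np.random.default_rng(0)
image = rng.random((1, 28, 28))  # random stand-in for one MNIST digit: (channel, height, width)

n_filters = 8  # hypothetical layer size
w_conv = rng.normal(0.0, 0.1, (n_filters, 1, 3, 3))
b_conv = np.zeros(n_filters)
conv_out = np.maximum(conv2d_valid(image, w_conv, b_conv), 0.0)  # conv + ReLU: (8, 26, 26)
pooled = max_pool_2x2(conv_out)  # (8, 13, 13)

flat = pooled.reshape(-1)  # 8 * 13 * 13 = 1352 features
w_fc = rng.normal(0.0, 0.01, (10, flat.size))
b_fc = np.zeros(10)
logits = w_fc @ flat + b_fc  # fully connected layer: one score per digit 0-9
print("shapes:", conv_out.shape, pooled.shape, logits.shape,
      "predicted digit:", int(np.argmax(logits)))
```

Each 2×2 pooling step halves the spatial size. This is why the fully connected layer sees far fewer inputs than the convolution produces.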

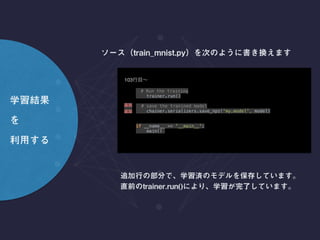

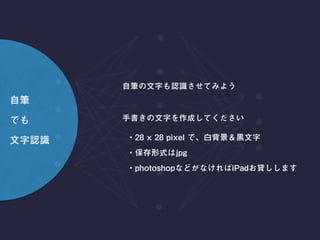

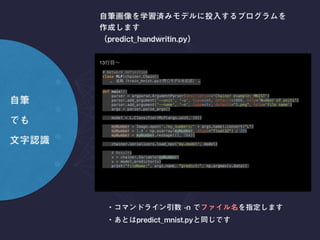

![import numpy as np

# Network definition

class MLP(chainer.Chain):

… train_mnist.py …

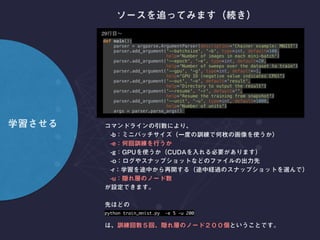

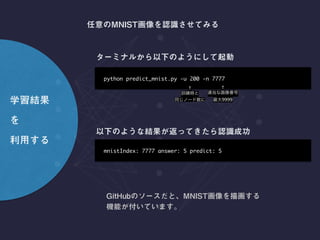

def main():

parser = argparse.ArgumentParser(description='Chainer example: MNIST')

parser.add_argument('--unit', '-u', type=int, default=1000, help='Number of units')

parser.add_argument('--number', '-n', type=int, default=1, help='mnist index')

args = parser.parse_args()

train, test = chainer.datasets.get_mnist()

index = min(args.number,9999)

targetNumber = test[index][0].reshape(-1,784)

targetAnswer = test[index][1]

model = L.Classifier(MLP(args.unit, 10))

chainer.serializers.load_npz('linear.model', model)

# Results

x = chainer.Variable(targetNumber)

v = model.predictor(x)

print("mnistIndex:",args.number,"answer:", targetAnswer ,"predict:", np.argmax(v.data))

main()

13](https://image.slidesharecdn.com/slide20161220-161220025001/85/DeepLearning-20161220-17-320.jpg)
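The prediction script does three things. It reshapes one test image into a batch of size one. It runs only `model.predictor`, because calling the `L.Classifier` wrapper itself expects the label as well and returns the loss. It then takes the argmax of the 10 class scores. Below is a NumPy sketch of that logic. It assumes a hypothetical random linear layer in place of the trained MLP and a random vector in place of the MNIST test image. `clamp_index` and `predict_digit` are illustrative names, not part of Chainer.

```python
import numpy as np

N_TEST = 10000  # size of the MNIST test split, so valid indices are 0..9999


def clamp_index(n):
    """Mirror of min(args.number, 9999): indices past the end map to the last test image."""
    return min(n, N_TEST - 1)


def predict_digit(predictor, image_784):
    """Return the predicted class for one flat 784-pixel image."""
    batch = image_784.reshape(-1, 784)  # (784,) -> (1, 784): a mini-batch holding one image
    scores = predictor(batch)           # (1, 10): one score per digit
    # np.argmax without axis= returns an index into the flattened array;
    # for a batch of one image that index is exactly the class id 0-9.
    return int(np.argmax(scores))


# Hypothetical stand-in for model.predictor: a random, untrained linear layer
rng = np.random.default_rng(0)
W = rng.normal(0.0, 0.01, (784, 10)).astype(np.float32)
b = np.zeros(10, dtype=np.float32)


def predictor(x):
    return x @ W + b


image = rng.random(784).astype(np.float32)  # random stand-in for test[index][0]
index = clamp_index(12345)
print("mnistIndex:", index, "predict:", predict_digit(predictor, image))
```

For a batch of several images, `np.argmax(scores, axis=1)` would instead return one class per image.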

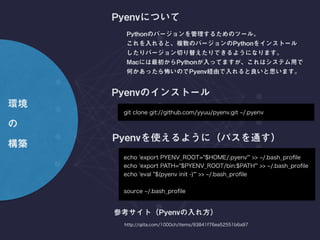

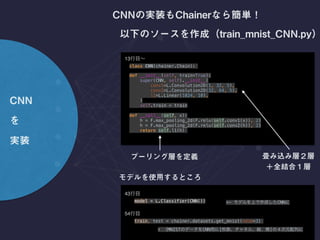

![43

# Set up a neural network to train

model = L.Classifier(CNN())

if args.gpu >= 0:

chainer.cuda.get_device(args.gpu).use() # Make a specified GPU current

model.to_gpu() # Copy the model to the GPU

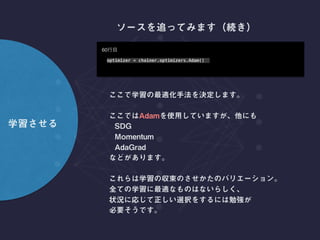

# Setup an optimizer

optimizer = chainer.optimizers.Adam()

optimizer.setup(model)

# Load the MNIST dataset

train, test = chainer.datasets.get_mnist(ndim=3)

<— CNN

↑ MNIST CNN [ ]

python train_mnist_CNN.py -e 3](https://image.slidesharecdn.com/slide20161220-161220025001/85/DeepLearning-20161220-24-320.jpg)
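The training setup wraps the CNN in `L.Classifier`, which by default computes softmax cross-entropy (plus accuracy) on the predictor's output. It optimizes the model with `chainer.optimizers.Adam()`, whose defaults are alpha=0.001, beta1=0.9, beta2=0.999 and eps=1e-8. Below is a NumPy sketch of what repeated training steps compute. It assumes a hypothetical linear model and random data in place of the CNN and MNIST. It uses the textbook Adam update, and Chainer's own implementation may differ in small details, such as exactly where eps is applied.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean softmax cross-entropy and its gradient w.r.t. the logits."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract max for numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    n = logits.shape[0]
    loss = -np.log(probs[np.arange(n), labels]).mean()
    grad = probs.copy()
    grad[np.arange(n), labels] -= 1.0  # d(loss)/d(logits) = softmax - one_hot
    return loss, grad / n


class Adam:
    """Textbook Adam update for a single parameter array."""

    def __init__(self, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.alpha, self.beta1, self.beta2, self.eps = alpha, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def update(self, param, grad):
        if self.m is None:
            self.m = np.zeros_like(param)
            self.v = np.zeros_like(param)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad       # running mean of gradients
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2  # running mean of squared gradients
        m_hat = self.m / (1 - self.beta1 ** self.t)                  # bias correction
        v_hat = self.v / (1 - self.beta2 ** self.t)
        param -= self.alpha * m_hat / (np.sqrt(v_hat) + self.eps)    # in-place parameter update


rng = np.random.default_rng(0)
x = rng.random((32, 784))         # hypothetical mini-batch of 32 flattened images
t = rng.integers(0, 10, size=32)  # hypothetical digit labels
W = np.zeros((784, 10))           # hypothetical linear model standing in for the CNN
optimizer = Adam()

losses = []
for _ in range(100):
    loss, grad_logits = softmax_cross_entropy(x @ W, t)
    losses.append(loss)
    optimizer.update(W, x.T @ grad_logits)  # dL/dW = x^T @ dL/dlogits
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

With all-zero weights every class gets probability 0.1, so the first loss is ln 10 ≈ 2.303. Adam's per-parameter scaling keeps early steps close to alpha in size regardless of the raw gradient magnitude.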