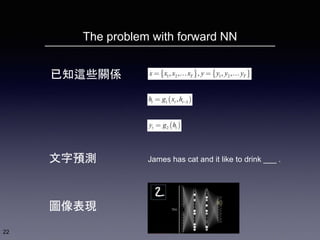

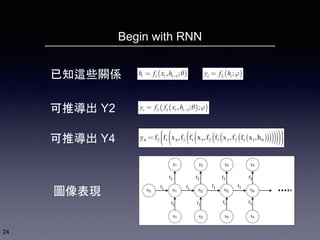

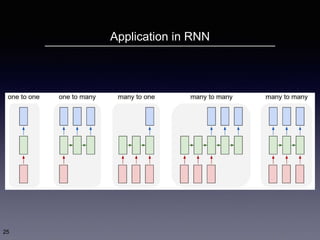

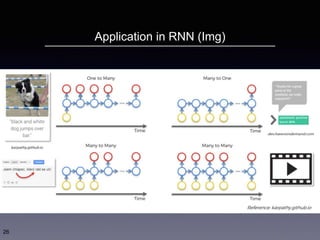

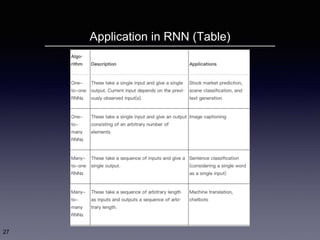

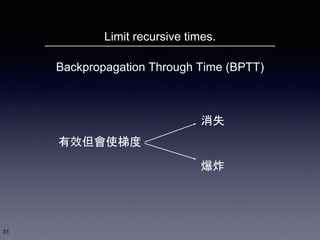

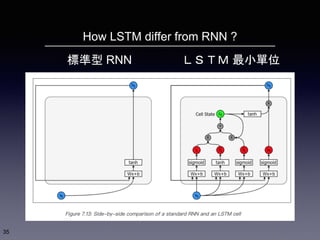

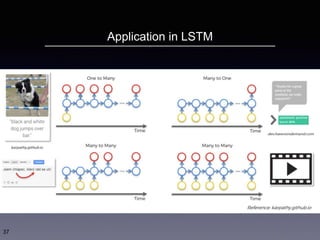

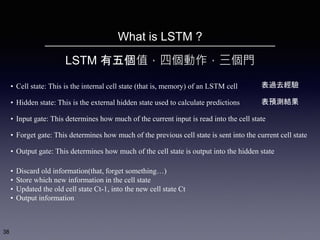

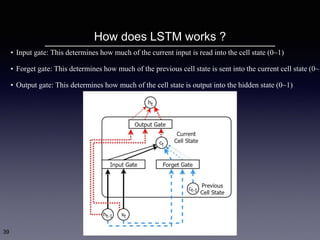

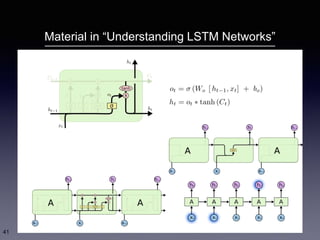

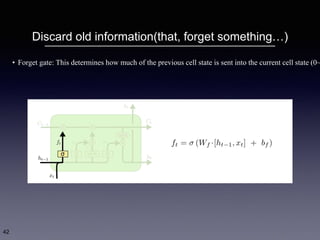

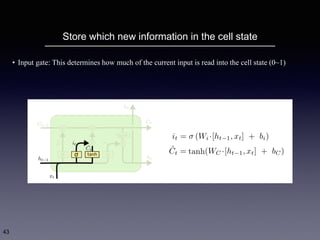

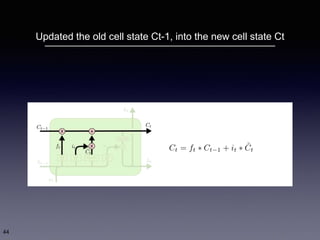

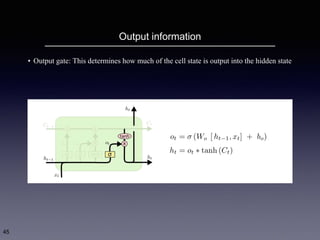

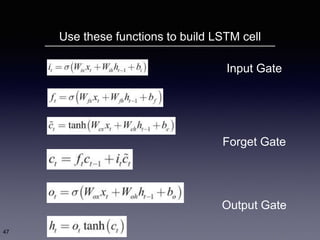

The document covers deep learning for natural language processing (NLP): data preprocessing, the use of NLTK and Word2Vec, and an introduction to recurrent neural networks (RNNs) and long short-term memory (LSTM) networks. It contrasts traditional feature engineering with neural network approaches, explains how the RNN and LSTM architectures work, and describes their applications, particularly how they address difficulties with backpropagation and long-term dependencies. The key components of an LSTM, including its gates and cell state, are discussed to show how it manages memory effectively.

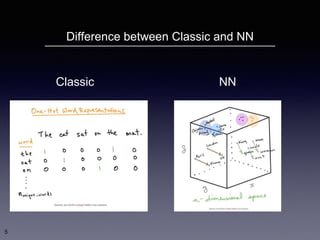

![This is called feature engineering

Bag-of-words

N-gram

Example sentence: Bob went to the market to buy some flowers.

[1, 1, 2, 1, 1, 1, 1, 1, 0, 0, 0]

["Bo", "ob", "b ", " w", "we", "en", ..., "me", "e ", " f", "fl", "lo", "ow", "we", "er", "rs"]](https://image.slidesharecdn.com/deeplearningnlp-180609042659/85/Deep-learning-nlp-7-320.jpg)

![This is called feature engineering

Bag-of-words

N-gram

Example sentence: Bob went to the market to buy some flowers.

[1, 1, 2, 1, 1, 1, 1, 1, 0, 0, 0]

["Bo", "ob", "b ", " w", "we", "en", ..., "me", "e ", " f", "fl", "lo", "ow", "we", "er", "rs"]

The drawbacks are:

1. It consumes too many resources

2. It requires experts, and their experience is hard to replicate](https://image.slidesharecdn.com/deeplearningnlp-180609042659/85/Deep-learning-nlp-8-320.jpg)
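
The two hand-crafted features on the slides above can be reproduced with a few lines of Python. This is only a minimal sketch: the function names `bag_of_words` and `char_ngrams` are made up for illustration, and the vocabulary is an assumption (the eight distinct words of the example sentence plus three hypothetical words that do not occur in it, which would explain the trailing zeros in the slide's vector).

```python
from collections import Counter


def bag_of_words(sentence, vocabulary):
    """Count how often each vocabulary word occurs in the sentence."""
    # Naive tokenization: lowercase, drop the final period, split on spaces.
    tokens = sentence.lower().rstrip(".").split()
    counts = Counter(tokens)
    # Counter returns 0 for words that never appear.
    return [counts[word] for word in vocabulary]


def char_ngrams(text, n=2):
    """Return every run of n consecutive characters in the text."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


sentence = "Bob went to the market to buy some flowers."

# Assumed vocabulary: the last three entries are hypothetical words
# that are not in the sentence.
vocabulary = ["bob", "went", "to", "the", "market", "buy", "some",
              "flowers", "cat", "dog", "tree"]

bow = bag_of_words(sentence, vocabulary)
bigrams = char_ngrams(sentence.rstrip("."), n=2)

print(bow)          # [1, 1, 2, 1, 1, 1, 1, 1, 0, 0, 0]
print(bigrams[:6])  # ['Bo', 'ob', 'b ', ' w', 'we', 'en']
```

Even in this toy form, both choices (which words go into the vocabulary, which `n` to use) have to be made by hand, which is the kind of expert effort the slide lists as a drawback.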