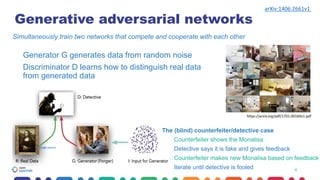

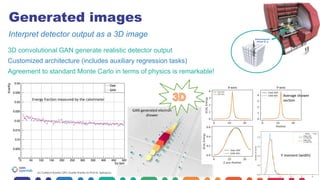

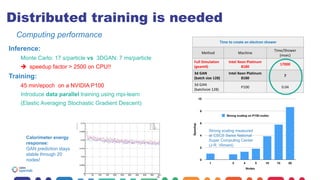

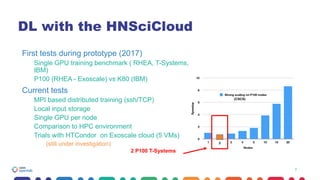

The document outlines efforts to accelerate Monte Carlo simulation with machine learning and deep learning techniques. The goal is a general fast-simulation tool suited to the needs of the High-Luminosity LHC (HL-LHC). It describes the use of Generative Adversarial Networks (GANs) to generate realistic detector output, which yields significant speedups at inference time. Next steps include optimizing resource management and further testing. Initial results are promising.