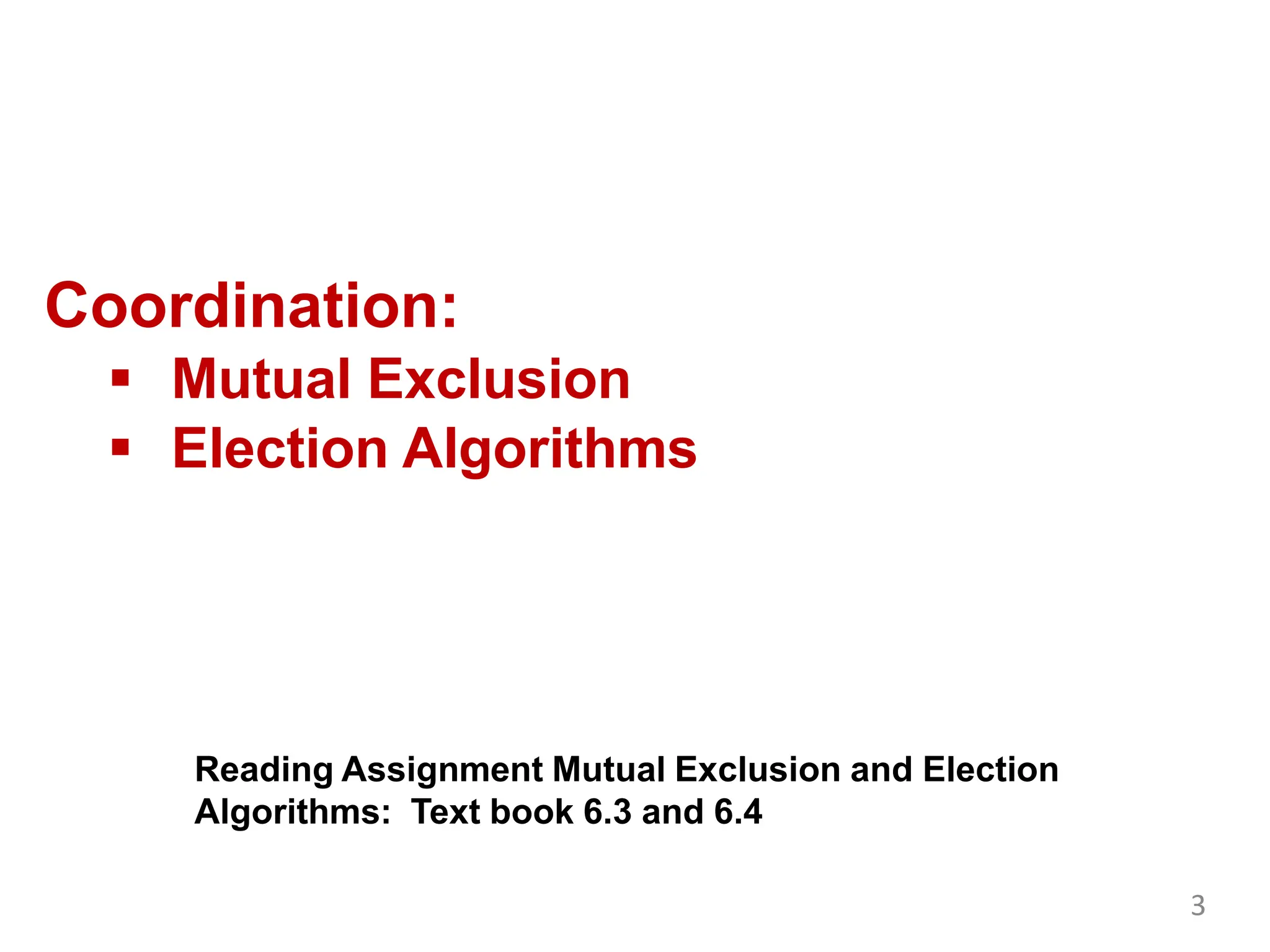

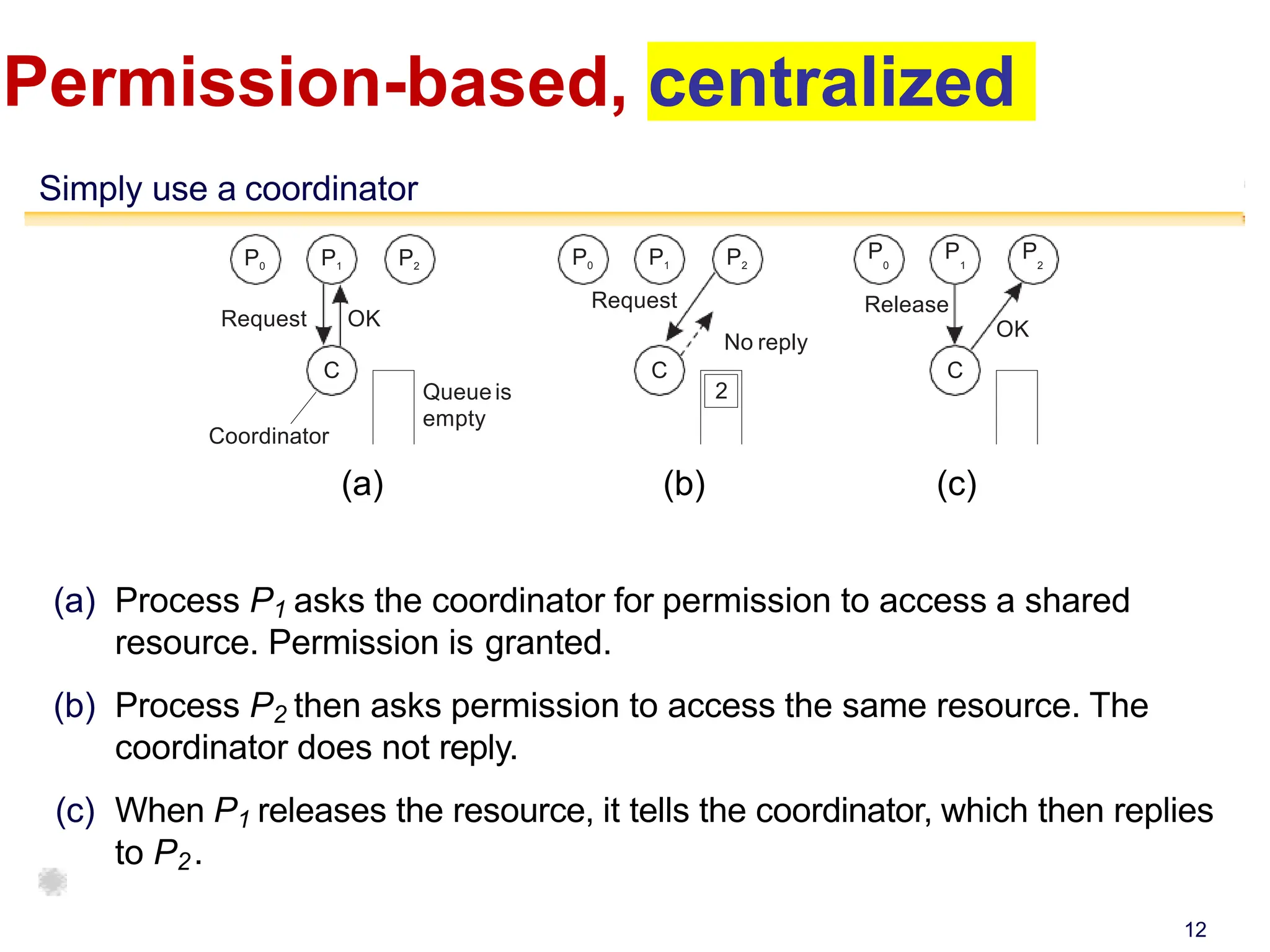

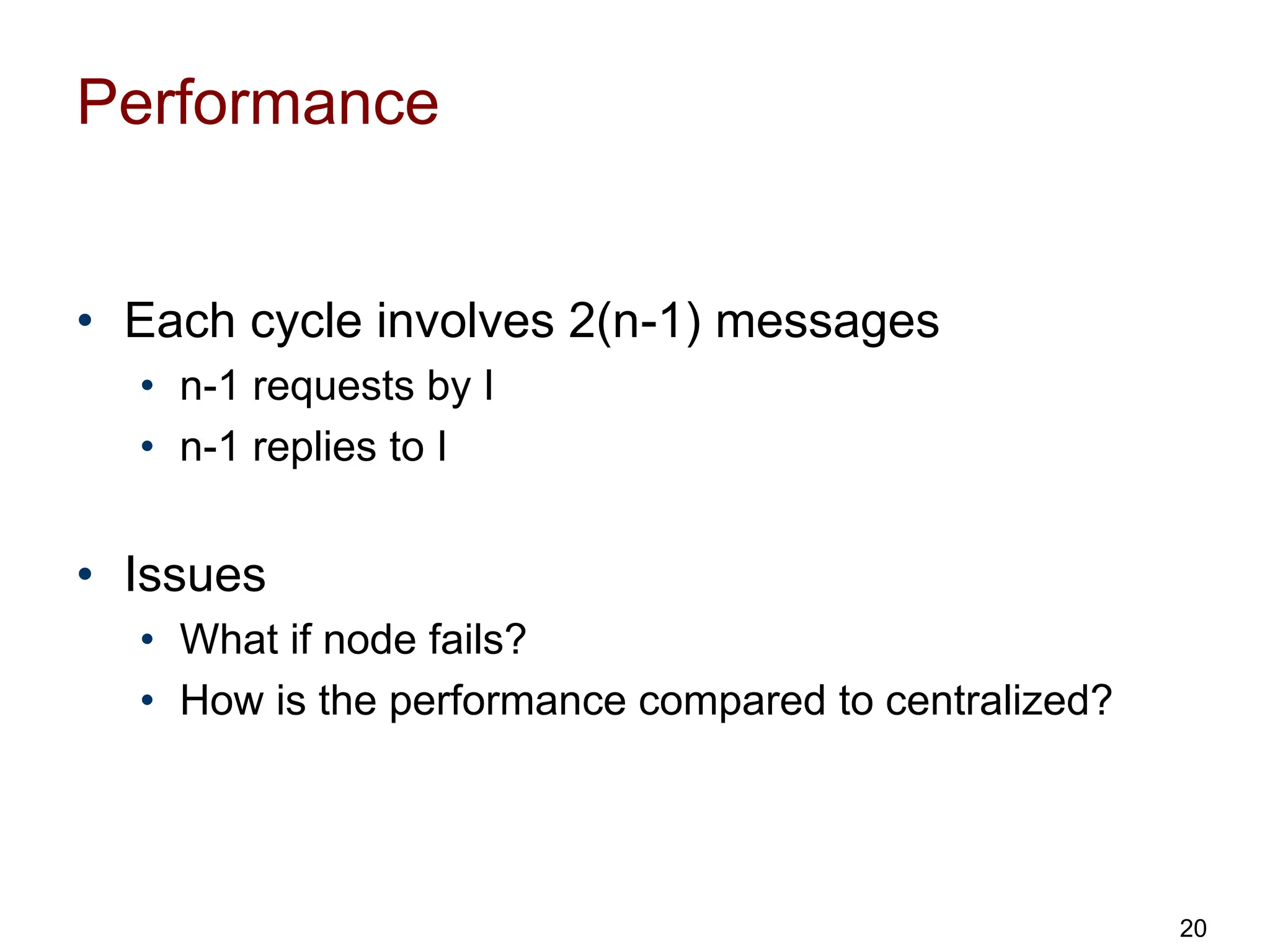

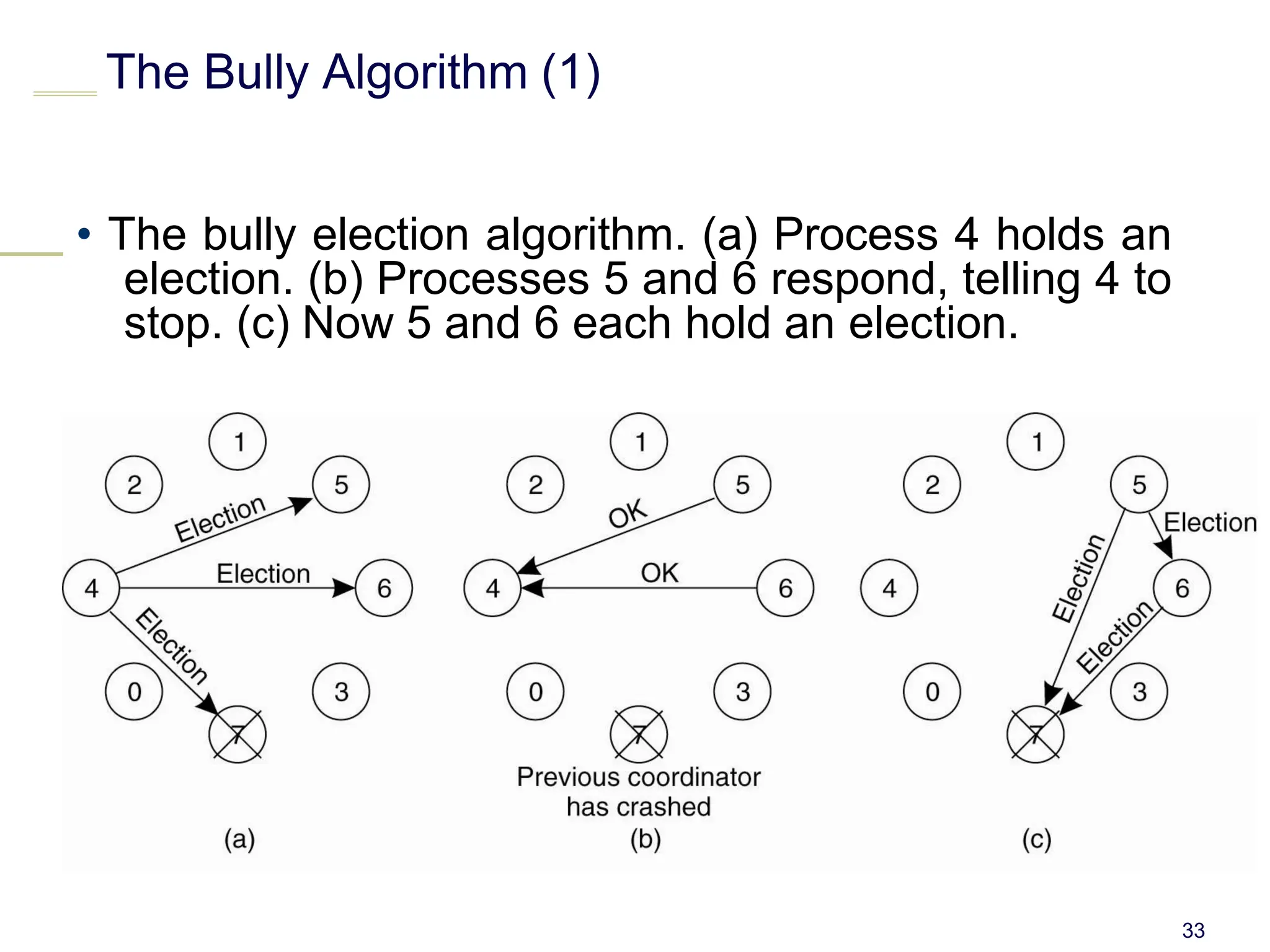

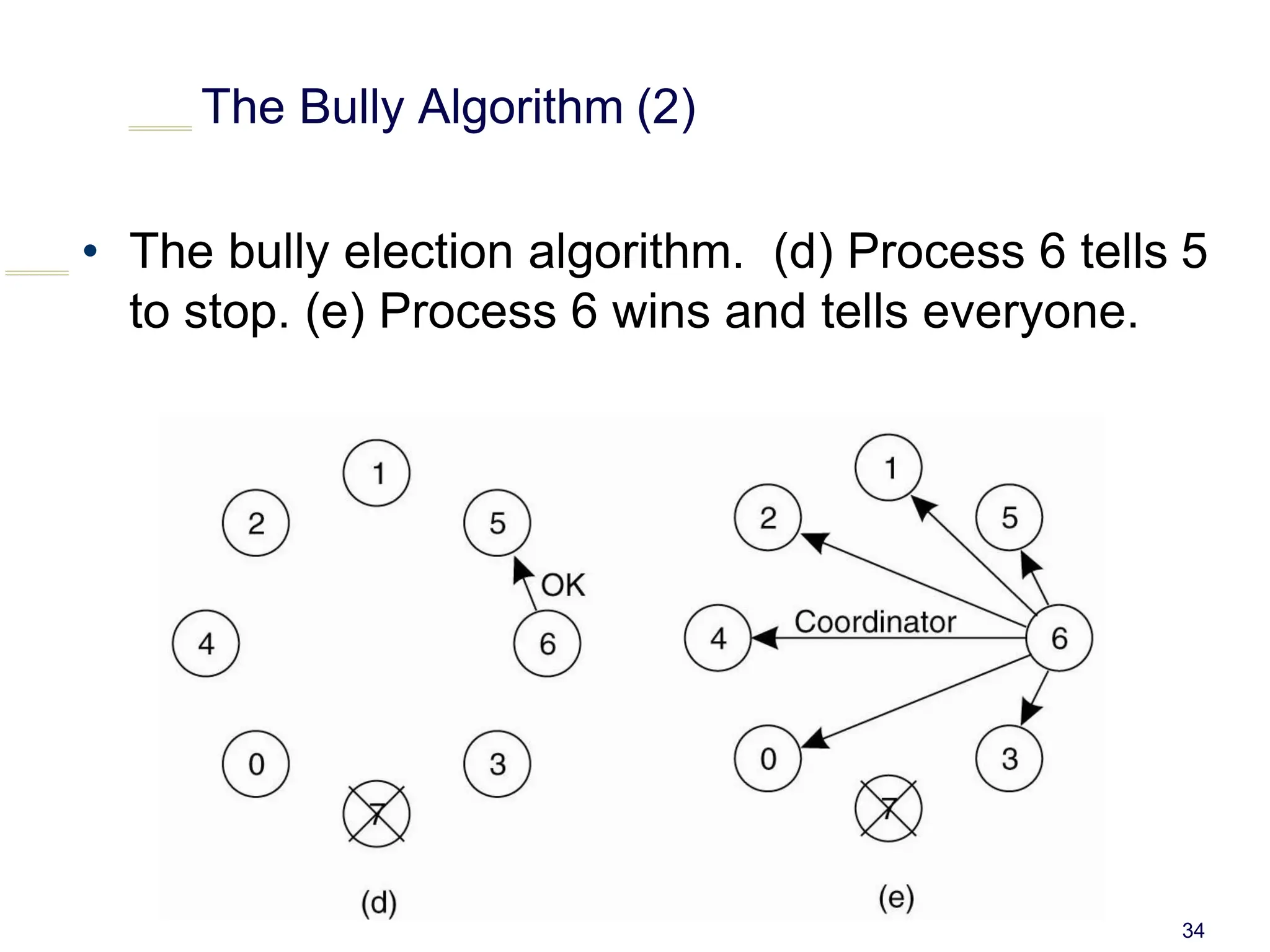

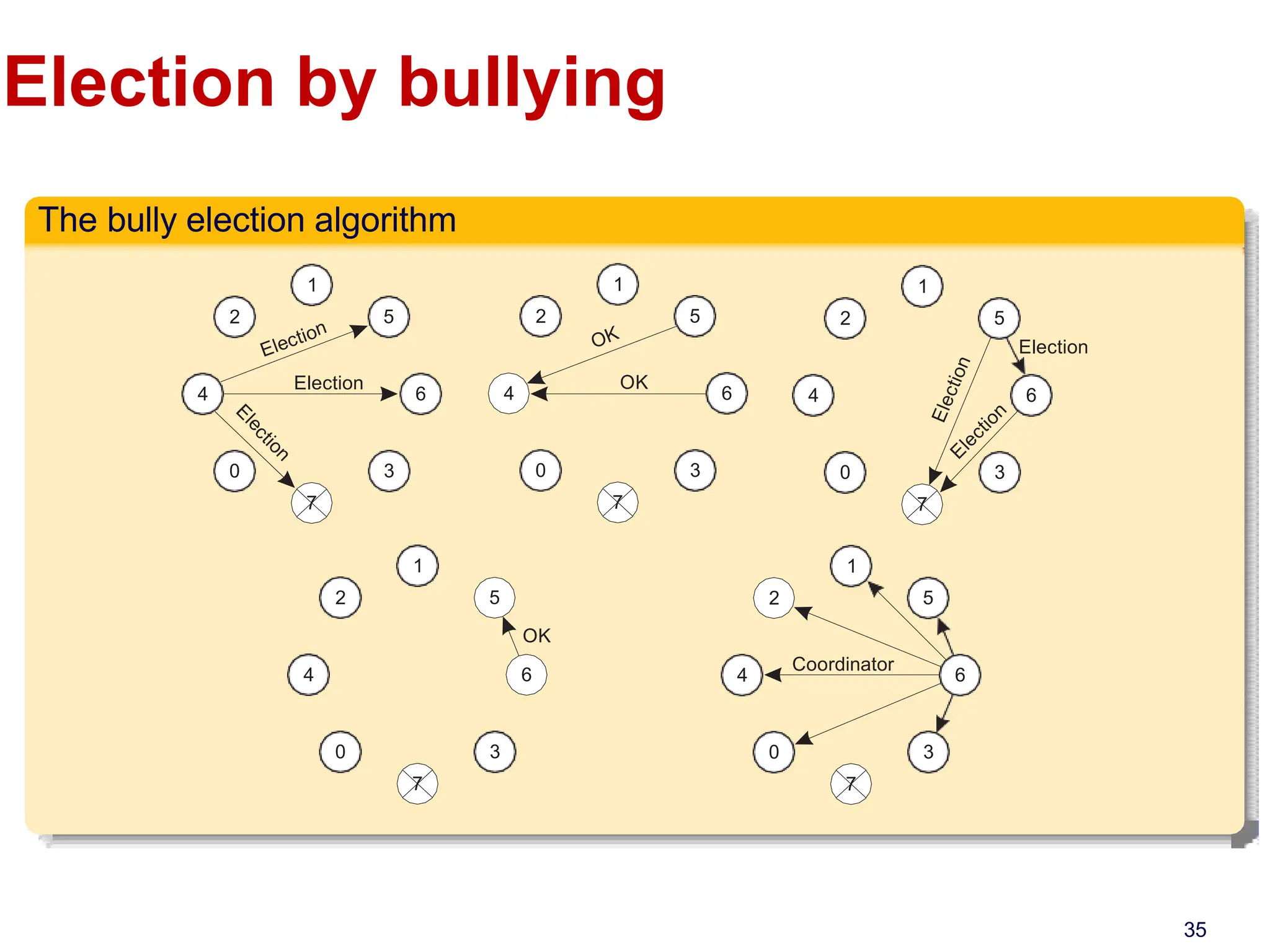

This document discusses algorithms for mutual exclusion and election in distributed systems. It covers centralized and distributed mutual exclusion algorithms, including Ricart & Agrawala and token ring. It also covers two election algorithms: the bully algorithm, in which a process "bullies" lower-numbered processes so that the highest-numbered live process becomes coordinator, and a ring-based algorithm, in which processes are organized in a logical ring.
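As a rough illustration of the bully idea, here is a minimal sketch, assuming the usual textbook formulation: a process sends ELECTION to every higher-numbered process, and if none of them is alive to answer, it declares itself coordinator. The function name `bully_election` and the sequential hand-over are simplifications made for this example. In a real system the higher processes hold their elections concurrently, and failures are detected by timeouts.

```python
def bully_election(initiator, alive):
    """Simulate a bully election started by `initiator`.

    `alive` is the set of process IDs that are currently up.
    Returns (coordinator, log). Assumption: the initiator itself is alive.
    """
    log = []
    candidate = initiator
    while True:
        # Live processes with a higher ID than the current candidate.
        higher = sorted(p for p in alive if p > candidate)
        if not higher:
            # Nobody higher answered, so the candidate wins.
            log.append(f"P{candidate} becomes coordinator")
            return candidate, log
        log.append(f"P{candidate} sends ELECTION to {higher}")
        # Simplification: follow only the lowest responder's election;
        # the final outcome is the same as with concurrent elections.
        candidate = higher[0]


if __name__ == "__main__":
    # Hypothetical scenario: processes 0..6 are up, P7 has crashed.
    coordinator, log = bully_election(4, {0, 1, 2, 3, 4, 5, 6})
    for line in log:
        print(line)
```

In this scenario P4 hands over to P5, P5 hands over to P6, and P6, finding no live process above it, takes the coordinator role.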

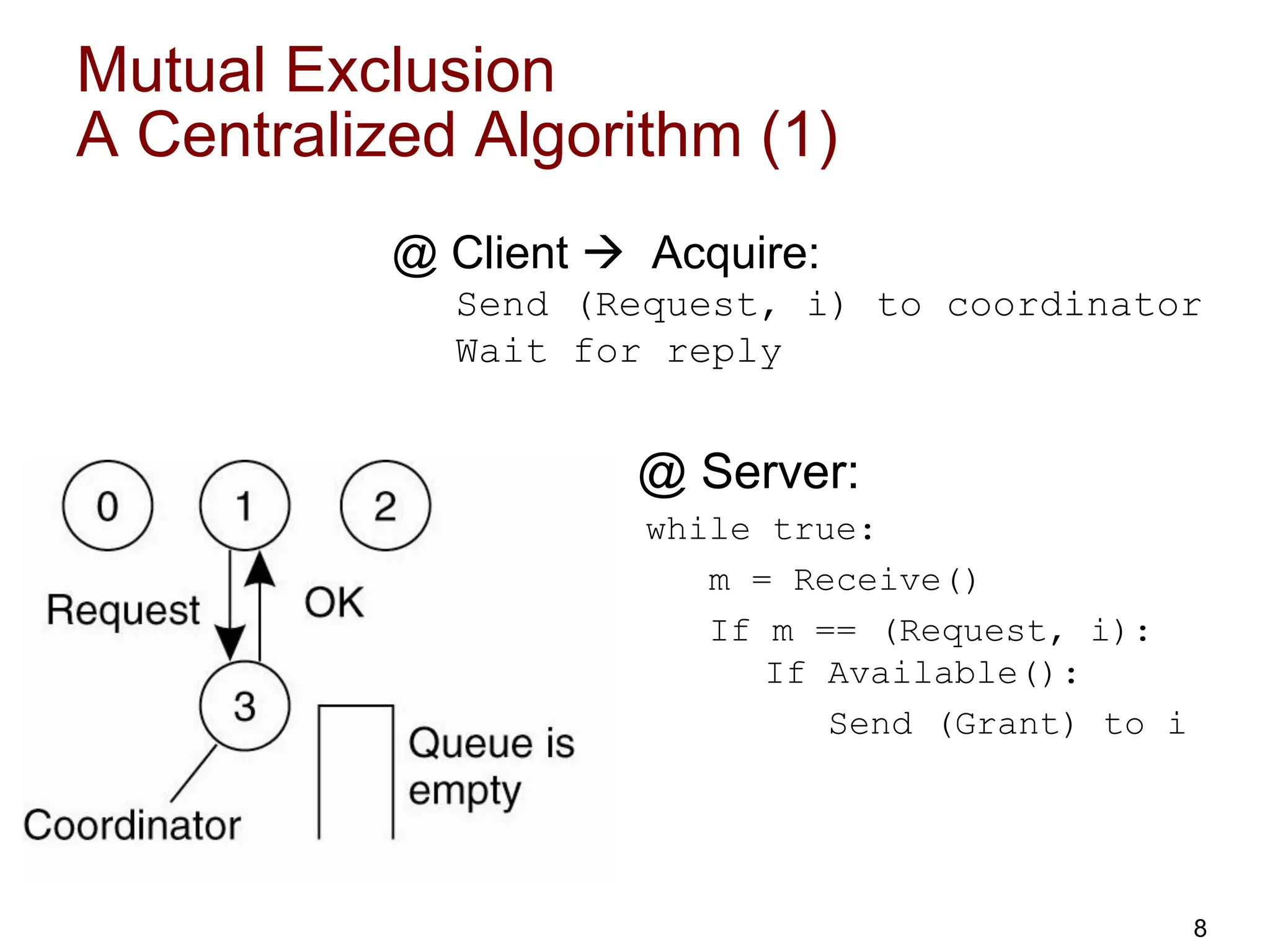

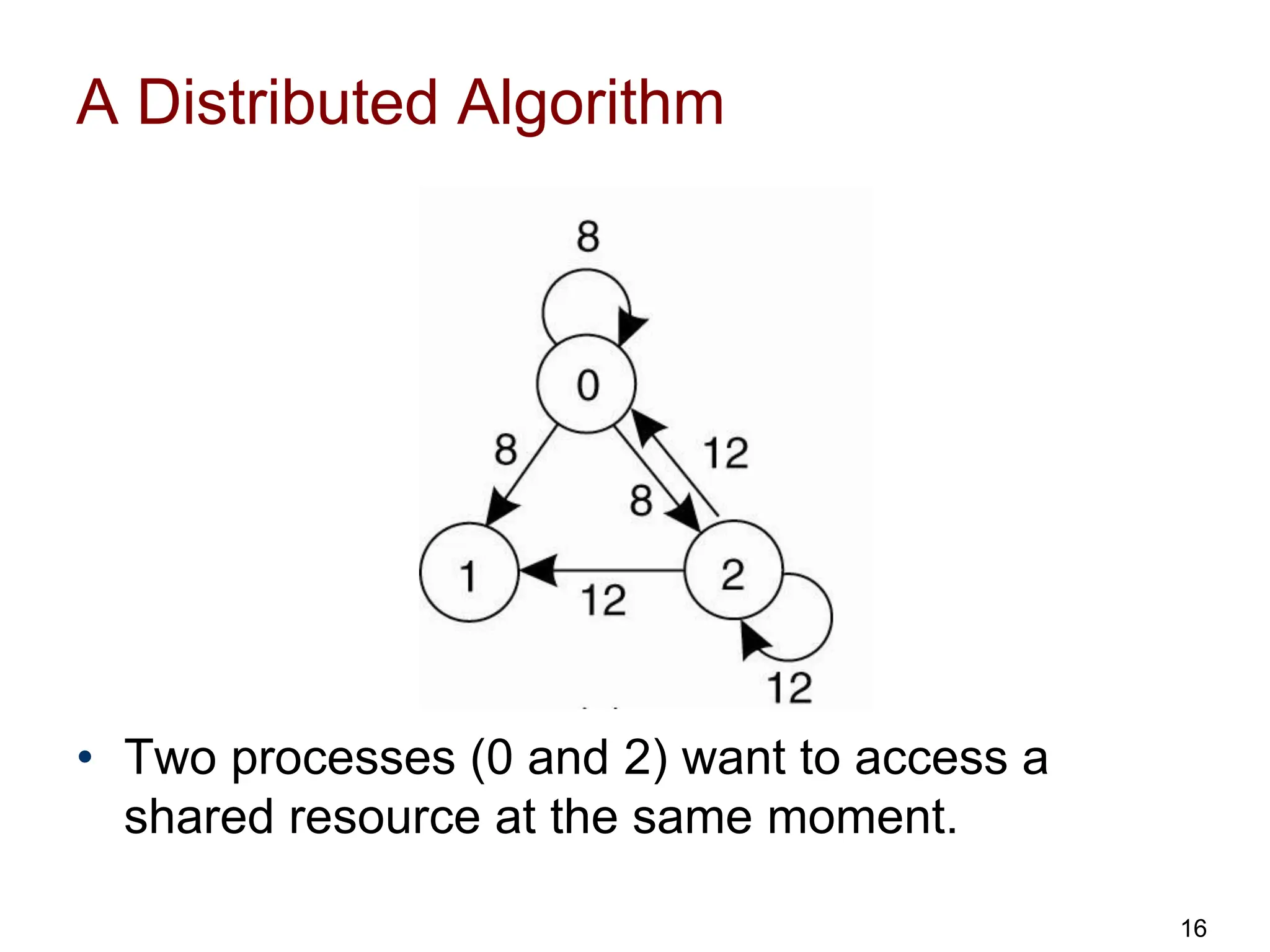

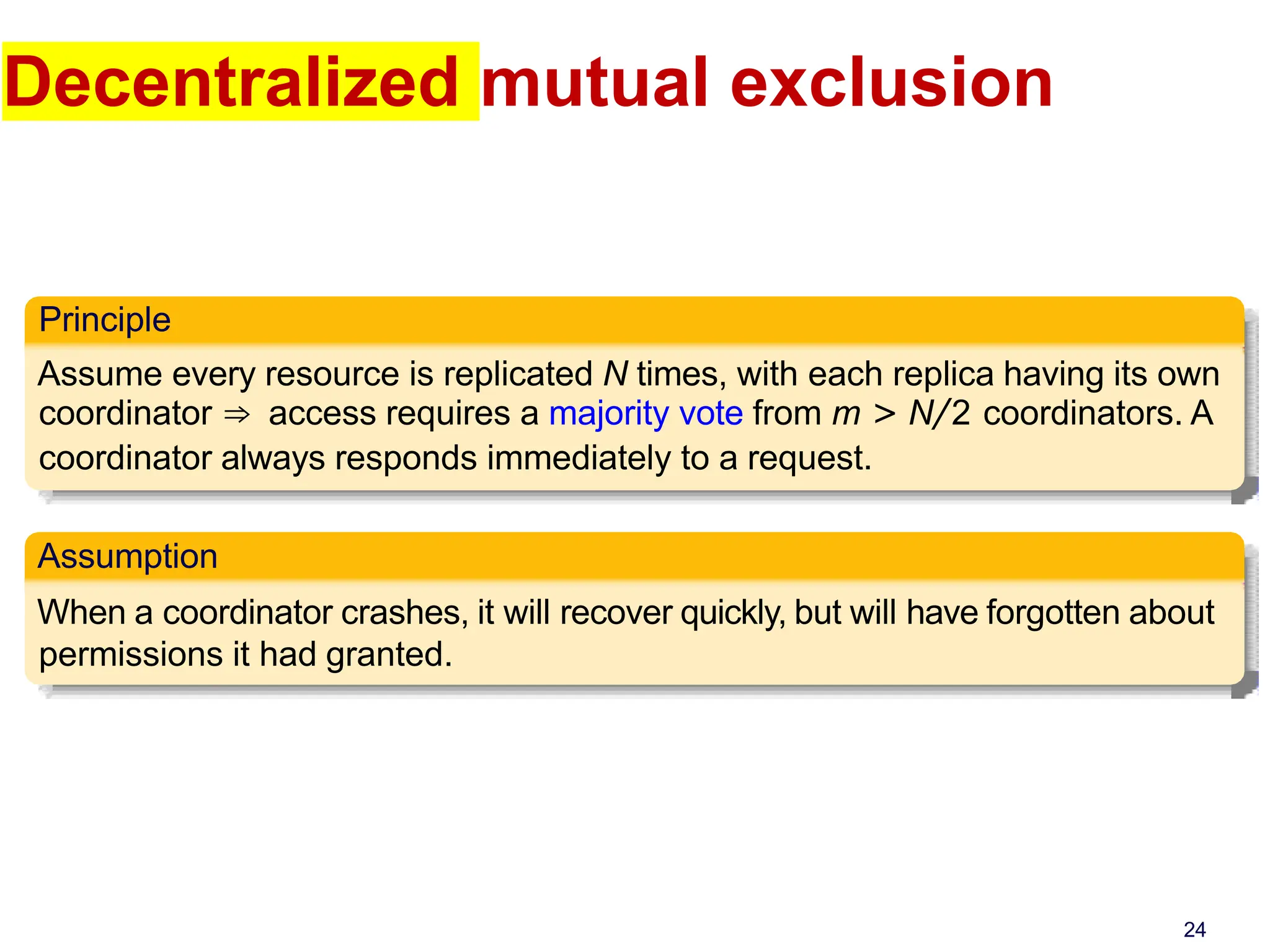

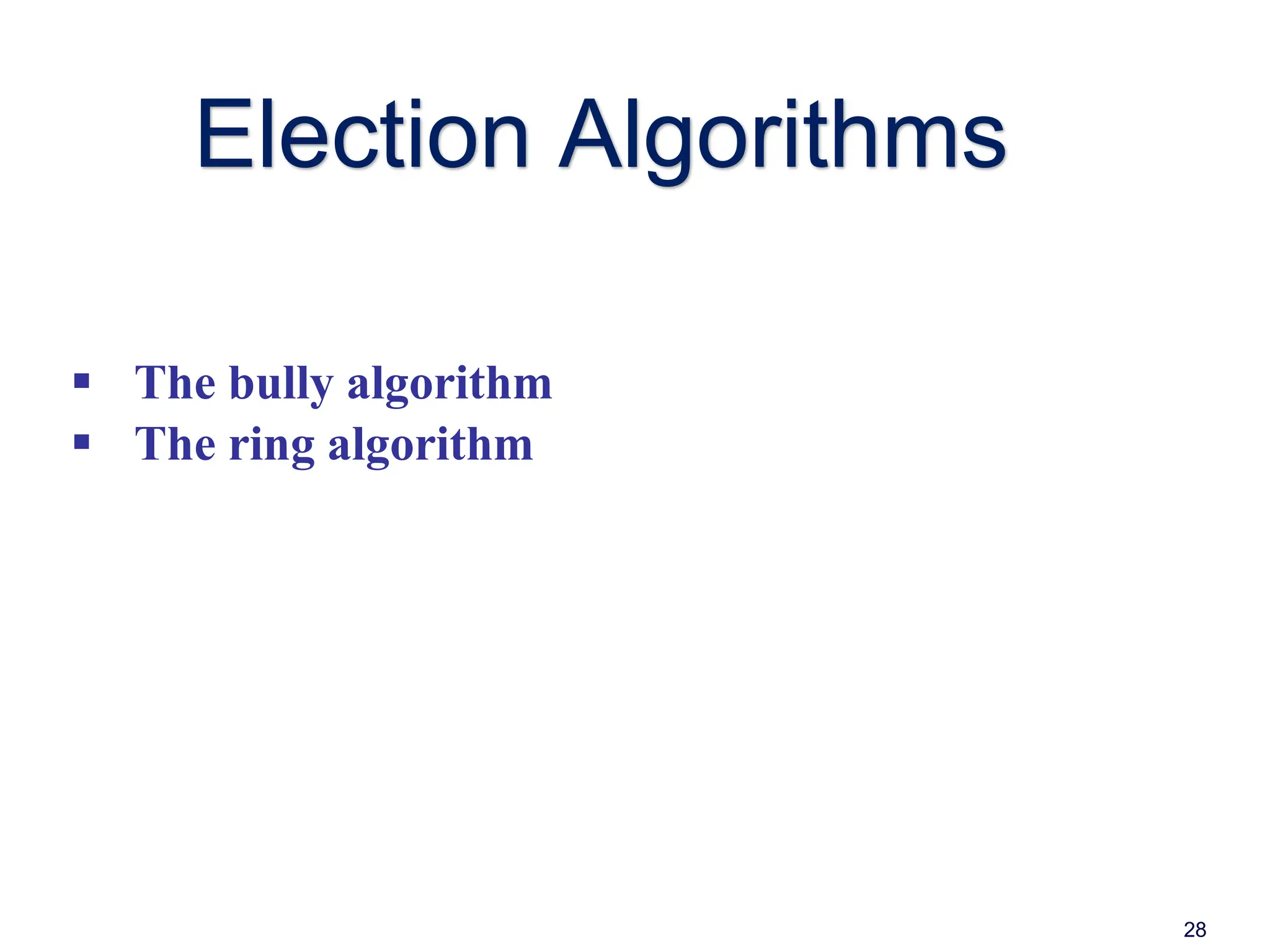

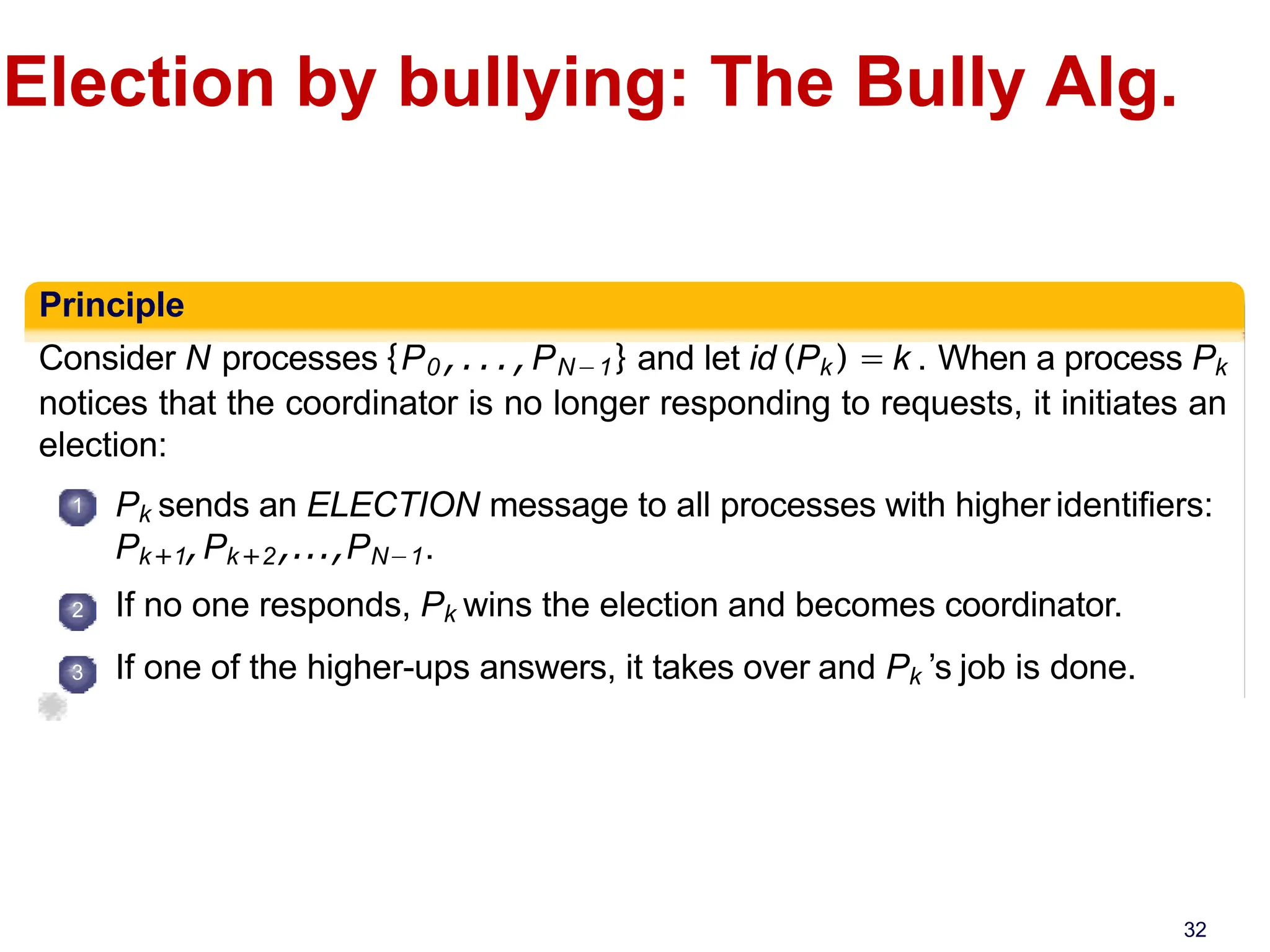

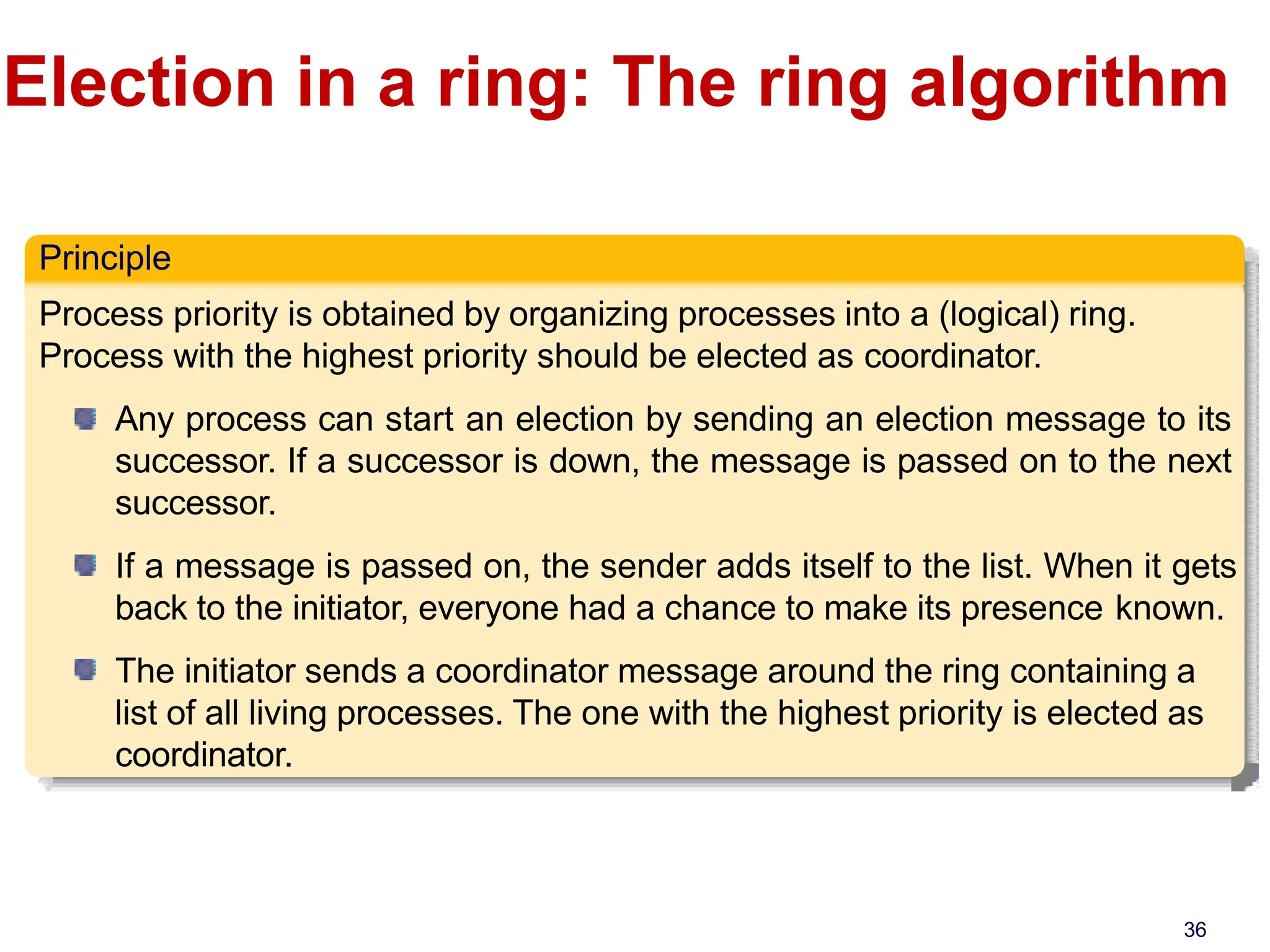

![Election algorithm using a ring](https://image.slidesharecdn.com/dclecture04and05mutualexcutionandelectionalgorithms-240401130316-795feda8/75/DC-Lecture-04-and-05-Mutual-Excution-and-Election-Algorithms-pdf-37-2048.jpg)

Election in a ring: election algorithm using a ring. Processes 0 to 7 are arranged in a logical ring. The solid line shows the election messages initiated by P6, and the dashed line shows the messages initiated by P3. Each message accumulates the IDs of the processes it passes:

- Initiated by P3: [3], [3,4], [3,4,5], [3,4,5,6], [3,4,5,6,0], [3,4,5,6,0,1], [3,4,5,6,0,1,2]
- Initiated by P6: [6], [6,0], [6,0,1], [6,0,1,2], [6,0,1,2,3], [6,0,1,2,3,4], [6,0,1,2,3,4,5]

Process 7 does not appear in either list.
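The two message lists in the ring figure can be reproduced with a short simulation. This is a minimal sketch under two assumptions that the slide does not state: that P7 is absent from the lists because it has failed, and that, as in the usual textbook formulation, the highest ID in the completed list becomes coordinator. The function name `ring_election` is hypothetical.

```python
def ring_election(initiator, ring_size, alive):
    """Pass one election message around a logical ring.

    Processes are numbered 0..ring_size-1 and each forwards the message
    to its successor, skipping processes that are not in `alive`.
    Returns (coordinator, trace), where trace holds the message contents
    after each hop. Assumption: the initiator itself is alive.
    """
    members = [initiator]
    trace = [list(members)]
    current = (initiator + 1) % ring_size
    while current != initiator:
        if current in alive:
            # A live process appends its own ID and forwards the message.
            members.append(current)
            trace.append(list(members))
        current = (current + 1) % ring_size
    # Assumed rule: the highest ID in the full list wins.
    return max(members), trace


if __name__ == "__main__":
    alive = {0, 1, 2, 3, 4, 5, 6}  # assumption: P7 has crashed
    for start in (3, 6):
        coordinator, trace = ring_election(start, 8, alive)
        print(f"started by P{start}: {trace[-1]} -> coordinator P{coordinator}")
```

Both elections end with the same membership, only in a different order, so both initiators pick the same coordinator even though they started independently.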