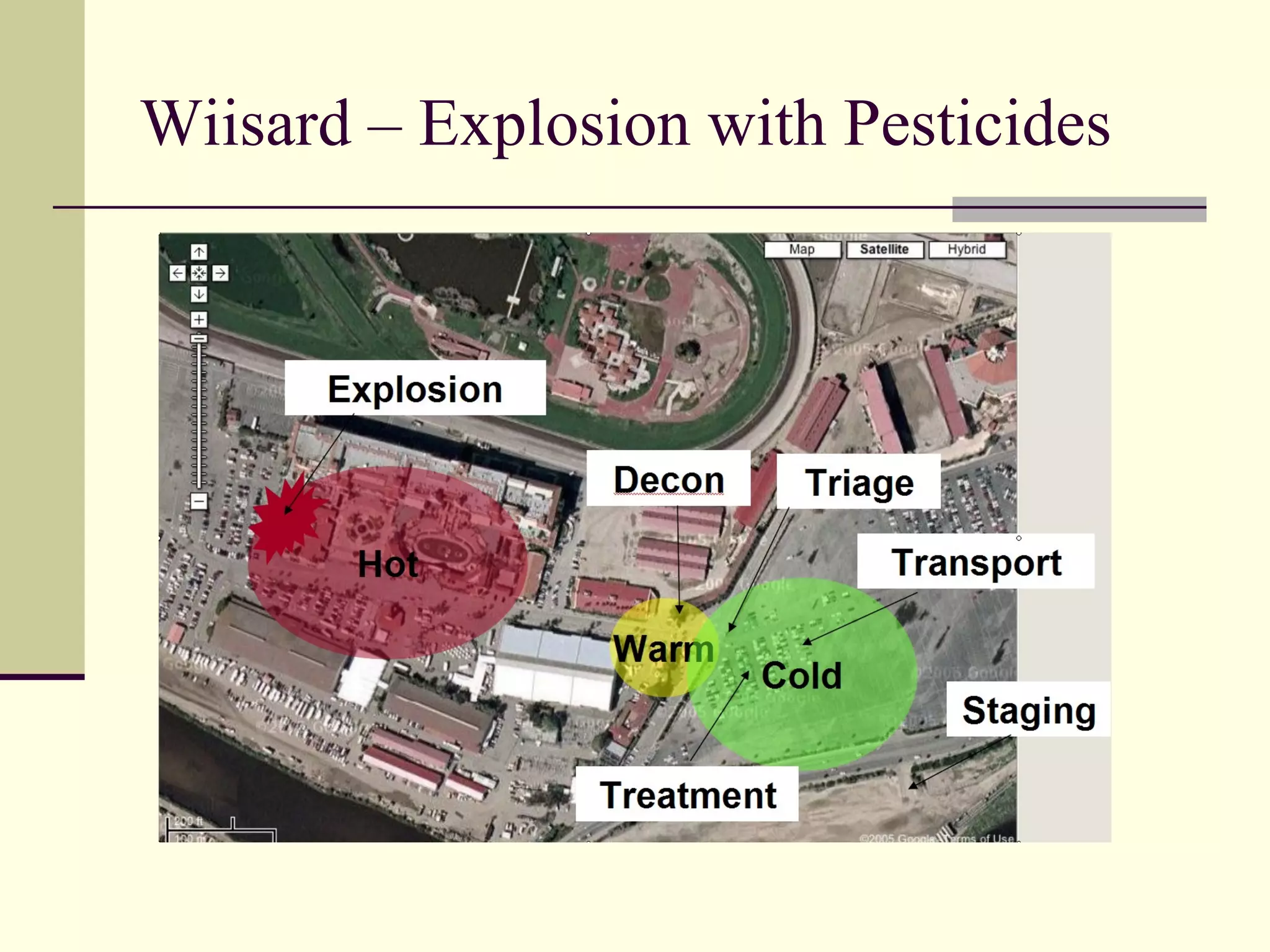

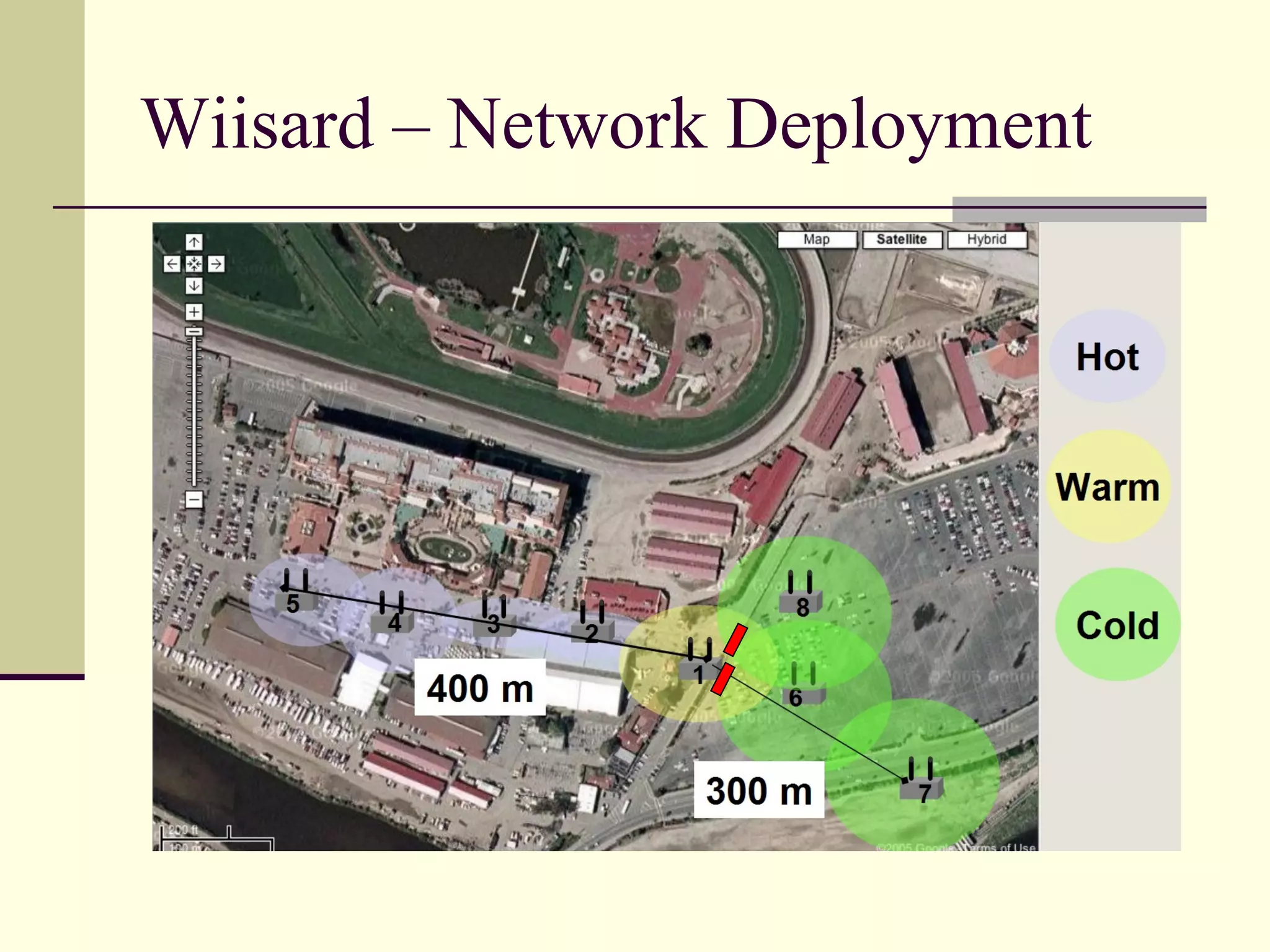

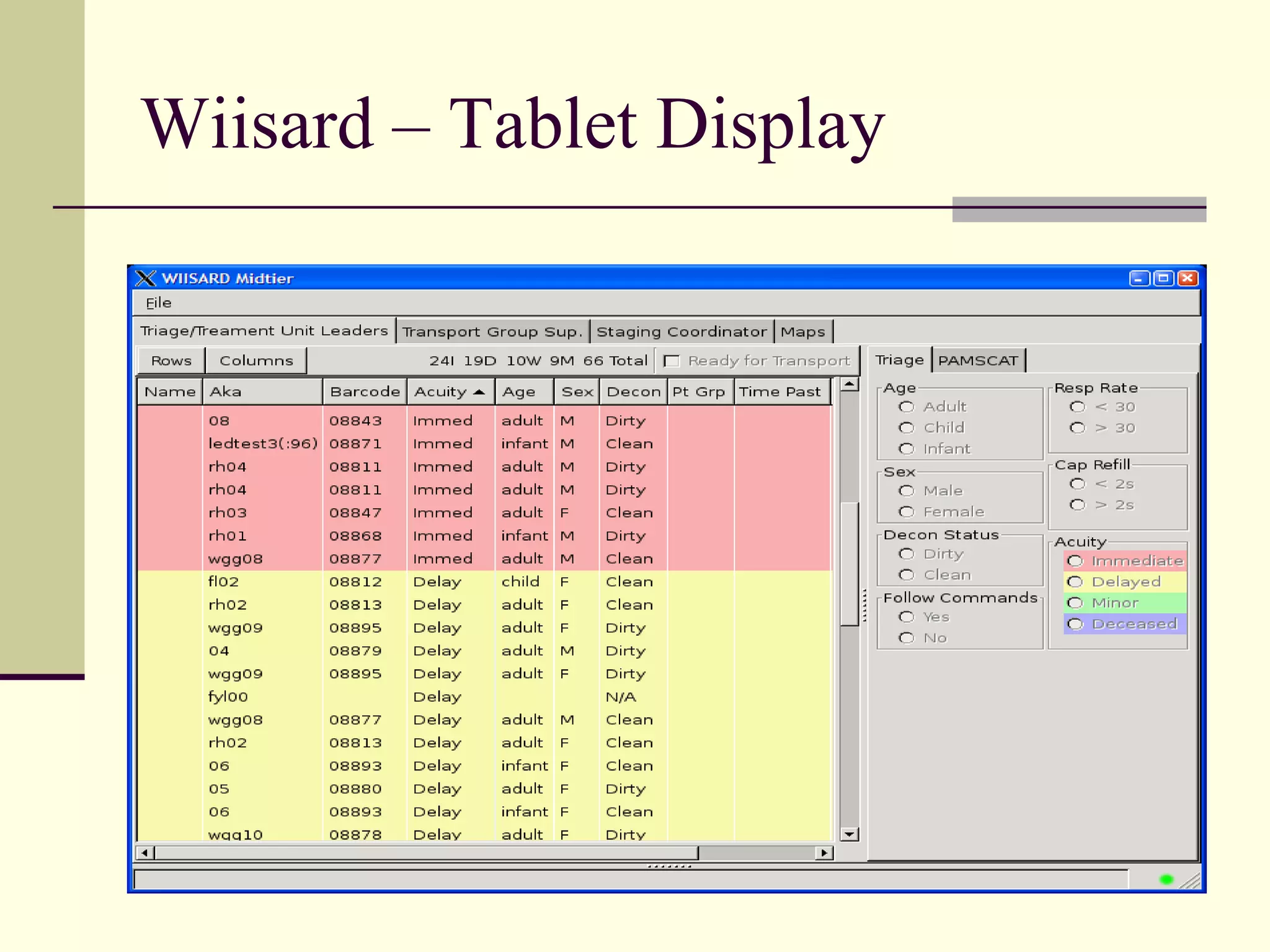

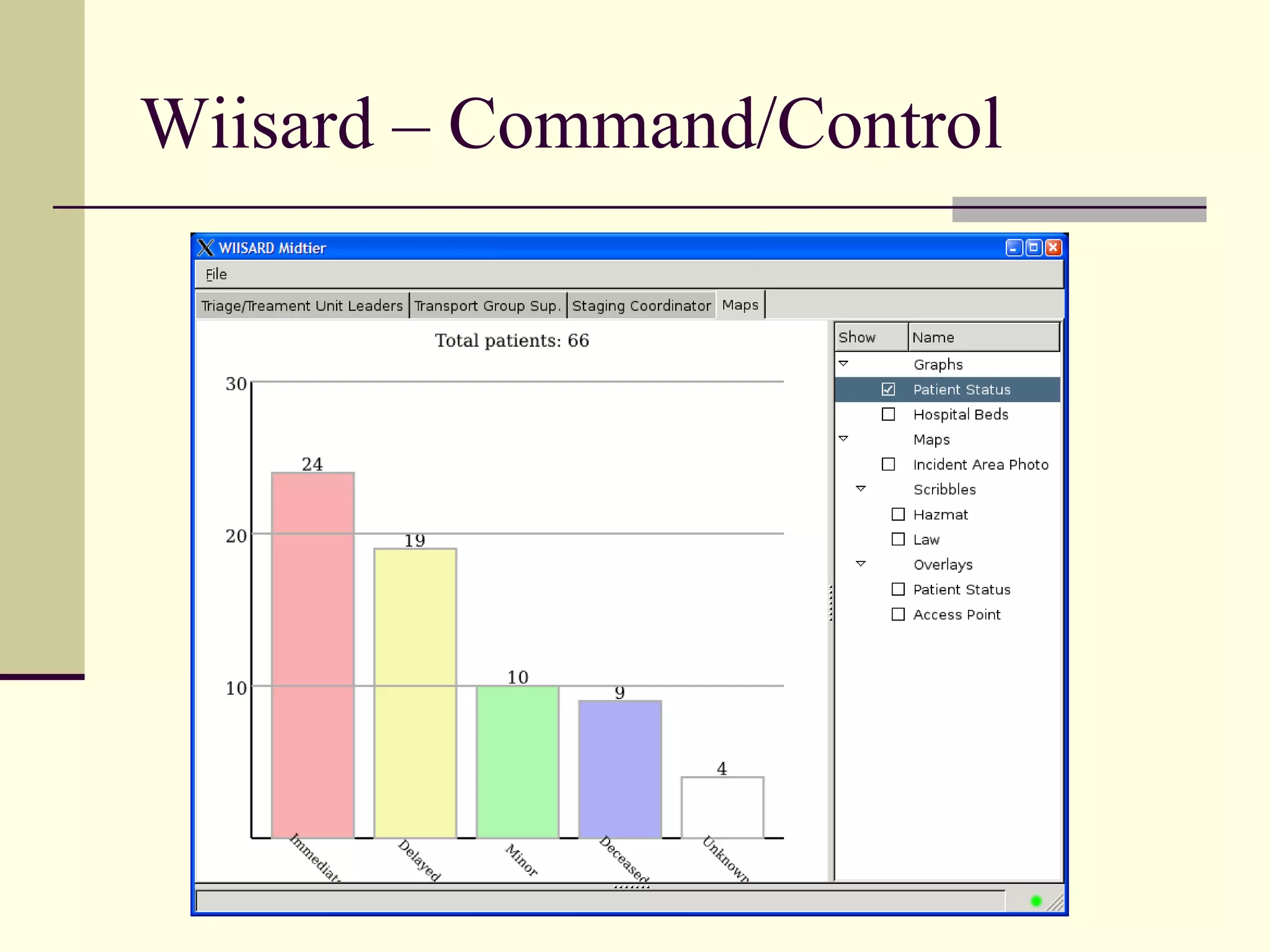

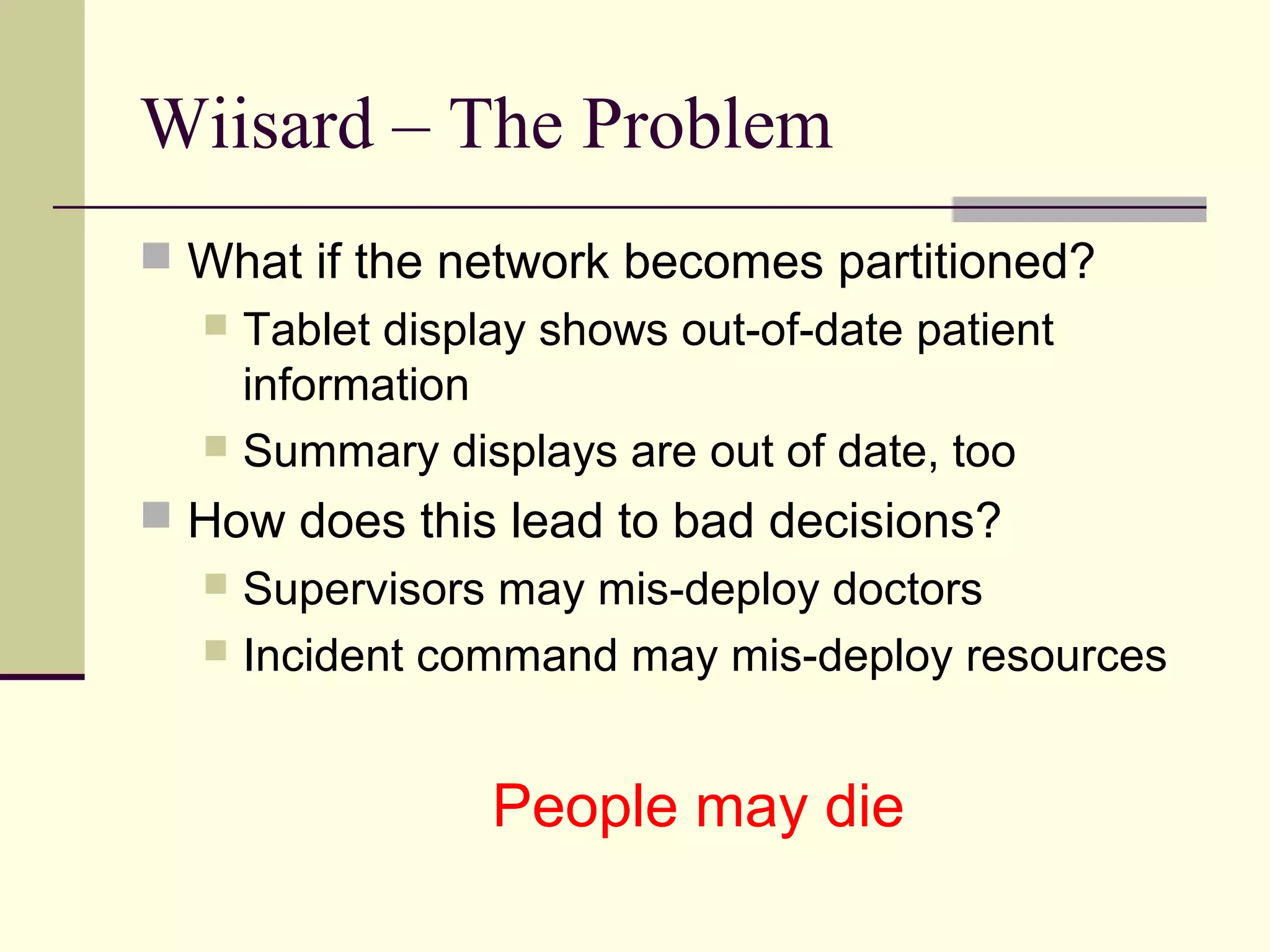

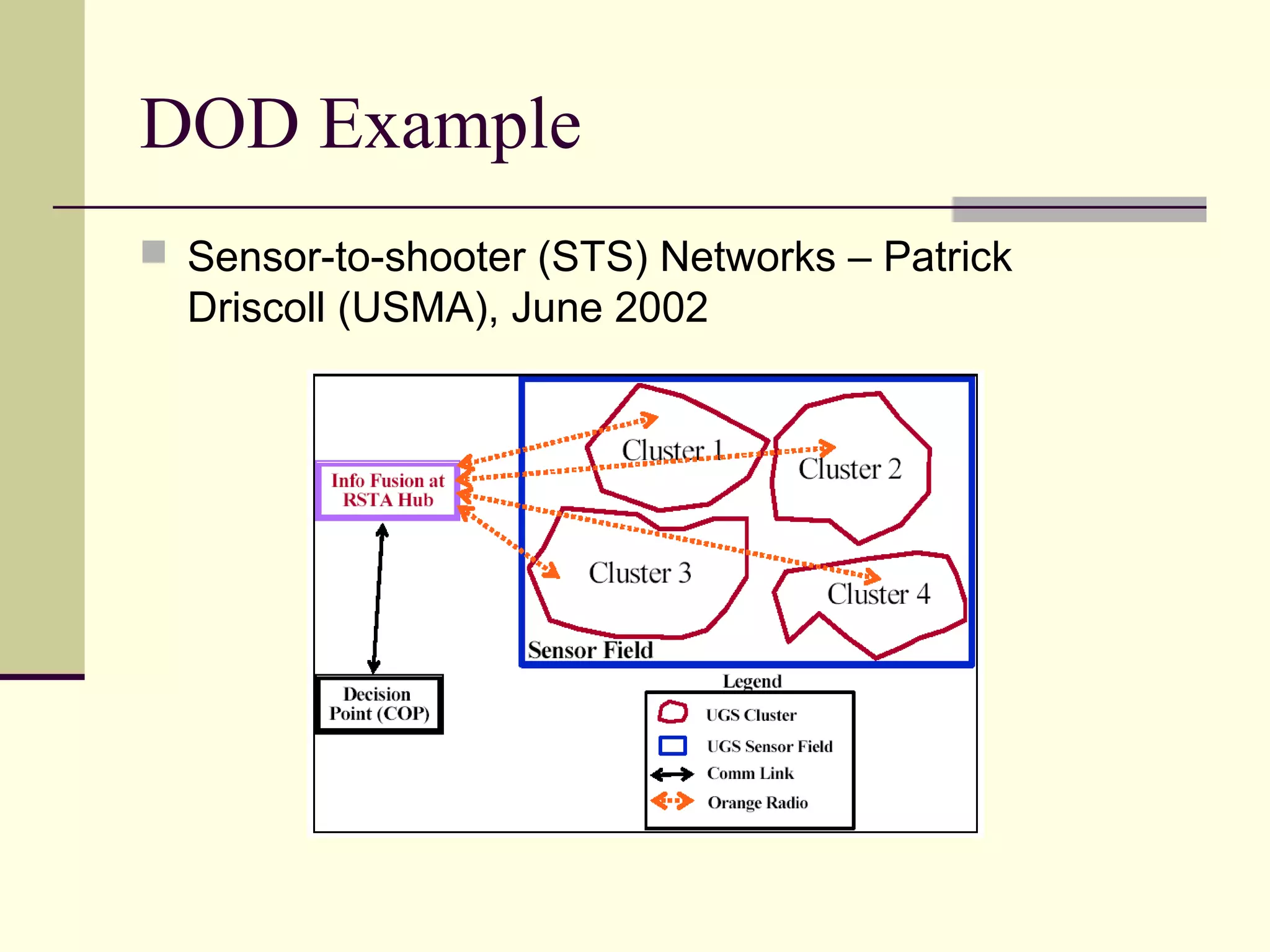

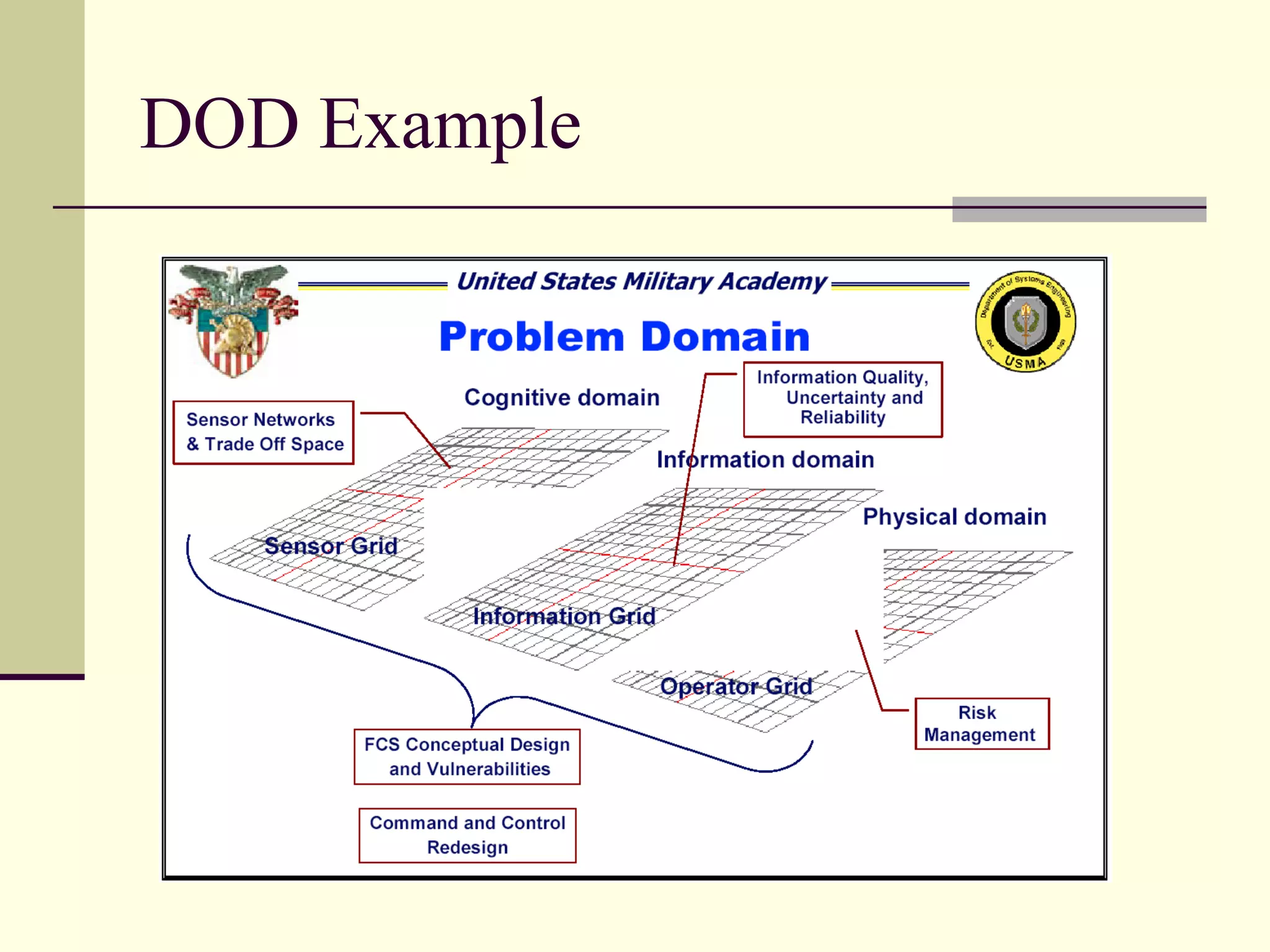

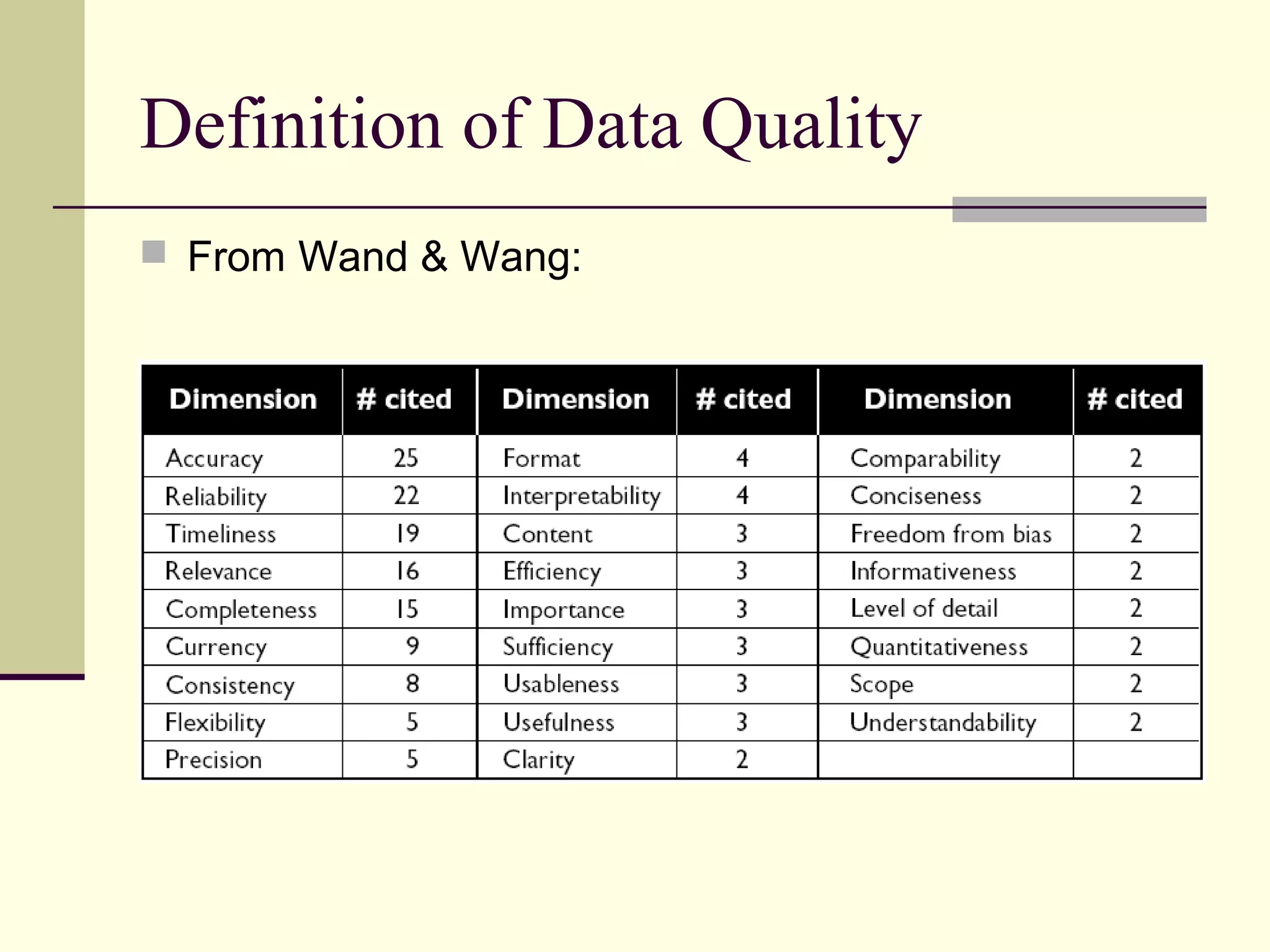

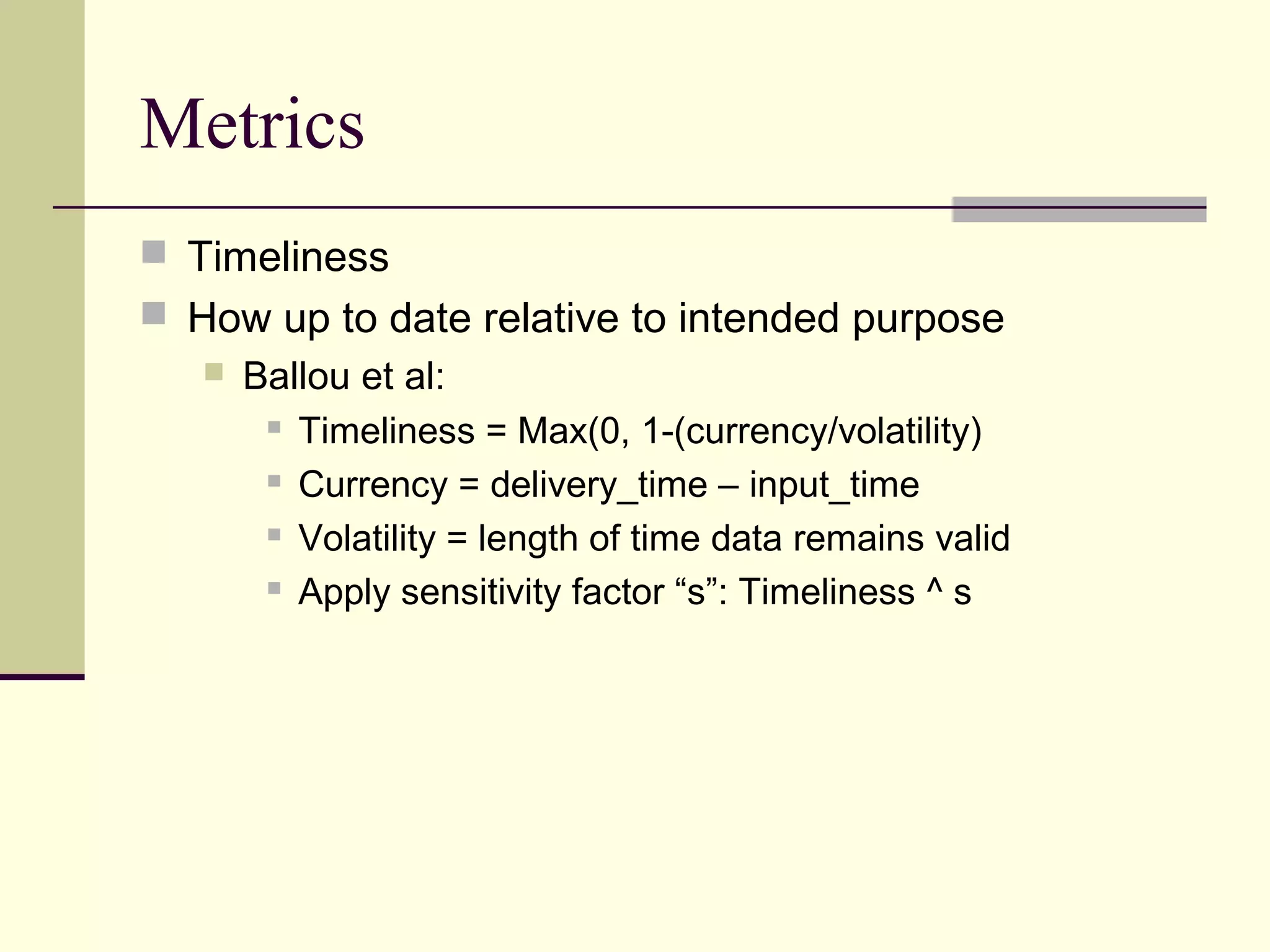

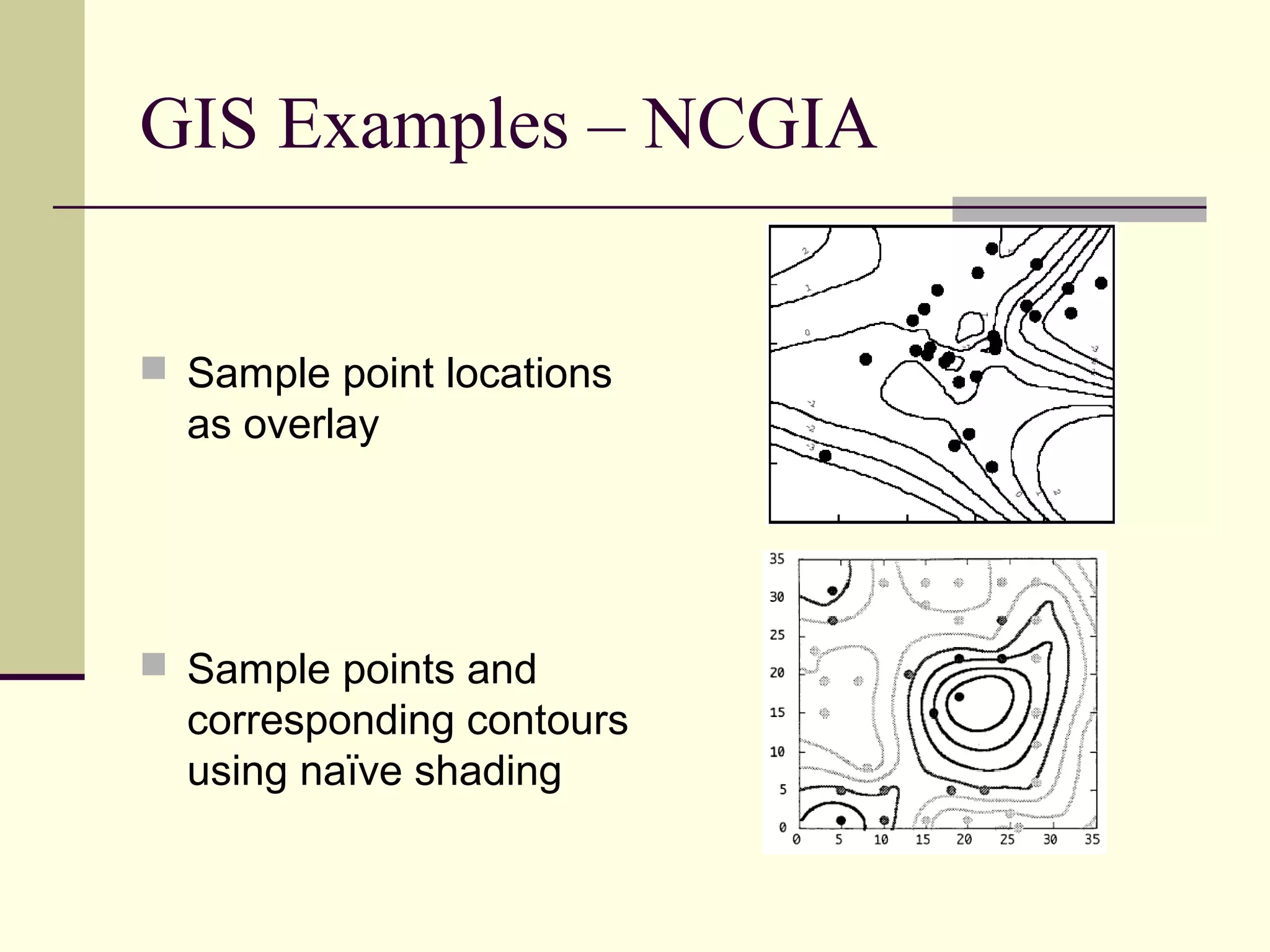

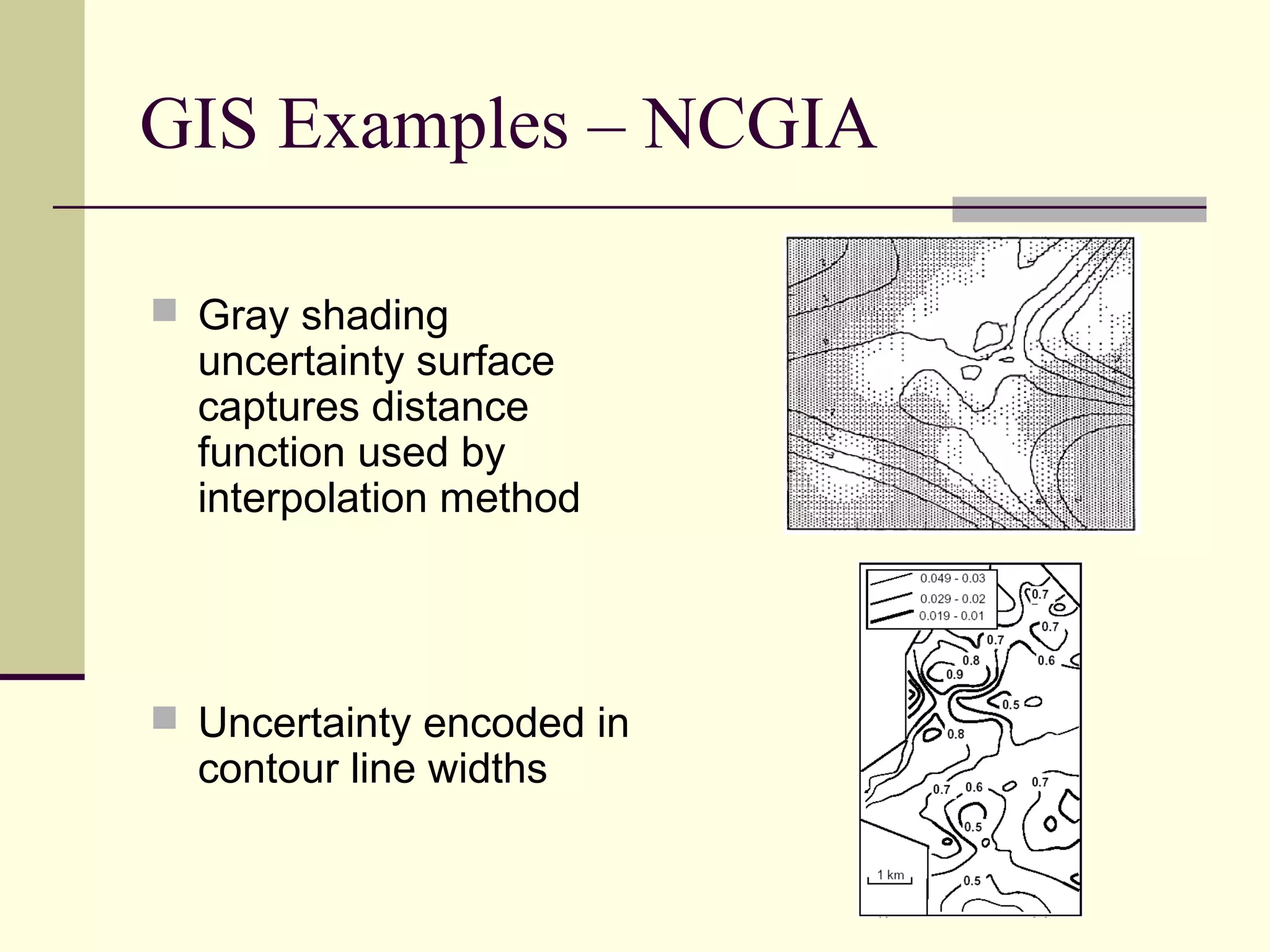

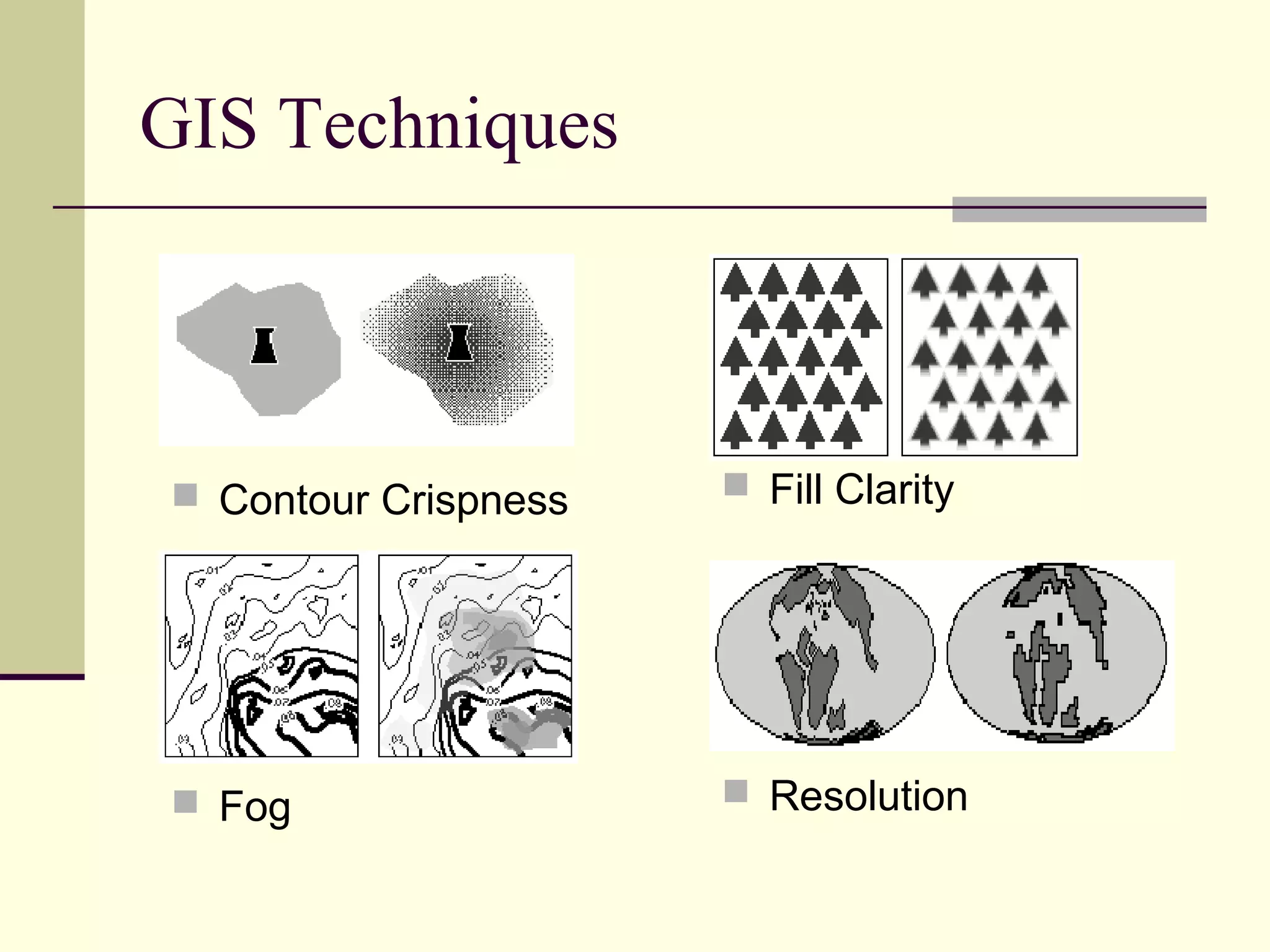

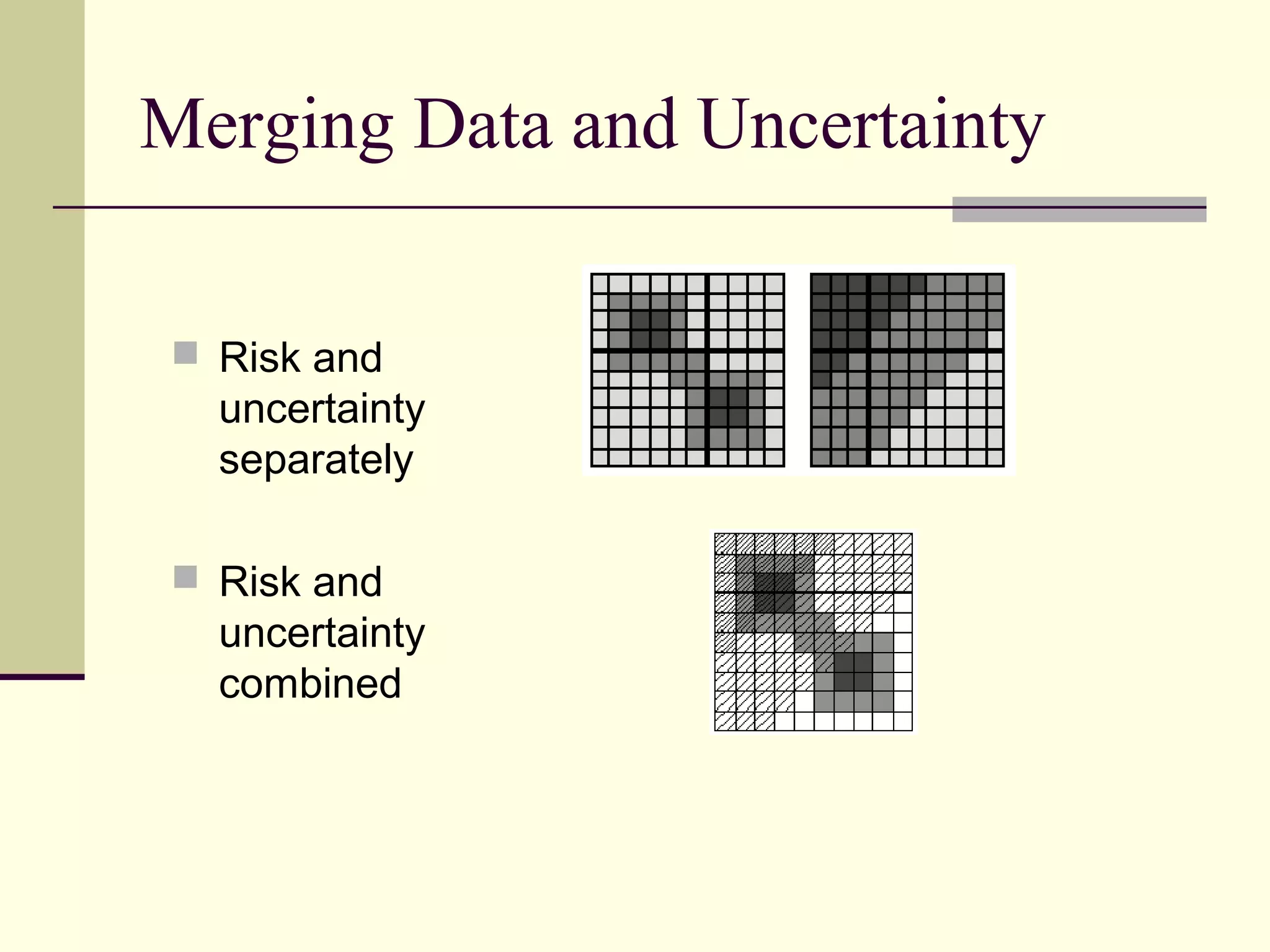

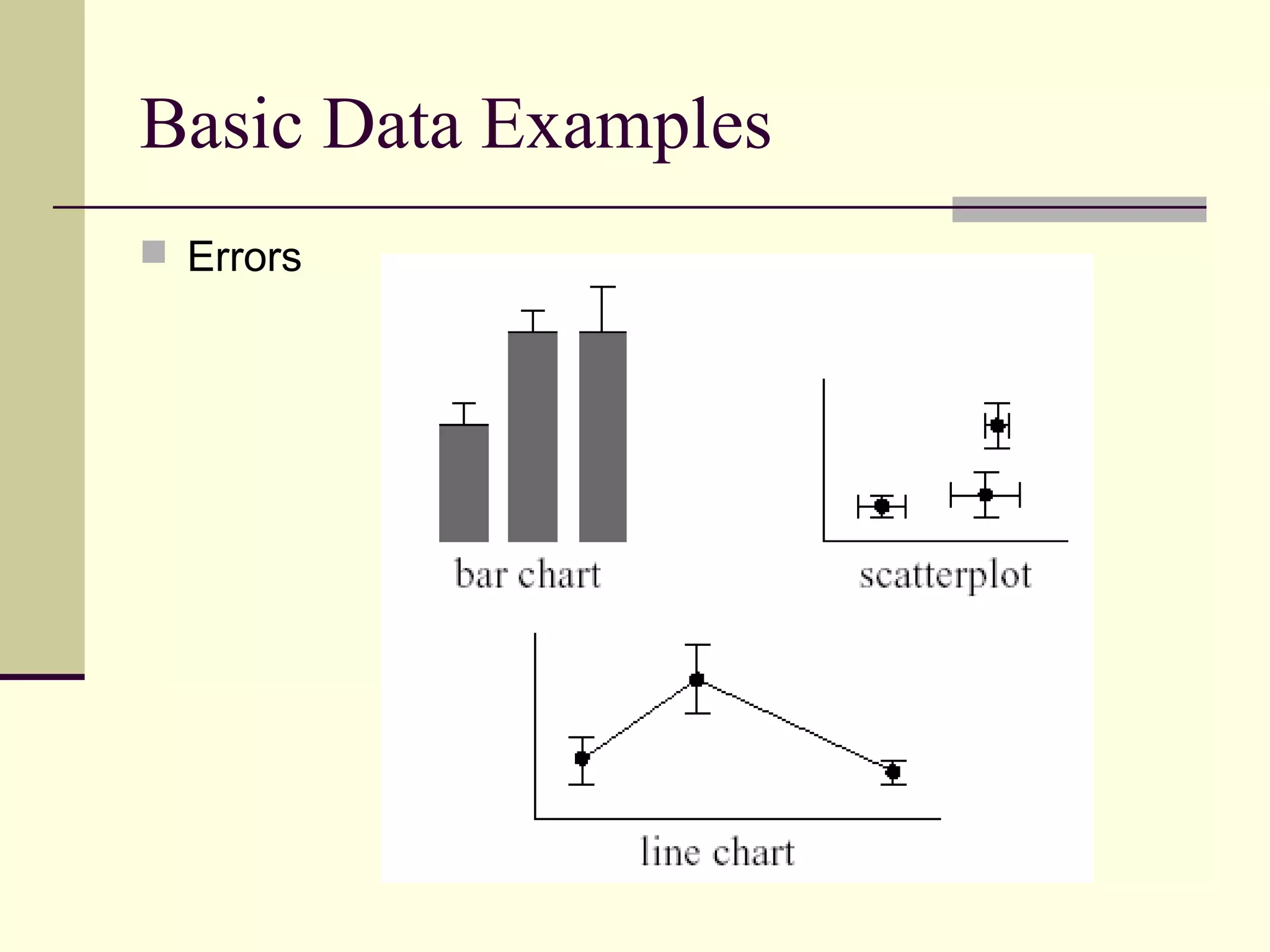

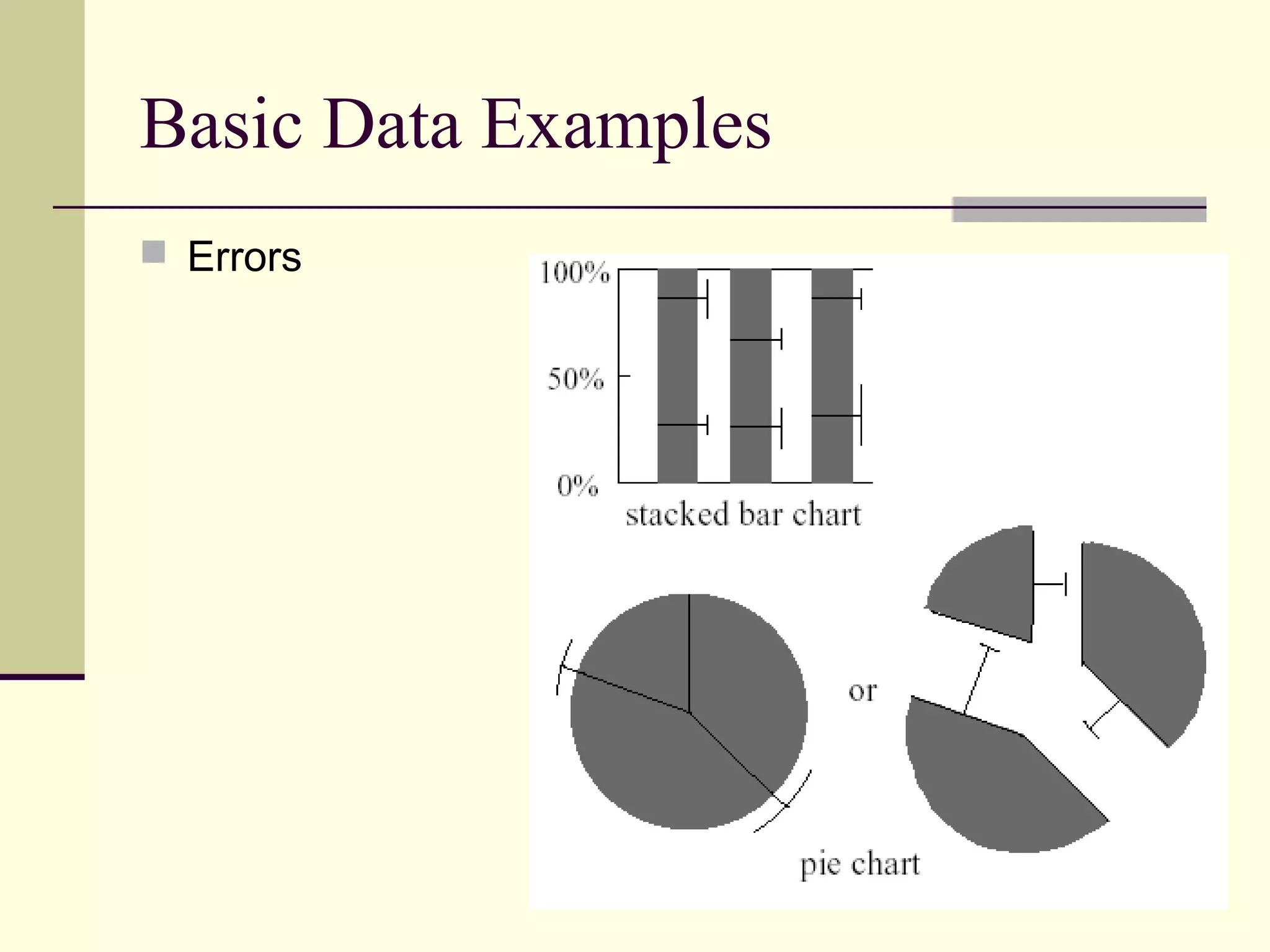

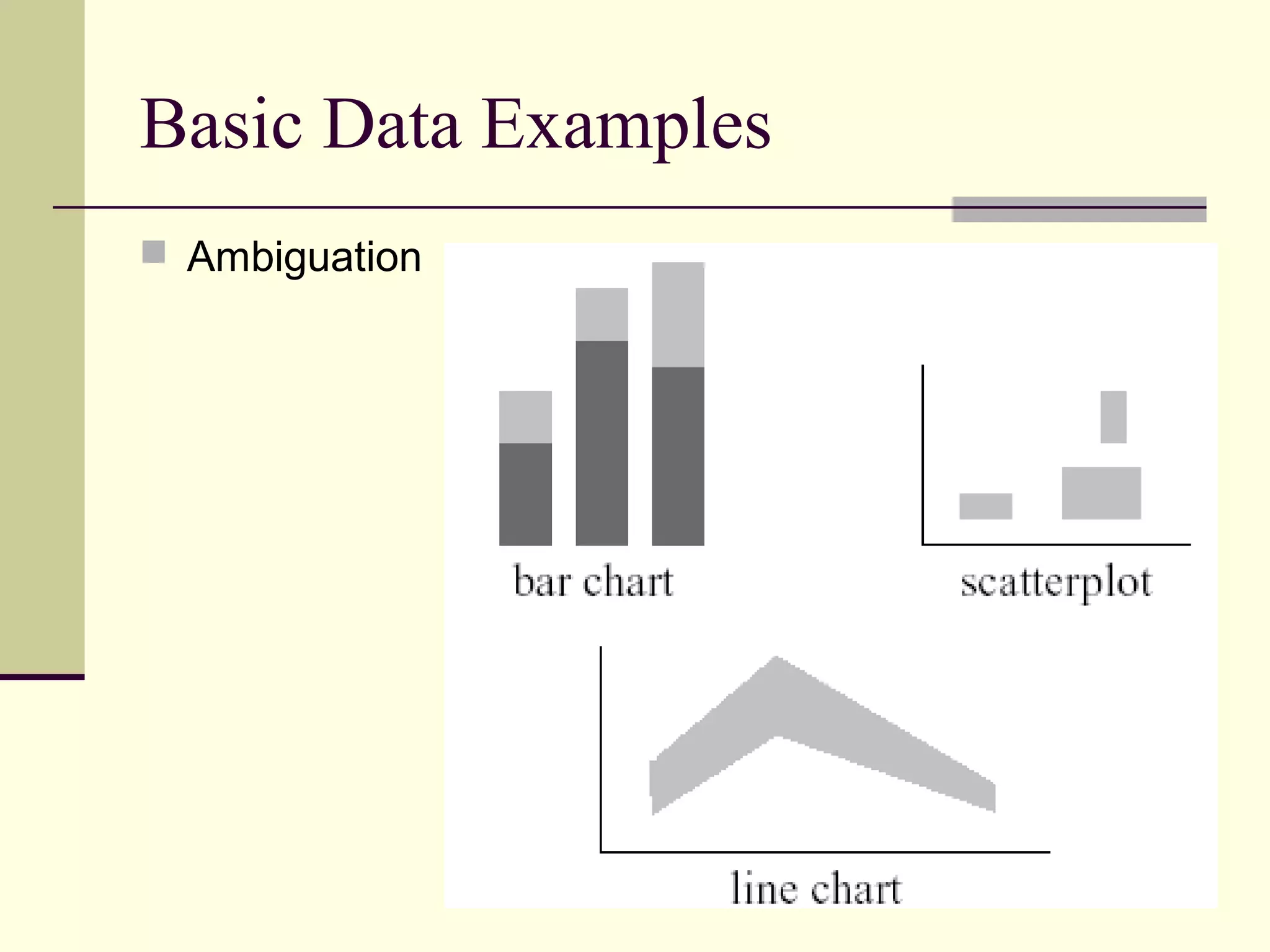

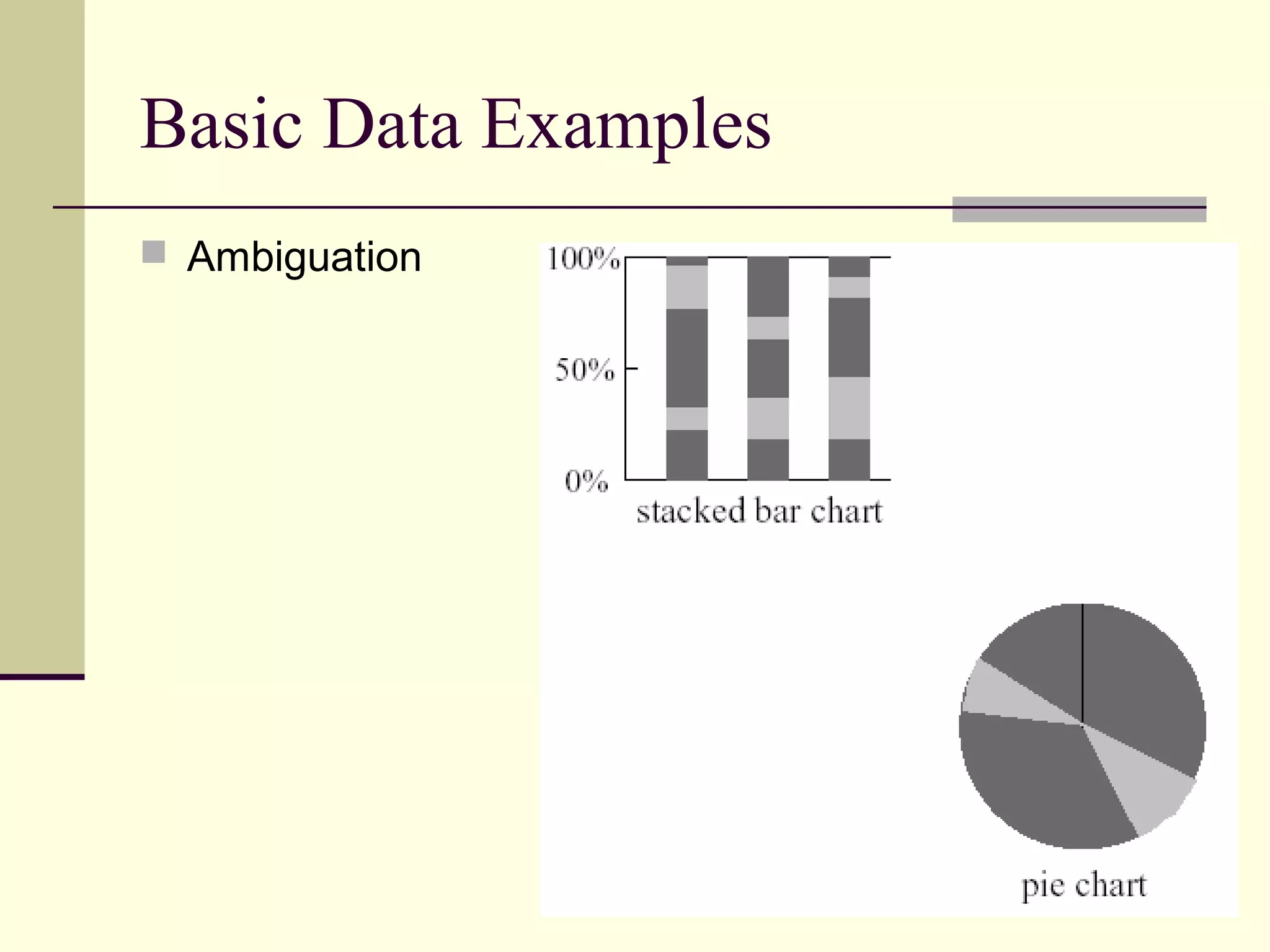

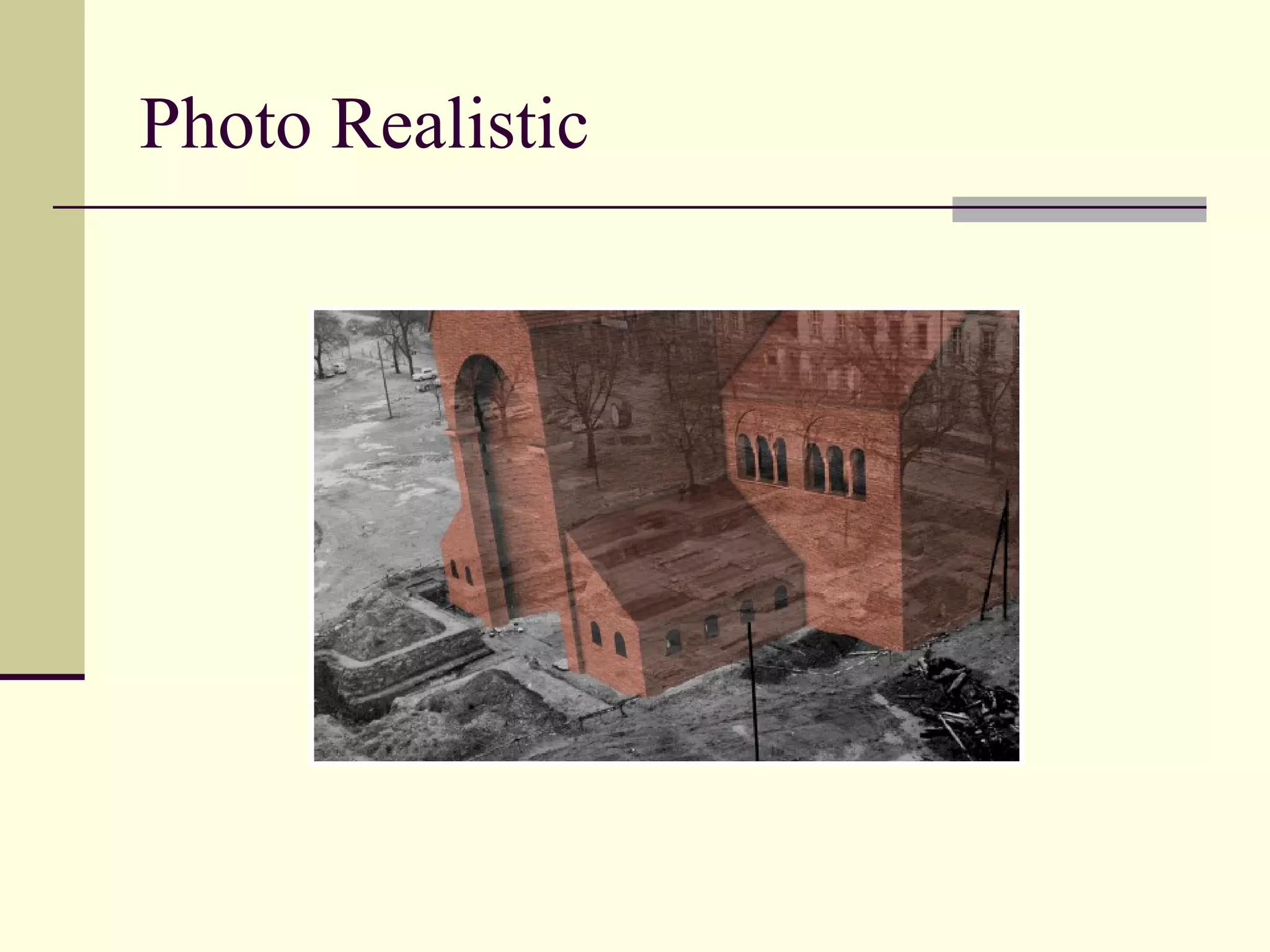

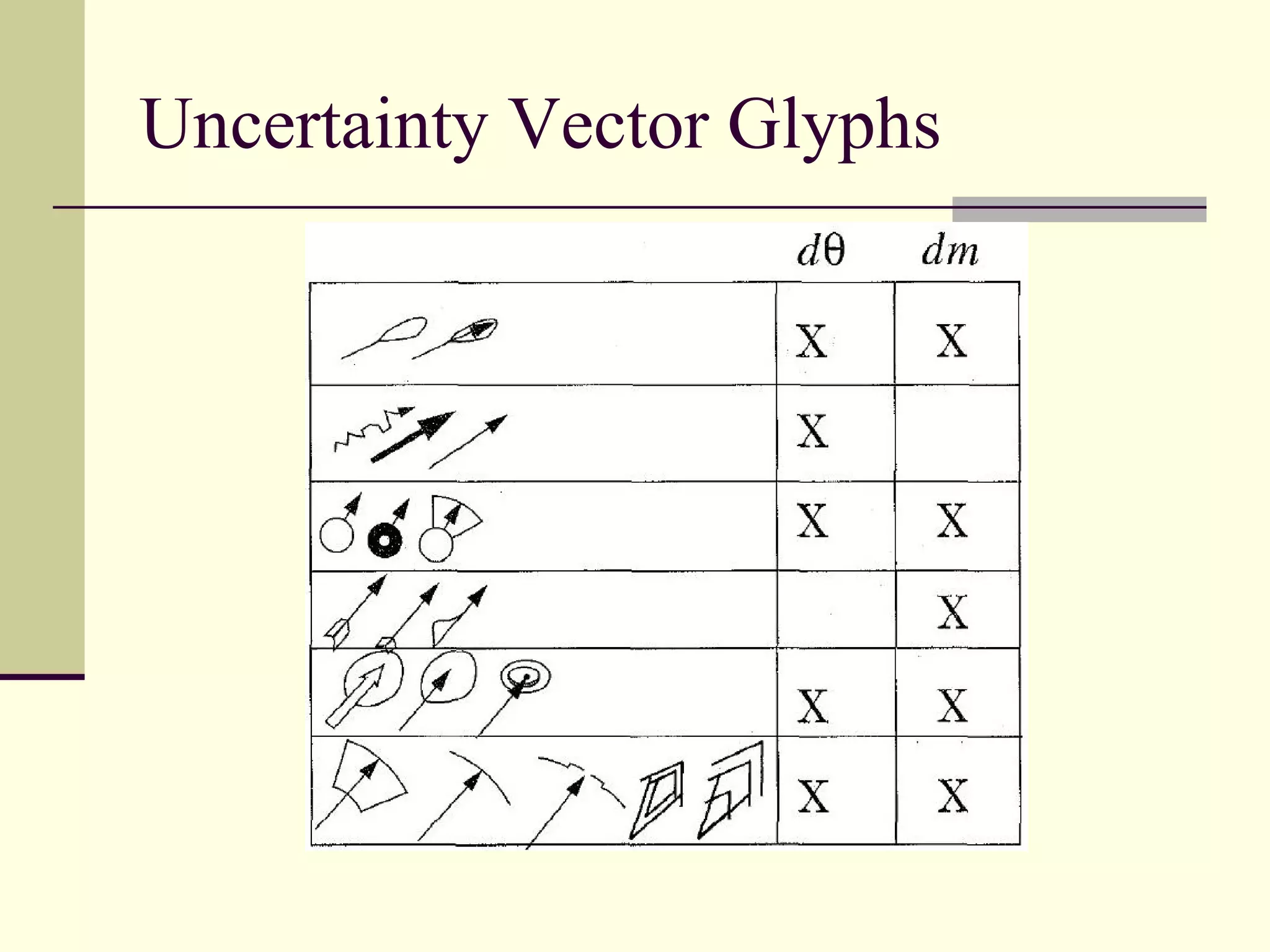

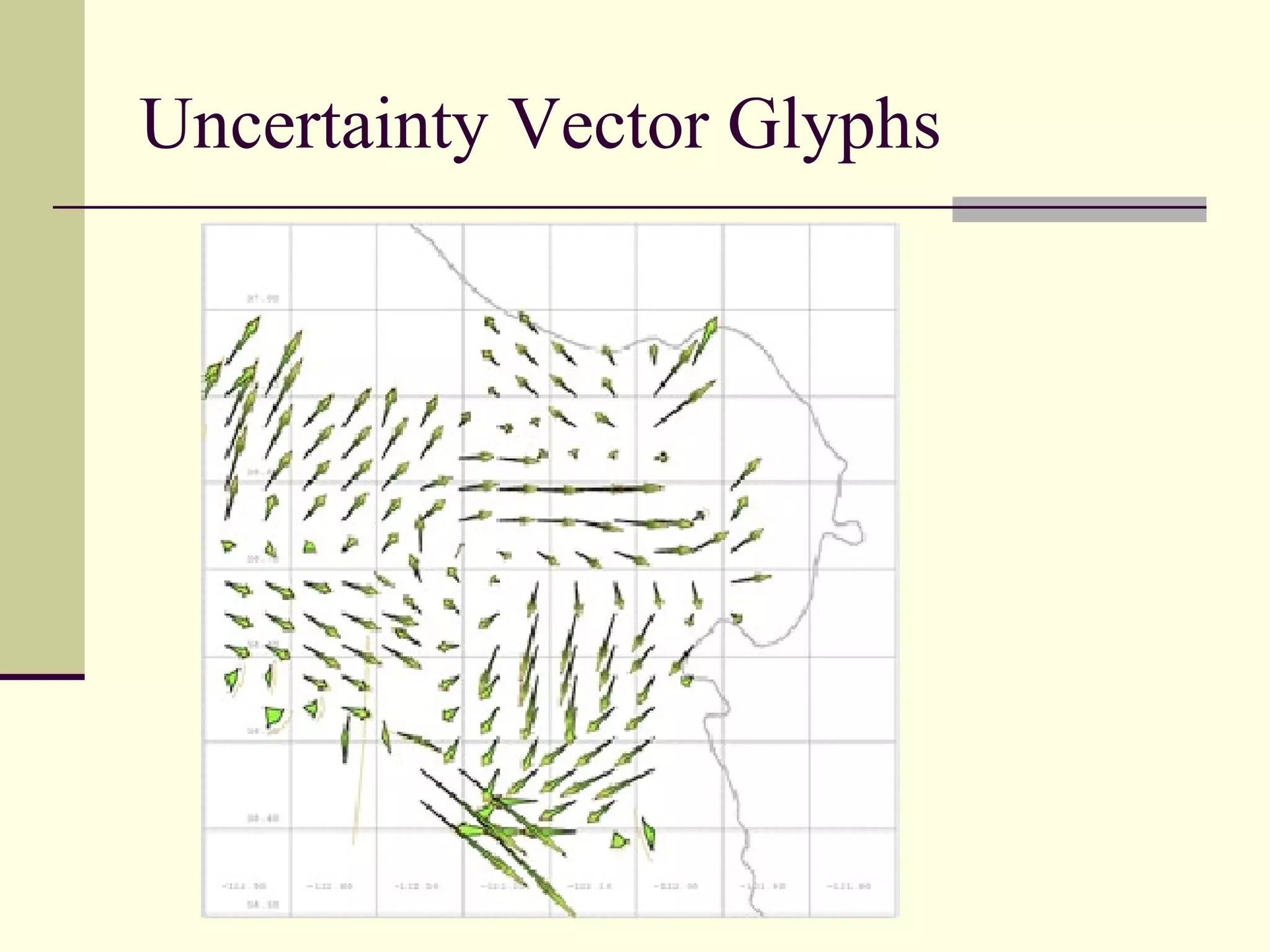

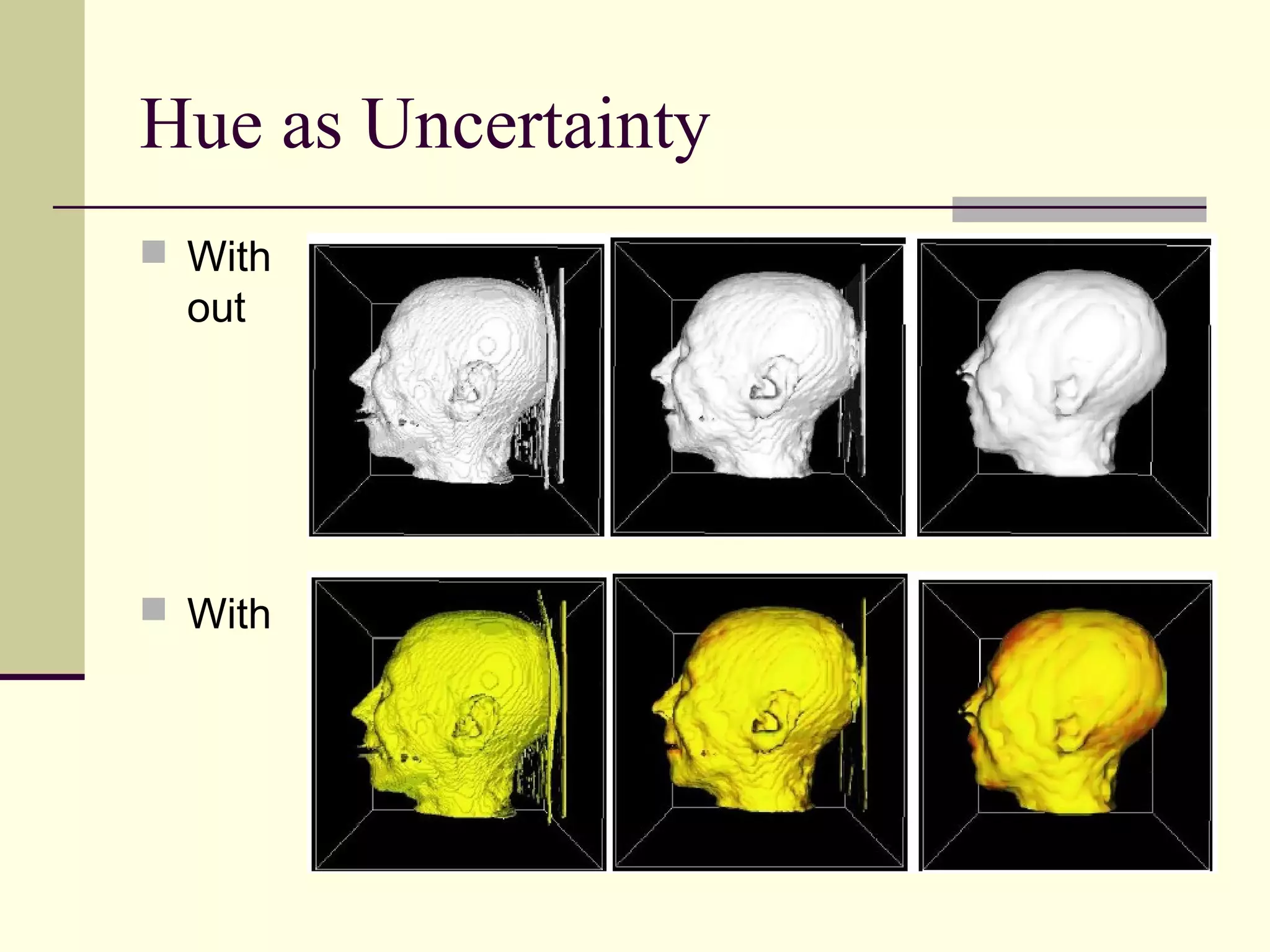

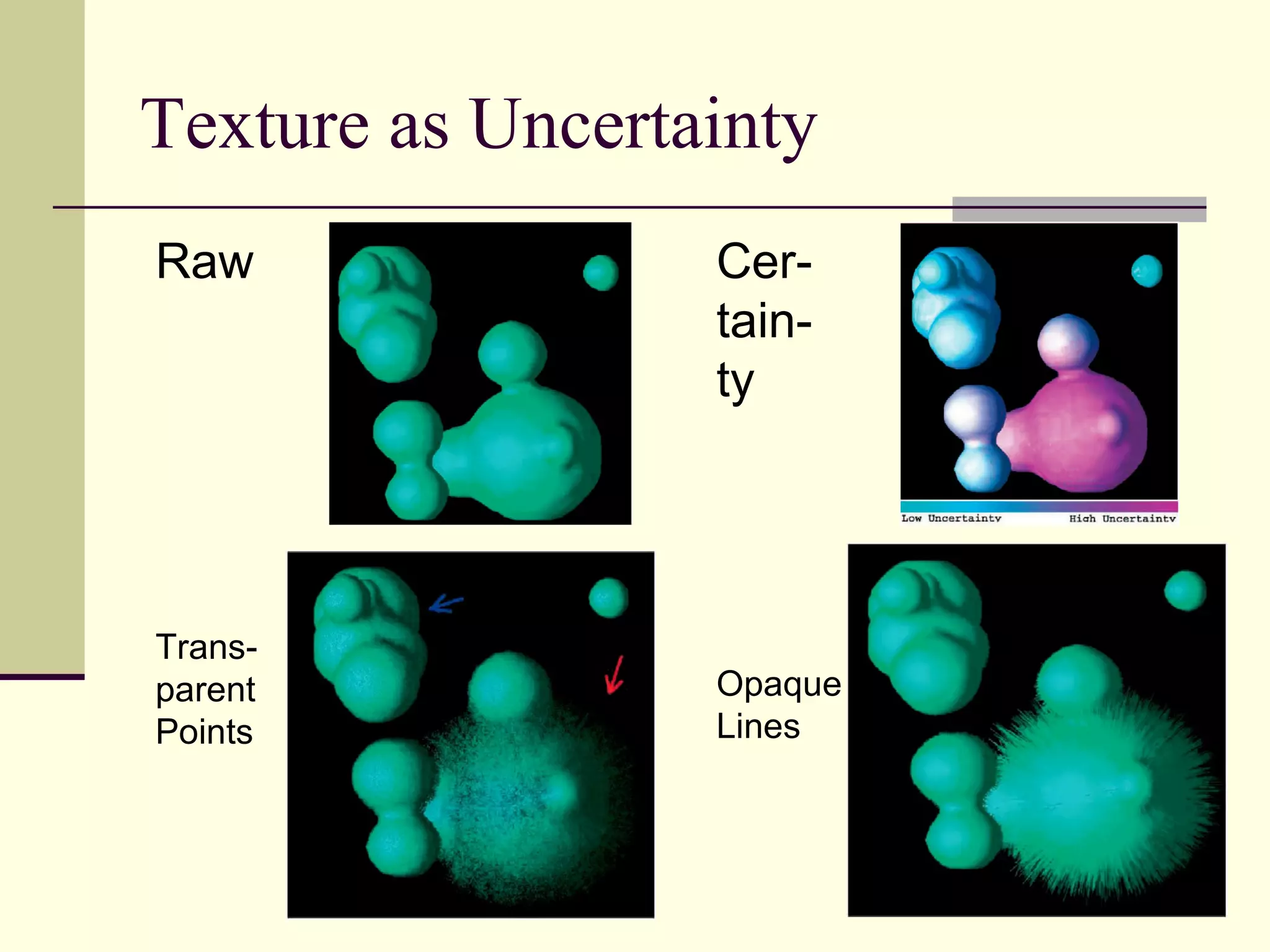

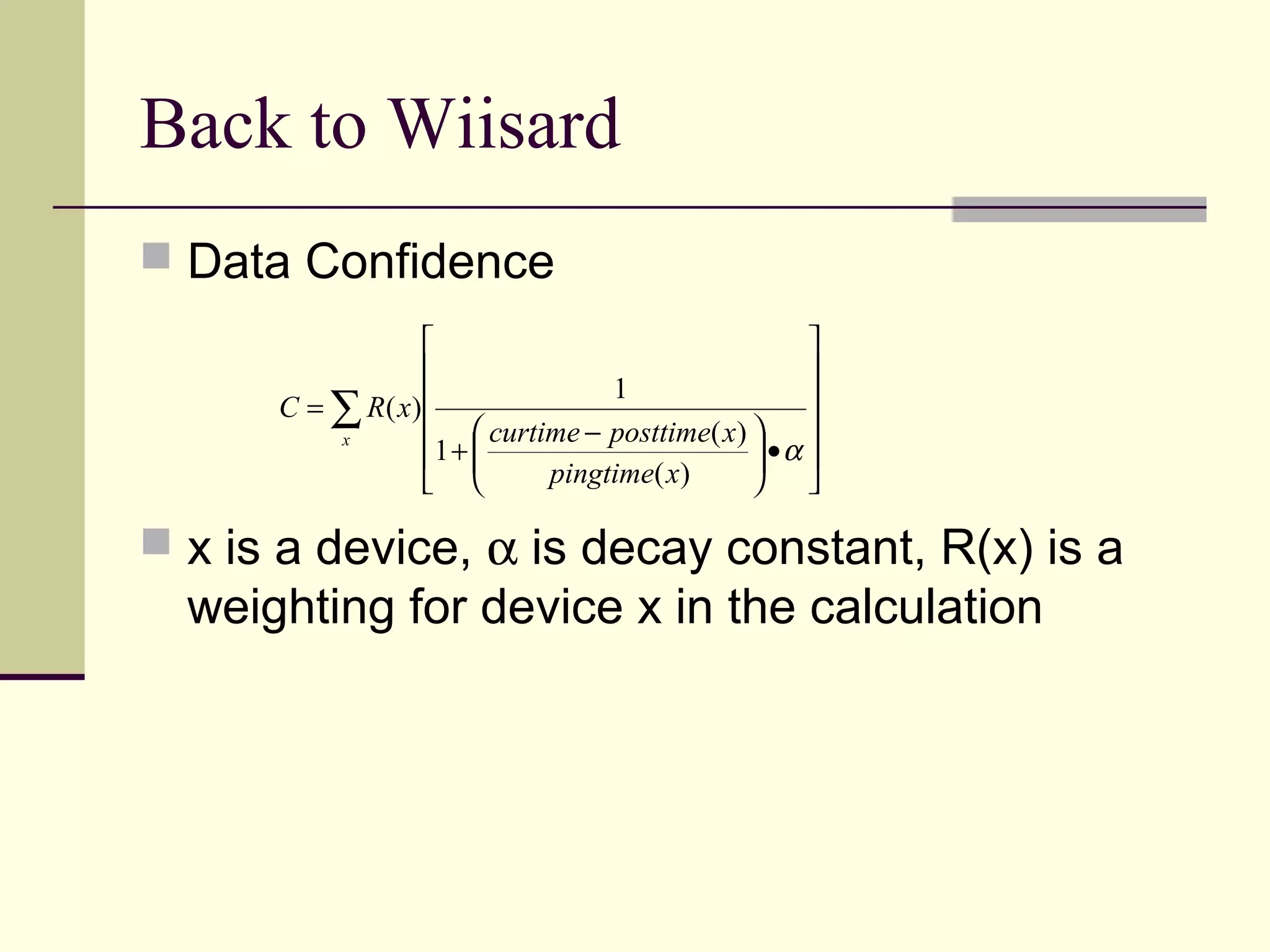

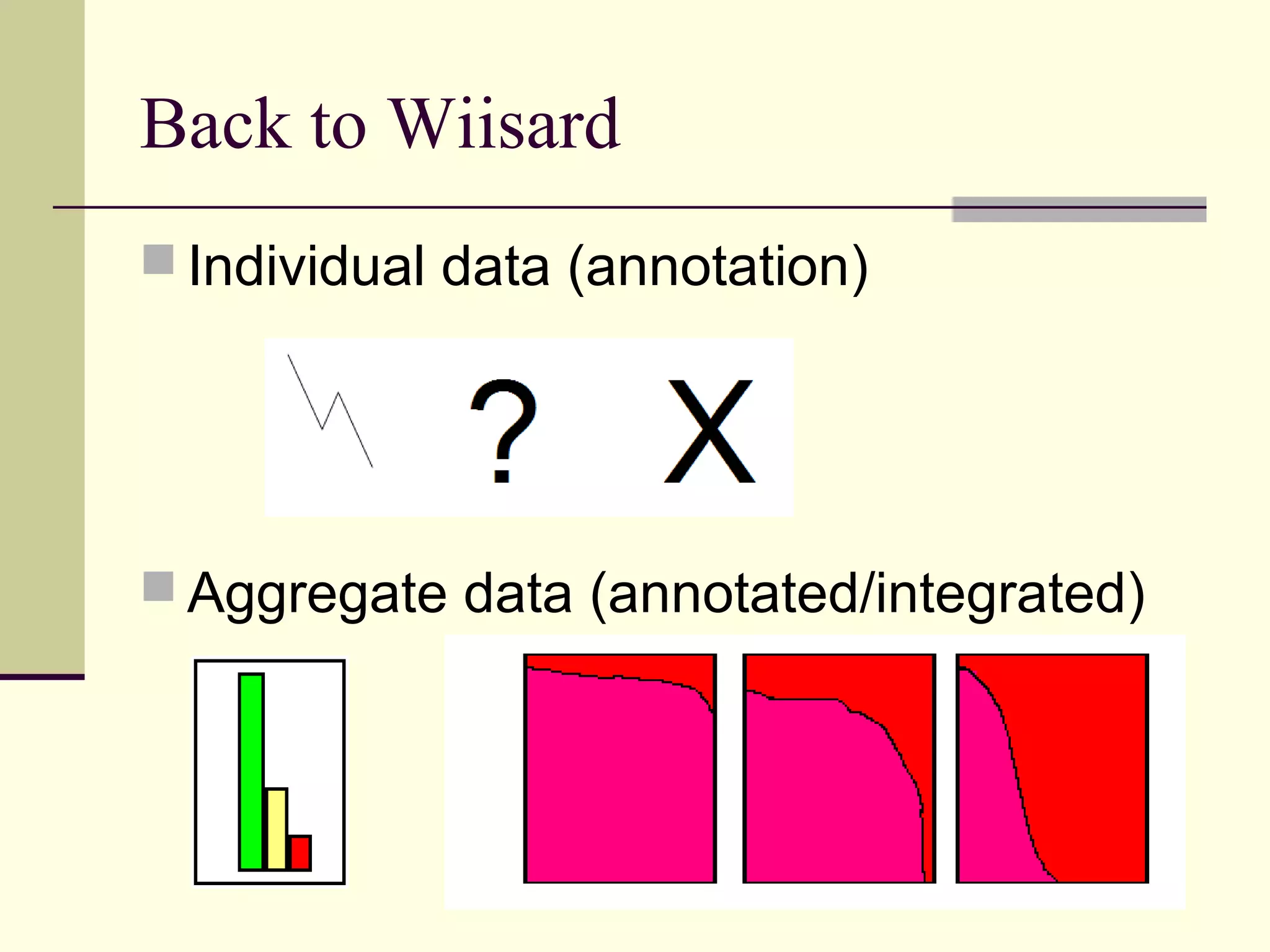

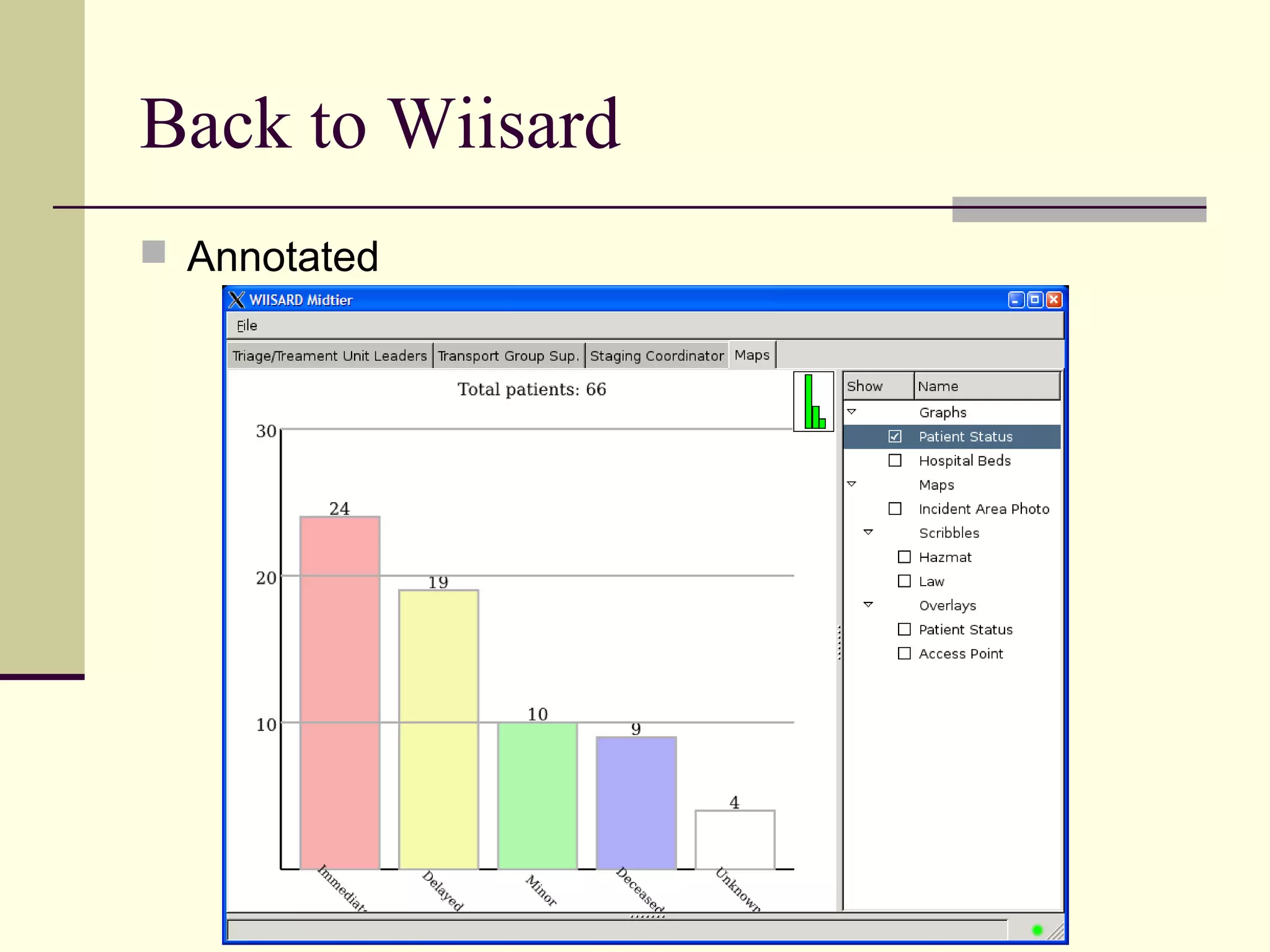

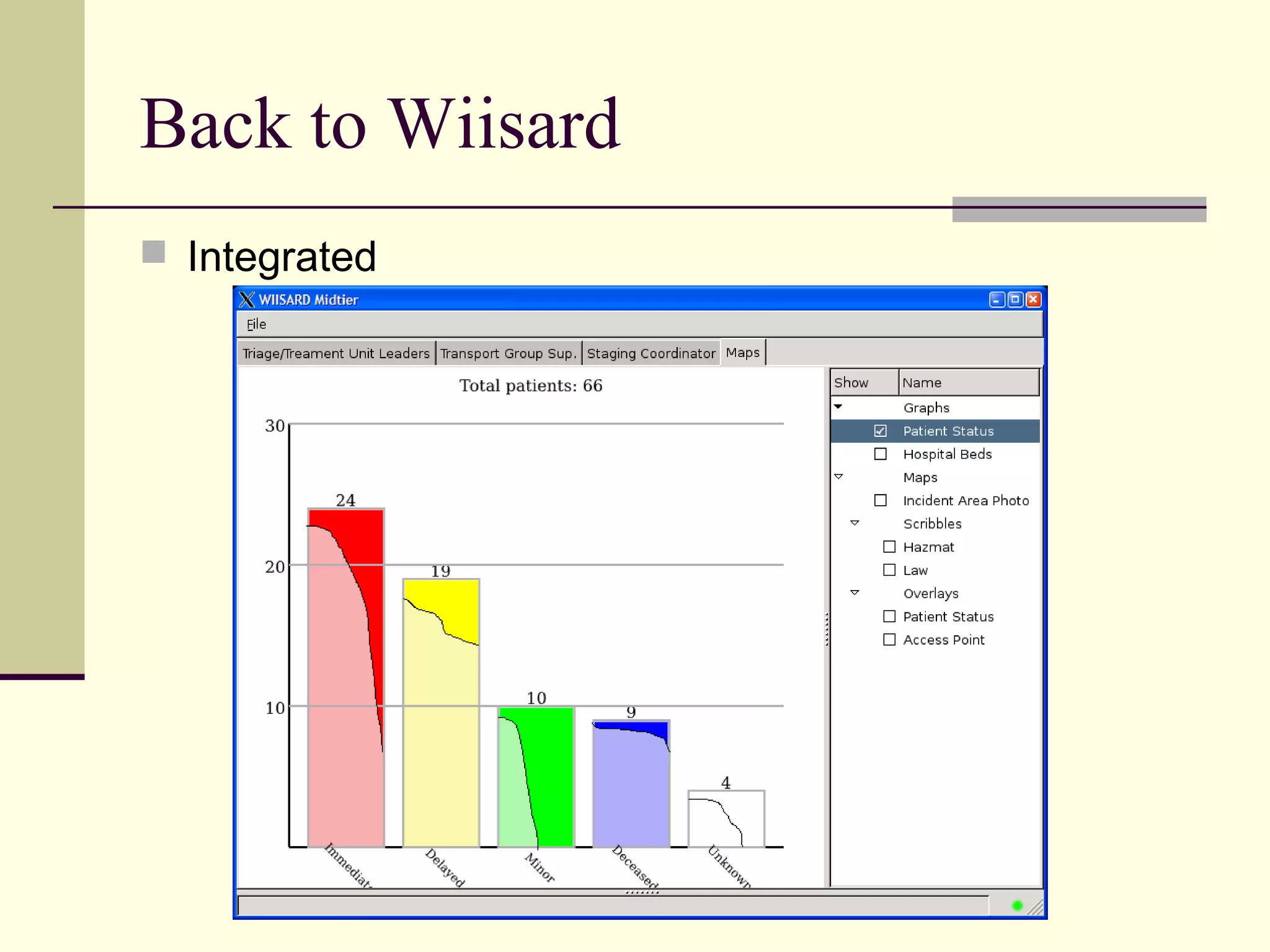

This document discusses visualizing data quality and uncertainty in the context of the Wiisard project. Wiisard is a joint project between the VA and UC San Diego that supports mass-casualty triage using PDAs and tablet PCs. The core problem is that if the wireless network becomes partitioned, the patient information shown on a device may become outdated, which can lead to poor decisions about deploying medical resources. The document surveys research on visualizing uncertainty in data so that people can make better judgments with imperfect information. It gives examples of encoding uncertainty with techniques such as contour width, transparency, and color hue. It closes with open research questions: which data quality metrics are relevant, and how to design visualizations suited to different users and contexts.
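As a minimal sketch of the transparency technique, assume that each patient record carries the time elapsed since its last confirmed update. That staleness can then be mapped to opacity so outdated entries visibly fade. The `PatientRecord` fields, the `FRESH_SECONDS`/`EXPIRED_SECONDS` thresholds, `MIN_ALPHA`, and the triage colors are hypothetical illustrations, not values taken from Wiisard.

```python
from dataclasses import dataclass

# Hypothetical thresholds (not from Wiisard): data younger than FRESH_SECONDS
# is drawn fully opaque; data older than EXPIRED_SECONDS is drawn at MIN_ALPHA.
FRESH_SECONDS = 60.0
EXPIRED_SECONDS = 600.0
MIN_ALPHA = 0.2

# Hypothetical base colors for triage levels, as (r, g, b) in [0, 1].
BASE_RGB = {
    "red": (1.0, 0.0, 0.0),
    "yellow": (1.0, 1.0, 0.0),
    "green": (0.0, 0.8, 0.0),
}


@dataclass
class PatientRecord:
    patient_id: str
    triage_level: str   # key into BASE_RGB
    age_seconds: float  # time since the last confirmed update


def staleness(age_seconds: float) -> float:
    """Return 0.0 for fresh data, 1.0 for expired data, linear in between."""
    if age_seconds <= FRESH_SECONDS:
        return 0.0
    if age_seconds >= EXPIRED_SECONDS:
        return 1.0
    return (age_seconds - FRESH_SECONDS) / (EXPIRED_SECONDS - FRESH_SECONDS)


def alpha_for(record: PatientRecord) -> float:
    """Fade opacity from 1.0 down to MIN_ALPHA as the record goes stale."""
    return 1.0 - (1.0 - MIN_ALPHA) * staleness(record.age_seconds)


def rgba_for(record: PatientRecord) -> tuple:
    """Keep the triage hue, but encode data age in the alpha channel."""
    r, g, b = BASE_RGB[record.triage_level]
    return (r, g, b, alpha_for(record))
```

The alpha floor is a deliberate choice in this sketch: a stale record stays faintly visible rather than disappearing, since an old but real patient entry is still useful to a responder. Hue continues to carry the triage level, so transparency is free to encode data age alone.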