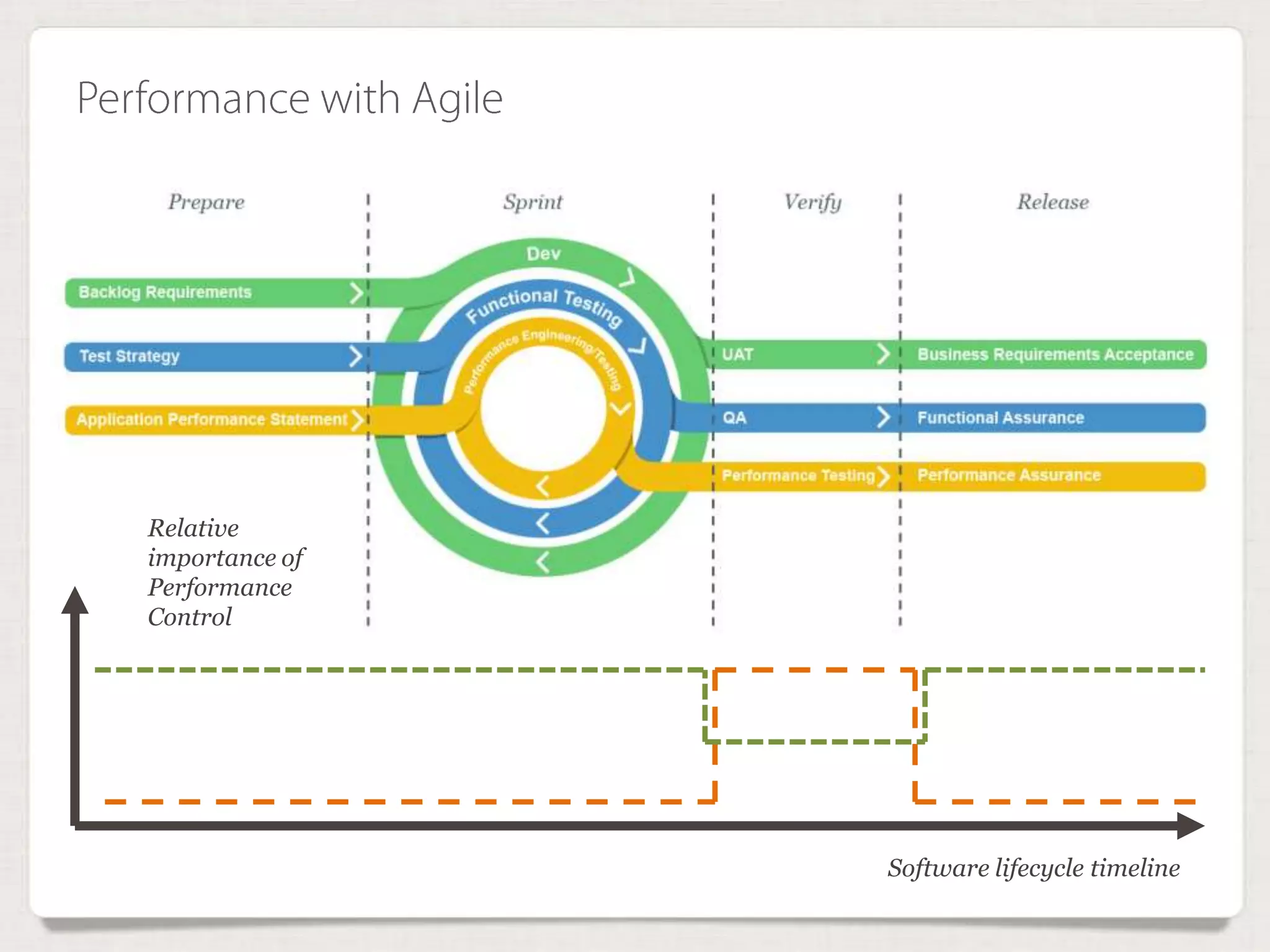

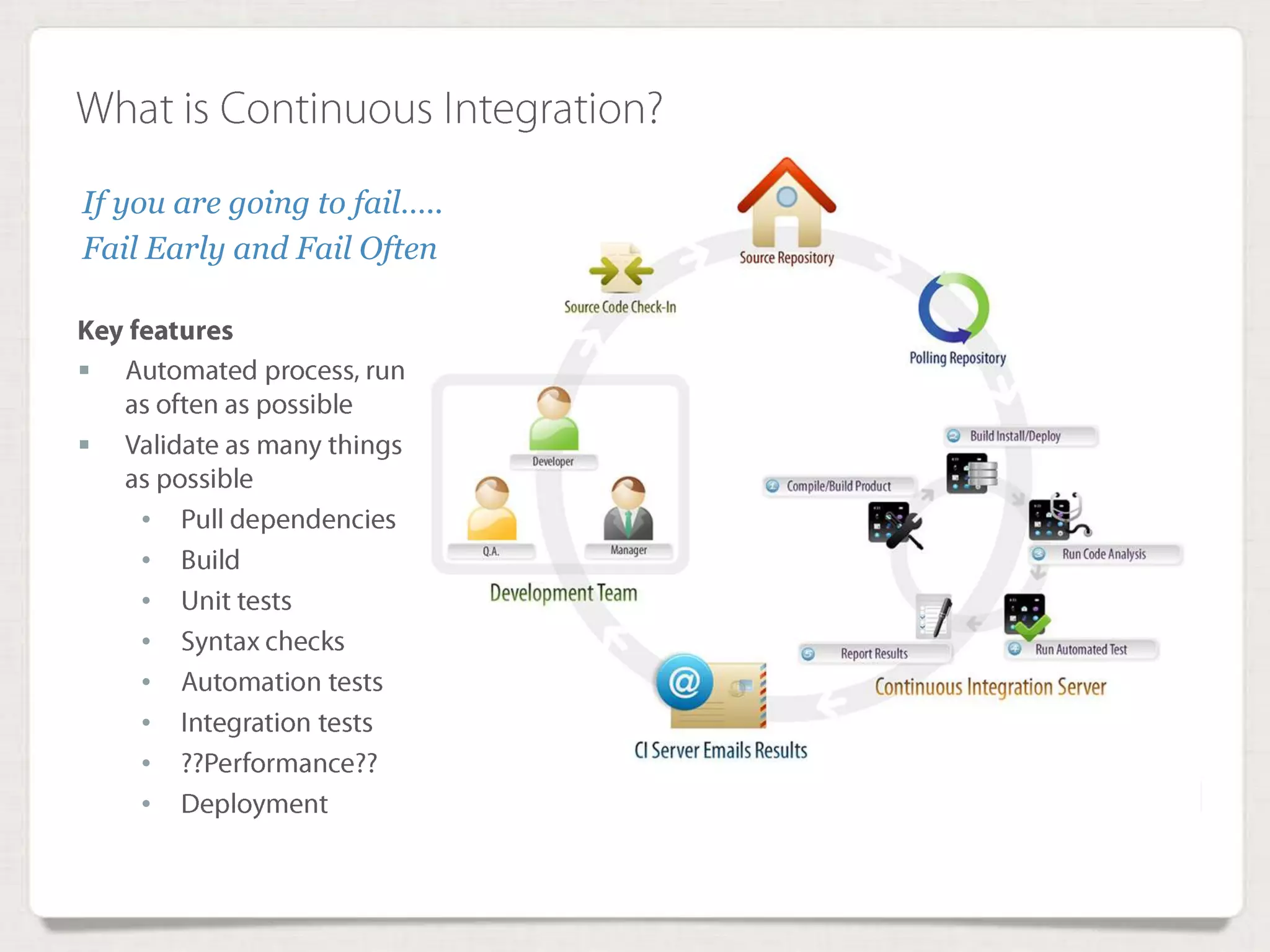

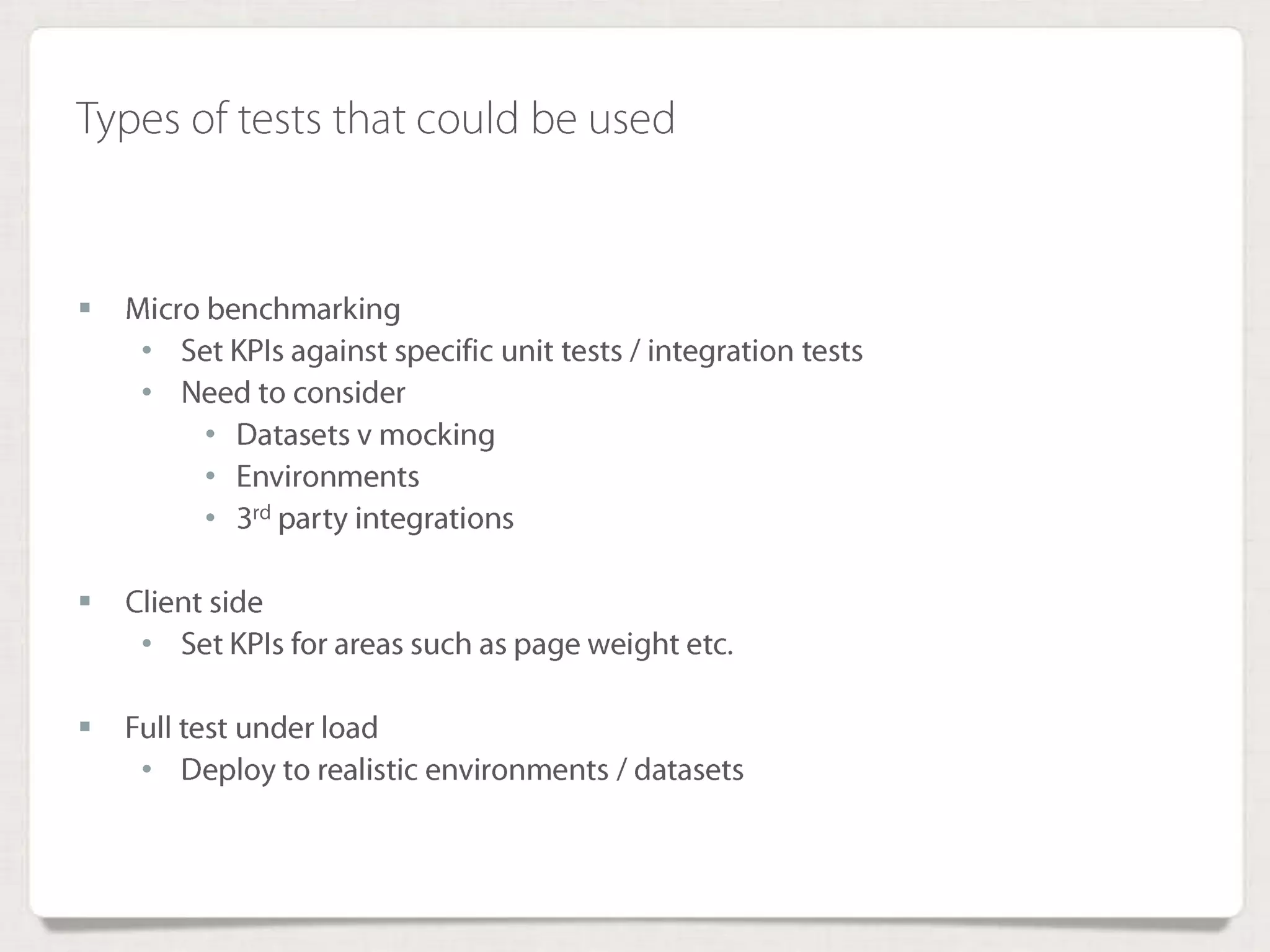

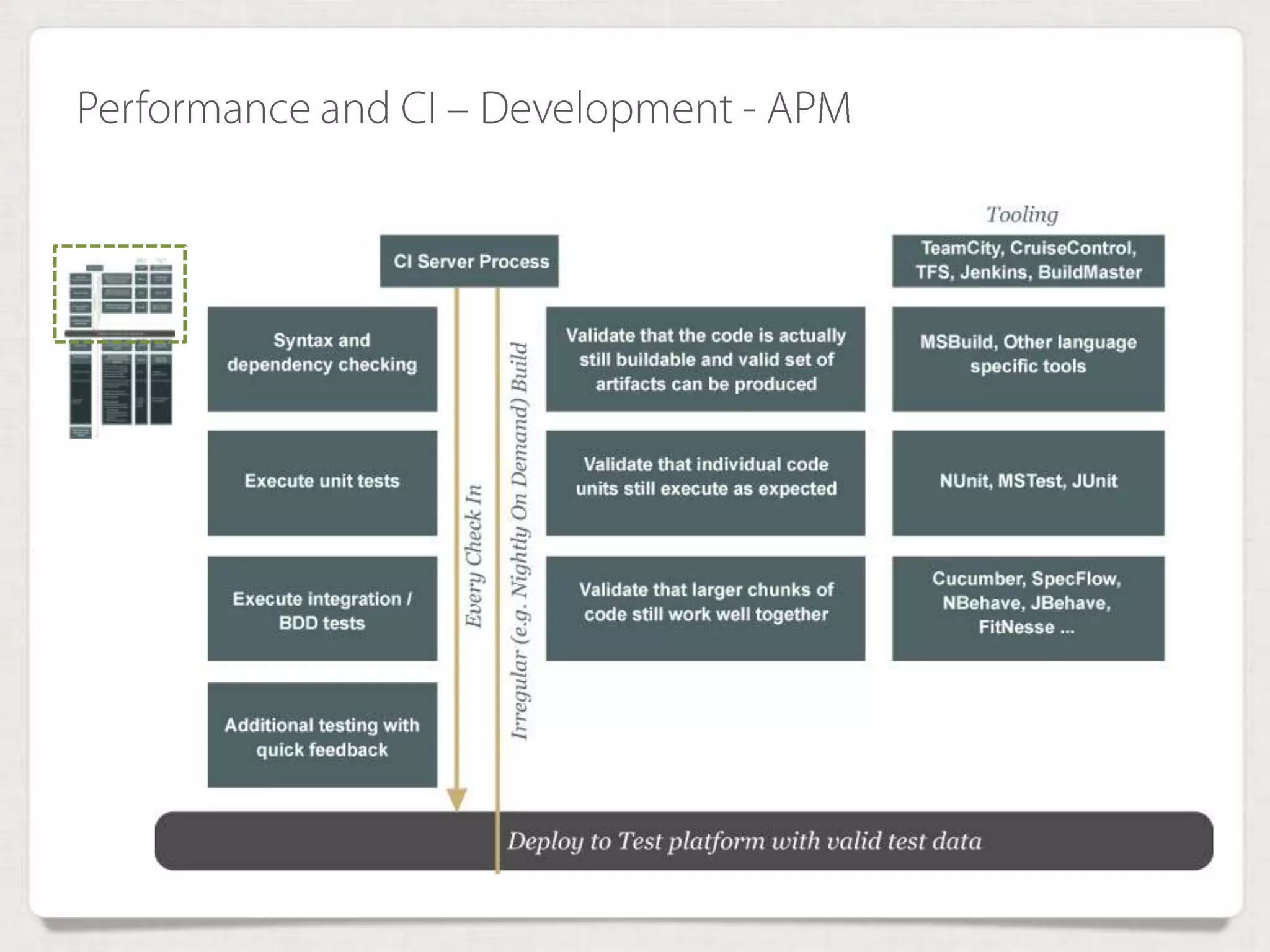

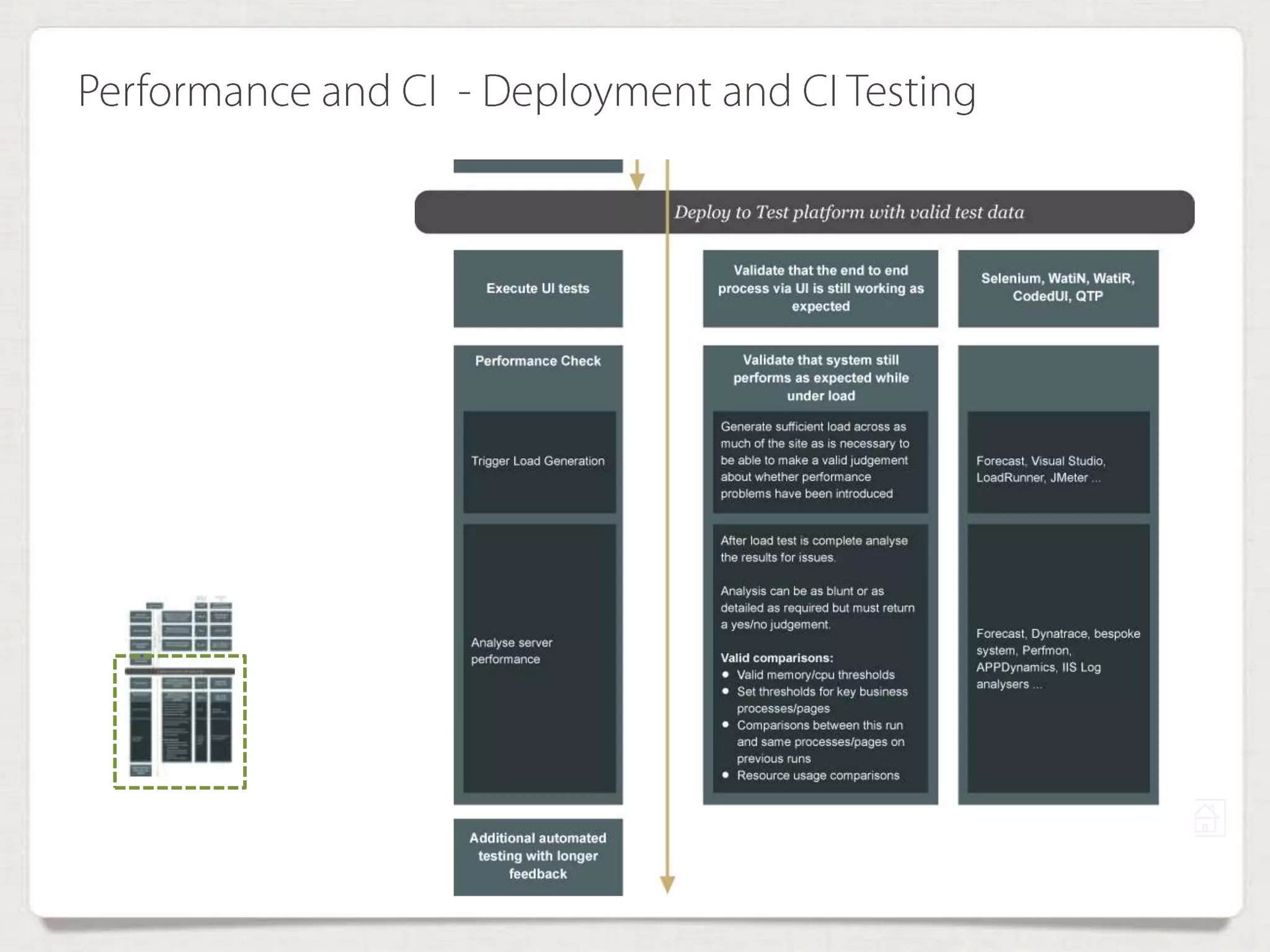

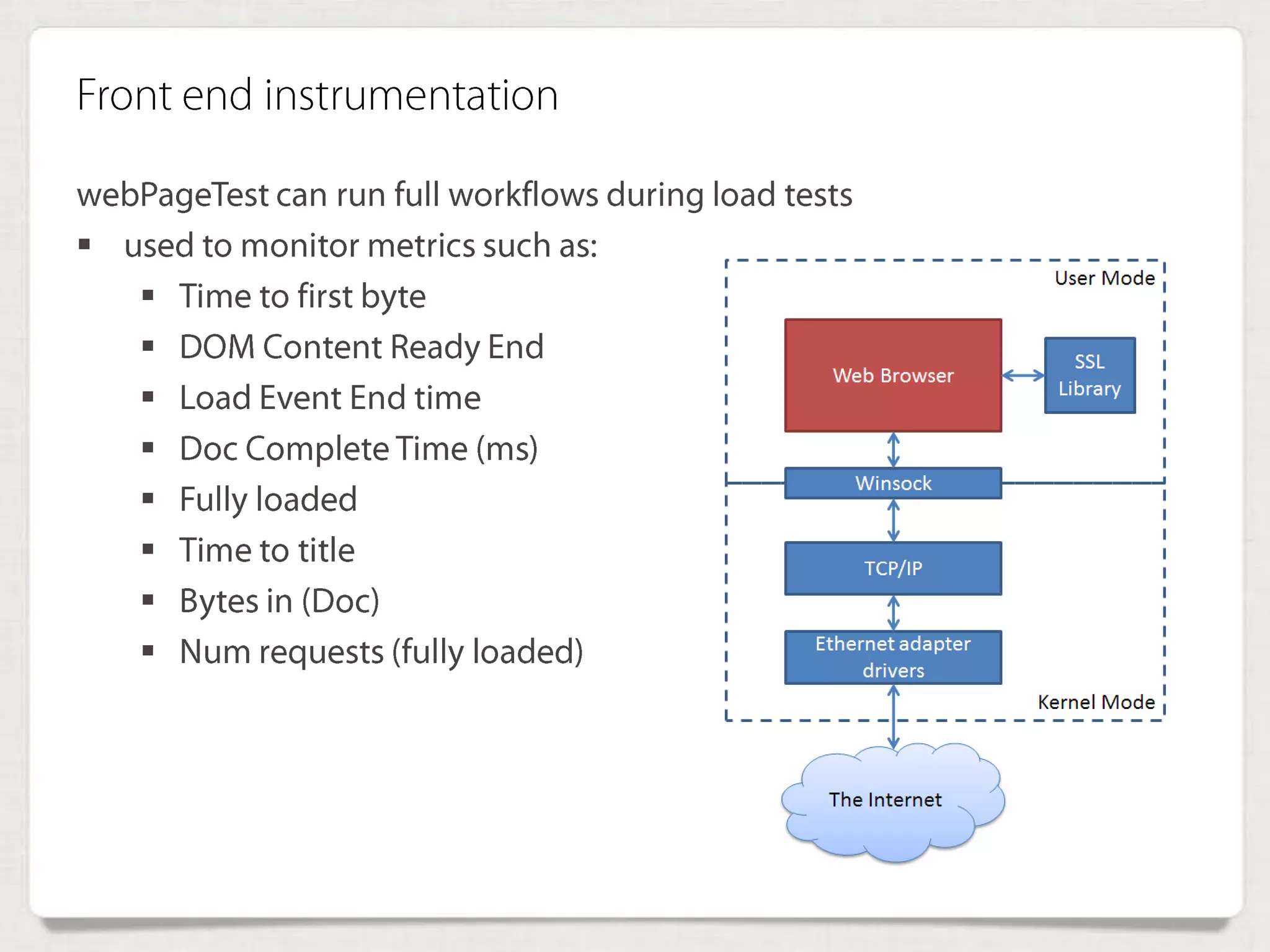

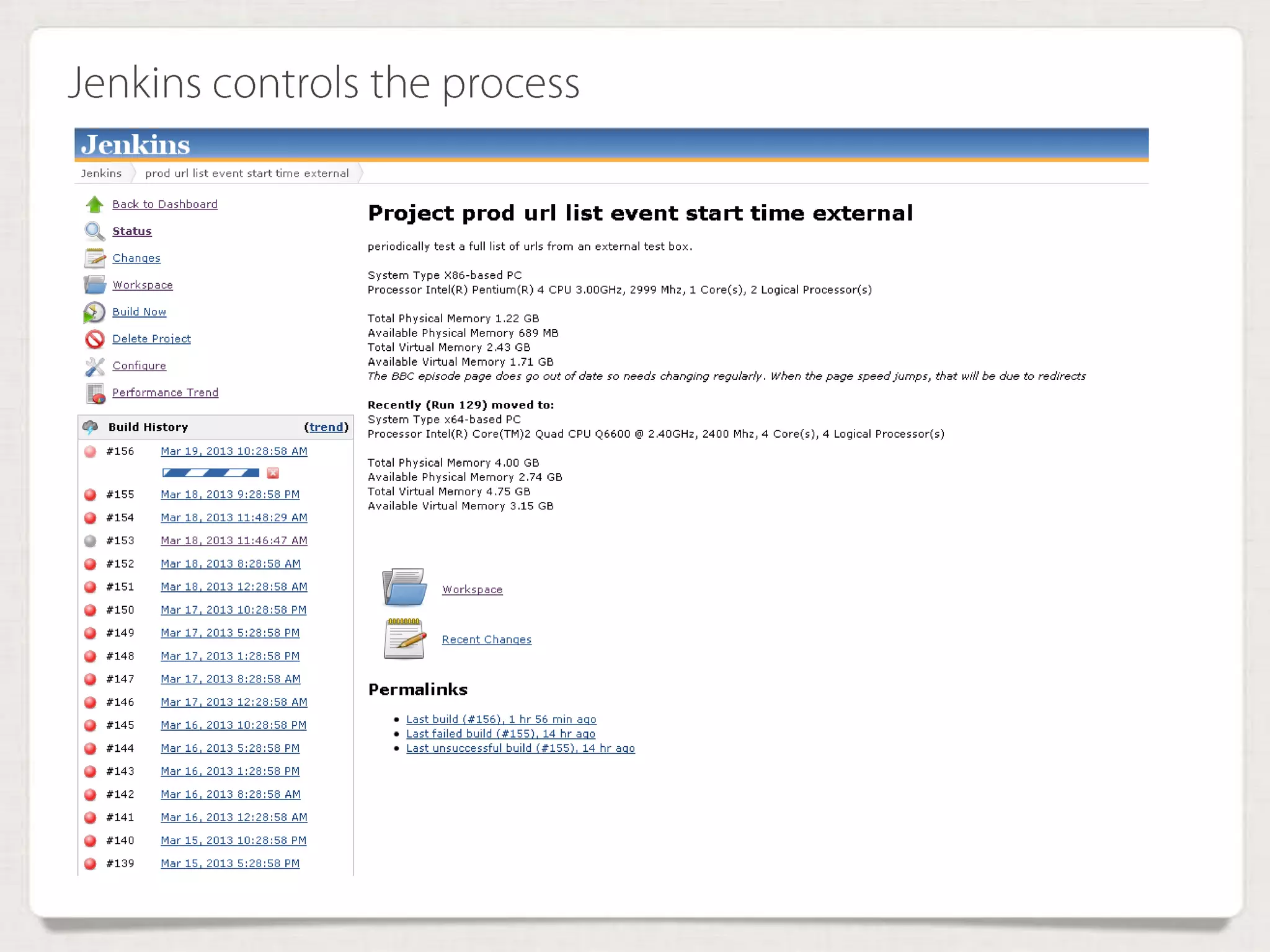

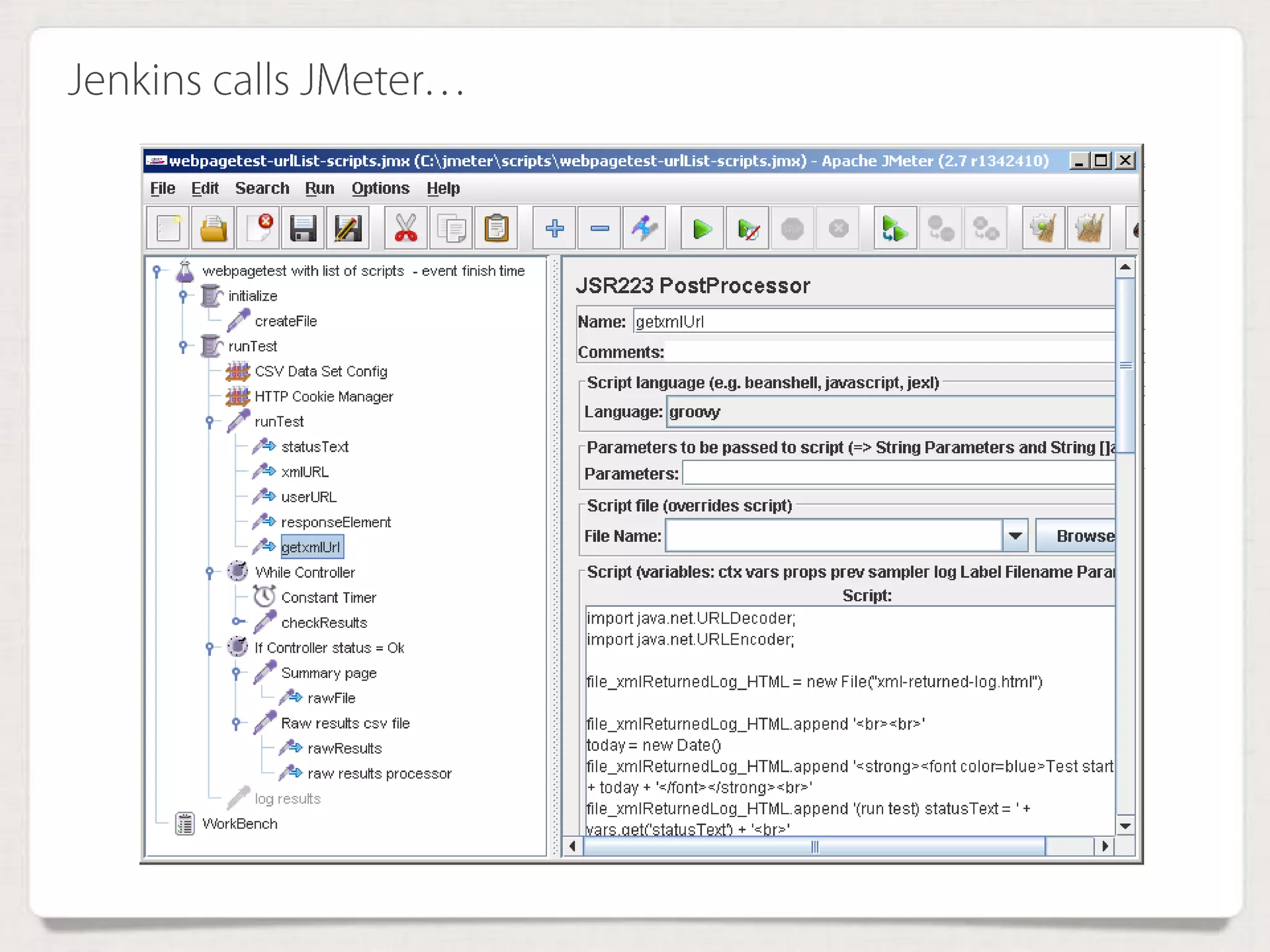

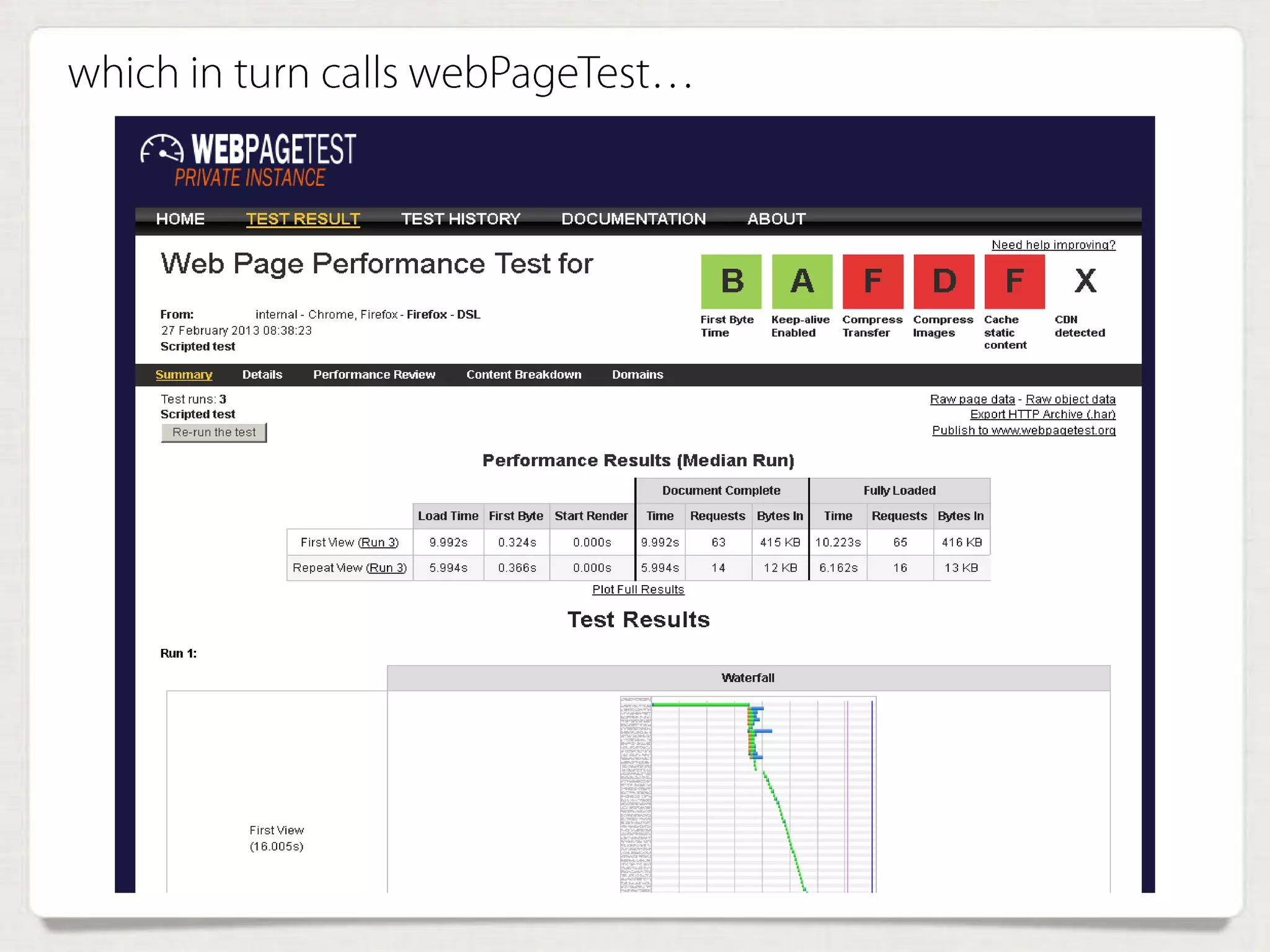

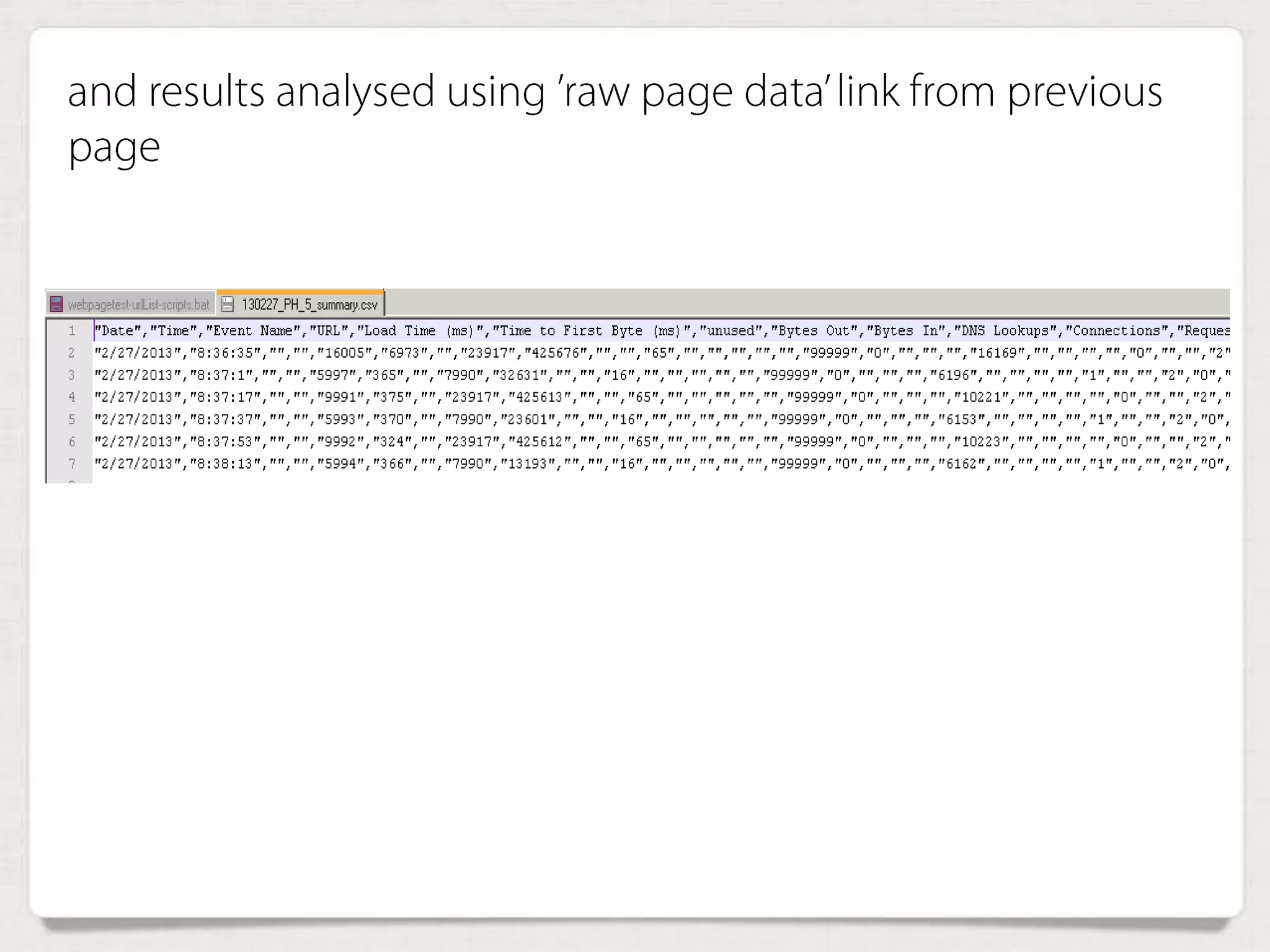

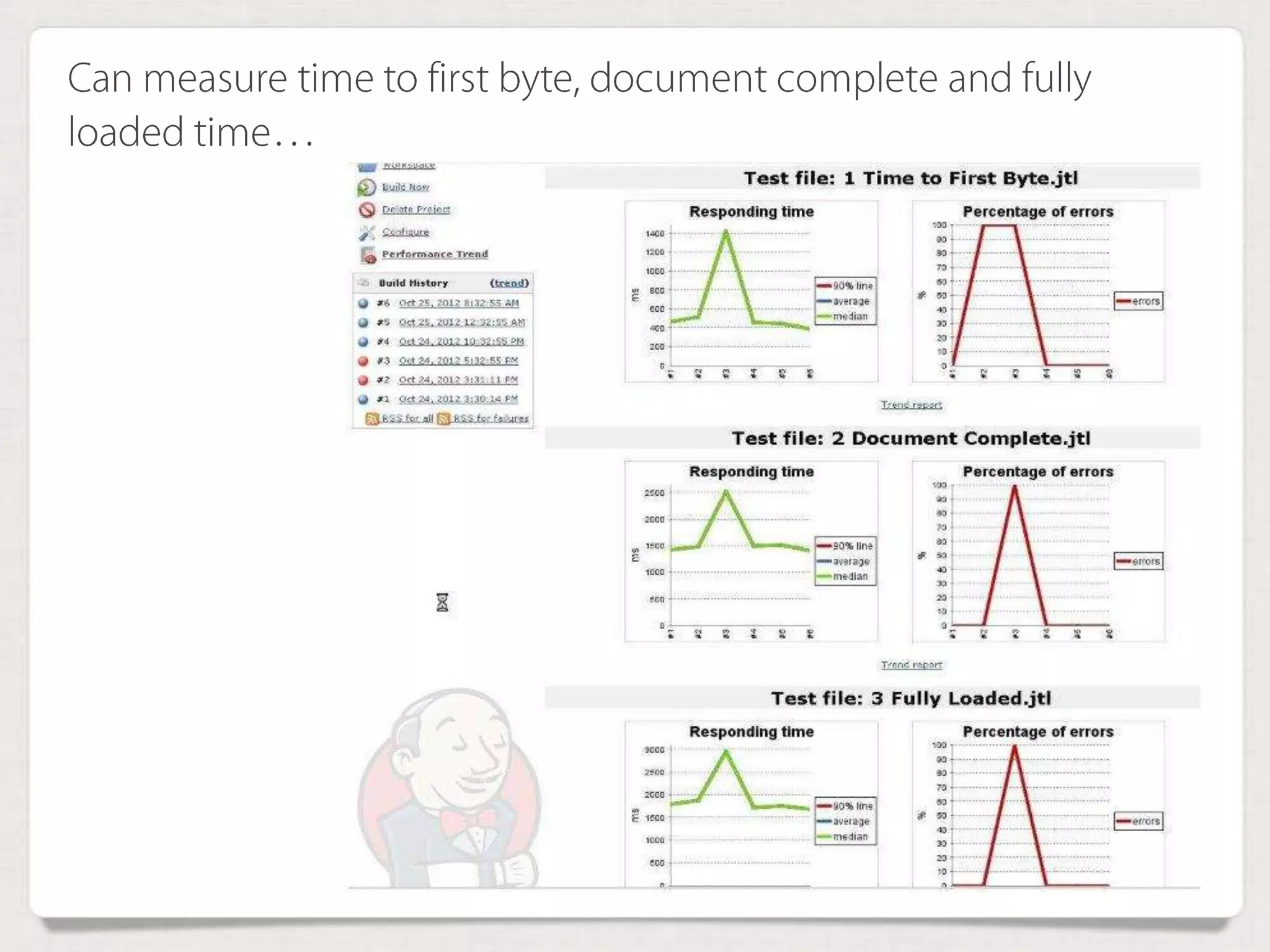

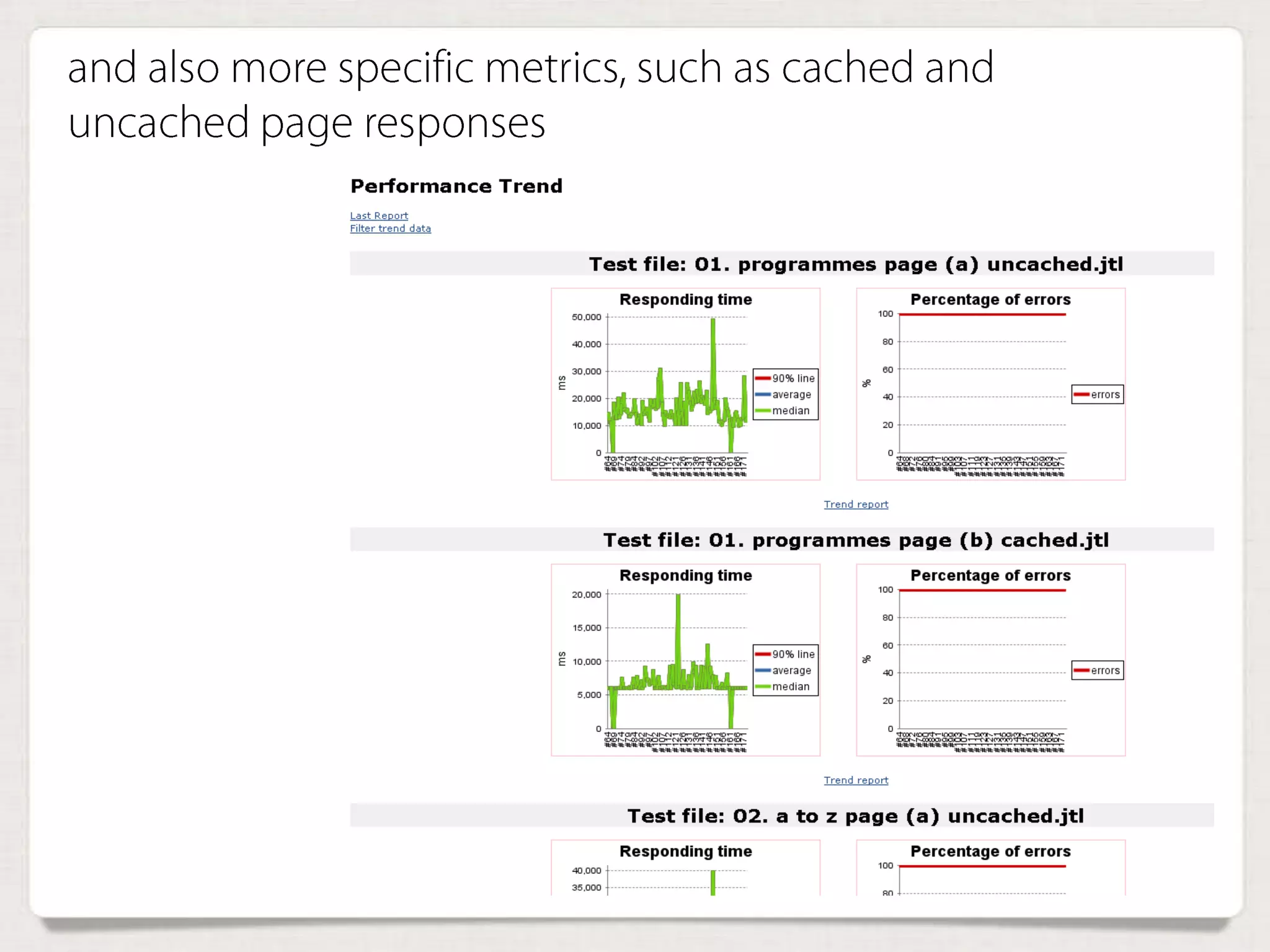

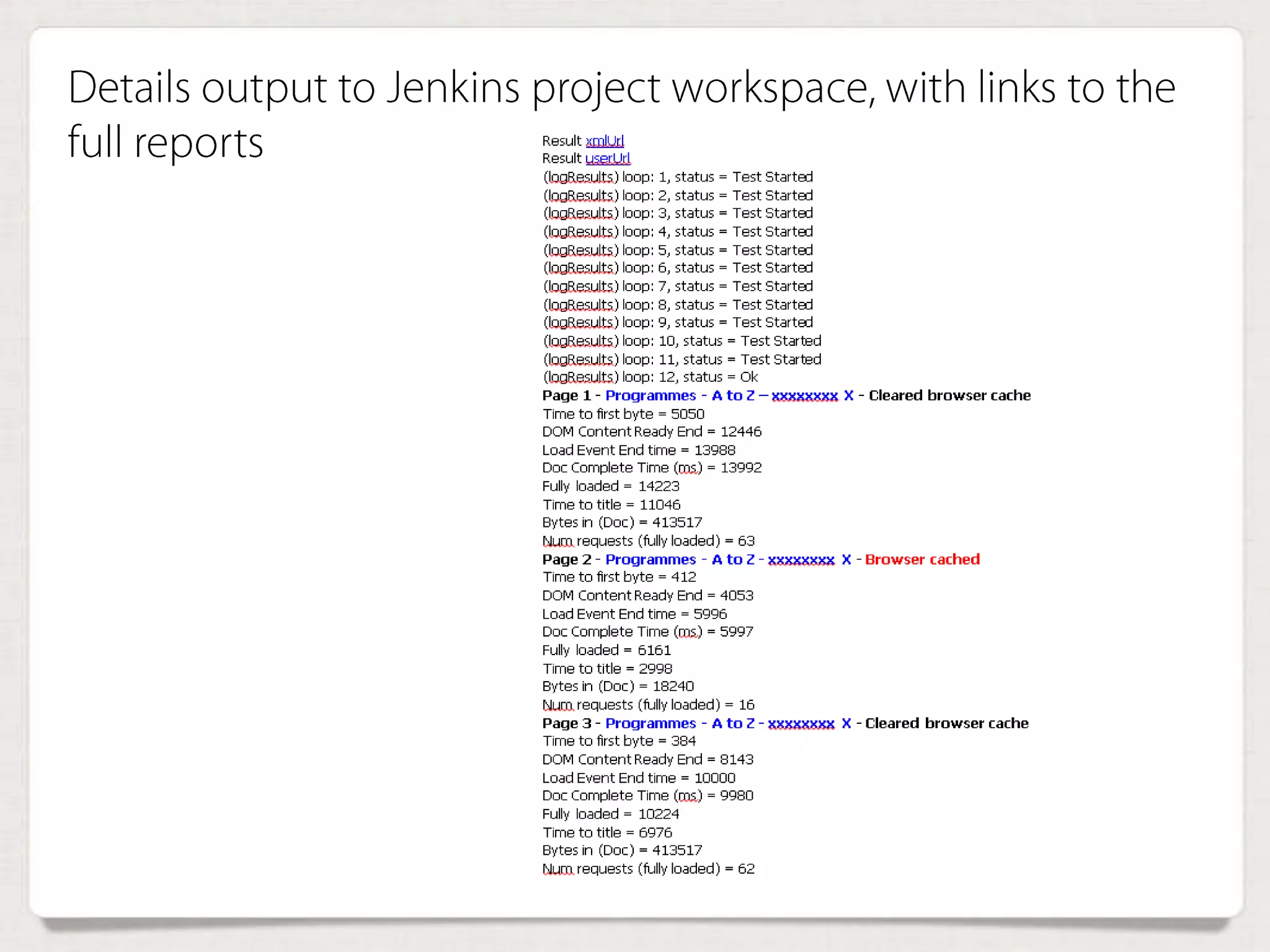

The document argues that performance evaluation belongs in the continuous integration (CI) process for software development, because performance issues are as critical as functional ones. It outlines the challenges of implementing performance controls, such as the lack of established frameworks and the need for suitable tooling, and highlights strategies used successfully at Channel 4. It illustrates how tools such as Jenkins, JMeter, and WebPageTest can automate performance testing and keep feedback loops short.
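A CI performance control ultimately needs a pass/fail decision that the build server can act on. The script below is a minimal, hypothetical sketch of such a gate, not a description of Channel 4's setup. It assumes JMeter wrote its results as CSV with a header row containing `label`, `elapsed` (milliseconds) and `success` columns. The budget values (`p95_budget_ms=800`, `max_error_rate=0.01`) and the script name `perf_gate.py` are illustrative assumptions. It exits non-zero when a budget is exceeded, which is how a Jenkins shell step signals a failed build.

```python
import csv
import math
import sys
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_budget(jtl_lines, p95_budget_ms, max_error_rate):
    """Return human-readable budget violations (an empty list means pass).

    jtl_lines: any iterable of CSV text lines with a header row that
    includes the (assumed) columns 'label', 'elapsed' and 'success'.
    """
    elapsed_by_label = defaultdict(list)
    total = 0
    failures = 0
    for row in csv.DictReader(jtl_lines):
        elapsed_by_label[row["label"]].append(int(row["elapsed"]))
        total += 1
        if row["success"].strip().lower() != "true":
            failures += 1

    violations = []
    # Per-request-label latency budget on the 95th percentile.
    for label, samples in sorted(elapsed_by_label.items()):
        p95 = percentile(samples, 95)
        if p95 > p95_budget_ms:
            violations.append(f"{label}: p95 {p95} ms exceeds {p95_budget_ms} ms")
    # Overall error-rate budget across all samples.
    if total and failures / total > max_error_rate:
        violations.append(f"error rate {failures / total:.1%} exceeds {max_error_rate:.1%}")
    return violations


def main(path, p95_budget_ms=800, max_error_rate=0.01):
    with open(path, newline="") as results:
        violations = check_budget(results, p95_budget_ms, max_error_rate)
    for violation in violations:
        print("PERF BUDGET FAILED:", violation)
    return 1 if violations else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1]))
```

In a Jenkins job this could run as a shell step straight after a non-GUI JMeter run, for example `jmeter -n -t plan.jmx -l results.jtl` followed by `python perf_gate.py results.jtl`. Checking per-label percentiles rather than a single average keeps one slow endpoint from hiding behind many fast ones.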