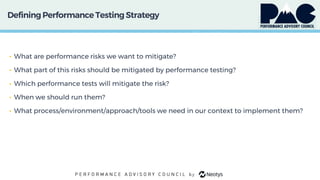

The document discusses context-driven performance testing, emphasizing the importance of adapting testing practices to the specific context of a project rather than relying on rigid best practices. It explores the challenges of performance testing in agile development, the need for early and continuous performance testing, and the integration of automation in various testing environments. Key strategies and considerations are outlined for effective performance testing, including resource allocation, risk mitigation, and tailored approaches to different components of a system.

![The Main Issue on the Agile Side

• It doesn’t [always] work this way in practice

• That is why you have “Hardening Iterations”, “Technical Debt” and similar notions

• Same old problem: functionality gets priority over performance](https://image.slidesharecdn.com/alexpodelko-neotyspac2019-190215132100/85/Alexander-Podelko-Context-Driven-Performance-Testing-10-320.jpg)

![Examples

• Handling full/extra load
  ◦ System level, production[-like env], realistic load

• Catching regressions
  ◦ Continuous testing, limited scale/env

• Early detection of performance problems
  ◦ Exploratory tests, targeted workload

• Performance optimization/investigation
  ◦ Dedicated env, targeted workload](https://image.slidesharecdn.com/alexpodelko-neotyspac2019-190215132100/85/Alexander-Podelko-Context-Driven-Performance-Testing-36-320.jpg)
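
The Examples slide pairs each testing goal with the kind of environment and workload that suits it. One way to make that pairing explicit is a small lookup table, sketched below. This is only an illustration: the names `GOAL_TO_SETUP` and `setup_for` are hypothetical and do not come from the talk; the values are copied from the slide.

```python
# Hypothetical lookup table: testing goal -> setup characteristics.
# The keys and values are taken from the slide; the structure is an assumption.
GOAL_TO_SETUP = {
    "handling full/extra load": [
        "system level",
        "production[-like] env",
        "realistic load",
    ],
    "catching regressions": ["continuous testing", "limited scale/env"],
    "early detection of performance problems": [
        "exploratory tests",
        "targeted workload",
    ],
    "performance optimization/investigation": [
        "dedicated env",
        "targeted workload",
    ],
}


def setup_for(goal: str) -> list[str]:
    """Return the setup characteristics for a goal (case-insensitive match)."""
    key = goal.strip().lower()
    if key not in GOAL_TO_SETUP:
        raise KeyError(f"no setup recorded for goal: {goal!r}")
    return GOAL_TO_SETUP[key]


if __name__ == "__main__":
    for goal, setup in GOAL_TO_SETUP.items():
        print(f"{goal}: {', '.join(setup)}")
```

A table like this might help a team state up front which goal a given test run serves, instead of expecting one test setup to cover all four.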