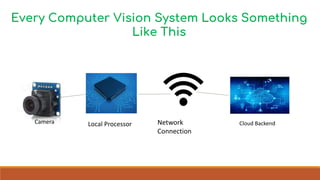

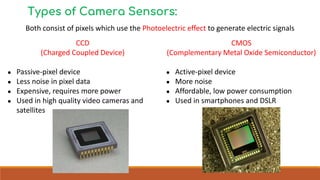

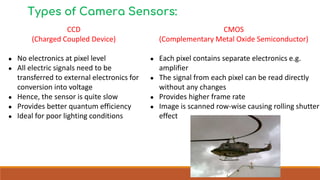

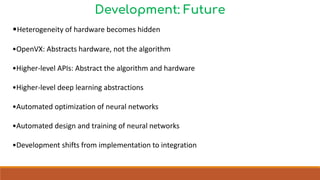

Computer vision systems analyze visual data captured by cameras. A typical system combines cameras, local processors, network connections, and a cloud backend. Applications span consumer products, automotive (including safety systems), medical and biological imaging, defense, retail, gaming, security, education, and transportation, with tasks such as object tracking and scanning hazardous areas.

Architecturally, these systems often assign different processors to low-level operations (per-pixel work such as filtering), medium-level operations (extracting features or regions from those pixels), and high-level operations (interpreting those features to reach a decision). The two common camera sensor types, CCD and CMOS, capture digital images with different readout technologies: a CCD shifts each pixel's charge across the chip to a shared output amplifier, while a CMOS sensor converts charge to voltage within each pixel. Future developments point toward more heterogeneous and distributed hardware, programmed through higher-level interfaces.
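The low-, medium-, and high-level split can be illustrated with a toy pipeline. The sketch below is a minimal illustration under stated assumptions, not a reference design: the stage functions (`low_level_threshold`, `mid_level_blobs`, `high_level_decide`, `run_pipeline`) are hypothetical names, a plain Python list of grayscale values stands in for a camera frame, and the choice of thresholding, connected-component labeling, and a simple counting rule as the three stages is a common textbook example rather than something this document prescribes. In a real system each stage might run on a different processor; here all three run on the CPU.

```python
from collections import deque


def low_level_threshold(image: list[list[int]], threshold: int) -> list[list[int]]:
    """Low-level stage: an independent per-pixel operation (binary threshold).

    Work like this is highly parallel, which is why it is often offloaded
    to specialized hardware; this sketch simply runs it in Python.
    """
    return [[1 if px >= threshold else 0 for px in row] for row in image]


def mid_level_blobs(mask: list[list[int]]) -> list[dict]:
    """Medium-level stage: group foreground pixels into 4-connected blobs.

    Returns one dict per blob with its pixel area and bounding box
    (min_x, min_y, max_x, max_y), in row-major discovery order.
    """
    height = len(mask)
    width = len(mask[0]) if mask else 0
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            seen[y][x] = True
            queue = deque([(y, x)])
            ys, xs = [], []
            while queue:
                cy, cx = queue.popleft()
                ys.append(cy)
                xs.append(cx)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append({"area": len(ys), "bbox": (min(xs), min(ys), max(xs), max(ys))})
    return blobs


def high_level_decide(blobs: list[dict], min_area: int) -> dict:
    """High-level stage: interpret symbolic features and make a decision.

    Hypothetical rule: blobs at least `min_area` pixels large count as
    objects, and any detected object raises an alert.
    """
    objects = [b for b in blobs if b["area"] >= min_area]
    return {"object_count": len(objects), "alert": len(objects) > 0}


def run_pipeline(image: list[list[int]], threshold: int, min_area: int) -> dict:
    """Chain the three stages: pixels -> regions -> decision."""
    return high_level_decide(mid_level_blobs(low_level_threshold(image, threshold)), min_area)


if __name__ == "__main__":
    frame = [
        [0, 0, 0, 0, 0, 0],
        [0, 9, 9, 0, 0, 0],
        [0, 9, 9, 0, 0, 7],
        [0, 0, 0, 0, 0, 0],
    ]
    print(run_pipeline(frame, threshold=5, min_area=2))
```

Running the module prints `{'object_count': 1, 'alert': True}`: the 2×2 bright region passes the area test, while the isolated bright pixel is treated as noise. Note how the data shrinks at each level, from a full pixel grid to a short list of regions to a single decision. That reduction is one reason the stages can sit on different hardware connected by narrower links.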